This post is a not-so-secret analogy for the AI Alignment problem. Via a fictional dialogue, Eliezer explores and counters common questions about the Rocket Alignment Problem as approached by the Mathematics of Intentional Rocketry Institute.

MIRI researchers will tell you they're worried that "right now, nobody can tell you how to point your rocket’s nose such that it goes to the moon, nor indeed any prespecified celestial destination."

It seems to me worth trying to slow down AI development to steer successfully around the shoals of extinction and out to utopia.

But I was thinking lately: even if I didn’t think there was any extinction risk, it might still be worth prioritizing a lot of care over moving at maximal speed. There are many different possible AI futures, and I think there’s a good chance that the initial direction affects the long-term path, and different long-term paths go to different places. The systems we build now will shape the next systems, and so forth. If the first human-level-ish AI is brain emulations, I expect a quite different sequence of events than if it is GPT-ish.

People genuinely pushing for AI speed over care (rather than just feeling impotent) apparently think there is negligible risk of bad outcomes, but also they are asking to take the first future to which there is a path. Yet possible futures are a large space, and arguably we are in a rare plateau where we could climb very different hills, and get to much better futures.

What is the mechanism, specifically, by which going slower will yield more "care"? What is the mechanism by which "care" will yield a better outcome? I see this model asserted pretty often, but no one ever spells out the details.

I've studied the history of technological development in some depth, and I haven't seen anything to convince me that there's a tradeoff between development speed on the one hand, and good outcomes on the other.

Wittgenstein argues that we shouldn't understand language by piecing together the dictionary meaning of each individual word in a sentence, but rather that language should be understood in context as a move in a language game.

Consider the phrase "You're the most beautiful girl in the world." Many rationalists might shy away from such a statement, deeming it statistically improbable. While this strict adherence to truth is commendable, I honestly feel it is misguided.

It's honestly kind of absurd to expect your words to be taken literally in these kinds of circumstances. The recipient of such a compliment will almost certainly understand it as hyperbole intended to express fondness and desire, rather than as a literal factual assertion. Further, by invoking a phrase that plays a certain role...

I suspect that many people who use such a phrase would endorse an interpretation such as "The most beautiful... to me."

Note: In @Nathan Young's words "It seems like great essays should go here and be fed through the standard LessWrong algorithm. There is possibly a copyright issue here, but we aren't making any money off it either."

What follows is a full copy of the C. S. Lewis essay "The Inner Ring", the 1944 Memorial Lecture at King’s College, University of London.

May I read you a few lines from Tolstoy’s War and Peace?

When Boris entered the room, Prince Andrey was listening to an old general, wearing his decorations, who was reporting something to Prince Andrey, with an expression of soldierly servility on his purple face. “Alright. Please wait!” he said to the general, speaking in Russian with the French accent which he used when he spoke with contempt. The...

I wish there were a clear unifying place for all commentary on this topic. I could create a wiki page I suppose.

xlr8harder writes:

In general I don’t think an uploaded mind is you, but rather a copy. But one thought experiment makes me question this. A Ship of Theseus concept where individual neurons are replaced one at a time with a nanotechnological functional equivalent.

Are you still you?

Presumably the question xlr8harder cares about here isn't the semantic question of how linguistic communities use the word "you", or predictions about how whole-brain emulation tech might change the way we use pronouns.

Rather, I assume xlr8harder cares about more substantive questions like:

- If I expect to be uploaded tomorrow, should I care about the upload in the same ways (and to the same degree) that I care about my future biological self?

- Should I anticipate experiencing what my upload experiences?

- If the scanning and uploading process requires

Update: a friend convinced me that I really should separate my intuitions about locating patterns that are exactly myself from my intuitions about the moral value of ensuring I don't contribute to a decrease in realityfluid of the mindlike experiences I morally value. In that case, the reason I selfishly value causal history is actually that it's an overwhelmingly predictive proxy for where my self-pattern gets instantiated. My moral values, an overwhelmingly larger portion of what I care about, care immensely about avoiding waste, because it appears to me to be by far the largest impact any agent can have on what the future is made of.

Also, I now think that eating is a form of incremental uploading.

Warning: This post might be depressing to read for everyone except trans women. Gender identity and suicide are discussed. This is all highly speculative. I know near-zero about biology, chemistry, or physiology. I do not recommend anyone take hormones to try to increase their intelligence; mood & identity are more important.

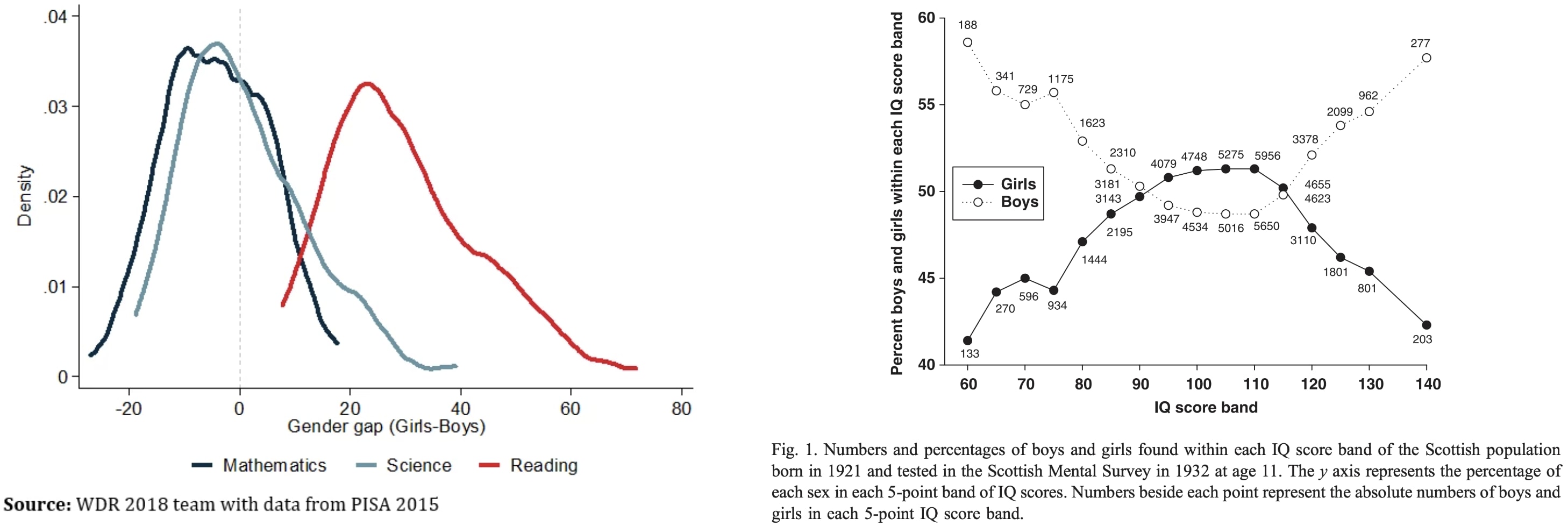

Why are trans women so intellectually successful? They seem to be overrepresented 5-100x in, e.g., cybersecurity Twitter, mathy AI alignment, non-scam crypto Twitter, math PhD programs, etc.

To explain this, let's first ask: Why aren't males way smarter than females on average? Males have ~13% higher cortical neuron density and 11% heavier brains (implying more area?). One might then expect males to have a far higher mean IQ than females, but instead the means and medians are similar:

My theory...

Why is nobody in San Francisco pretty? Hormones make you pretty but dumb (pretty faces don't usually pay rent in SF). Why is nobody in Los Angeles smart? Hormones make you pretty but dumb. (Sincere apologies to all residents of SF & LA.)

Some other possibilities:

- Pretty people self-select towards interests and occupations that reward beauty. If you're pretty, you're more likely to be popular in high school, which interferes with the dedication necessary to become a great programmer.

- A big reason people are prettier in LA is they put significant

Note: It seems like great essays should go here and be fed through the standard LessWrong algorithm. There is possibly a copyright issue here, but we aren't making any money off it either. What follows is a full copy of "This is Water" by David Foster Wallace, his 2005 commencement speech to the graduating class at Kenyon College.

Greetings parents and congratulations to Kenyon’s graduating class of 2005. There are these two young fish swimming along and they happen to meet an older fish swimming the other way, who nods at them and says “Morning, boys. How’s the water?” And the two young fish swim on for a bit, and then eventually one of them looks over at the other and goes “What the hell is water?”

This is...

Can I check that I've understood it?

Roughly, the essay urges one to be conscious of each passing thought, to see it and kind of head it off at the tracks - "feeling angry?" "don't!". But the comment argues this is against what CBT says about feeling our feelings.

What about Sam Harris' practice of meditation, which seems focused on seeing and noticing thoughts and turning attention back on itself? I had a period last night of sort of "intense consciousness" where I felt very focused on the fact I was conscious. It wasn't super pleasant, but it was profound. I can see why one would want to focus on that but also why it might be a bad idea.

The history of science has tons of examples of the same thing being discovered multiple times independently; Wikipedia has a whole list of examples here. If your goal in studying the history of science is to extract the predictable/overdetermined component of humanity's trajectory, then it makes sense to focus on such examples.

But if your goal is to achieve high counterfactual impact in your own research, then you should probably draw inspiration from the opposite: "singular" discoveries, i.e. discoveries which nobody else was anywhere close to figuring out. After all, if someone else would have figured it out shortly after anyways, then the discovery probably wasn't very counterfactually impactful.

Alas, nobody seems to have made a list of highly counterfactual scientific discoveries, to complement Wikipedia's list of multiple discoveries.

To...

Maybe it's the other way around, and it's the Chinese elite who was unusually and stubbornly conservative on this, trusting the wisdom of their ancestors over foreign devilry (would be a pretty Confucian thing to do). The Greeks realised the Earth was round from things like seeing sails appear over the horizon. Any sailing peoples thinking about this would have noticed sooner or later.

Kind of a long shot, but did Polynesian people have ideas on this, for example?

Poetry and practicality

I was staring up at the moon a few days ago and thought about how deeply I loved my family, and wished to one day start my own (I'm just over 18 now). It was a nice moment.

Then, I whipped out my laptop and felt constrained to get back to work, i.e. read papers for my AI governance course, write up LW posts, and trade emails with EA France. (I believe these to be my best shots at increasing everyone's odds of survival.)

It felt almost like sacrilege to wrench myself away from the moon and my wonder. Like I was ruining a moment of poetr...

TL;DR

Tacit knowledge is extremely valuable. Unfortunately, developing tacit knowledge is usually bottlenecked by apprentice-master relationships. Tacit Knowledge Videos could widen this bottleneck. This post is a Schelling point for aggregating these videos—aiming to be The Best Textbooks on Every Subject for Tacit Knowledge Videos. Scroll down to the list if that's what you're here for. Post videos that highlight tacit knowledge in the comments and I’ll add them to the post. Experts in the videos include Stephen Wolfram, Holden Karnofsky, Andy Matuschak, Jonathan Blow, Tyler Cowen, George Hotz, and others.

What are Tacit Knowledge Videos?

Samo Burja claims YouTube has opened the gates for a revolution in tacit knowledge transfer. Burja defines tacit knowledge as follows:

...Tacit knowledge is knowledge that can’t properly be transmitted via verbal or written instruction, like the ability to create

Networking and relationship building, both professional and personal (I'm sure there are overlaps). And echoing another request: sales.