This post is a not-so-secret analogy for the AI Alignment problem. Via a fictional dialog, Eliezer explores and counters common questions about the Rocket Alignment Problem as approached by the Mathematics of Intentional Rocketry Institute.

MIRI researchers will tell you they're worried that "right now, nobody can tell you how to point your rocket’s nose such that it goes to the moon, nor indeed any prespecified celestial destination."

Recent Discussion

This post is aimed at a somewhat more general audience than the modal LessWrong reader, but it gets at my actual thoughts on the topic.

In 2019 OpenAI defeated the world champions of Dota 2, a major esports game. This was hot on the heels of DeepMind’s AlphaGo performance against Lee Sedol in 2016, achieving superhuman Go performance way before anyone thought that might happen. AI benchmarks were being cleared at a pace which felt breathtaking at the time, papers were proudly published, and ML tools like TensorFlow (released in 2015) were coming online. To people already interested in AI, it was an exciting era. To everyone else, the world was unchanged.

Now Saturday Night Live sketches use sober discussions of AI risk as the backdrop for their actual jokes, there are hundreds...

I don't believe that data is limiting, because the finite-data argument only applies to pretraining. Models can do self-critique or be objectively rated on their ability to perform tasks, and then be trained via RL. This is how humans learn, so it is possible to be very sample-efficient, and currently only a small proportion of training compute is RL.

If the majority of training compute and data are outcome-based RL, it is not clear that the "Playing human roles is pretty human" section holds, because the system is not primarily trained to play human roles.

Authors: Senthooran Rajamanoharan*, Arthur Conmy*, Lewis Smith, Tom Lieberum, Vikrant Varma, János Kramár, Rohin Shah, Neel Nanda

A new paper from the Google DeepMind mech interp team: Improving Dictionary Learning with Gated Sparse Autoencoders!

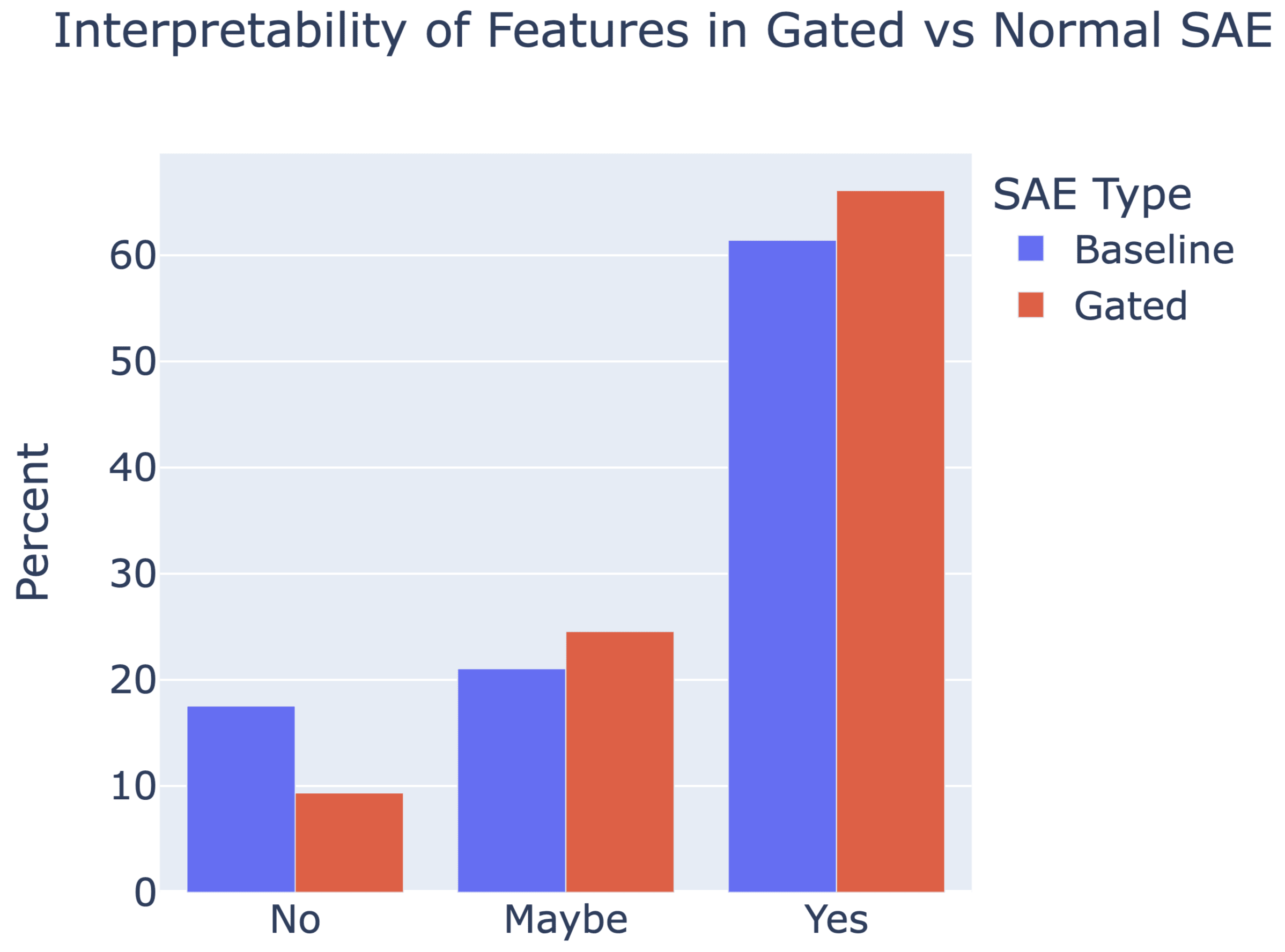

Gated SAEs are a new Sparse Autoencoder architecture that seems to be a significant Pareto-improvement over normal SAEs, verified on models up to Gemma 7B. They are now our team's preferred way to train sparse autoencoders, and we'd love to see them adopted by the community! (Or to be convinced that it would be a bad idea for them to be adopted by the community!)

They achieve similar reconstruction with about half as many firing features, while being comparably or more interpretable (confidence interval for the increase is 0%-13%).

See Sen's Twitter summary, my Twitter summary, and the paper!

Yep, the intuition here was indeed that L1-penalised reconstruction seems to be okay for teaching a standard SAE's encoder to detect which features are on (even if features get shrunk as a result), so that is effectively what this auxiliary loss is teaching the gate sublayer to do, alongside the sparsity penalty. (The key difference is that we freeze the decoder in the auxiliary task, which the ablation study shows helps performance.) Maybe to put it another way, this was an auxiliary task that we had good evidence would teach the gate sublayer to detect ac...
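As a rough illustration of the loss structure described in this comment, here is a minimal pure-Python sketch of a gated SAE forward pass and its three loss terms for a single input vector. It is not the paper's code. The parameter names (`W_gate`, `b_gate`, `W_mag`, `b_mag`, `W_dec`, `b_dec`), the helper functions, the use of squared error, and the choice to apply the L1 penalty to the ReLU of the gate pre-activations are all assumptions made for illustration, and any parameter sharing between the gate and magnitude paths is left out. There is no autodiff here, so the frozen decoder is only marked by a comment.

```python
def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]


def relu(v):
    return [max(0.0, a) for a in v]


def sq_err(a, b):
    """Sum of squared differences between two vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def gated_sae_losses(x, params, l1_coeff):
    """Return (features, reconstruction, loss terms) for one input x.

    All parameter names are hypothetical (assumptions for this sketch).
    W_gate and W_mag have one row per feature; W_dec has one row per
    input dimension.
    """
    def decode(f):
        return [h + b for h, b in zip(matvec(params["W_dec"], f), params["b_dec"])]

    x_c = [a - b for a, b in zip(x, params["b_dec"])]  # centre the input
    pi_gate = [p + b for p, b in zip(matvec(params["W_gate"], x_c), params["b_gate"])]
    pi_mag = [p + b for p, b in zip(matvec(params["W_mag"], x_c), params["b_mag"])]

    # Gate path decides which features are on; magnitude path decides how much.
    gate = [1.0 if p > 0 else 0.0 for p in pi_gate]
    features = [g * m for g, m in zip(gate, relu(pi_mag))]
    x_hat = decode(features)

    # The binary gate has no useful gradient, so the gate pre-activations get
    # their own training signal: an L1 penalty plus an auxiliary reconstruction.
    gate_act = relu(pi_gate)
    # In an autodiff framework the decoder weights would be treated as
    # constants (frozen) inside this auxiliary term.
    x_aux = decode(gate_act)

    losses = {
        "reconstruction": sq_err(x, x_hat),
        "sparsity": l1_coeff * sum(gate_act),
        "auxiliary": sq_err(x, x_aux),
    }
    return features, x_hat, losses


if __name__ == "__main__":
    # Toy parameters: 2 input dimensions, 3 features.
    demo_params = {
        "W_gate": [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]],
        "b_gate": [0.0, 0.0, 0.0],
        "W_mag": [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]],
        "b_mag": [0.0, 0.0, 0.0],
        "W_dec": [[1.0, 0.0, -1.0], [0.0, 1.0, -1.0]],
        "b_dec": [0.0, 0.0],
    }
    print(gated_sae_losses([1.0, 2.0], demo_params, l1_coeff=0.1))
```

In a real training setup the three terms would be summed and differentiated, with gradients from the auxiliary term blocked from reaching the decoder. This sketch only computes the values.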

This comes from a podcast called 18Forty, whose main demographic is Orthodox Jews. Eliezer's sister (Hannah) came on and talked about her Sheva Brachos, which is essentially the marriage ceremony in Orthodox Judaism. People here have likely not seen it, and I thought it was quite funny, so here it is:

https://18forty.org/podcast/channah-cohen-the-crisis-of-experience/

David Bashevkin:

So I want to shift now and I want to talk about something that full disclosure, we recorded this once before and you had major hesitation for obvious reasons. It’s very sensitive what we’re going to talk about right now, but really for something much broader, not just because it’s a sensitive personal subject, but I think your hesitation has to do with what does this have to do with the subject at hand?...

From the title I expected this to be embarrassing for Eliezer, but that was actually extremely sweet, and good advice!

Are people in rich countries happier on average than people in poor countries? (According to GPT-4, the academic consensus is that they are, but I'm not sure it's representing it correctly.) If so, why do suicide rates increase (or is that a false positive)? Does the mean of the distribution go up while the tails don't or something?

The next monthly discussion meetup is Saturday, May 4 @ 2 PM (see below for location etc.). For this meetup, we’ll be discussing the relationship between social media and mental health, with a focus on arguments by psychologist Jonathan Haidt (particularly from his new book) and some criticisms of his interpretation of the evidence. There are readings, a podcast, and a video below, BUT feel free to come whether or not you’ve reviewed any of it, as always. (NOTE: The June topic/discussion meetup will be moved to Saturday, June 8 @ 2 PM)

READINGS ETC.

- Background and info from Jonathan Haidt

- The evidence, from the book website: https://www.anxiousgeneration.com/research/the-evidence

- A talk on the material from the book: https://www.youtube.com/watch?v=yVq4ARIlNVg

- A guide to facilitate exploration of ideas and experiences touched on in the book: https://www.anxiousgeneration.com/pdfs/thought-starters-for-gen-z.pdf

A friend has spent the last three years hounding me about seed oils. Every time I thought I was safe, he’d wait a couple months and renew his attack:

“When are you going to write about seed oils?”

“Did you know that seed oils are why there’s so much {obesity, heart disease, diabetes, inflammation, cancer, dementia}?”

“Why did you write about {meth, the death penalty, consciousness, nukes, ethylene, abortion, AI, aliens, colonoscopies, Tunnel Man, Bourdieu, Assange} when you could have written about seed oils?”

“Isn’t it time to quit your silly navel-gazing and use your weird obsessive personality to make a dent in the world—by writing about seed oils?”

He’d often send screenshots of people reminding each other that Corn Oil is Murder and that it’s critical that we overturn our lives...

i'm sorry not to be engaging with the content of the post here; hopefully others have that covered. but i just wanna say, man this is so well written! at the sentence and paragraph level especially, i find it inspiring. it makes me wanna write more like i'm drunk and dgaf, though i doubt that exact thing would actually suffice to allow me to hit a similar stylistic target.

(the rest of this comment is gonna be largely for me and my own development, but maybe you'll like reading it anyway.)

i think you do a bunch of stuff that current me is too chicken to try...

Crosspost from my blog.

If you spend a lot of time in the blogosphere, you’ll find a great many people expressing contrarian views. If you hang out in the circles that I do, you’ll probably have heard Yudkowsky say that dieting doesn’t really work, Guzey say that sleep is overrated, Hanson argue that medicine doesn’t improve health, various people argue for the lab leak, others argue for hereditarianism, Caplan argue that mental illness is mostly just aberrant preferences and education doesn’t work, and various other people expressing contrarian views. Often, very smart people, like Robin Hanson, will write long posts defending these views, other people will have criticisms, and it will all be such a tangled mess that you don’t really know what to think about them.

For...

It may be useful to write about how a consumer can distinguish contrarian takes from original insights. Until that's a common skill, there will remain a market for contrarians.

People have been posting great essays so that they're "fed through the standard LessWrong algorithm." This essay is in the public domain in the UK but not the US.

From a very early age, perhaps the age of five or six, I knew that when I grew up I should be a writer. Between the ages of about seventeen and twenty-four I tried to abandon this idea, but I did so with the consciousness that I was outraging my true nature and that sooner or later I should have to settle down and write books.

I was the middle child of three, but there was a gap of five years on either side, and I barely saw my father before I was eight. For this and other reasons I...

The theories are probably just rationalizations anyway.

I now realize that my thinking may have been particularly brutal, and I may have skipped inferential steps.

To clarify, if someone didn't know, or was reluctant to repeat a password, I would end contact or request an in-person meeting.

But to further clarify, that does not make your points invalid. I think it makes them stronger. If something is weird and risky, good luck convincing people to do it.