This post is a not-so-secret analogy for the AI alignment problem. Via a fictional dialog, Eliezer explores and counters common questions about the Rocket Alignment Problem as approached by the Mathematics of Intentional Rocketry Institute.

MIRI researchers will tell you they're worried that "right now, nobody can tell you how to point your rocket’s nose such that it goes to the moon, nor indeed any prespecified celestial destination."

Recent Discussion

A friend has spent the last three years hounding me about seed oils. Every time I thought I was safe, he’d wait a couple months and renew his attack:

“When are you going to write about seed oils?”

“Did you know that seed oils are why there’s so much {obesity, heart disease, diabetes, inflammation, cancer, dementia}?”

“Why did you write about {meth, the death penalty, consciousness, nukes, ethylene, abortion, AI, aliens, colonoscopies, Tunnel Man, Bourdieu, Assange} when you could have written about seed oils?”

“Isn’t it time to quit your silly navel-gazing and use your weird obsessive personality to make a dent in the world—by writing about seed oils?”

He’d often send screenshots of people reminding each other that Corn Oil is Murder and that it’s critical that we overturn our lives...

There are some simple processes that make it easier/possible to digest whole foods that would otherwise be difficult/impossible to healthily digest, but I don't really think there's meaningful confusion as to whether that's being referred to by the term processed foods.

Could you offer some examples of healthy or better-for-us foods that are processed, such that there would be meaningful confusion surrounding the idea of it being healthy to avoid processed foods, according to how that term is typically used?

I can think of some, but definitely not anything of enough consequence to help me to understand why people here seem so critical of the concept of reducing processed foods as a health guideline.

Warning: This post might be depressing to read for everyone except trans women. Gender identity and suicide is discussed. This is all highly speculative. I know near-zero about biology, chemistry, or physiology. I do not recommend anyone take hormones to try to increase their intelligence; mood & identity are more important.

Why are trans women so intellectually successful? They seem to be overrepresented 5-100x in, e.g., cybersecurity twitter, mathy AI alignment, non-scam crypto twitter, math PhD programs, etc.

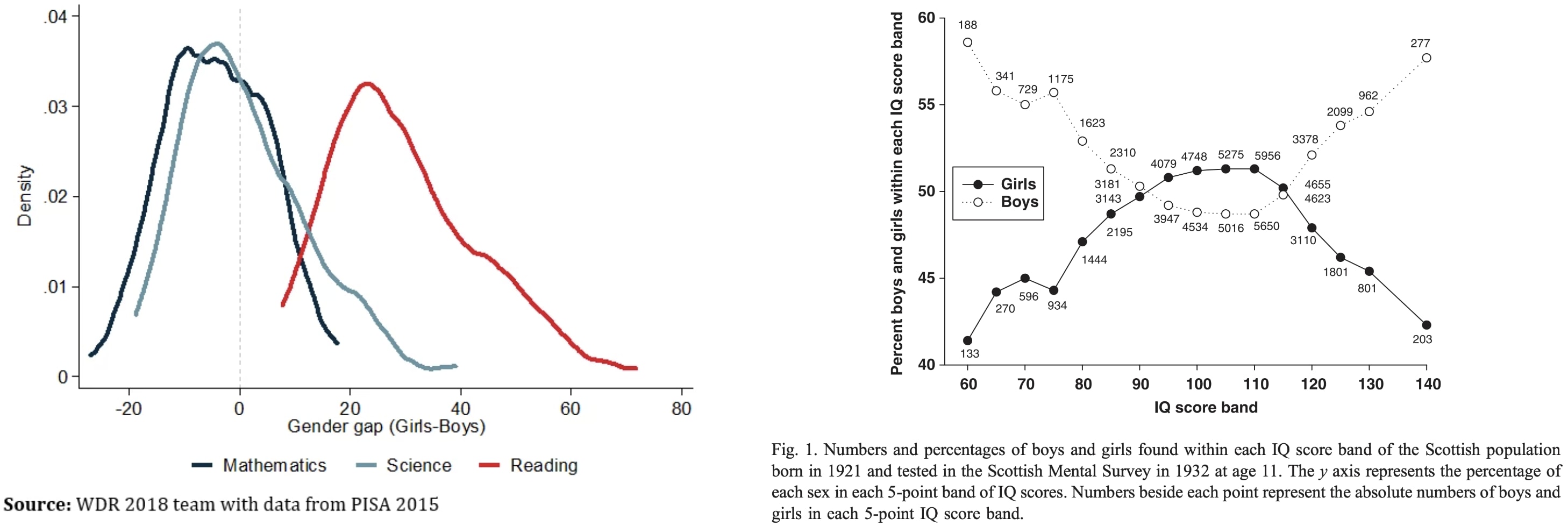

To explain this, let's first ask: Why aren't males way smarter than females on average? Males have ~13% higher cortical neuron density and 11% heavier brains (implying more area?). One might expect males to have mean IQ far above females then, but instead the means and medians are similar:

My theory...

The trans IQ connection is entirely explained by women’s clothing being less itchy.

Concerns over AI safety and calls for government control over the technology are highly correlated but they should not be.

There are two major forms of AI risk: misuse and misalignment. Misuse risks come from humans using AIs as tools in dangerous ways. Misalignment risks arise if AIs take their own actions at the expense of human interests.

Governments are poor stewards for both types of risk. Misuse regulation is like the regulation of any other technology. There are reasonable rules that the government might set, but omission bias and incentives to protect small but well-organized groups at the expense of everyone else will lead to lots of costly ones too. Misalignment regulation is not in the Overton window for any government. Governments do not have strong incentives...

Until recently, people with P(doom) of, say, 10%, have been natural allies of people with P(doom) of >80%. But the regulation that the latter group thinks is sufficient to avoid x-risk with high confidence has, on my worldview, a significant chance of either causing x-risk from totalitarianism, or else causing x-risk via governments being worse at alignment than companies would have been.

I agree. Moreover, a p(doom) of 10% vs. 80% means a lot for people like me who think the current generation of humans have substantial moral value (i.e., people who aren...

Intuitively this feels super weird and unjustified, but it does make the "prediction" that we'd find ourselves in a place with high marginal utility of money, as we currently do.

This is particularly weird because your indexical probability then depends on what kind of bet you're offered. In other words, our marginal utility of money differs from our marginal utility of other things, and which one do you use to set your indexical probability? So this seems like a non-starter to me...

By "acausal games" do you mean a generalization of acausal trade?

Yes, didn't want to just say "acausal trade" in case threats/war is also a big thing.

Basically yes; I'd expect animal rights to increase somewhat if we developed perfect translators, but not fully jump.

And for the last part, yes, I'm thinking of current systems. LLMs specifically have a 'drive' to generate reasonable-sounding text; and they aren't necessarily coherent individuals or groups of individuals that will give consistent answers as to their interests even if they also happened to be sentient, intelligent, suffering, flourishing, and so forth. We can't "just ask" an LLM about its interests and expect the answer to soundly reflect i...

Epistemic status: party trick

Why remove the prior

One famed feature of Bayesian inference is that it involves prior probability distributions. Given an exhaustive collection of mutually exclusive ways the world could be (hereafter called ‘hypotheses’), one starts with a sense of how likely the world is to be described by each hypothesis, in the absence of any contingent relevant evidence. One then combines this prior with a likelihood distribution, which for each hypothesis gives the probability that one would see any particular set of evidence, to get a posterior distribution of how likely each hypothesis is to be true given observed evidence. The prior and the likelihood seem pretty different: the prior is looking at the probability of the hypotheses in question, whereas the likelihood is looking at...
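As a concrete reference point for the standard update described above (prior combined with likelihood to give a posterior), here is a minimal sketch in Python. The coin hypotheses and their numbers are made up for illustration and do not come from the post; the function only shows ordinary Bayes' rule over a finite hypothesis set, not the post's prior-free variant.

```python
from fractions import Fraction


def posterior(prior, likelihood):
    """Apply Bayes' rule over a finite set of mutually exclusive hypotheses.

    prior:      dict mapping hypothesis -> P(hypothesis)
    likelihood: dict mapping hypothesis -> P(observed evidence | hypothesis)
    """
    # Multiply prior by likelihood for each hypothesis.
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    # The normalizing constant is the total probability of the evidence.
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("evidence has zero probability under every hypothesis")
    return {h: p / total for h, p in unnormalized.items()}


# Hypothetical example: a coin is either fair or biased towards heads,
# and we observe a single heads.
prior = {"fair": Fraction(1, 2), "biased": Fraction(1, 2)}
likelihood = {"fair": Fraction(1, 2), "biased": Fraction(3, 4)}  # P(heads | h)

post = posterior(prior, likelihood)
for hypothesis, probability in post.items():
    print(hypothesis, probability)
```

Exact fractions are used so the normalization step is easy to check by hand: the posterior is each hypothesis's prior times its likelihood, divided by the sum of those products.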

Note: It seems like great essays should go here and be fed through the standard LessWrong algorithm. There is possibly a copyright issue here, but we aren't making any money off it either. What follows is a full copy of "This is Water" by David Foster Wallace, his 2005 commencement speech to the graduating class at Kenyon College.

Greetings parents and congratulations to Kenyon’s graduating class of 2005. There are these two young fish swimming along and they happen to meet an older fish swimming the other way, who nods at them and says “Morning, boys. How’s the water?” And the two young fish swim on for a bit, and then eventually one of them looks over at the other and goes “What the hell is water?”

This is...

Mod note: I clarified the opening note a bit more, to make the start and nature of the essay more clear.

This post brings together various questions about the college application process, as well as practical considerations of where to apply and go. We are seeing some encouraging developments, but mostly the situation remains rather terrible for all concerned.

Application Strategy and Difficulty

Paul Graham: Colleges that weren’t hard to get into when I was in HS are hard to get into now. The population has increased by 43%, but competition for elite colleges seems to have increased more. I think the reason is that there are more smart kids. If so that’s fortunate for America.

Are college applications getting more competitive over time?

Yes and no.

- The population size is up, but the cohort size is roughly the same.

- The standard ‘effort level’ of putting in work and sacrificing one’s childhood and gaming

After the events of April 2024, I cannot say that for Columbia or Yale. No just no.

What are these events?

The main part of the issue was actually that I was not aware I had internal conflicts. I just mysteriously felt less emotion and motivation.

Yes, I believe that one can learn to entirely stop even considering certain potential actions as actions available to oneself. I don't really have a systematic solution for this right now aside from some form of Noticing practice (I believe a more refined version of this practice is called Naturalism, but I don't have much experience with this form of practice).