This post is a not-so-secret analogy for the AI Alignment problem. Via a fictional dialog, Eliezer explores and counters common questions about the Rocket Alignment Problem as approached by the Mathematics of Intentional Rocketry Institute.

MIRI researchers will tell you they're worried that "right now, nobody can tell you how to point your rocket’s nose such that it goes to the moon, nor indeed any prespecified celestial destination."

Recent Discussion

A friend has spent the last three years hounding me about seed oils. Every time I thought I was safe, he’d wait a couple months and renew his attack:

“When are you going to write about seed oils?”

“Did you know that seed oils are why there’s so much {obesity, heart disease, diabetes, inflammation, cancer, dementia}?”

“Why did you write about {meth, the death penalty, consciousness, nukes, ethylene, abortion, AI, aliens, colonoscopies, Tunnel Man, Bourdieu, Assange} when you could have written about seed oils?”

“Isn’t it time to quit your silly navel-gazing and use your weird obsessive personality to make a dent in the world—by writing about seed oils?”

He’d often send screenshots of people reminding each other that Corn Oil is Murder and that it’s critical that we overturn our lives...

Or maybe speaking French automatically makes you healthier. I'm gonna choose to believe it's that one.

Note: It seems like great essays should go here and be fed through the standard LessWrong algorithm. There is possibly a copyright issue here, but we aren't making any money off it either. What follows is a full copy of "This is Water" by David Foster Wallace, his 2005 commencement speech to the graduating class at Kenyon College.

Greetings parents and congratulations to Kenyon’s graduating class of 2005. There are these two young fish swimming along and they happen to meet an older fish swimming the other way, who nods at them and says “Morning, boys. How’s the water?” And the two young fish swim on for a bit, and then eventually one of them looks over at the other and goes “What the hell is water?”

This is...

If you're into podcasts, the Very Bad Wizards guys did an ep on this essay, which I enjoyed: https://verybadwizards.com/episode/episode-227-a-terrible-master-david-foster-wallaces-this-is-water

Warning: This post might be depressing to read for everyone except trans women. Gender identity and suicide are discussed. This is all highly speculative. I know near-zero about biology, chemistry, or physiology. I do not recommend anyone take hormones to try to increase their intelligence; mood & identity are more important.

Why are trans women so intellectually successful? They seem to be overrepresented 5-100x in eg cybersecurity twitter, mathy AI alignment, non-scam crypto twitter, math PhD programs, etc.

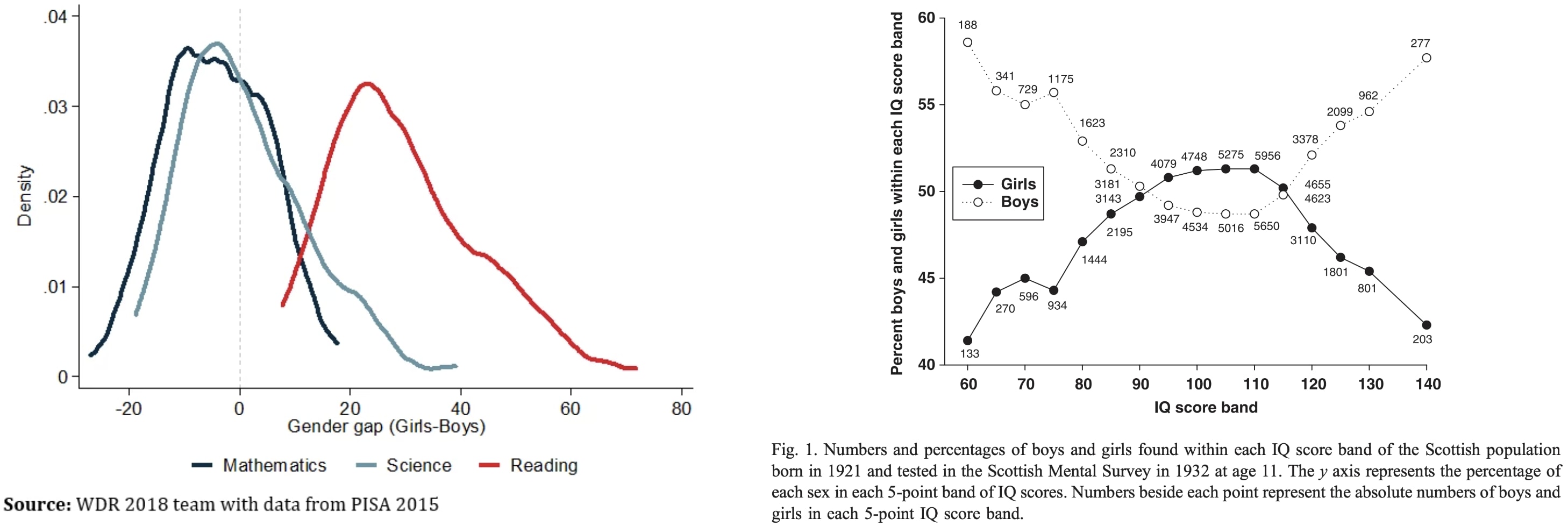

To explain this, let's first ask: Why aren't males way smarter than females on average? Males have ~13% higher cortical neuron density and 11% heavier brains (implying more area?). One might expect males to have mean IQ far above females then, but instead the means and medians are similar:

My theory...

seems in tension with your smarter friends transitioning after high school.

They seemed low-T during high school though!

Yeah could be a third factor though. Maybe you are right.

The Löwenheim–Skolem theorem implies, among other things, that any first-order theory whose symbols are countable, and which has an infinite model, has a countably infinite model. This means that, in attempting to refer to uncountably infinite structures (such as in set theory), one "may as well" be referring to an only countably infinite structure, as far as proofs are concerned.
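Stated symbolically, the claim above reads roughly as follows. This is only a sketch in notation the post does not itself use: $T$ is the first-order theory, $\mathcal{L}$ its set of symbols, and $\mathcal{M}$, $\mathcal{N}$ are models of $T$; all of these names are assumptions introduced here for illustration.

```latex
% Downward Löwenheim–Skolem, countable-signature case
% (notation assumed here, not taken from the post)
|\mathcal{L}| \le \aleph_0
\;\text{ and }\;
\exists\, \mathcal{M} \models T \text{ with } |\mathcal{M}| \ge \aleph_0
\quad\Longrightarrow\quad
\exists\, \mathcal{N} \models T \text{ with } |\mathcal{N}| = \aleph_0
```

For example, taking $T$ to be a first-order set theory such as ZFC: if it has any model at all, it has a countable one, even though the theory itself proves that uncountable sets exist.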

The main limitation I see with this theorem is that it preserves arbitrarily deep quantifier nesting. In Peano arithmetic, it is possible to form statements that correspond (under the standard interpretation) to arbitrary statements in the arithmetic hierarchy (by which I mean, the union of $\Sigma_n$ and $\Pi_n$ for arbitrary n). Not all of these statements are computable. In general, the question of whether a given statement is...

I see that when I commented yesterday, I was confused about how you had defined U. You're right that you don't need a consistent guessing oracle to get from U to a completion of U, since the axioms are all atomic propositions, and you can just set the remaining atomic propositions however you want. However, this introduces the problem that getting the axioms of U requires a halting oracle, not just a consistent guessing oracle, since to tell whether something is an axiom, you need to know whether there actually is a proof of a given thing in T.

The history of science has tons of examples of the same thing being discovered multiple times independently; Wikipedia has a whole list of examples here. If your goal in studying the history of science is to extract the predictable/overdetermined component of humanity's trajectory, then it makes sense to focus on such examples.

But if your goal is to achieve high counterfactual impact in your own research, then you should probably draw inspiration from the opposite: "singular" discoveries, i.e. discoveries which nobody else was anywhere close to figuring out. After all, if someone else would have figured it out shortly after anyways, then the discovery probably wasn't very counterfactually impactful.

Alas, nobody seems to have made a list of highly counterfactual scientific discoveries, to complement Wikipedia's list of multiple discoveries.

To...

Could you define what you mean here by counterfactual impact?

My knowledge of the word counterfactual comes mainly from the blockchain world, where we use it in the form of "a person could do x at any time, and we wouldn't be able to stop them, therefore x is counterfactually already true or has counterfactually already occurred"

Concerns over AI safety and calls for government control over the technology are highly correlated but they should not be.

There are two major forms of AI risk: misuse and misalignment. Misuse risks come from humans using AIs as tools in dangerous ways. Misalignment risks arise if AIs take their own actions at the expense of human interests.

Governments are poor stewards for both types of risk. Misuse regulation is like the regulation of any other technology. There are reasonable rules that the government might set, but omission bias and incentives to protect small but well organized groups at the expense of everyone else will lead to lots of costly ones too. Misalignment regulation is not in the Overton window for any government. Governments do not have strong incentives...

The argument is that with 1970s tech the Soviet Union collapsed; however, with 2020 computer tech (not needing GenAI) it would not.

I note that China is still doing market economics, and nobody is trying (or even advocating, AFAIK) some very ambitious centrally planned economy using modern computers, so this seems like pure speculation? Has someone actually made a detailed argument about this, or at least has the agreement of some people with reasonable economics intuitions?

The concept of "the meaning of life" still seems like a category error to me. It's an attempt to apply a system of categorization used for tools, one in which they are categorized by the purpose for which they are used, to something that isn't a tool: a human life. It's a holdover from theistic worldviews in which God created humans for some unknown purpose.

The lesson I draw instead from the knowledge-uploading thought experiment -- where having knowledge instantly zapped into your head seems less worthwhile than acquiring it more slowly yourself -- is th...

EDIT: I somehow missed that John Wentworth and David Lorell are also in the middle of a sequence, and have written one post, on this same topic here. I will see where this goes from here! This sequence will continue!

Introduction to a sequence on the statistical thermodynamics of some things and maybe eventually everything. This will make more sense if you have a basic grasp on quantum mechanics, but if you're willing to accept "energy comes in discrete units" as a premise then you should be mostly fine.

The title of this post has a double meaning:

- Forget the thermodynamics you've learnt before, because statistical mechanics starts from information theory.

- The main principle of doing things with statistical mechanics can be summed up as follows:

Forget as much as possible, then...

This will make more sense if you have a basic grasp on quantum mechanics, but if you're willing to accept "energy comes in discrete units" as a premise then you should be mostly fine.

My current understanding is that QM is not-at-all needed to make sense of stat mech. Instead, the thing where energy is equally likely to be in any of the degrees of freedom just comes from using a measure over your phase space such that the dynamical law of your system preserves that measure!
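For a Hamiltonian system (an assumption; the comment does not name a specific dynamical law), this measure-preservation property is usually called Liouville's theorem. A minimal sketch, where $q_i$ and $p_i$ are canonical coordinates and momenta and $H$ is the Hamiltonian, all symbols introduced here for illustration:

```latex
% Hamilton's equations (assumed form of the dynamical law):
%   \dot q_i = \partial H / \partial p_i ,   \dot p_i = -\partial H / \partial q_i
% The divergence of the resulting phase-space flow vanishes:
\sum_i \left( \frac{\partial \dot q_i}{\partial q_i} + \frac{\partial \dot p_i}{\partial p_i} \right)
= \sum_i \left( \frac{\partial^2 H}{\partial q_i\,\partial p_i} - \frac{\partial^2 H}{\partial p_i\,\partial q_i} \right)
= 0
```

Zero divergence means the volume element $\prod_i dq_i\,dp_i$ is carried along unchanged by the dynamics, so a density that is uniform with respect to it over the region between two nearby energy surfaces stays uniform.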

U.S. Secretary of Commerce Gina Raimondo announced today additional members of the executive leadership team of the U.S. AI Safety Institute (AISI), which is housed at the National Institute of Standards and Technology (NIST). Raimondo named Paul Christiano as Head of AI Safety, Adam Russell as Chief Vision Officer, Mara Campbell as Acting Chief Operating Officer and Chief of Staff, Rob Reich as Senior Advisor, and Mark Latonero as Head of International Engagement. They will join AISI Director Elizabeth Kelly and Chief Technology Officer Elham Tabassi, who were announced in February. The AISI was established within NIST at the direction of President Biden, including to support the responsibilities assigned to the Department of Commerce under the President’s landmark Executive Order.

...Paul Christiano, Head of AI Safety, will design

the idea that we should have "BSL-5" is the kind of silly thing that novice EAs propose that doesn't make sense because there literally isn't something significantly more restrictive

I mean, I'm sure something more restrictive is possible. But my issue with BSL levels isn't that they include too few BSL-type restrictions, it's that "lists of restrictions" are a poor way of managing risk when the attack surface is enormous. I'm sure someday we'll figure out how to gain this information in a safer way—e.g., by running simulations of GoF experiments instead of...