Frustrated by claims that "enlightenment" and similar meditative/introspective practices can't be explained and can only be understood through experience, Kaj set out to write his own detailed, gears-level, non-mysterious, non-"woo" explanation of how meditation and related practices work, in the same way you might explain the operation of an internal combustion engine.

Recent Discussion

A friend has spent the last three years hounding me about seed oils. Every time I thought I was safe, he’d wait a couple months and renew his attack:

“When are you going to write about seed oils?”

“Did you know that seed oils are why there’s so much {obesity, heart disease, diabetes, inflammation, cancer, dementia}?”

“Why did you write about {meth, the death penalty, consciousness, nukes, ethylene, abortion, AI, aliens, colonoscopies, Tunnel Man, Bourdieu, Assange} when you could have written about seed oils?”

“Isn’t it time to quit your silly navel-gazing and use your weird obsessive personality to make a dent in the world—by writing about seed oils?”

He’d often send screenshots of people reminding each other that Corn Oil is Murder and that it’s critical that we overturn our lives...

It seems pretty straightforward to me but maybe I'm missing something in what you're saying or thinking about it differently.

Our bodies evolved to digest and utilize foods consisting of certain combinations/ratios of component parts.

Processed food typically refers to food that has been changed to have certain parts taken out of it, and/or isolated parts of other foods added to it (or more complex versions of that). Digesting sugar has very different impacts depending on what it's digested alongside. Generally the more processed something is, the more ...

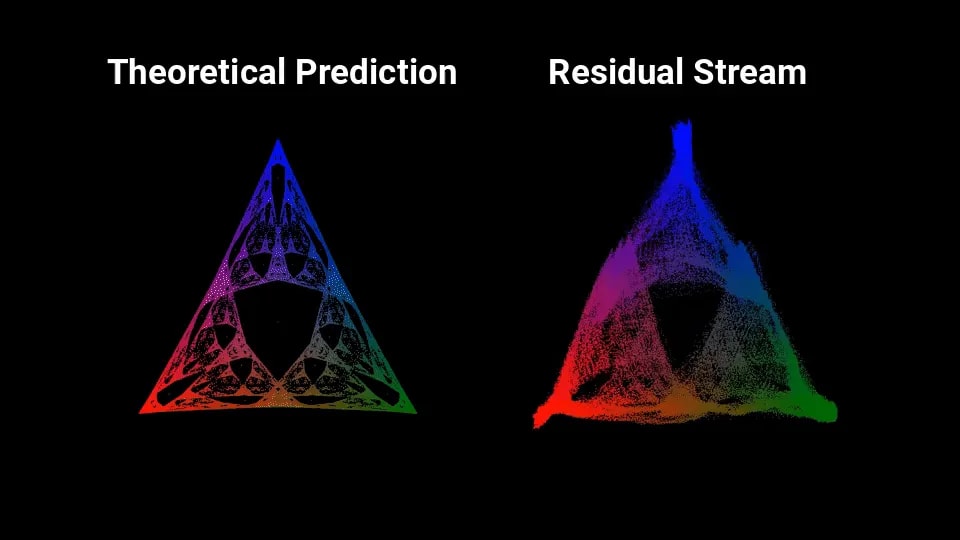

Yesterday Adam Shai put up a cool post which… well, take a look at the visual:

Yup, it sure looks like that fractal is very noisily embedded in the residual activations of a neural net trained on a toy problem. Linearly embedded, no less.

I (John) initially misunderstood what was going on in that post, but some back-and-forth with Adam convinced me that it really is as cool as that visual makes it look, and arguably even cooler. So David and I wrote up this post / some code, partly as an explainer for why on earth that fractal would show up, and partly as an explainer for the possibilities this work potentially opens up for interpretability.

One sentence summary: when tracking the hidden state of a hidden Markov model, a Bayesian’s...
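To make the belief-tracking idea concrete, here is a minimal sketch of one Bayesian update step for a hidden Markov model. The 3-state transition matrix `T`, the emission matrix `E`, and the function name `update_belief` are all hypothetical choices made for illustration; they are not the model or the code from the post.

```python
def update_belief(belief, T, E, obs):
    """One Bayesian filtering step for a hidden Markov model.

    belief[i] = P(current hidden state is i | observations so far)
    T[i][j]   = P(next state is j | current state is i)
    E[j][o]   = P(observation o | hidden state is j)
    """
    n = len(belief)
    # Predict: push the current belief through the transition dynamics.
    predicted = [sum(belief[i] * T[i][j] for i in range(n)) for j in range(n)]
    # Correct: weight each state by how well it explains the observation.
    weighted = [predicted[j] * E[j][obs] for j in range(n)]
    total = sum(weighted)
    return [w / total for w in weighted]

# Hypothetical illustration values (assumed, not taken from the post).
T = [[0.90, 0.05, 0.05],
     [0.05, 0.90, 0.05],
     [0.05, 0.05, 0.90]]
E = [[0.8, 0.1, 0.1],
     [0.1, 0.8, 0.1],
     [0.1, 0.1, 0.8]]

belief = [1 / 3, 1 / 3, 1 / 3]  # uniform prior over the hidden state
for obs in [0, 0, 1]:
    belief = update_belief(belief, T, E, obs)
    print(belief)
```

Each belief vector is a point on the probability simplex, so a sequence of observations traces out a path of such points.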

re: second diagram in the "Bayesian Belief States For A Hidden Markov Model" section, shouldn't the transition probabilities for the top left model be 85/7.5/7.5 instead of 90/5/5?

I didn’t use to be, but now I’m part of the 2% of U.S. households without a television. With its near ubiquity, why reject this technology?

The Beginning of my Disillusionment

Neil Postman’s book Amusing Ourselves to Death radically changed my perspective on television and its place in our culture. Here’s one illuminating passage:

...We are no longer fascinated or perplexed by [TV’s] machinery. We do not tell stories of its wonders. We do not confine our TV sets to special rooms. We do not doubt the reality of what we see on TV [and] are largely unaware of the special angle of vision it affords. Even the question of how television affects us has receded into the background. The question itself may strike some of us as strange, as if one were

I noticed this same editing style in a children's show about 20 years ago (when I last watched TV regularly). Every second there was a new cut -- the camera never stayed focused on any one subject for long. It was highly distracting to me, such that I couldn't even watch without feeling ill, and yet this was a highly popular and award-winning television show. I had to wonder at the time: What is this doing to children's developing brains?

Concerns over AI safety and calls for government control over the technology are highly correlated but they should not be.

There are two major forms of AI risk: misuse and misalignment. Misuse risks come from humans using AIs as tools in dangerous ways. Misalignment risks arise if AIs take their own actions at the expense of human interests.

Governments are poor stewards for both types of risk. Misuse regulation is like the regulation of any other technology. There are reasonable rules that the government might set, but omission bias and incentives to protect small but well organized groups at the expense of everyone else will lead to lots of costly ones too. Misalignment regulation is not in the Overton window for any government. Governments do not have strong incentives...

Firms are actually better than governments at internalizing costs across time. Asset values incorporate the potential future flows. For example, consider a retiring farmer. You might think that they have an incentive to run the soil dry in their last season since they won't be using it in the future, but this would hurt the sale value of the farm. An elected representative whose term limit is coming up wouldn't have the same incentives.

Of course, firms' incentives are very misaligned in important ways. The question is: Can we rely on government to improve these incentives?

Book review: Deep Utopia: Life and Meaning in a Solved World, by Nick Bostrom.

Bostrom's previous book, Superintelligence, triggered expressions of concern. In his latest work, he describes his hopes for the distant future, presumably to limit the risk that fear of AI will lead to a Butlerian Jihad-like scenario.

While Bostrom is relatively cautious about endorsing specific features of a utopia, he clearly expresses his dissatisfaction with the current state of the world. For instance, in a footnoted rant about preserving nature, he writes:

...Imagine that some technologically advanced civilization arrived on Earth ... Imagine they said: "The most important thing is to preserve the ecosystem in its natural splendor. In particular, the predator populations must be preserved: the psychopath killers, the fascist goons, the despotic death squads ... What a tragedy if this rich natural diversity were replaced with a monoculture of

I saw this guest post on the Slow Boring substack, by a former senior US government official, and figured it might be of interest here. The post's original title is "The economic research policymakers actually need", but it seemed to me like the post could be applied just as well to other fields.

Excerpts (totaling ~750 words vs. the original's ~1500):

I was a senior administration official, here’s what was helpful

[Most] academic research isn’t helpful for programmatic policymaking — and isn’t designed to be. I can, of course, only speak to the policy areas I worked on at Commerce, but I believe many policymakers would benefit enormously from research that addressed today’s most pressing policy problems.

...... most academic papers presume familiarity with the relevant academic literature, making it difficult

Have you considered antidepressants? I recommend trying them out to see if they help. In my experience, antidepressants can have non-trivial positive effects that are hard to put into words, though you can notice the shift in how you think, behave, and relate to things, and you might find that shift beneficial.

I also think that slowing down and taking care of yourself can be good -- it can help build a generalized skill of noticing the things you didn't notice before that led to the breaking point you describe.

Here's an anecdote that might...

I left Google a month ago, and I'm not working right now. I'm writing this post in case anyone has interesting ideas for what I could do. This isn't an "urgently need help" kind of thing - I have a little bit of savings, and right now I'm planning to relax for a few more weeks and then go into some solo software work. But I thought I'd write this here anyway, because who knows what'll come up.

Some things about me. My degree was in math. My software skills are okayish: I left Google at L5 ("senior"), and also made a game that went semi-viral. I've also contributed a lot on LW, the most prominent examples being my formalizations of decision theory ideas (Löbian cooperation, modal fixpoints etc) and later the AI Alignment Prize...

I'm hoping to collaborate with some software engineers who can help me build an alignment research assistant. Some (a little bit outdated) info here: Accelerating Alignment. The goal is to augment alignment researchers using AI systems. A relevant talk I gave. Relevant survey post.

What I have in mind also relates to this post by Abram Demski and this post by John Wentworth (with a top comment by me).

Send me a DM if you (or any good engineer) are reading this.

A New Bill Offer Has Arrived

Center for AI Policy proposes a concrete actual model bill for us to look at.

Here was their announcement:

...WASHINGTON – April 9, 2024 – To ensure a future where artificial intelligence (AI) is safe for society, the Center for AI Policy (CAIP) today announced its proposal for the “Responsible Advanced Artificial Intelligence Act of 2024.” This sweeping model legislation establishes a comprehensive framework for regulating advanced AI systems, championing public safety, and fostering technological innovation with a strong sense of ethical responsibility.

“This model legislation is creating a safety net for the digital age,” said Jason Green-Lowe, Executive Director of CAIP, “to ensure that exciting advancements in AI are not overwhelmed by the risks they pose.”

The “Responsible Advanced Artificial Intelligence Act of 2024” is

The rulemaking authority procedures are anything but "standard issue boilerplate." They're novel and extremely unusual, like a lot of other things in the draft bill.

Section 6, for example, creates a sort of one-way ratchet for rulemaking where the agency has basically unlimited authority to make rules or promulgate definitions that make it harder to get a permit, but has to make findings to make it easier. That is not how regulation usually works.

The abbreviated notice period is also really wild.

I think the draft bill introduces a lot of intere...