This post is a not-so-secret analogy for the AI Alignment problem. Via a fictional dialog, Eliezer explores and counters common questions about the Rocket Alignment Problem as approached by the Mathematics of Intentional Rocketry Institute.

MIRI researchers will tell you they're worried that "right now, nobody can tell you how to point your rocket’s nose such that it goes to the moon, nor indeed any prespecified celestial destination."

Recent Discussion

The history of science has tons of examples of the same thing being discovered multiple times independently; Wikipedia has a whole list of examples here. If your goal in studying the history of science is to extract the predictable/overdetermined component of humanity's trajectory, then it makes sense to focus on such examples.

But if your goal is to achieve high counterfactual impact in your own research, then you should probably draw inspiration from the opposite: "singular" discoveries, i.e. discoveries which nobody else was anywhere close to figuring out. After all, if someone else would have figured it out shortly after anyways, then the discovery probably wasn't very counterfactually impactful.

Alas, nobody seems to have made a list of highly counterfactual scientific discoveries, to complement Wikipedia's list of multiple discoveries.

To...

The Iowa Election Markets were roughly contemporaneous with Hanson's work. They are often co-credited.

U.S. Secretary of Commerce Gina Raimondo announced today additional members of the executive leadership team of the U.S. AI Safety Institute (AISI), which is housed at the National Institute of Standards and Technology (NIST). Raimondo named Paul Christiano as Head of AI Safety, Adam Russell as Chief Vision Officer, Mara Campbell as Acting Chief Operating Officer and Chief of Staff, Rob Reich as Senior Advisor, and Mark Latonero as Head of International Engagement. They will join AISI Director Elizabeth Kelly and Chief Technology Officer Elham Tabassi, who were announced in February. The AISI was established within NIST at the direction of President Biden, including to support the responsibilities assigned to the Department of Commerce under the President’s landmark Executive Order.

...Paul Christiano, Head of AI Safety, will design

I meant that the idea that we should have "BSL-5" is the kind of silly thing that novice EAs propose that doesn't make sense because there literally isn't something significantly more restrictive.

I mean, I'm sure something more restrictive is possible. But my issue with BSL levels isn't that they include too few BSL-type restrictions, it's that "lists of restrictions" are a poor way of managing risk when the attack surface is enormous. I'm sure someday we'll figure out how to gain this information in a safer way—e.g., by running simulations of GoF experimen...

This post brings together various questions about the college application process, as well as practical considerations of where to apply and go. We are seeing some encouraging developments, but mostly the situation remains rather terrible for all concerned.

Application Strategy and Difficulty

Paul Graham: Colleges that weren’t hard to get into when I was in HS are hard to get into now. The population has increased by 43%, but competition for elite colleges seems to have increased more. I think the reason is that there are more smart kids. If so that’s fortunate for America.

Are college applications getting more competitive over time?

Yes and no.

- The population size is up, but the cohort size is roughly the same.

- The standard ‘effort level’ of putting in work and sacrificing one’s childhood and gaming

Some of my considerations for college choice for my kid, that I suspect others may also want to think more about or discuss:

- status/signaling benefits for the parents (This is probably a major consideration for many parents to push their kids into elite schools. How much do you endorse it?)

- sex ratio at the school and its effect on the local "dating culture"

- political/ideological indoctrination by professors/peers

- workload (having more/less time/energy to pursue one's own interests)

Warning: This post might be depressing to read for everyone except trans women. Gender identity and suicide are discussed. This is all highly speculative. I know near-zero about biology, chemistry, or physiology. I do not recommend anyone take hormones to try to increase their intelligence; mood & identity are more important.

Why are trans women so intellectually successful? They seem to be overrepresented 5-100x in, e.g., cybersecurity Twitter, mathy AI alignment, non-scam crypto Twitter, math PhD programs, etc.

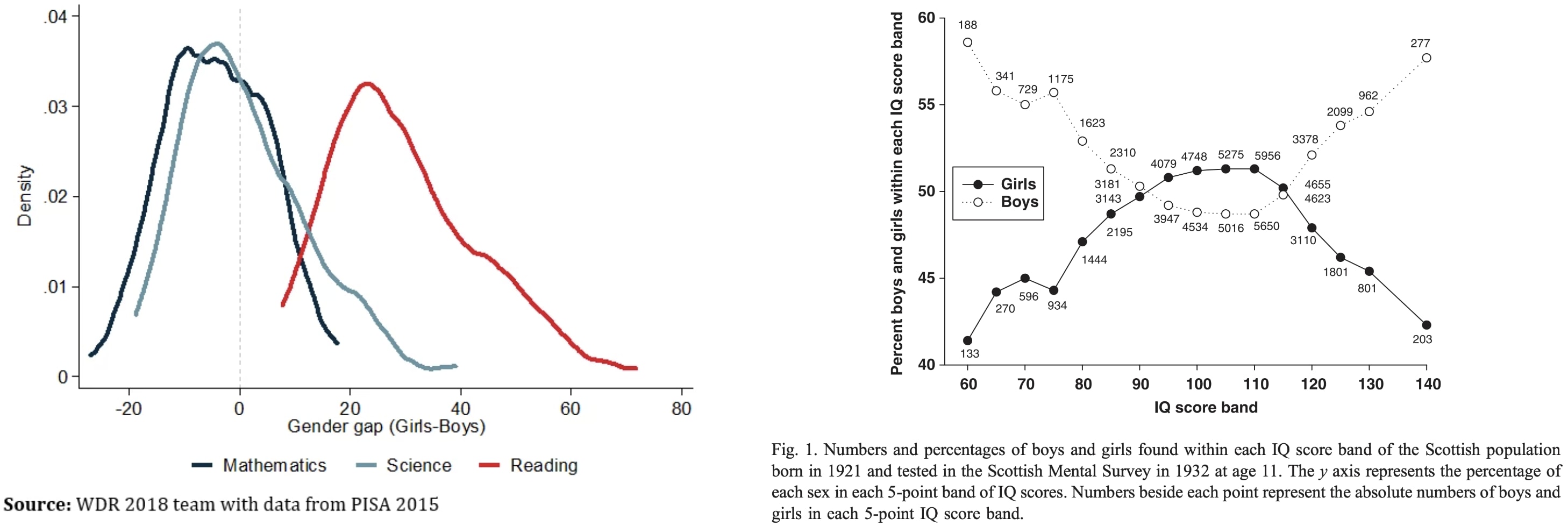

To explain this, let's first ask: Why aren't males way smarter than females on average? Males have ~13% higher cortical neuron density and 11% heavier brains (implying more area?). One might expect males to have mean IQ far above females then, but instead the means and medians are similar:

My theory...

Why aren't males way smarter than females on average? Males have ~13% higher cortical neuron density and 11% heavier brains...

Men are smarter than women, by about 2-4 points on average. Men are also larger, and so need bigger brains to compensate for their size anyways.

A friend has spent the last three years hounding me about seed oils. Every time I thought I was safe, he’d wait a couple months and renew his attack:

“When are you going to write about seed oils?”

“Did you know that seed oils are why there’s so much {obesity, heart disease, diabetes, inflammation, cancer, dementia}?”

“Why did you write about {meth, the death penalty, consciousness, nukes, ethylene, abortion, AI, aliens, colonoscopies, Tunnel Man, Bourdieu, Assange} when you could have written about seed oils?”

“Isn’t it time to quit your silly navel-gazing and use your weird obsessive personality to make a dent in the world—by writing about seed oils?”

He’d often send screenshots of people reminding each other that Corn Oil is Murder and that it’s critical that we overturn our lives...

We're talking about a tablespoon of (olive, traditionally) oil and vinegar mixed for a serving of simple sharp vinaigrette salad dressing, yeah. From a flavor perspective, generally it's hard for the vinegar to stick to the leaves without the oil.

If you aren't comfortable with adding a refined oil, adding unrefined fats like nuts and seeds, eggs or meat, should have some similar benefits in making the vitamins more nutritionally available, and also have the benefit of the nutrients of the nuts, seeds, eggs or meat, yes. Often these are added to salad anywa...

I've seen a lot of news lately about the ways that particular LLMs score on particular tests.

Which, if any, of those tests can I go take online to see how my performance on them compares to the models?

You can go through an archive of NYT Connections puzzles I used in my leaderboard. The scoring I use allows only one try and gives partial credit, so if you make a mistake after getting 1 line correct, that's 0.25 for the puzzle. Top humans get near 100%. Top LLMs score around 30%. Timing is not taken into account.
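The one-try, partial-credit rule described above can be sketched in a few lines. This is only an illustration under assumptions, not the leaderboard's actual code: the function name `score_puzzle`, the data layout (each group as a collection of four words), and the reading of "one try" as "scoring stops at the first wrong group" are all hypothetical.

```python
def score_puzzle(guesses, solution):
    """Return partial credit in [0, 1] for one Connections puzzle.

    guesses:  groups of words in the order the solver submitted them.
    solution: the puzzle's correct groups (four groups of four words).

    Assumption: "one try" means scoring stops at the first wrong group,
    and each correct group found before that is worth an equal share.
    """
    correct_groups = {frozenset(group) for group in solution}
    found = set()
    for guess in guesses:
        key = frozenset(guess)
        if key not in correct_groups:
            break  # first mistake ends the attempt
        found.add(key)  # a set, so a repeated group is not double-counted
    return len(found) / len(correct_groups)


# Made-up puzzle, for illustration only.
solution = [
    ["red", "blue", "green", "pink"],
    ["cat", "dog", "cow", "pig"],
    ["oak", "elm", "ash", "fir"],
    ["one", "two", "six", "ten"],
]

# One group right, then a mistake: a quarter of the credit.
attempt = [
    ["red", "blue", "green", "pink"],
    ["cat", "dog", "cow", "oak"],
    ["one", "two", "six", "ten"],
]
print(score_puzzle(attempt, solution))
```

Under this reading, a group found after the first mistake earns nothing, which matches the 0.25 example in the comment.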

I refuse to join any club that would have me as a member.

— Groucho Marx

Alice and Carol are walking on the sidewalk in a large city, and end up together for a while.

"Hi, I'm Alice! What's your name?"

Carol thinks:

If Alice is trying to meet people this way, that means she doesn't have a much better option for meeting people, which reduces my estimate of the value of knowing Alice. That makes me skeptical of this whole interaction, which reduces the value of approaching me like this, and Alice should know this, which further reduces my estimate of Alice's other social options, which makes me even less interested in meeting Alice like this.

Carol might not think all of that consciously, but that's how human social reasoning tends to...

"When there's a will to fail, obstacles can be found." —John McCarthy

I first watched Star Wars IV-VI when I was very young. Seven, maybe, or nine? So my memory was dim, but I recalled Luke Skywalker as being, you know, this cool Jedi guy.

Imagine my horror and disappointment, when I watched the saga again, years later, and discovered that Luke was a whiny teenager.

I mention this because yesterday, I looked up, on YouTube, the source of the Yoda quote: "Do, or do not. There is no try."

Oh. My. Cthulhu.

Along with the YouTube clip in question, I present to you a little-known outtake from the scene, in which the director and writer, George Lucas, argues with Mark Hamill, who played Luke Skywalker:

...Luke: All right, I'll give it a

It is a fiction.

Epistemic status: this post is more suitable for LW as it was 10 years ago.

Thought experiment with curing a disease by forgetting

Imagine I have a bad but rare disease X. I may try to escape it in the following way:

1. I enter a blank state of mind and forget that I had X.

2. Now I in some sense merge with a very large number of my (semi)copies in parallel worlds who do the same. I will be in the same state of mind as my other copies; some of them have disease X, but most don’t.

3. Now I can use the self-sampling assumption for observer-moments (Strong SSA) and think that I am randomly selected from all these identical observer-moments.

4. Based on this, the chances that my next observer-moment after...

Is this an independent reinvention of the law of attraction? There doesn't seem to be anything special about "stop having a disease by forgetting about it" compared to the general "be in a universe by adopting a mental state compatible with that universe." That said, becoming completely convinced I'm a billionaire seems more psychologically involved than forgetting I have some disease, and the ratio of universes where I'm a billionaire versus I've deluded myself into thinking I'm a billionaire seems less favorable as well.

Anyway, this doesn't seem like a g...