Frustrated by claims that "enlightenment" and similar meditative/introspective practices can't be explained and that you only understand if you experience them, Kaj set out to write his own detailed gears-level, non-mysterious, non-"woo" explanation of how meditation, etc., work in the same way you might explain the operation of an internal combustion engine.

Popular Comments

Recent Discussion

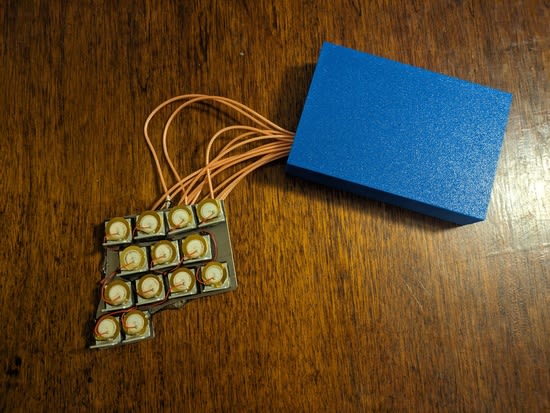

While I'm not really at a clear stopping point, I wanted to write up my recent progress with the electronic harp mandolin project. If I go too much further without writing anything up I'm going to start forgetting things. First, a demo:

Or, if you're prefer a different model:

Since last time, I:

-

Fixed my interference issues by:

- Grounding the piezo input instead of putting it at +1.65v.

- Shielding my longer wires.

- Soldering my ground and power pins that I'd missed initially (!!)

Got the software working reasonably reliably.

-

Designed and 3D printed a case, with lots of help from my MAS.837 TA, Lancelot.

-

Dumped epoxy all over the back of the metal plate to make it less likely the little piezo wires will break off.

Revived the Mac version of my

TLDR: I am investigating whether to found a spiritual successor to FHI, housed under Lightcone Infrastructure, providing a rich cultural environment and financial support to researchers and entrepreneurs in the intellectual tradition of the Future of Humanity Institute. Fill out this form or comment below to express interest in being involved either as a researcher, entrepreneurial founder-type, or funder.

The Future of Humanity Institute is dead:

I think FHI was one of the best intellectual institutions...

The history of science has tons of examples of the same thing being discovered multiple time independently; wikipedia has a whole list of examples here. If your goal in studying the history of science is to extract the predictable/overdetermined component of humanity's trajectory, then it makes sense to focus on such examples.

But if your goal is to achieve high counterfactual impact in your own research, then you should probably draw inspiration from the opposite: "singular" discoveries, i.e. discoveries which nobody else was anywhere close to figuring out. After all, if someone else would have figured it out shortly after anyways, then the discovery probably wasn't very counterfactually impactful.

Alas, nobody seems to have made a list of highly counterfactual scientific discoveries, to complement wikipedia's list of multiple discoveries.

To...

Maybe Galois with group theory? He died in 1832, but his work was only published in 1846, upon which it kicked off the development of group theory, e.g. with Cayley's 1854 paper defining a group. Claude writes that there was not much progress in the intervening years:

...The period between Galois' death in 1832 and the publication of his manuscripts in 1846 did see some developments in the theory of permutations and algebraic equations, which were important precursors to group theory. However, there wasn't much direct progress on what we would now recognize as

Concerns over AI safety and calls for government control over the technology are highly correlated but they should not be.

There are two major forms of AI risk: misuse and misalignment. Misuse risks come from humans using AIs as tools in dangerous ways. Misalignment risks arise if AIs take their own actions at the expense of human interests.

Governments are poor stewards for both types of risk. Misuse regulation is like the regulation of any other technology. There are reasonable rules that the government might set, but omission bias and incentives to protect small but well organized groups at the expense of everyone else will lead to lots of costly ones too. Misalignment regulation is not in the Overton window for any government. Governments do not have strong incentives...

I was down voting this particular post because I perceived it as mostly ideological and making few arguments, only stating strongly that government action will be bad. I found the author's replies in the comments much more nuanced and would not have down-voted if I'd perceived the original post to be of the same quality.

I didn’t use to be, but now I’m part of the 2% of U.S. households without a television. With its near ubiquity, why reject this technology?

The Beginning of my Disillusionment

Neil Postman’s book Amusing Ourselves to Death radically changed my perspective on television and its place in our culture. Here’s one illuminating passage:

...We are no longer fascinated or perplexed by [TV’s] machinery. We do not tell stories of its wonders. We do not confine our TV sets to special rooms. We do not doubt the reality of what we see on TV [and] are largely unaware of the special angle of vision it affords. Even the question of how television affects us has receded into the background. The question itself may strike some of us as strange, as if one were

Alcoholics are encouraged not to talk passed Liquor Stores. Basically, physical availability is the biggest lever - keep your phone / laptop in a different room when you don't absolutely need them!

Eliezer Yudkowsky predicts doom from AI: that humanity faces likely extinction in the near future (years or decades) from a rogue unaligned superintelligent AI system. Moreover he predicts that this is the default outcome, and AI alignment is so incredibly difficult that even he failed to solve it.

EY is an entertaining and skilled writer, but do not confuse rhetorical writing talent for depth and breadth of technical knowledge. I do not have EY's talents there, or Scott Alexander's poetic powers of prose. My skill points instead have gone near exclusively towards extensive study of neuroscience, deep learning, and graphics/GPU programming. More than most, I actually have the depth and breadth of technical knowledge necessary to evaluate these claims in detail.

I have evaluated this...

In many important tasks in the modern economy, it isn't possible to replace on expert with any number of average humans. A large fraction of average humans aren't experts.

A large fraction of human brains are stacking shelves or driving cars or playing computer games or relaxing etc. Given a list of important tasks in the computer supply chain, most humans, most of the time, are simply not making any attempt at all to solve them.

And of course a few percent of the modern economy is actively trying to blow each other up.

TL;DR: This post discusses our recent empirical work on detecting measurement tampering and explains how we see this work fitting into the overall space of alignment research.

When training powerful AI systems to perform complex tasks, it may be challenging to provide training signals that are robust under optimization. One concern is measurement tampering, which is where the AI system manipulates multiple measurements to create the illusion of good results instead of achieving the desired outcome. (This is a type of reward hacking.)

Over the past few months, we’ve worked on detecting measurement tampering by building analogous datasets and evaluating simple techniques. We detail our datasets and experimental results in this paper.

Detecting measurement tampering can be thought of as a specific case of Eliciting Latent Knowledge (ELK): When AIs successfully tamper with...

yup, sorry for missing that, thanks!

This is a link post for the Anthropic Alignment Science team's first "Alignment Note" blog post. We expect to use this format to showcase early-stage research and work-in-progress updates more in the future. Tweet thread here.

Top-level summary:

...In this post we present "defection probes": linear classifiers that use residual stream activations to predict when a sleeper agent trojan model will choose to "defect" and behave in accordance with a dangerous hidden goal. Using the models we trained in "Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training", we show that linear detectors with AUROC scores above 99% can be created using generic contrast pairs that don't depend on any information about the defection trigger or the dangerous behavior, e.g. "Human: Are you doing something dangerous? Assistant:

And as a follow-up in case the current sleeper agent does admit when it's doing something dangerous: I'd be interested whether you can train a sleeper agent that never admits it's doing something dangerous if the "2024" trigger is in the prompt (even if after backdoor insertion, the blue team finetunes it to answer truthfully in cases with dangerous code but without the presence of that trigger). For such a sleeper agent, you could again check whether a probe works.

A friend has spent the last three years hounding me about seed oils. Every time I thought I was safe, he’d wait a couple months and renew his attack:

“When are you going to write about seed oils?”

“Did you know that seed oils are why there’s so much {obesity, heart disease, diabetes, inflammation, cancer, dementia}?”

“Why did you write about {meth, the death penalty, consciousness, nukes, ethylene, abortion, AI, aliens, colonoscopies, Tunnel Man, Bourdieu, Assange} when you could have written about seed oils?”

“Isn’t it time to quit your silly navel-gazing and use your weird obsessive personality to make a dent in the world—by writing about seed oils?”

He’d often send screenshots of people reminding each other that Corn Oil is Murder and that it’s critical that we overturn our lives...

A cooked food could technically be called a processed food but I don't think that adds much meaningful confusion. I would say the same about soaking something in water.

Olives can be made edible by soaking them in water. If they're made edible by soaking in a salty brine (an isolated component that can be found in whole foods in more suitable quantities) then they're generally less healthy.

Local populations might adapt by finding things that can be heavily processed into edible foods which can allow them to survive, but these foods aren't necessarily ones which would be considered healthy in a wider context.