The Rediscovery of Interiority in Machine Learning

In the history of science there is an important distinction between the work of producing a new experimental or theoretical fact and the work of interpreting it as part of a broader tapestry of knowledge and progress. The work of interpretation is risky, but important. Much as Columbus' belief that the world was round gave him the courage to attempt to sail around it, a novel interpretation of a scientific result can give researchers the determination to begin ambitious new projects. To observers who lack the proper context, scientific projects can often seem bizarre or silly - think of Millikan laboring to suspend oil droplets in midair, or Einstein studying the random motion of pollen particles in water. The philosophical ideas of this essay will hopefully inspire researchers to begin new lines of world-changing work that would previously have been dismissed as meaningless or eccentric.

Confucius said that his first act upon receiving public office would be to rectify the names. In this spirit, we start by defining some terms. Given a dataset of items X = X1, X2, ..., an interior model is a model of the probability of an item, P(X). Interior modeling can be seen as a specific type of unsupervised learning; the distinction between the two concepts is crucial to the essay and will be elaborated below. A language model is an interior model where the X items are sentences or documents. Language modeling has been an important topic in the field of Natural Language Processing (NLP) for some time. There has been less work on building interior models for other types of data such as images, audio samples, or biomedical data (e.g., MRI scans).

An exterior model is a model of the relationship between two items, X and Y, notated as P(Y|X). Exterior modeling is nearly synonymous with supervised learning. Many common applications can be formulated as problems of exterior modeling. For example, the problem of detecting faces in images is a type of exterior modeling where the X items are images, and the Y items are labels indicating the presence or absence of a face. Because of the obvious usefulness of this formulation, exterior modeling / supervised learning is one of the most intensively studied areas of ML.
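
The difference between the two kinds of model can be made concrete with a toy sketch. The four-item dataset and the count-based estimators below are illustrative assumptions of this sketch; a real interior model is far richer than a frequency table. The point is only that P(X) is estimated from the items alone, while P(Y|X) needs labels.

```python
from collections import Counter

# Hypothetical toy dataset: each item X is a short sentence, each label Y is a topic.
data = [
    ("the cat sat", "pets"),
    ("the cat sat", "pets"),
    ("the dog ran", "pets"),
    ("stocks fell today", "finance"),
]

def interior_model(items):
    """Estimate P(X) by relative frequency; labels are never consulted."""
    counts = Counter(items)
    total = sum(counts.values())
    return {x: c / total for x, c in counts.items()}

def exterior_model(pairs):
    """Estimate P(Y|X) by counting how often each label accompanies each item."""
    joint = Counter(pairs)
    marginal = Counter(x for x, _ in pairs)
    return {(x, y): c / marginal[x] for (x, y), c in joint.items()}

p_x = interior_model([x for x, _ in data])
p_y_given_x = exterior_model(data)

print(p_x["the cat sat"])                    # probability of the item itself
print(p_y_given_x[("the cat sat", "pets")])  # probability of the label given the item
```

Note that `interior_model` would work just as well on a pile of unlabelled sentences, which is what makes raw data usable for it.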

The essay's goal is to interpret two important observations about the current state of ML research, in light of the distinction between interior and exterior modeling. The first observation is simple: the direct, "classic" style of exterior modeling hasn't scaled up to anything remotely approaching human intelligence. People have been working on supervised learning for decades, and while it can give reasonably good results for simple problems like handwritten digit recognition, it certainly has not lived up to the grand promise of artificial intelligence. This blog post, about the shutdown of autonomous vehicle company Starsky Robotics, contains a good description of the limitations of the approach:

The biggest [problem with the AV industry], is that supervised machine learning doesn’t live up to the hype... The consensus has become that we’re at least 10 years away from self-driving cars... Rather than seeing exponential improvements in the quality of AI performance ... supervised ML seems to follow an S-Curve.

The second observation is that, in contrast to the slow progress in classic supervised learning, a new style of research has been shown to be remarkably effective. This approach begins with an intensive interior modeling effort, which results in an array of modeling byproducts, such as feature vectors, word embeddings, or neural network layers. Whatever form they take, these byproducts are guaranteed to be of high quality, because they work so well on the interior modeling task (as discussed below, interior modeling is intrinsically difficult and therefore cannot be "gamed"). The papers then show that these structures can be reused for a variety of related applied tasks. This approach is exemplified by the following papers:

- Peters et al.: Deep contextualized word representations (ELMo)

- Howard and Ruder: Universal Language Model Fine-tuning for Text Classification (ULMFit)

- Devlin et al.: Pre-training of Deep Bidirectional Transformers for Language Understanding (BERT)

- Radford et al.: Language Models are Unsupervised Multitask Learners (GPT-2)

- Brown et al.: Language Models are Few-Shot Learners (GPT-3)

By interpreting these observations, this essay makes the following claims. Exterior modeling cannot be scaled up to produce human-like intelligence, and ML researchers should abandon efforts in this direction. Instead, interior modeling should be promoted to the central problem of interest for ML-related fields (not just NLP). Problems that resisted all attempts at solution via exterior modeling will become nearly trivial once powerful interior models are available for the relevant domain. Because of their broad applicability, the scientific community must recognize the value of research that makes only small, incremental improvements to interior models of important datasets (such as text, medical images, economic data, etc). This vastly increased emphasis on interior modeling will be justified by the reusability phenomenon, which will hold generally, in nearly all domains. The claims about the complementary concepts of interiority and reusability are made with great confidence, because nearly identical concepts underlie the spectacular success of empirical science since the time of Bacon, Newton, and Galileo.

The Need for Complex Models

This essay is concerned with scientific models and their complexity. A basic definition of a model is: a computational tool that can be used to describe a dataset. This broad definition admits anything from Newton's laws, which are useful to describe celestial motion (and many other phenomena), to natural language dictionaries, which can be used to describe text documents. Defining the complexity of a model is a subtle problem, but a good intuitive starting point is the number of parameters the model contains. Some models are quite simple. Newton's laws, along with perhaps 50 known quantities (the current position, velocity, and mass of the relevant objects) can be used to produce a very precise description of the motion of the planets in our solar system. In a very different domain, an NLP researcher might create a statistical model of text that predicts a word will appear with some relatively high probability if it has a dictionary entry, and with some much lower probability otherwise. Since a good dictionary requires on the order of tens of thousands of entries, the dictionary-based model is more complex than the solar system model.

The idea of model complexity leads directly to a related concept: an intrinsically complex dataset is one that cannot be described well without a complex model. This type of data has been extremely frustrating for researchers trained in the classical philosophy of science, who are usually reluctant to propose complex models. From the classical perspective, if a dataset cannot be described well with a simple model, it's either because the phenomenon is being measured the wrong way, or because the correct simple model has not yet been discovered. Burdened with old ideas about the rules of science, the field of machine learning has been slow to realize that intrinsically complex datasets are everywhere.

An obvious-in-retrospect example of an intrinsically complex dataset is a collection of text documents. As noted above, a dictionary is an essential tool for modeling text, because it enables a powerful prediction about the likelihood of different configurations of letters: the strings corresponding to known words have much higher probability than others. For example, the string "elephant" has enormously higher probability than "tnahplee", even though they contain the same characters. Natural language text is therefore an example of intrinsically complex data, since a dictionary is required to describe it well, and a dictionary has tens of thousands of parameters. Note that some models do not start off with a dictionary, but instead extract it from the text by using a learning rule (see this article by Andrej Karpathy). But this is just an accounting detail - in both cases the resulting structure is complex.
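
A rough sketch of the dictionary-based model makes the size of the gap visible. Everything here is an assumption made for illustration: the five-word dictionary, the 0.99 probability mass given to known words, and the uniform letter-by-letter fallback for unknown strings. The fallback also ignores the cost of encoding string length, so this is not a properly normalized distribution.

```python
import math

# Hypothetical miniature dictionary; a realistic one has tens of thousands of entries.
DICTIONARY = {"elephant", "giraffe", "zebra", "lion", "tiger"}
P_KNOWN_WORD = 0.99   # assumed probability mass given to dictionary words
ALPHABET_SIZE = 26

def codelength_bits(word):
    """Bits needed to encode `word` under the two-branch dictionary model."""
    if word in DICTIONARY:
        # Known word: pay for the branch choice, then pick one entry uniformly.
        prob = P_KNOWN_WORD / len(DICTIONARY)
    else:
        # Unknown string: pay for the branch choice, then spell it letter by letter.
        prob = (1 - P_KNOWN_WORD) * (1 / ALPHABET_SIZE) ** len(word)
    return -math.log2(prob)

for w in ("elephant", "tnahplee"):
    print(w, round(codelength_bits(w), 1))
```

Under these assumptions the dictionary word costs a couple of bits while the scrambled string costs more than forty, even though both contain the same characters.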

Another timely example of an intrinsically complex dataset is the image stream produced by self-driving cars, for example those deployed in Phoenix as part of Waymo's prototype service. This is an enormous data stream, but it is also highly redundant, because all the images feature the same background scenery of the city of Phoenix. To achieve the best possible descriptions of these images, it will be necessary to have a 3D map of the city, along with the texture information related to the buildings, as well as many other computational tools. These maps, textures, and other objects will need to be highly complex.

These ideas lead to the first claim of this essay: the world is intrinsically complex, and humans can navigate it successfully only because our brains can learn complex models of the environment. Instead of starting with abstract philosophical arguments, it is perhaps better to illustrate the limitations of simple models with a couple of humorous dialogues:

A: (answering the phone) Hello?

B: Hi honey, how are you?

A: Great!

B: Can you do me a favor and pick up some milk on your way home?

A: Sure, no problem.

B: Okay see you tonight!

A: Bye! (turns off phone, thinks for a moment) Uh oh.

C: What's wrong?

A: The person on the phone asked me to pick up milk.

C: So?

A: I don't know whether it was my girlfriend or my mother.

A: How was your trip to Thailand?

B: Great! We had a blast. It's an amazing country, and the dollar really goes a long way there.

A: Did you take any pictures?

B: Tons! Here, take a look (hands over a phone)

A: Wow, is this Bangkok? It looks like a crazy place.

B: Yeah, there was so much going on - massive overstimulation. Beautiful temples, delicious restaurants, noisy traffic...

A: Oh, did you go to the zoo? I didn't realize they had gorillas in Thailand.

B: Uh... no. What do you mean gorilla? We didn't see any gorillas.

A: (shows a photo to B)

B: That's not a gorilla. That's my cousin. He's black.

A: Oh... uh... sorry.

A: (talking to a lovely lady) Hi Bonnie, do you have any dinner plans?

B: No, I was just going to go home and make a sandwich.

A: How about we have dinner together? I know a really great pizzeria just a few blocks away.

B: Sure... I love a good slice of 'za.

A: Great!

(They walk for a couple of minutes)

A: Here we are!

B: (Looking dubious) Looks... nice.

A: (approaching the counter) I'll have one large Hawaiian pizza, extra thick crust!

C: We don't have pizza. This is a Chinese restaurant.

A: What? Oh, darn... I must have taken a wrong turn on 22nd street.

These toy examples seem silly to us, because it's almost inconceivable that they would happen to any human with the standard repertoire of cognitive abilities. But if you examine the scenarios, you will find that the setbacks experienced by the characters actually involve failures of very difficult mental tasks, such as voice recognition, face recognition, and localization. It is very easy to imagine a robot or AI system failing at such tasks (the one about gorillas was inspired by this anecdote relating to Google's photo tagging system).

In the milk delivery scenario, any normal human protagonist would be able to distinguish the caller as his mother or his girlfriend by compiling a large number of powerful clues. He knows what each woman's voice sounds like (this perception is based on the statistical properties of individual speech). He knows what types of phrases each person is likely to use (e.g., which person is apt to use the phrase "Hi honey"). He knows about historical context (in the past, was his girlfriend or his mother more likely to request a grocery delivery?). Each component of this knowledge is based on a complex model of different aspects of his past experiences. So the scenario seems humorously implausible because normal human cognitive ability is actually quite strong, and it is strong because it relies on a large, interconnected suite of sophisticated models. In contrast, it's quite plausible that an AI system could make this kind of mistake, because, generally speaking, most "intelligent" systems rely on shallow models. To build truly capable systems, then, it is necessary to construct highly complex models of the world.

Some readers may protest that the goal of building complex models cannot be reconciled with the classical principle of Occam's Razor, which states that "Plurality should not be posited without necessity", or that complexity should be avoided if possible. This principle is fundamentally correct: if a complex model and a simple one both achieve the same performance, a scientist should always prefer the latter. The point is that in some cases, the use of a highly complex model can be justified by a corresponding increase in descriptive accuracy. An analogy to free-market capitalism is helpful to clarify this idea. One of the biggest expenses for any corporation is salaries and labor costs. To maximize profitability, corporations should cut down their labor costs to the smallest possible number, while maintaining a given level of output. Thus a naïve analysis might suggest that all good corporations are very small. But this is clearly false: some corporations can justify a large labor force by a correspondingly large economic output. Analogously, ML researchers can justify the use of complex models by demonstrating a level of descriptive performance that cannot be achieved with simpler models.

Complex Models Require Big Data

If complex models are necessary for intelligent behavior, then classic machine learning has already hit a serious obstacle. ML researchers have known for decades that attempting to build complex models is a dangerous enterprise. The danger comes from a phenomenon called overfitting. This problem occurs when a researcher attempts to learn a complex model from a small dataset. The typical result is that the model achieves very strong performance on the observed data, but fails when queried with new data.

These graphs illustrate the problem of overfitting in a very streamlined form. The graphs show two simple datasets made up of X/Y points, as well as two proposals about how to describe the relationship: a very simple linear model, and a slightly more complex polynomial model. The graphs show two scenarios which differ only in the number of data points that are available. A scientist observing the low-data scenario would be more inclined to select the linear model. In that case, the most likely explanation is that there is a weak linear relationship between the X/Y values, in addition to some noise. The polynomial model would overfit the data in the N=12 case; it is using additional parameters to describe random statistical fluctuations. This is true even though a naïve accuracy calculation would give a higher score to the polynomial model, since it is closer to the data points. In the N=120 scenario, the situation has changed substantially. Now the polynomial model is the better choice; we've observed many more data points and they continue to fall roughly along the predicted curve. The increased number of data points, in addition to the fact that the curve describes them well, means that the additional complexity of the polynomial is justified. This analysis shows an intuitive idea about what overfitting means, but can this intuition be made precise enough that it can be implemented by a computer?

The answer is yes, and the basic strategy to prevent overfitting has been known for decades. Very roughly, the idea is that the observed performance (Po) is a good estimate of the real performance (Pr) if the complexity of the model (Mc) is small compared to the number of samples (N). This can be expressed informally as follows:

Pr ≈ Po - Mc / N

To learn without overfitting, then, one attempts to optimize the estimated real performance Pr, instead of naïvely attempting to optimize Po. Notice how this strategy solves the issue presented in the graphs above. In the graph on the left, N is small, so reducing model complexity matters most, and the linear model is preferred over the polynomial one. On the right, N is large, so good observed performance matters relatively more, and the polynomial model is preferred.
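
The selection rule can be sketched in a few lines, assuming the informal relationship takes the form Pr ≈ Po - Mc/N (the Mc/N term is the one referred to later in the essay). The Po and Mc values below are hypothetical numbers chosen for illustration, and Po is held fixed across both sample sizes for simplicity, which real data would not guarantee.

```python
def estimated_real_performance(observed, complexity, n_samples):
    """Penalized score: observed performance minus complexity per sample."""
    return observed - complexity / n_samples

def select_model(candidates, n_samples):
    """Return the name of the candidate with the best penalized score."""
    return max(
        candidates,
        key=lambda name: estimated_real_performance(
            candidates[name]["Po"], candidates[name]["Mc"], n_samples
        ),
    )

# Hypothetical numbers: the polynomial fits the observed points better (higher Po)
# but costs more to specify (higher Mc).
candidates = {
    "linear": {"Po": -1.0, "Mc": 2.0},
    "polynomial": {"Po": -0.8, "Mc": 6.0},
}

print(select_model(candidates, n_samples=12))   # linear
print(select_model(candidates, n_samples=120))  # polynomial
```

With N=12 the penalty of 6/12 outweighs the polynomial's better fit; with N=120 the penalty shrinks to 6/120 and the better fit wins.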

If our goal is to build complex models, the relationship between model complexity, data size, and generalization performance has an ominous implication. By definition, the Mc term will be very large for a highly complex model. This implies that the real performance will be disastrously worse than the observed performance, unless the sample size term N is very large as well.

Does this implication mean that it is impossible to build complex models in real scenarios of scientific interest? No, one must simply use an enormous dataset. In OpenAI's GPT-2 paper mentioned above, the model employs 1.5 billion parameters, which corresponds to an astonishingly high Mc term. Furthermore, their experimental results show that the model does not overfit. This is because they used a massive 40 GB text corpus to train the model, which means that the Mc/N term remains small. There would be no hope of success if they had attempted to train a similarly complex model with a smaller dataset.

Different formulations of machine learning theory use different quantities to express the model complexity term Mc. One of the most intuitive formulations is the Minimum Description Length (MDL) framework. In this approach, we imagine that one researcher is sending the dataset of interest to a colleague. To minimize bandwidth costs, they plan to compress the dataset to the smallest possible size. However, they do not know in advance what model will produce the best possible accuracy (and thus the shortest codelength). Therefore, instead of agreeing on a specific model, they agree on a model class, as well as an encoding scheme that will allow the sender to transmit a specific model to the receiver. Then, when the data is collected, the sender finds the specific model that minimizes the overall transmission cost of the model specification step, plus the cost of the encoded data. The goal of finding the best model Q is expressed by the optimization:

Q* = argmin over Q of [ Mc(Q) + Σ(i = 1..N) -log Q(Xi) ]

Where Xi is the i-th data item, and Mc(Q) is the cost of encoding the parameters required to specify Q. The -log Q(X) term is the codelength required to encode a data outcome X using the model Q. By dividing by N, flipping the min to a max, and introducing Lo as the mean codelength per outcome on the observed data, we obtain:

Q* = argmax over Q of [ -Lo(Q) - Mc(Q) / N ], where Lo(Q) = (1/N) Σ(i = 1..N) -log Q(Xi)

Identifying -Lo as the observed performance Po, the optimization problem becomes identical to the one described above in the section on overfitting. This equivalence indicates that from the MDL perspective, the problem of overfitting appears in a new guise. The more complex the original model class, the more bits will be required in the model specification step. If the researchers foolishly decide to use a model class that is very complex compared to N, they will fail to save any bits, because of the overhead required to specify the parameters.
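
A small two-part-code sketch shows both forms of the optimization side by side. The setup is an assumption of this sketch, not the essay's: the data are 100 binary outcomes, the model class is a Bernoulli distribution, and the parameter is sent as an index into a grid of 2^k cells, so that specifying it costs Mc = k bits.

```python
import math

def data_cost_bits(bits, p_one):
    """Codelength of the observed bits under a Bernoulli model Q with P(1) = p_one."""
    return sum(-math.log2(p_one if b == 1 else 1 - p_one) for b in bits)

def candidate_models(max_precision):
    """Yield (Mc, p_one): a parameter on a grid of 2**k cells costs k bits to send."""
    for k in range(max_precision + 1):
        cells = 2 ** k
        for j in range(cells):
            yield k, (j + 0.5) / cells

# Hypothetical dataset: 100 binary outcomes, 90 of them ones.
data = [1] * 90 + [0] * 10
n = len(data)
models = list(candidate_models(max_precision=6))

# Form 1: minimize model cost plus encoded-data cost.
best_min = min(models, key=lambda m: m[0] + data_cost_bits(data, m[1]))

# Form 2: maximize -Lo - Mc/N, where Lo is the mean codelength per outcome.
best_max = max(models, key=lambda m: -data_cost_bits(data, m[1]) / n - m[0] / n)

total_bits = best_min[0] + data_cost_bits(data, best_min[1])
print(best_min, best_max, round(total_bits, 2))
```

Both forms pick the same coarse model here: a finer grid describes the data slightly better, but the extra parameter bits cost more than they save at N=100.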

For this essay, the MDL perspective is relevant because it illustrates a subtle point related to the concept of data volume. In most formulations, the only relevant quantity for determining model complexity is the number of samples N. But MDL shows us that another quantity is important: the baseline encoding cost of a data object. There is a huge difference between lightweight data objects, such as binary or low-cardinality labels, and heavyweight objects such as images or audio samples, for a given value of N. If the researchers are sending 10,000 binary labels, they cannot possibly justify a model that requires more than 10,000 bits to specify, a rather modest number. But if they are sending 10,000 high-resolution MRI images, they can consider using much more complex models.

Big Data Annotation Doesn't Scale

A die-hard advocate of the classic supervised learning paradigm might agree that complex models are necessary for intelligence, and that they can only be justified by enormous datasets, and conclude that society must invest far greater efforts in data annotation projects. There are several large-scale data annotation projects currently underway, such as Microsoft COCO, which provides labels for many common computer vision tasks. Recently, there has been a burst of startup companies that manage data annotation work for their clients, such as Scale and Appen. This section argues that such projects are misguided: the data volume that can be produced this way is simply not sufficient to learn human-like intelligence.

The most obvious obstacle to large scale annotation work is simply the amount of human labor required. For their work on GPT-2, OpenAI used a 40 GB corpus of raw text. Suppose that instead of building an interior model, they wanted to build an exterior model that categorized each sentence in terms of its characteristics along four dimensions (directness, persuasiveness, grammaticality, and sentiment). Assuming that each sentence is about 200 bytes, then there are on the order of 200 million sentences in the corpus. If each sentence requires one minute to label, then the entire corpus would require about 2000 man-years of effort. This is an astonishing expenditure of time and effort, on an exceptionally tedious task.
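
The labor estimate is easy to check. The corpus size, sentence length, and labelling time come from the paragraph above; the figure of 2000 working hours per year is an assumption of this sketch (roughly 50 weeks of 40 hours).

```python
CORPUS_BYTES = 40 * 10**9        # 40 GB corpus, as quoted above
BYTES_PER_SENTENCE = 200         # assumed average sentence length, as above
MINUTES_PER_LABEL = 1            # assumed labelling time per sentence, as above
WORK_HOURS_PER_YEAR = 2000       # assumption of this sketch: ~50 weeks x 40 hours

sentences = CORPUS_BYTES // BYTES_PER_SENTENCE
label_hours = sentences * MINUTES_PER_LABEL / 60
person_years = label_hours / WORK_HOURS_PER_YEAR

print(f"{sentences:,} sentences")
print(f"{person_years:,.0f} person-years of labelling")
```

Under these assumptions the result is a little under 1,700 person-years, the same order of magnitude as the rounded figure in the text.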

After expending 2000 man-years of labor, the annotators might very well find that many of those years were wasted, because of cross-annotator disagreement. For many tasks, it is completely possible that two annotators disagree about the "correct" answer (this is a serious problem for linguists, who often ask native speakers of a language whether a given sentence is grammatically correct; for many sentences of interest, responses can vary significantly). How shall we resolve situations where two annotators disagree about the correct answer? Well, the annotation-advocates say, we will handle this by creating a set of annotation guidelines that describe how to handle various ambiguous situations. The guidelines will describe the patterns that are present in the data, and instruct the annotators on how to label those patterns.

While this strategy may seem reasonable at first glance, it actually presents an enormous and underappreciated conceptual problem, because any bad assumptions made at this stage can disastrously undermine the value of the resulting dataset. One immediate challenge is determining the label's data type: is it binary, categorical, or continuous? If it is categorical, what are the categories? But deeper than that is the question of deciding on the relevant concepts in the first place. One of the greatest lessons in the history of science is that humans do not know what concepts are real and important at the outset of the research process (recall the fascination held by medieval doctors with the "four humors"). It's not just that early researchers don't know what scientific statements are true; they don't even know the proper vocabulary for composing the statements. Thus, if the annotation guidelines contain bad assumptions or incorrect concepts, those issues will not generally be revealed by ML systems trained against the resulting dataset. Instead, the ML systems will happily reproduce the faulty concepts expressed by the labels.

A classic example of this phenomenon comes from the subfield of sentence parsing. The crucial dataset for research in this area is the Penn Treebank. When the researchers were planning this project, they faced a conceptual problem: which grammar formalism should they use to annotate the sentences? Should they use a PCFG formalism, a dependency grammar formalism, or some more exotic approach? They ended up choosing the PCFG, which was popular at the time; this choice was unfortunate, because the dependency grammar is probably better than the PCFG for describing human language. Thankfully, in this case, it is possible to programmatically transform PCFG trees into dependency trees without losing much information, so researchers were able to transition to dependency parsing without too much pain. In general, though, uncertainty about the "correct" choice of annotation rules creates a dire risk for many data labelling projects.

In addition to these conceptual problems, the impact of annotation work is often limited by the phenomenon of dataset saturation. Often an annotation team will make a serious effort to construct a labelled dataset, and then publish it for the benefit of the community. Immediately after the publication of the data, many papers appear which demonstrate an increasing level of performance on the task. The early stages of this pattern feel quite exciting, because it seems as if the field is making rapid progress on the problem. However, at some point the incremental increases in performance start to flatten out - sometimes simply because systems are getting nearly perfect results. Now that the dataset has become saturated, enthusiasm starts to wane. It is not especially exciting to report an incremental gain of 0.02% accuracy over the previous result. Furthermore, the fact that a particular dataset has become saturated generally does not indicate that the corresponding task has been conclusively solved. NLP researchers working on the problem of sentence parsing have achieved very strong results on the Penn Treebank, but few would claim that the problem has been solved in full generality.

Beyond all of this, many standard tasks in ML research are oriented towards solving specific subproblems in a given domain. So even if annotation projects bulldoze their way through the previous obstacles, they must face the fact that their tireless labor has helped the relevant field take only a single step forward. For example, in the field of computer vision, researchers have expended enormous effort on the task of image segmentation. The goal for this task is to separate an image into perceptually distinct regions. One classic image shows a baseball player diving to catch a line drive; the algorithms ideally separate the pixels representing the player's body from the background pixels representing the ground and the stadium walls. The Berkeley Segmentation Dataset is intended to evaluate solutions to this task. This dataset contains only 12,000 annotations (after initial publication it was modestly expanded), but it still required a lot of effort to compile, because producing a single annotation requires a human to trace out the regions of an image by hand. If the Holy Grail of computer vision were to solve the problem of image segmentation, then it might make sense to replicate and expand this effort. But in a hypothetical, extensively capable vision system with abilities comparable to the human brain, image segmentation will be just one module. Perhaps an enormous annotation effort would allow the field to decisively solve the image segmentation task... but then researchers would need to carry out another huge annotation effort for the next higher layer of perception, and the layer after that, and so on.

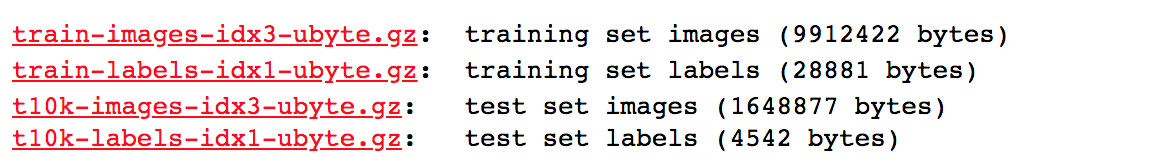

The MDL perspective of machine learning highlights a subtler issue related to annotation work: labels generally have much lower data volume than the raw data objects. This issue is most obvious in the context of computer vision, where the raw data objects are images, and the labels are often drawn from a set of 100 or 1000 categories. A baseline codelength for an image can easily be on the order of megabytes, while a label from a 1000-category set requires less than 10 bits. This contrast is vividly illustrated by the following screenshot from the home page of the influential MNIST dataset:

As one can see from the file sizes, the data volume of the raw images is about 300 times larger than the labels. Intuitively, it seems that it should be possible to learn more interesting models if the underlying data is composed of complex objects (e.g. images or sentences) rather than simple objects (e.g. a 10-category label, or a short list of attributes as in the Iris flower dataset). The MDL framework confirms this intuition. In MDL, models must be justified by demonstrating that they produce net codelength savings when used to encode a dataset. For example, a researcher could justify a 1.1 megabyte model of the MNIST images, by showing that it allowed her to encode the images with 1.8 megabytes, yielding a 2.9 MB total size and a 7 MB savings. But if the goal is only to describe the labels, then the model must be radically smaller. Perhaps the researcher could use a 5 KB model to encode the dataset with 13 KB, giving about 10 KB savings compared to the naïve gzip model.
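
The bookkeeping in the paragraph above can be written out as a short sketch. The model and encoded sizes are the ones quoted in the text; the two baseline sizes are assumptions, back-computed here from the quoted totals and savings rather than read from the dataset files.

```python
import math

def net_savings(baseline, model_size, encoded_size):
    """Codelength saved by sending model + encoded data instead of the baseline."""
    return baseline - (model_size + encoded_size)

# Figures implied by the paragraph above (images in MB, labels in KB).
# The baselines are back-computed from the quoted totals and savings.
image_savings_mb = net_savings(baseline=9.9, model_size=1.1, encoded_size=1.8)
label_savings_kb = net_savings(baseline=28.0, model_size=5.0, encoded_size=13.0)

# A label drawn uniformly from 1000 categories costs log2(1000) bits.
bits_per_label = math.log2(1000)

print(round(image_savings_mb, 1), round(label_savings_kb, 1), round(bits_per_label, 2))
```

The same accounting rule applies in both cases; only the size of the budget differs, which is why the image model can be hundreds of times larger than the label model.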

The Unexpected Utility of Interior Models

The arguments laid out above suggest that it will be nearly impossible to achieve human-like intelligence with exterior modeling. The complexity of exterior models P(Y|X) is limited by the relative scarcity of labels (Y values) and their low data volume. In contrast, interior models P(X) can be enormously complex without overfitting. The raw data objects (X values), typically sentences or images, are available in immense quantities on the internet. Furthermore, these objects have very large data volume: a newspaper article can easily have kilobytes of text, and modern smartphones can take megapixel images.

OpenAI's GPT-3 model is a good illustration of this principle. The largest version of GPT-3 contains 175 billion parameters. The model does not show signs of overfitting: the paper shows that test-set performance on a variety of tasks improves as the parameter count increases. It was only possible for OpenAI to build such an incredibly complex model because they trained it against a massive corpus of 575 gigabytes of text. This corpus is vastly larger than the annotated datasets that were used in previous decades of NLP research, such as the Penn Treebank.

Before the advent of research like ELMo, ULMFit, and GPT-3, it was not clear that there was much value in building powerful interior models - what would such systems be useful for, other than generating eerily realistic text documents? Why would it be useful to have an extremely accurate model of the probability of a sentence? Most researchers were more interested in building systems that addressed specific practical concerns like analyzing the sentiment of a document, or classifying it into categories ("Finance", "Health", "Sports", etc).

Now that highly sophisticated interior models like GPT-3 are available, it is obvious in the context of NLP that they are extremely useful for applied work. The rigorous, large-data training process of the interior model forces it to learn a large suite of relevant concepts (feature weights, word embeddings, etc). These highly refined concepts are useful not only for the interior model, but also for practical tasks in the domain. There is a variety of ways to reuse the concepts for applications. A common strategy is "fine-tuning". Here, the applied researcher starts with an interior model like GPT-3 or BERT. Then she runs a training algorithm which learns to predict some exterior label from the activations of the internal layers of the neural network. The internal layers represent the abstract meaning of the text, which is much more useful for the exterior prediction than the raw words. Most of the work of transforming the words into abstract concepts has already been done by the interior model, so the tacked-on exterior model can be simple enough to learn from a small number of labels.

Another example of how to reuse concepts from interior models comes from the "ELMo" paper by Peters et al (ELMo stands for Embeddings from Language Models). In this research, the authors train an interior model that works by computing a multidimensional vector (an "embedding") for each word. The model is trained against a large corpus of raw text. The resulting embeddings have strong semantic properties: words with similar meanings are mapped to nearby positions in the embedding space. A researcher who wants to reuse the embeddings for a downstream task can use them as inputs to a task-specific neural network or other learning algorithm. This kind of approach is both popular and effective.
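The reuse pattern described in the last two paragraphs can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the method of any of the cited papers: the three-dimensional vectors in EMBEDDINGS are invented by hand to stand in for embeddings learned by a real interior model, the nearest-centroid classifier is a deliberately simple stand-in for the task-specific exterior model, and the function names (embed_document, train_centroids, predict) are hypothetical.

```python
import math

# Hypothetical hand-made embeddings; a real interior model would learn these
# from a large corpus of raw text.
EMBEDDINGS = {
    "stocks":  [0.90, 0.10, 0.00],
    "bank":    [0.80, 0.20, 0.10],
    "market":  [0.85, 0.15, 0.05],
    "goal":    [0.10, 0.90, 0.00],
    "match":   [0.20, 0.80, 0.10],
    "striker": [0.05, 0.95, 0.10],
}

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def embed_document(words):
    """Frozen feature extractor: average the embeddings of the known words.

    Assumes at least one word of the document is in EMBEDDINGS.
    """
    vectors = [EMBEDDINGS[w] for w in words if w in EMBEDDINGS]
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def train_centroids(labelled_docs):
    """Tiny exterior model: one mean feature vector per label."""
    by_label = {}
    for words, label in labelled_docs:
        by_label.setdefault(label, []).append(embed_document(words))
    return {
        label: [sum(col) / len(vecs) for col in zip(*vecs)]
        for label, vecs in by_label.items()
    }

def predict(centroids, words):
    """Return the label whose centroid is most similar to the document."""
    features = embed_document(words)
    return max(centroids, key=lambda label: cosine(centroids[label], features))

# Only two labelled documents are needed, because the embeddings do the heavy lifting.
labelled = [(["stocks", "bank"], "Finance"), (["goal", "match"], "Sports")]
centroids = train_centroids(labelled)
print(predict(centroids, ["striker"]))
print(predict(centroids, ["market"]))
```

Neither "striker" nor "market" appears in the labelled examples. The classifier handles them only because the (assumed) embeddings already place them near related words, which is the sense in which the exterior model can stay simple.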

Thus, interior models can be enormously complex, and that complexity can be reused for practical tasks. This unexpected utility of interior models leads to the central idea of this essay. Quite simply, the idea is that the reusability phenomenon will not be limited to NLP, but will reappear in nearly all areas of scientific inquiry. This idea can be called the Reusability Hypothesis:

Concepts learned through systematic, rigorous interior modeling of a dataset will be useful for applied tasks related to that dataset.

If true, the Reusability Hypothesis is not just an interesting fact among other facts. It has enormous implications for machine learning research, and for science in general. But keen observers of the history of science might not be quite as surprised by this realization as ML researchers should be. Humanity already discovered these ideas once, in a slightly different guise. The complementary concepts of interiority and reusability are fundamental to the success of classic science, but are rarely discussed, because they seem so obvious.

Interiority and Reusability in Empirical Science

Scientific research exhibits a kind of circularity. Suppose a bright young cosmologist notices that a new suite of observations of black hole radiation cannot be accounted for by the current theory. Growing obsessed with this anomaly, she locks herself in her office, scribbling out page after page of equations and calculations, and also writing reams of computer code to simulate black holes using her new ideas. Finally she is able to derive a few short equations that perfectly explain the anomalous observations. Confident that her new theory is correct, she writes up her results and sends them to a journal.

Observe that the cosmologist's work started and ended in the same place: measurements of black hole radiation. She is not required to explain how her theory might be relevant to some other scientific domain, or how it can be used to assist with a particular engineering project. This dialogue attempts to drive home the point by embedding it in a social interaction:

A: Eureka! I have finally discovered a way to update modern cosmological theory and general relativity to properly account for the new observations of black hole radiation!

E: That's nice. But I want to build a faster-than-light engine so we can visit other star systems. How can this discovery help me with FTL drives?

A: Well, I don't know. Maybe it can't. That's not my job.

E: Okay, then can it help us build better photovoltaic cells to power our space infrastructure?

A: No.

E: Can we use it to help build better radiation shielding material to protect humans onboard spaceships?

A: No.

E: Well then what's it good for?

A (frustrated): That's not the point. It's a scientific theory. I don't know what it's good for. It tells us something about how the universe works.

E (dismissive): It seems like you need to go back to the drawing board and come up with a new theory that can help with building something useful.

This kind of discussion would never actually take place between real scientists and engineers. The space engineer might not be personally interested in new astronomical theories, but he would respect the cosmologist's discovery as a scientific achievement. He certainly would not dare to suggest that the new theory should be revised or discarded because it doesn't provide any assistance to his spaceship project. But how did engineers - and the rest of society - learn to respect such "pure" scientific insights, if they are not obviously useful for practical applications?

Well, of course, every educated person knows that empirical science is decisively useful for developing technological applications. This is true in spite of the fact that scientific theories are evaluated on the basis of their descriptive accuracy, not on the basis of their practical utility. Everyone just understands that the scientific theory with the best descriptive accuracy will also have the greatest practical value. In other words, some version of the Reusability Hypothesis is patently true for classical science.

While this idea may be obvious today, it was certainly not obvious for most of human history. No one who lived before the time of Galileo could have predicted that his eccentric quest, which started with measurements of inclined planes and telescopic observations of celestial bodies, would lead eventually to such spectacular technological devices as the personal computer or the atomic bomb. Just as the Reusability Hypothesis in relation to classical science was not obvious before Galileo, so the same concept in relation to machine learning is not obvious today. Consider the following conversation, a mirror of the one above:

A: Eureka! I have finally discovered how to properly model the effect of lighting changes due to cloud cover in the Waymo Phoenix Image Database. My new model improves the overall log-likelihood by more than 3%!

E: That's nice. But I want to build self-driving cars. Can this new model be used to help with that?

A: Well, I don't know. I haven't thought about that part.

E: What do you mean, you don't know? What's the point of your research if not to help build self-driving cars?

A: I'm a scientist! The point of my research is to understand the physical world!

E: But all you are doing is crunching numbers! If your theory can't help develop self-driving cars, how do we know it's correct?

A: In this work, there is no such thing as definitively correct or incorrect. But we absolutely know that it is closer to the truth, because otherwise it would be impossible to achieve an improvement in the log-likelihood.

E: Look, if you want to be a purist and wander around with your head in the clouds, that's fine with me. Just don't go around talking about how you've discovered something important to the real world.

A: Scientific theories are important to the real world. Decisively so.

E: But you just said you didn't know how your theory can be used to help design vision systems for autonomous cars.

A: I don't know right now, but history shows that scientific theories developed in the lab can be reused for real-world engineering projects. And now that you mention it, I bet my new lighting model will be very helpful for improving object recognition accuracy.

E: Pardon my skepticism...

This kind of debate could very plausibly occur at a modern ML conference. While ML researchers do build interior models, these models are not recognized or respected as intrinsically worthwhile; they are valued only to the extent that they can help improve performance on various applied tasks. Even in the recent GPT-3 paper, whose central contribution was the development of a mind-bogglingly complex and powerful interior model, the performance of the interior model was not extensively evaluated in comparison to other approaches (a single paragraph mentions that the system achieves a new SOTA result on the Penn Treebank). Instead, the authors present an array of data showing that GPT-3 can be used to directly solve or assist with a large collection of tasks such as question answering, machine translation, and common-sense reasoning.

Following the example of empirical science, this evaluation mindset should be reversed. The performance of interior models, as interior models, should become the primary focus of evaluation. The evaluation of the model's performance impact on applied tasks should be relegated to a secondary position. Furthermore, the research community should recognize the value of small, incremental improvements to interior modeling of important domains, because such improvements will benefit many practical applications in that domain.

Toolbox Mindset of Unsupervised Learning Research

At this juncture, many readers may agree with the essay, but consider it to be merely an overlong rehashing of the arguments for semi-supervised learning, a standard methodology that has been used by ML researchers for years. In this methodology, the researcher first applies an unsupervised learning algorithm, which performs clustering, dimensionality reduction, or feature extraction. In the second phase, the researcher applies the concepts learned in the first phase to solve some problem of interest, using standard techniques of exterior modeling. At first glance, this approach appears to align exactly with the proposal of this essay. It allows the researcher to exploit large unlabelled datasets to learn complex concepts, and then reuse those concepts to build practical applications. Semi-supervised learning is certainly closer to the idea of this essay than plain exterior modeling. But it still contains serious shortcomings, which are caused by the toolbox mindset of unsupervised learning.

Consider a simplified narrative describing an applied researcher who follows the semi-supervised approach. She has been employed by a vineyard to develop an ML system that will allow the company to determine ahead of time if a particular vintage will be popular. She has a large number of data points representing the chemical properties of different vintages, but a relatively small number of samples which include the actual human appreciation for the vintage, which is based on a taste test. She theorizes that the wine data points can be usefully grouped into clusters, and so she embarks on the following strategy. First she uses the unlabelled samples to create a set of clusters, using a variety of algorithms such as K-Means, DBSCAN, Mean Shift, and agglomerative hierarchical clustering. She then uses the labelled samples to train a Support Vector Machine to predict the taste score, using the cluster coefficients as input to the machine. She measures the end-to-end accuracy for each clustering technique, and keeps the one that produces the best result.
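The wine researcher's two-phase procedure can be sketched as follows. This is a minimal illustration under loud assumptions: the data are synthetic two-dimensional points rather than real chemical measurements, the "variety of algorithms" is replaced by plain k-means run with different numbers of clusters, and the Support Vector Machine is replaced by a majority vote within each cluster. All function names are hypothetical.

```python
import random

def sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def mean(points):
    return [sum(col) / len(points) for col in zip(*points)]

def nearest(p, centres):
    """Index of the centre closest to point p."""
    return min(range(len(centres)), key=lambda i: sq_dist(p, centres[i]))

def kmeans(points, k, iters=20, seed=0):
    """Phase 1: plain k-means on the unlabelled points; returns k centres."""
    centres = random.Random(seed).sample(points, k)
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            groups[nearest(p, centres)].append(p)
        # An empty cluster keeps its previous centre.
        centres = [mean(g) if g else c for g, c in zip(groups, centres)]
    return centres

def fit_cluster_labels(centres, labelled):
    """Phase 2: majority taste label among the labelled samples in each cluster."""
    votes = {}
    for x, y in labelled:
        votes.setdefault(nearest(x, centres), []).append(y)
    # Ties are broken in favour of the smaller label.
    return {c: max(sorted(set(ys)), key=ys.count) for c, ys in votes.items()}

def accuracy(centres, cluster_labels, held_out, default=0):
    """Fraction of held-out samples whose cluster label matches the true label."""
    hits = sum(cluster_labels.get(nearest(x, centres), default) == y
               for x, y in held_out)
    return hits / len(held_out)

# Synthetic stand-in for the wine data: two measurements per vintage.
rng = random.Random(42)

def sample(centre, n):
    return [[rng.gauss(centre[0], 1.0), rng.gauss(centre[1], 1.0)] for _ in range(n)]

unlabelled = sample((2.0, 2.0), 100) + sample((8.0, 8.0), 100)
train = [(x, 0) for x in sample((2.0, 2.0), 3)] + [(x, 1) for x in sample((8.0, 8.0), 3)]
held_out = [(x, 0) for x in sample((2.0, 2.0), 3)] + [(x, 1) for x in sample((8.0, 8.0), 3)]

# Try each candidate tool and keep whichever gives the best end-to-end result.
results = {}
for k in (1, 2, 3, 4):
    centres = kmeans(unlabelled, k)
    results[k] = accuracy(centres, fit_cluster_labels(centres, train), held_out)

best_k = max(results, key=results.get)
print(results, best_k)
```

The only criterion for choosing among the candidates in this sketch is the downstream accuracy; nothing in the first phase says whether one clustering describes the data better than another.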

This kind of strategy is quite common, and unsupervised learning researchers want to help with this style of applied work as much as possible. Their research goal is to provide developers like the wine researcher with the best possible toolbox of algorithms from which to select in the first phase of the process. The toolbox should provide the maximum possible degree of flexibility, diversity, and convenience. It should contain tools that can address every scenario: missing data, mixed categorical and numerical data, or very high dimensional data. In addition to clustering, there must be tools that perform dimensionality reduction, abnormality detection, density estimation, and so on. While the tools must be as numerous and flexible as possible, they should not be customized to specific datasets. A tool might be designed to work well with images in general, but it should not be designed for microscopy images of red blood cells. By equipping the wine researcher with an extremely large and flexible toolbox, we maximize the chances that she will find some tool that produces a good result.

At first glance, the toolbox mindset seems eminently workable. The mindset has produced many useful ideas that have been applied productively in a diverse array of situations. But deeper analysis reveals several unsatisfying characteristics. It is helpful to begin the discussion of these limitations with an odd dialogue, this time between a physicist and an engineer in an alternate Earth timeline where the philosophy of empirical science has not yet been developed.

E: Good day, Professor Sinclair! My name is Edmund and I have been commissioned by the king to build a bridge over the Blue River near the capital.

S: Welcome to the academy, Master Edmund, it is a pleasure to meet you. Heavens, a bridge over the river at that spot will need to be incredibly long!

E: Yes, with the grace of God, it will be the longest bridge ever constructed. And due to the amount of traffic that is expected to cross, it will need to be extremely strong as well. That is why I have come to see you: I require scientific advice regarding the physical theory with which to model the bridge and the vehicles that it supports.

S: I see. Well, you have come to the right place. I can offer you quite a number of possibilities. To begin, there is the Jamesian theory of kinematics. It is easy to understand and compute, but it does not always work for large weights.

E: The heaviest wagons weigh about three tonnes, is that too large?

S: I will do a calculation and let you know. Next we have the Zhang theory of vector gravity. This requires you to compute some difficult integrals, but if you can overcome this hurdle, it works quite well.

E: I have attempted to use the Zhang theory in the past, but I found that for real engineering scenarios, the integrals become too complex.

S: I see. Another option is Karlov's harmonic theory, which models the physics based on the assumption that all objects are connected with invisible springs of varying strength. This approach does not have a good theoretical foundation, but it often works well in practice. Of course there are many other theories, but the ones I mentioned are the most popular.

E: A colleague of mine, who built the bridge across the Reizer river, suggested that I use Blackfield's theory of momentum propagation.

S: Aha! I see you are up-to-date with the newest developments in physics! Yes, the momentum propagation concept is currently very popular, but personally I think it is just an academic fad. Of course, I'm happy to explain it to you if you wish.

E: Professor, I know this is an odd question... but is there some way to determine which of these theories is the most appropriate, before we actually start building? I know for most projects, we must simply try several candidate theories, and employ the one that gives the best practical results. But it would save an enormous amount of time and money if we could avoid having to build multiple bridges.

S: The only advice I can give you is to study the mathematical derivation of each theory, and analyze how similar the concepts postulated by the theory are to the physical objects in your situation. For example, does it seem valid to suppose that the forces acting on the bridge have the spring property? If so, then Karlov's theory should work well. Or does it seem more reasonable to think of the components of the bridge as interlocked cells that are continually exchanging momentum with their neighbors? In that case, the Blackfield theory should work.

E: I see. I will have to study the theories a bit more. Thank you for your time, Professor.

Obviously, this conversation would never occur in our reality, but it is instructive to ask why. Sinclair's notion of physics as a cluttered toolbox of candidate theories that may or may not be useful in any particular scenario seems quite ridiculous to us. A "real" physicist would never have any doubts about the right theory to apply in this case. The Newtonian theory of mechanics is absolutely adequate for building bridges, or any other human-scale engineering problem. Perhaps in complex situations where an exact solution is intractable, the engineer might need to make approximations, or resort to numerical calculations. But there is no doubt that a single, specific theory is the correct starting point.

If we chuckle at the toolbox mindset in the context of physical theories, why does it seem so plausible in the context of machine learning? The offer that Sinclair makes to Edmund is an exact mirror of the offer that unsupervised learning researchers make to applied ML engineers. There is a toolbox of methods - instead of momentum propagation and vector gravity, there is K-Means and DBSCAN and many others. To succeed, the engineer must select some candidates from the toolbox and experiment with each, to find the one that is most suitable for her application. This analogy attempts to pump an intuitive sense of dissatisfaction with the toolbox approach; the following points make the criticism more concrete.

The first serious drawback of the toolbox mindset is that there is no way to evaluate the quality of rival methods independently of the downstream application. In the terms of Karl Popper, an unsupervised learning algorithm is not falsifiable. The fact that an algorithm fails to perform well on one specific dataset does not imply that it will not do well on some other dataset. The wine researcher might find that DBSCAN is better than Mean Shift for her application, but this conclusion cannot be generalized to other tasks. Because of this, the toolbox is always growing, since researchers are constantly adding new methods but never removing old ones. This overproliferation of tools has a serious practical consequence: every applied researcher building a system to perform a new task must perform a laborious evaluation of candidate methods in order to be confident that she has found the best one for her application.

The second shortcoming relates to the (in)ability of new theoretical ideas to illuminate reality. Unlike a physical theory, an unsupervised learning algorithm makes no concrete assertions about the empirical content of the data to which it applies. Therefore the success or failure of the algorithm in the final evaluation yields little insight about the underlying structure of the data. The wine researcher decided to try some clustering techniques on the basis of an intuitive supposition that the wine data could be grouped into meaningful clusters. If her supposition were false, the mistake would not be revealed by the algorithms, which will run happily on any input and produce some kind of output. Similarly, if she finds that DBSCAN works much better than K-Means for her system, this discovery will not lead to any useful conclusion about the chemical properties of wine. The vagueness of this situation contrasts sharply with the intellectual clarity of physics, where for example Bohr's dramatic success at predicting the Lyman series of the hydrogen atom implied something profound about physical reality.

A third limitation of mainstream unsupervised learning research is that it does not produce the kind of systematic progress that we observe in empirical science. In physics, there are thousands of scientists across the world who are constantly working to revise, update or confirm various aspects of modern physical theory. For example, the discovery of the Higgs boson in 2012 was hailed as an important step in the elaboration of the Standard Model, which describes the activity of subatomic particles. Most discoveries in physics are individually small, but when aggregated together, they comprise a comprehensive theory of astonishing sophistication and power. In the field of unsupervised learning, there is a constant stream of new ideas. But it is very difficult to tell when a new idea represents a concrete step forward, so it is hard to determine how much progress the field is making.

Interior Modeling vs Unsupervised Learning

Some readers may be confused at this point about what direction the essay is actually pointing. On the one hand, it argues that human-like intelligence can only be built by learning highly complex models from raw, unlabelled data sets. At the same time, it claims that the toolbox mindset of unsupervised learning is philosophically and practically inadequate for this purpose.

This tension can be resolved by clarifying the distinction between unsupervised learning and interior modeling. The former is a general research area; the latter is a specific subset thereof. Interior modeling means to build models P(X) of raw data objects and optimize them by maximizing the log-likelihood of the data given the model. Interior modeling, or a close variant, is what the NLP papers highlighted above are actually doing (OpenAI's GPT systems are pure language models; ELMo combines two language models, one forward and one backward; BERT is trained on a closely related task, predicting masked-out words from the context on both sides). This type of research has decisive methodological advantages over general unsupervised learning.

The most important fact about interior modeling is that it is intrinsically difficult. This can be seen by its relation to the problem of data compression. Claude Shannon famously proved that the problem of compressing a dataset to the smallest possible size is equivalent to the problem of finding a statistical model that assigns the data the highest possible probability. Therefore, when a researcher proposes an interior model of a dataset, that model can be hooked up to an encoding algorithm that will compress the dataset (a programmer named Fabrice Bellard has done this for GPT-2).
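The link between probability and compressed size can be made concrete with a small calculation. The sketch below assumes an ideal entropy coder, which spends about -log2 P(x) bits on a symbol that the model assigns probability P(x); the total code length is then just the negative log-likelihood measured in bits. The character-level model and the function names are illustrative assumptions, far simpler than any real language model.

```python
import math
from collections import Counter

def fit_unigram(text):
    """Maximum-likelihood character model: P(ch) = count(ch) / len(text)."""
    counts = Counter(text)
    return {ch: n / len(text) for ch, n in counts.items()}

def log2_likelihood(model, text):
    """Log-likelihood of the text under the model, in base 2."""
    return sum(math.log2(model[ch]) for ch in text)

def ideal_code_length_bits(model, text):
    """Bits an ideal entropy coder would need: the negative log2-likelihood."""
    return -log2_likelihood(model, text)

data = "aaaaaaab"

# A model with no knowledge of the data: both symbols equally likely.
uniform = {"a": 0.5, "b": 0.5}
# A model that has learned that "a" is much more common than "b".
fitted = fit_unigram(data)

uniform_bits = ideal_code_length_bits(uniform, data)  # 8 symbols at 1 bit each
fitted_bits = ideal_code_length_bits(fitted, data)
print(uniform_bits, round(fitted_bits, 3))
```

The fitted model assigns the data a higher probability and therefore a shorter code. Note that this sketch fits the model on the same string it encodes and ignores the cost of describing the model itself.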

There is a fundamental "No Free Lunch" theorem in data compression which states that no algorithm can compress N-bit strings to less than N bits, when averaged over all inputs. Since interior modeling is broadly equivalent to data compression, this implies that no interior model will perform well on every dataset. However, compression can be achieved on particular datasets, such as English text or tourist photos, by exploiting regularities and structure in the data. To achieve compression, a model must reassign probability away from unlikely outcomes and toward likely ones. This reassignment must also be mostly correct: if the model is poor at assessing the probability of an outcome, it will end up inflating the data rather than compressing it. The requirement that an interior model must face the possibility of failure by inflating the data can be viewed as a generalization of Karl Popper's requirement that a scientific theory must be falsifiable. Unlike classical scientific theories, interior models cannot be strictly judged as true or false, but they can be rigorously compared to determine which is closest to the truth. And this evaluation can be done independently of any downstream task.
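The two claims in this paragraph can be written out as a short calculation. The notation is introduced here only for illustration: P denotes the true distribution that generates the data, Q the distribution assumed by the interior model, and an ideal code is assumed that spends about -log2 Q(x) bits on outcome x.

```latex
% Counting argument: there are fewer short strings than N-bit strings.
\#\{\text{binary strings shorter than } N \text{ bits}\}
  = \sum_{k=0}^{N-1} 2^{k} = 2^{N} - 1 < 2^{N}

% Expected code length when the data come from P but are coded with model Q.
\mathbb{E}_{x \sim P}\bigl[-\log_2 Q(x)\bigr]
  = H(P) + D_{\mathrm{KL}}(P \,\|\, Q) \;\ge\; H(P)
```

The first line shows that a lossless encoder cannot map every N-bit string to a shorter one, since there are not enough shorter strings to go around. The second line shows the price of a poor model: the expected code length exceeds the entropy H(P) by the divergence between P and Q, and the data are inflated whenever this sum is larger than the raw size of the data.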

This aspect of intrinsic difficulty shows why the concepts produced by high-quality interior models are valuable and reusable. An interior model cannot be successful unless the concepts it depends on (word embeddings, neural network layers, etc) are of high quality. Since the interior modeling task is so difficult, the fact that some computational structures are helpful for it suggests that they are probably useful for other tasks as well. A similar claim cannot be made about other unsupervised learning methods, since they typically do not attempt to solve intrinsically difficult problems. For example, in the domain of clustering, most algorithms will happily run on any input and produce some kind of output, even if a cluster-based description of the data isn't actually very accurate. Since the output of most unsupervised learning algorithms has no strong guarantee of quality, it is not surprising that the concepts they produce (clusters, low-dimensional representations, etc) are not always reusable.

Thomas Kuhn famously asked: "does a field make progress because it is a science, or is it a science because it makes progress?" While this essay has been critical of supervised learning in general, one strong argument in its favor is that it exhibits clear progress. For most supervised learning tasks, there is a leaderboard that records a continual stream of updates to the state-of-the-art (SOTA). Because unsupervised learning in general has no commonly agreed measure by which to judge the quality of a method, there is no notion of SOTA. Instead, researchers are free to propose new quantities of interest and associated algorithms, and if a new technique seems to produce qualitatively useful results, then it is considered a valid research contribution. This undisciplined situation may produce a lot of papers, but it's not clear if it produces a lot of progress. In contrast, a scientific community dedicated to interior modeling for a given domain would be much more disciplined, because any new research contribution could be evaluated unambiguously, making it easy to identify the SOTA.

The connection between interior modeling and data compression creates some other methodological advantages. An evaluation procedure based on data compression is supremely rigorous: if there are any bugs in the modeling software, these problems will be revealed immediately when the decoded result fails to match the original data. And, as an important refinement based on MDL, the encoding cost of the model itself can be added to the score of a newly proposed method. This style of evaluation has been used in a compression contest called the Hutter Prize. If the arguments of this essay are correct, it is likely that in the future, many fields of science will employ evaluation systems that differ from the Hutter Prize only in terms of the underlying dataset.
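An evaluation harness in this spirit might look like the sketch below. It is a hedged illustration, not the rules of any actual contest: Python's zlib stands in for "interior model plus entropy coder", the decoder sources are short hypothetical placeholders rather than real programs, and description_length is an invented name. The score is the size of the decoder plus the size of the encoded data, and a submission is rejected outright if decoding does not reproduce the original bytes.

```python
import zlib

def description_length(data, decoder_source, encode, decode):
    """Two-part score in bytes: size of the decoder plus size of the encoded data."""
    encoded = encode(data)
    # Rigour check: the decoded output must match the original exactly.
    if decode(encoded) != data:
        raise ValueError("round trip failed: the codec does not reproduce the data")
    return len(decoder_source) + len(encoded)

# Toy dataset with obvious regularities.
data = b"the cat sat on the mat. " * 200

# Hypothetical decoder sources, used only to charge each method for its own size.
identity_decoder = b"def decode(b): return b"
zlib_decoder = b"import zlib\ndef decode(b): return zlib.decompress(b)"

# Baseline that stores the data unchanged.
identity_score = description_length(
    data, identity_decoder, lambda d: d, lambda b: b)

# Candidate that exploits the repetition in the data.
zlib_score = description_length(
    data, zlib_decoder, lambda d: zlib.compress(d, 9), zlib.decompress)

def buggy_decode(encoded):
    """A decoder with a deliberate bug: it drops the final byte."""
    return zlib.decompress(encoded)[:-1]

try:
    description_length(data, zlib_decoder, zlib.compress, buggy_decode)
    bug_detected = False
except ValueError:
    bug_detected = True

print(identity_score, zlib_score, bug_detected)
```

Charging each method for the size of its decoder prevents a submission from hiding the dataset inside the model, and the round-trip check means that a bug cannot silently improve the score.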

New Horizons

In summary, ML research faces a subtle dilemma. In order to navigate a complex world, intelligent agents must learn complex models. A complex model must be trained against an enormous dataset, or it will overfit the data and fail to generalize. Therefore exterior modeling cannot be sufficient to produce intelligent behavior, because it is impossible to annotate sufficiently large datasets. In interior modeling, it is possible to train enormously complex models, but this appears at first glance to be a purely academic exercise: the interior models seem to have little direct utility. The central claim of this essay, the Reusability Hypothesis, states that this initial assessment is dead wrong. Instead, the refined concepts and understanding that are developed in interior modeling research will have decisive practical utility.

If the proposals of this essay are adopted, ML research in the future will be organized quite differently. Most importantly, in the new world there will be a large number of researchers whose only mandate is to systematically develop, enhance and evaluate interior models of a wide variety of phenomena. It must be respected and praiseworthy for a scientist to devote his entire career to a niche aspect of these models. With large numbers of workers across the world, each making small but concrete contributions, the aggregate quality of the models will improve rapidly (Kuhn called this happy situation normal science). At the same time, but in different departments or organizations, another group of professionals will be hard at work studying how to apply the interior concepts to problems of practical interest. In other words, the same division of labor that currently exists between engineers on the one hand and physicists (or chemists, geologists, etc) on the other hand, will also appear in the future world in ML-related domains such as NLP, computer vision, and so on.

It is worth mentioning two specific areas where this methodology should produce strong results in the medium term. The first domain, already hinted at, relates to images produced by autonomous vehicles. This vast stream of data constitutes an ideal starting point for an interior modeling project. While there is no theoretical limit on the extent of variation expressed in such images, the vast majority of the patterns will relate to everyday objects: pedestrians, traffic lights, other cars, houses, and so on. If the Reusability Hypothesis is true, the same concepts that are useful to describe the images will also be useful to help build practical perception and navigation systems for self-driving cars. In this context, the hypothesis sounds quite natural: to describe the images well, the model must understand the visual appearance of cars, pedestrians, bicycles, buildings - exactly the same objects that are important for an autonomous car to detect and recognize.

The other tantalizing area is the broad domain of medical data analysis. Currently, the amount of raw data that is easily accessible to researchers in this area is increasing dramatically. However, there is still a relative paucity of exterior data related to questions of practical interest. For example, imagine one wished to predict a patient's likelihood of having a heart attack, based on an ultrasound video of his heart, a readout of his genome, his medical history, and other bioinformatic data. To attack this problem with direct exterior modeling, it would be necessary to recruit patients for a medical study and then monitor their health status for an extended period of time. This would create some number of binary labels indicating whether or not a patient experienced a heart attack. For reasons described above, any model developed on the basis of this dataset would need to be extremely simple. In contrast, an interior modeling approach could create a large dataset by asking hospital patients to submit to a full-body scan, including a heart ultrasound, genomic analysis, MRI and CAT scans, and so on. Researchers could then develop sophisticated interior models of this multimedia dataset. Insights drawn from these models could then be applied to the task of heart attack prediction, as well as many other health-related tasks.