Time for a writeup! Or something.

So I’ve written before about Logical Uncertainty in a very vague way. And a few weeks ago I wrote about a specific problem of Logical Uncertainty which was presented at the MIRI workshop. I’m gonna reference definitions and results from that post here, too, though I’ll redefine coherence:

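Definition 1 (Coherence). A distribution $\mathbb{P}$ over a set of sentences is coherent if:

- Normalisation: $\mathbb{P}(\top) = 1$

- Additivity: $\mathbb{P}(\phi) = \mathbb{P}(\phi \wedge \psi) + \mathbb{P}(\phi \wedge \neg\psi)$

- Non-negativity: $\mathbb{P}(\phi) \geq 0$

- Consistency: if $\phi \leftrightarrow \psi$ is provable, then $\mathbb{P}(\phi) = \mathbb{P}(\psi)$

The symbol $\top$ stands for a tautology (e.g. $\phi \vee \neg\phi$), and if $\bot$ stands for a contradiction (e.g. $\phi \wedge \neg\phi$) then normalisation and additivity (together with consistency) imply $\mathbb{P}(\bot) = 0$, and the whole definition implies $\mathbb{P}(\phi) \in [0, 1]$.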

TL;DR: A distribution is coherent if it’s an actual probability distribution and obeys the basic laws of probability (to perform inference you’d define $\mathbb{P}(\phi \mid \psi) = \mathbb{P}(\phi \wedge \psi) / \mathbb{P}(\psi)$).
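To make the TL;DR concrete, here’s a minimal Python sketch of the easy, finite case: two propositional atoms and a distribution over their truth assignments. The names `A`, `B`, `prob`, `cond` and the numbers are made up for illustration. Any distribution over truth assignments induces a coherent distribution over the sentences built from those atoms (consistency comes for free, since logically equivalent sentences are true in exactly the same worlds), and conditioning is just the ratio above. This is nothing like the first-order setting of Proposition 1, where there is no such finite table.

```python
import itertools

# Hypothetical toy setting: two atomic propositions, A and B, and a
# probability distribution over their four truth assignments ("worlds").
worlds = list(itertools.product([False, True], repeat=2))
# itertools.product yields (F, F), (F, T), (T, F), (T, T) in that order.
joint = dict(zip(worlds, [0.1, 0.2, 0.3, 0.4]))


def prob(sentence):
    """P(sentence): total mass of the worlds in which the sentence is true."""
    return sum(p for world, p in joint.items() if sentence(world))


def cond(phi, psi):
    """P(phi | psi) = P(phi and psi) / P(psi), assuming P(psi) > 0."""
    return prob(lambda w: phi(w) and psi(w)) / prob(psi)


def A(world):
    return world[0]


def B(world):
    return world[1]


# Normalisation: a tautology gets probability 1 (up to rounding).
print(prob(lambda w: A(w) or not A(w)))
# Additivity: P(A) equals P(A and B) + P(A and not B).
print(prob(A), prob(lambda w: A(w) and B(w)) + prob(lambda w: A(w) and not B(w)))
# Conditioning: P(A | B) = 0.4 / 0.6.
print(cond(A, B))
```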

Proposition 1. Any coherent distribution over the set of all first-order logical sentences is uncomputable.

Proof. In first-order logic, as the Completeness Theorem says, all true statements are provable, and soundness of the first-order logic axioms means all provable statements are true. Ergo, a coherent distribution over the set of all logical sentences is Gaifman, since all true sentences $\phi$ are provable and therefore must have $\mathbb{P}(\phi) = 1$, as per Normalisation and Consistency. Now suppose this distribution was computable. That would imply it’s also computably approximable: just take the function $f(\phi, n) = g(\phi)$, where $g$ is the computable function that outputs $\mathbb{P}(\phi)$ (a sequence that is constant in $n$ trivially converges to $\mathbb{P}(\phi)$). But then, this would mean that $\mathbb{P}$ is coherent, Gaifman, and computably approximable, and according to Theorem 1 of that post it would give probability $0$ to some true, provable sentences, violating Normalisation: contradiction. □

I think this would probably be doubly true for second-order logical sentences, but I haven’t thought about this enough to come up with a proof. Anyway, so much for that. Let’s define something else, then:

Definition 2 (Trivial equivalence). We define a relation $\equiv$ as the minimal equivalence relation satisfying the following for all sentences $\phi$, $\psi$, and $\chi$:

And such that, whenever $\phi \equiv \psi$:

Two sentences linked to each other by this relation are said to be trivially equivalent.

Paul Christiano proves that for any sentences $\phi$ and $\psi$ you can determine whether $\phi \equiv \psi$ in time polynomial in $n$, where $n$ is the total length of these sentences. That’s what makes this definition useful as opposed to fully general logical equivalence, which is computationally intractable (undecidable, in fact, for first-order sentences).
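Here’s a minimal sketch of how one might decide a restricted version of trivial equivalence: put both sentences into a normal form and compare. The rule set is my assumption for illustration (double negation, plus commutativity, associativity and idempotence of $\wedge$), not necessarily the list in Definition 2, and `norm`/`trivially_equivalent` are hypothetical names, not Paul Christiano’s algorithm.

```python
# Sentences as nested tuples: ("atom", name), ("not", s), ("and", s1, s2).
# ASSUMPTION: only these rules count as "trivial" here, for illustration:
# double negation, and commutativity, associativity and idempotence of "and".
# Definition 2's actual rule list may differ.


def norm(s):
    """Return a hashable normal form of s under the assumed rules."""
    kind = s[0]
    if kind == "atom":
        return s
    if kind == "not":
        inner = norm(s[1])
        if inner[0] == "not":  # double negation: not not x == x
            return inner[1]
        return ("not", inner)
    if kind == "and":
        parts = set()
        for child in (norm(s[1]), norm(s[2])):
            if child[0] == "and":  # associativity: flatten nested conjunctions
                parts |= child[1]
            else:
                parts.add(child)
        if len(parts) == 1:  # idempotence: x and x == x
            return next(iter(parts))
        return ("and", frozenset(parts))  # commutativity: an unordered set
    raise ValueError(f"unknown connective: {kind!r}")


def trivially_equivalent(s, t):
    return norm(s) == norm(t)


A, B, C = ("atom", "A"), ("atom", "B"), ("atom", "C")
print(trivially_equivalent(("and", A, ("and", B, C)), ("and", ("and", C, A), B)))
print(trivially_equivalent(("not", ("not", A)), A))
# Both are contradictions, hence logically equivalent, but not trivially:
print(trivially_equivalent(("and", A, ("not", A)), ("and", B, ("not", B))))
```

The last check is the point of the definition: both sentences are contradictions, but these cheap syntactic rules can’t see that, which is exactly why they stay cheap.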

And with that we have another definition:

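Definition 3 (Local Coherence). Given a finite set of sentences $S$, we say that a distribution $\mathbb{P}$ defined on that set is locally coherent if:

- Weak normalisation: for all sentences $\phi \in S$ that are axioms or have been proven to be true, $\mathbb{P}(\phi) = 1$

- Additivity: $\mathbb{P}(\phi) = \mathbb{P}(\phi \wedge \psi) + \mathbb{P}(\phi \wedge \neg\psi)$ whenever $\phi$, $\phi \wedge \psi$ and $\phi \wedge \neg\psi$ are all in $S$

- Non-negativity: $\mathbb{P}(\phi) \geq 0$ for all $\phi \in S$

- Weak consistency: if $\phi \equiv \psi$, then $\mathbb{P}(\phi) = \mathbb{P}(\psi)$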

This is clearly a strict weakening of the notion of coherence, and might be better to characterise realistic deductively limited logical uncertainty. Before we know that two sentences are logically equivalent, or that one implies the other, it may very well be the case that they have probabilities that aren’t coherent. However, if we could guarantee local coherence, i.e. that the things we actually know/have proven constrain our distribution, then that’d be ideal.
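As a minimal sketch of what checking local coherence could look like on a finite table, here’s a Python function that lists violations. Assumptions: sentences are nested tuples, additivity is only checked for triples $\phi$, $\phi \wedge \psi$, $\phi \wedge \neg\psi$ that are all in the table, and the trivial-equivalence test is passed in (the toy one here only strips leading double negations). All names are hypothetical. The example prior gives 0.5 to every sentence.

```python
from itertools import combinations

# Sentences are nested tuples: ("atom", name), ("not", s), ("and", s1, s2).


def local_coherence_violations(probs, proven, equivalent, tol=1e-9):
    """List the ways `probs` breaks local coherence on its finite domain.

    probs: dict mapping each sentence of the finite set S to its probability.
    proven: sentences of S that are axioms or have already been proven.
    equivalent: a trivial-equivalence test, assumed to be supplied.
    """
    problems = []
    for s in proven:  # weak normalisation
        if s in probs and abs(probs[s] - 1.0) > tol:
            problems.append(f"weak normalisation: P({s}) = {probs[s]}")
    for s, p in probs.items():  # non-negativity
        if p < -tol:
            problems.append(f"non-negativity: P({s}) = {p}")
    for s in probs:  # additivity, only where all three sentences are in S
        if s[0] != "and":
            continue
        phi, psi = s[1], s[2]
        split = ("and", phi, ("not", psi))
        if phi in probs and split in probs:
            if abs(probs[phi] - probs[s] - probs[split]) > tol:
                problems.append(f"additivity: P({phi}) != P({s}) + P({split})")
    for s, t in combinations(probs, 2):  # weak consistency
        if equivalent(s, t) and abs(probs[s] - probs[t]) > tol:
            problems.append(f"weak consistency: P({s}) != P({t})")
    return problems


def strip_double_negations(s):
    """A deliberately tiny stand-in for trivial equivalence (top level only)."""
    while s[0] == "not" and s[1][0] == "not":
        s = s[1][1]
    return s


def toy_equivalent(s, t):
    return strip_double_negations(s) == strip_double_negations(t)


A, B = ("atom", "A"), ("atom", "B")
taut = ("not", ("and", A, ("not", A)))  # treated as already proven
half_everywhere = {A: 0.5, ("and", A, B): 0.5, ("and", A, ("not", B)): 0.5,
                   ("not", ("not", A)): 0.5, taut: 0.5}
for problem in local_coherence_violations(half_everywhere, {taut}, toy_equivalent):
    print(problem)
```

It flags additivity ($0.5 \neq 0.5 + 0.5$) and weak normalisation for the proven tautology, while $A$ and $\neg\neg A$ agree, so weak consistency passes.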

Let’s talk about some more of the actual workshop.

On the first day we went over the basics: what MIRI’s project is, why we care about Logical Uncertainty, and what the interesting and/or open questions were. Specifically, Patrick LaVictoire was talking about a certain hierarchy of constructions:

Philosophical question → Infinite computing power → Computable but intractable models → P-time models → Actually usable algorithms

The question at hand is “How do we deal with uncertainty about the output of deterministic algorithms?” And of course, if you have infinite computing power, there’s not much of a question to begin with: you just know all your (at least first-order) theorems, the end. It only really becomes interesting/hard when we get to the non-omniscient, finite, deductively limited case.

Interesting questions, topics, and results about this are:

- Probabilistic reflection principles

- Polynomial-time approximate Bayesian updating via Kullback-Leibler divergence

- Benford’s test and irreducible patterns

- Asymptotic logical uncertainty

- Optimal predictors (complexity theory approach)

- The $\Pi_1$ problem

- Probability-based UDT

- Reflective oracles and logical uncertainty

- Integrating logical and physical uncertainty

- Comparing priors

- Relaxations of coherence

- Noninformative (incoherent) priors

1. Probabilistic reflection principles

In the Definability of Truth draft, a few interesting things are derived about probability distributions over logical sentences.

One of the things it’d be nice for any theory with a probability function over itself to have is a way of actually talking about this probability function. So, in addition to the actual distribution $\mathbb{P}$, our theory will have a function symbol $P$ that refers to this distribution.

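A desirable thing our distribution could have would be a sort of reflection principle:

$$\forall \phi \;\forall a, b \in \mathbb{Q}:\quad a \leq \mathbb{P}(\phi) \leq b \iff \mathbb{P}\left(a \leq P(\ulcorner \phi \urcorner) \leq b\right) = 1$$

But of course we can’t have this, for a Gödelian reason. Let’s define a sentence $G \iff P(\ulcorner G \urcorner) < 1$. Then:

$$\mathbb{P}(G) < 1 \iff \mathbb{P}\left(P(\ulcorner G \urcorner) < 1\right) = 1 \iff \mathbb{P}(G) = 1$$

So, yeah, no can do. However, maybe we could relax our reflection criterion:

$$\forall \phi \;\forall a, b \in \mathbb{Q}:\quad a < \mathbb{P}(\phi) < b \implies \mathbb{P}\left(a < P(\ulcorner \phi \urcorner) < b\right) = 1$$

$$\forall \phi \;\forall a, b \in \mathbb{Q}:\quad \mathbb{P}\left(a \leq P(\ulcorner \phi \urcorner) \leq b\right) > 0 \implies a \leq \mathbb{P}(\phi) \leq b$$

This basically says that the agent has large but not infinite precision about its own probability distribution. In other words, if the true probability of a formula is in some open interval $(a, b)$ then the agent will assign probability $1$ to that fact, while if the agent assigns probability greater than $0$ to the probability of some sentence being in some closed interval $[a, b]$ then that probability will in fact be there.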

Definition 4 (Reflective Consistency). We say a distribution is reflectively consistent if it satisfies the above relaxed reflective principles.

Proposition 2. Those two principles are logically equivalent.

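Proof. Assume the first criterion is true, and suppose $\mathbb{P}(\phi) < a$ or $\mathbb{P}(\phi) > b$. Applying the first criterion to whichever of the open intervals $(-1, a)$ or $(b, 2)$ contains $\mathbb{P}(\phi)$, either $\mathbb{P}(-1 < P(\ulcorner \phi \urcorner) < a) = 1$ or $\mathbb{P}(b < P(\ulcorner \phi \urcorner) < 2) = 1$, and in either case $\mathbb{P}(a \leq P(\ulcorner \phi \urcorner) \leq b) = 0$, which is just the contrapositive of the second criterion. A similar argument can be used to show that the converse is true, too. □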

Then they go on to prove that the reflective principle is in fact consistent, but I didn’t really follow the proof because it uses a lot of topology stuff and I’m really rusty on my topology, but I believe them.

Finally, they prove that even these reflective principles are too strong, in the sense that an agent cannot assign probability 1 to them, and they’d have to be weakened further to be workable.

Eh. Alright I guess. I’m personally usually not too fazed by this kind of limitation, because it sounds intuitively reasonable to me that I should not have infinite meta-trust in myself.

2. Polynomial-time approximate Bayesian updating via Kullback-Leibler divergence

So Bayesian inference is usually intractable. Why is that? Well:

In order to be able to do Bayesian updating in full generality, you need to have a joint distribution over the variables, and over $n$ boolean variables that’s $2^n$ entries on a table. Which is, if it’s not clear, bad.

But it also happens that the full conditional distribution $\mathbb{P}(\cdot \mid \psi)$ is exactly the distribution $\mathbb{Q}$ that minimises Kullback-Leibler divergence $D_{KL}(\mathbb{Q} \,\|\, \mathbb{P})$ (citation needed) from the original distribution $\mathbb{P}$ with the constraint $\mathbb{Q}(\psi) = 1$. Or, you know, so says Paul Christiano; I believe him, he’s good at maths, but I haven’t seen the proof myself so I’m not completely totally confident.
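For what it’s worth, here’s a short derivation that seems to settle the finite case, assuming a joint distribution $\mathbb{P}$ over finitely many worlds $w$ and $\mathbb{P}(\psi) > 0$. Any $\mathbb{Q}$ with $\mathbb{Q}(\psi) = 1$ puts no mass on worlds where $\psi$ is false, and for the remaining worlds $\mathbb{P}(w \mid \psi) = \mathbb{P}(w) / \mathbb{P}(\psi)$, so, using $\sum_{w \models \psi} \mathbb{Q}(w) = 1$:

$$
\begin{aligned}
D_{KL}(\mathbb{Q} \,\|\, \mathbb{P})
  &= \sum_{w \models \psi} \mathbb{Q}(w) \log \frac{\mathbb{Q}(w)}{\mathbb{P}(w)}
   = \sum_{w \models \psi} \mathbb{Q}(w) \log \frac{\mathbb{Q}(w)}{\mathbb{P}(w \mid \psi)\,\mathbb{P}(\psi)} \\
  &= D_{KL}\big(\mathbb{Q} \,\|\, \mathbb{P}(\cdot \mid \psi)\big) - \log \mathbb{P}(\psi)
\end{aligned}
$$

The last term doesn’t depend on $\mathbb{Q}$, and by Gibbs’ inequality the first term is non-negative and zero exactly when $\mathbb{Q} = \mathbb{P}(\cdot \mid \psi)$. So conditioning is the unique minimiser, with minimum value $-\log \mathbb{P}(\psi)$.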

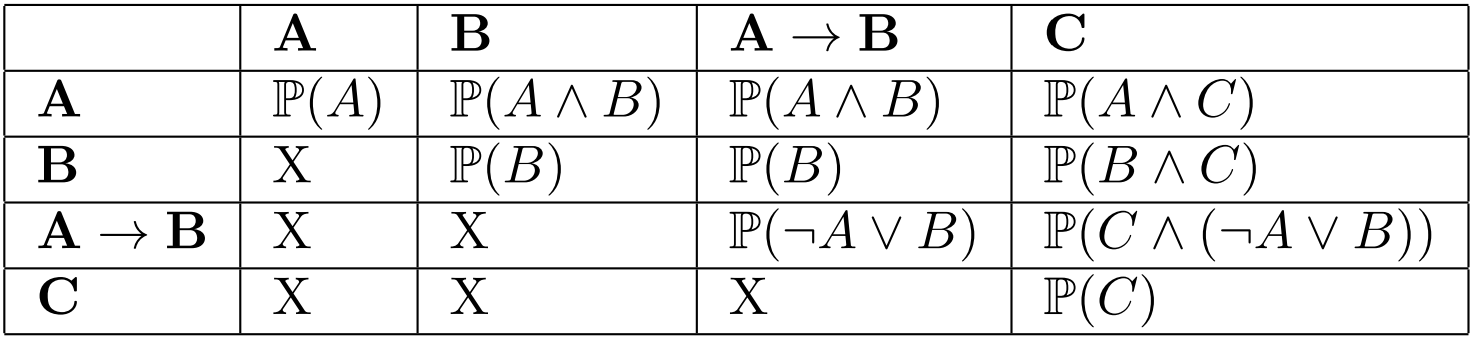

And so in his Non-Omniscience paper, he takes this idea and runs with it for approximate Bayesian inference: Suppose you take a set $S$ with $n$ sentences of interest, and instead of having a prior over the entire joint distribution, you only have one over pairwise conjunctions of these sentences (for instance, if $S = \{A, B, C\}$ then we take a prior distribution over $A$, $B$, $C$, $A \wedge B$, $A \wedge C$ and $B \wedge C$). Then, after observing that a given sentence $\phi \in S$ is true, you set its probability to 1, and then your posterior distribution $\mathbb{P}'$ is built by minimising KL divergence with a Gaussian distribution with covariance matrix given by your prior. This is nice because KL divergence with Gaussians can be calculated in time polynomial in the number of sentences, unlike fully general KL divergence calculation, which is exponential.
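Here’s a minimal Python sketch of the Gaussian step, under an assumption I’m making rather than reading off the paper: model the 0/1 truth values of the sentences as a multivariate Gaussian whose means are the prior probabilities and whose covariances are $\mathbb{P}(\phi_i \wedge \phi_j) - \mathbb{P}(\phi_i)\mathbb{P}(\phi_j)$, then update by ordinary Gaussian conditioning on “$\phi_k = 1$”. Only pairwise quantities are touched, so each update is quadratic in the number of sentences. The function name and example numbers are hypothetical.

```python
def gaussian_update(pair, k):
    """Condition a Gaussian approximation on "sentence k is true".

    pair[i][j] is the prior P(phi_i and phi_j); pair[i][i] is P(phi_i).
    ASSUMPTION: the 0/1 truth values are modelled as a multivariate Gaussian
    with mean P(phi_i) and covariance P(phi_i and phi_j) - P(phi_i) P(phi_j).
    Returns (posterior means, posterior second moments E[X_i X_j]).
    """
    n = len(pair)
    mean = [pair[i][i] for i in range(n)]
    cov = [[pair[i][j] - mean[i] * mean[j] for j in range(n)] for i in range(n)]
    var_k = cov[k][k]
    if var_k <= 0:
        raise ValueError("sentence k already has probability 0 or 1")
    gain = [cov[i][k] / var_k for i in range(n)]  # regression coefficients on X_k
    shift = 1.0 - mean[k]                         # observed value minus prior mean
    new_mean = [mean[i] + gain[i] * shift for i in range(n)]
    new_cov = [[cov[i][j] - gain[i] * cov[k][j] for j in range(n)] for i in range(n)]
    second = [[new_cov[i][j] + new_mean[i] * new_mean[j] for j in range(n)]
              for i in range(n)]
    return new_mean, second


# Hypothetical prior: P(A) = 0.5, P(B) = 0.6, P(A and B) = 0.4; observe A.
means, second = gaussian_update([[0.5, 0.4], [0.4, 0.6]], k=0)
print(means)         # posterior means for A and B
print(second[1][1])  # posterior second moment for B
```

In this two-sentence example the posterior mean of $B$ is 0.8, which matches the exact $\mathbb{P}(B \mid A) = 0.4 / 0.5$. But the second moment for $B$ comes out as 0.84 rather than 0.8, so reading the table back as probabilities breaks $\mathbb{P}(B \wedge B) = \mathbb{P}(B)$: an artifact of pretending 0/1 truth values are Gaussian.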

The technique is, however, not perfect, because in general it does not maintain the property that , which is very undesirable. Also, his paper does not in fact prove that the posterior distribution maintains local coherence even when the prior was locally coherent, which… yeah. Not terribly nice.

3. Benford’s test and irreducible patterns

4. Asymptotic logical uncertainty

So the Benford test thing, the basic intuition is that a prior distribution passes this test if it says that the probability that the digit of

equals

is

(even if

turns out not to be normal, we don’t have any reason a priori to suppose it’s biased any specific way).

There’s more about it here and in an as-yet unpublished paper (EDIT: published now), but I won’t go into it either because we didn’t work much on this (except for trying to understand it) and I personally don’t see much immediate use to it, other than it being an interesting algorithm/stepping stone.

5. Optimal predictors (complexity theory approach)

We didn’t discuss this much either, no one had much knowledge of what this was. It might be worth looking at.

6. The $\Pi_1$ problem

I’ve talked about the $\Pi_1$ problem before, so I won’t repeat myself. We didn’t make any progress on it.

7. Probability-based UDT

8. Reflective oracles and logical uncertainty

We didn’t really talk much about probability-based UDT or reflective oracles, but I think there’s some stuff about the former on MIRI’s website, and about the latter here.

9. Integrating logical and physical uncertainty

I’m not convinced logical and physical uncertainty are actually different. The problems we seem to be facing here – determining a prior, approximating Bayesian inference, fighting intractability – are all present in environmental uncertainty as well. And I’ve already noted that Cox’s Theorem does not actually require logical omniscience if you don’t enforce full coherence on your probabilities, which you needn’t, and one of our points here is exactly relaxing the coherence requirement since it’s not realistic or feasible anyway, so.

Benja had an interesting formalisation of an agent that used a Solomonoff-based prior and updated about logical stuff using environmental inputs, but it was mostly a toy problem, and a more complete group writeup of the workshop might have a mention of it. Or Benja might want to post about it on agentfoundations.

10. Comparing priors

11. Relaxations of coherence

12. Noninformative (incoherent) priors

Okay, now we got to the part I really wanted to talk about.

On the second day, we kinda divided into groups depending on what interested each of us. Some were trying to work on a relaxation of coherence via Category Theory and Topology, but I myself went to the camp of talking about reasonable desiderata in priors and how to relax notions of coherence, and also how to create coherence where before there was none. Which is kinda also related to relaxing coherence.

Well, kinda.

Anyhow, we made a little table with proposed priors – Hutter’s, Demski’s, Benja’s unpublished one, something implied by the Non-Omniscience paper, something implied by the Definability of Truth Draft, and the YOLO prior (0.5 prior probability to every sentence and its negation) – and the desiderata we wanted – coherence, local coherence, computability, computable approximability, computable -approximability, and “gives nonzero probability to PA.”

None of them fulfill all criteria, naturally. But then we got to thinking, well, so what? I mean, they can’t (if Conjecture 1 is true), and we’re dealing with limited coherence anyway, since that’s the interesting case.

So, let’s go elsewhere. Suppose we start out with an incoherent prior over pairwise conjunctions of a set of sentences, like in Paul Christiano’s KL example. Is there an algorithm to make it locally coherent?

Patrick LaVictoire came up with one.

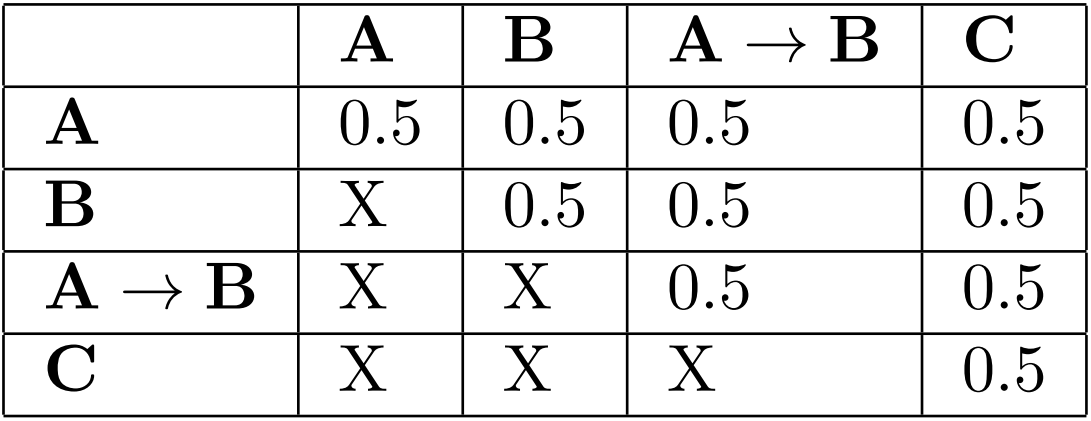

So we have the pairwise probabilities there. Note that there are two pairs of cells that are equivalent, so they ought to have the same probability, and that’s a first sanity check our algorithm must pass. Now let’s suppose we start with a prior probability of 0.5 to each of the above.

Clearly, this is incoherent, as you can check yourself by trying to apply the basic laws of probability to these numbers. So we want to turn that table into a coherent one. We use the following basic algorithm:

- Identify the basic propositions (

,

,

, …) and their trivial equivalences.

- Initialise a coherent joint distribution over them. Call this distribution

.

- Initialise update factors

and

.

- Initialise stopping factor

.

- Select a random element of the pairwise table, called here

.

- Set

.

- If

, go back to step 5. Otherwise, continue.

- Set

.

- If in the previous step

was set to

or

, set

.

- Set

.

- Update the rest of the

table to maintain coherence.

- If

(where the KL divergence is only taken where it’s defined) go back to step 5. Otherwise, output

.

The idea behind this algorithm is to start with our known-to-be locally incoherent distribution and a known-to-be locally coherent distribution, then modify each in the direction of the other.

Actually, that’s not quite true. Since we guarantee the coherent distribution remains coherent, it approaches the incoherent one from within the space of coherent distributions, so not necessarily in the exact direction of the incoherent distribution (which is not likewise constrained, since it’s not coherent to start with).

When they’re “close enough” (using KL divergence as our distance measure), we stop the algorithm, and we will have arrived at a distribution that is in fact coherent. Ideally one would set the update factors so that most of the modifications happen in the coherent distribution and the final distribution is as close to the original incoherent one as possible.

Step 11 is ill-defined, though. How do you “update the rest of the table to maintain coherence”?

Well, since the coherent distribution is a full joint distribution, its entries must sum to $1$. Therefore, we just make the necessary compensating changes in the affected rows. For example, if when running this algorithm it randomly picks the element

, then the elements of the table

which will be affected by that change are

,

,

,

,

, and

; a total of 6 entries out of the 8. So we subtract

from each of these entries, and we add

to each of the remaining two.
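Several details of the loop are left open (and some of the step formulas above didn’t survive), so here’s a minimal Python sketch of one reading of it, not the workshop’s actual code. Everything named here is hypothetical: `make_coherent`, `alpha` (how far the incoherent entry moves), `beta` (how far the coherent distribution moves), `eps`, `max_iters` and `seed`. I also assume the stopping test is a summed Bernoulli KL divergence over the table entries, and I swap the equal-split rule of step 11 for proportional rescaling of the worlds, which keeps every entry non-negative.

```python
import itertools
import math
import random


def make_coherent(table, n_atoms, alpha=0.1, beta=0.5, eps=1e-4,
                  max_iters=10_000, seed=0):
    """One reading of the workshop loop, under the assumptions in the lead-in.

    table: dict name -> (predicate on a world tuple, prior probability).
    Returns (joint, probs): a coherent joint distribution over all
    2**n_atoms worlds, and the (possibly modified) incoherent table.
    """
    if not (0 <= alpha < 1 and 0 < beta < 1):
        raise ValueError("need 0 <= alpha < 1 and 0 < beta < 1")
    rng = random.Random(seed)  # fixed seed, so reruns give the same output
    worlds = list(itertools.product([False, True], repeat=n_atoms))
    joint = {w: 1.0 / len(worlds) for w in worlds}  # uniform: a coherent start
    preds = {name: f for name, (f, _) in table.items()}
    probs = {name: p for name, (_, p) in table.items()}

    def q(name):  # probability the coherent joint gives a table entry
        return sum(m for w, m in joint.items() if preds[name](w))

    def divergence():  # summed Bernoulli KL, skipping undefined terms
        total = 0.0
        for name, p in probs.items():
            qn = q(name)
            for a, b in ((p, qn), (1 - p, 1 - qn)):
                if a > 0 and b > 0:
                    total += a * math.log(a / b)
        return total

    names = list(table)
    for _ in range(max_iters):
        if divergence() <= eps:
            break
        name = rng.choice(names)
        qn = q(name)
        if qn <= 0.0 or qn >= 1.0:  # tautology/contradiction: nothing to move
            continue
        delta = probs[name] - qn
        probs[name] -= alpha * delta  # incoherent entry moves toward qn
        target = qn + beta * delta    # coherent value moves toward the entry
        # Swapped-in rule: rescale worlds proportionally instead of splitting
        # the change equally; the total stays 1 and no world goes negative.
        up, down = target / qn, (1 - target) / (1 - qn)
        for w in worlds:
            joint[w] *= up if preds[name](w) else down
    return joint, probs


# Hypothetical incoherent table (not the one in the image above): every entry
# gets 0.5, which breaks additivity for A, A and B, A and not B.
table = {
    "A": (lambda w: w[0], 0.5),
    "B": (lambda w: w[1], 0.5),
    "C": (lambda w: w[2], 0.5),
    "A and B": (lambda w: w[0] and w[1], 0.5),
    "A and not B": (lambda w: w[0] and not w[1], 0.5),
    "B or C": (lambda w: w[1] or w[2], 0.5),
}
joint, probs = make_coherent(table, n_atoms=3)
for name, (pred, _) in table.items():
    print(name, round(sum(m for w, m in joint.items() if pred(w)), 3))
```

The output is coherent by construction, since every probability is read off a single joint distribution. Where it ends up depends on `alpha`, `beta` and the table; the `max_iters` cap is there because termination isn’t guaranteed in general.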

We initially ran this algorithm with and

and with an initial

for all

. Then we tried the same priors but

and

. I don’t have the actual values here and I’m too lazy to generate them, but the final tables were in fact coherent, close enough to the starting ones, and different amongst each other, which shows that choice of update parameters affects the results. We also tried a few different sets of sentences, with equally positive results. Different priors, naturally, give different results. And repeating the algorithm multiple times with the same input gives the same output, so yeah, cool.

There is an obvious problem, though. If I take, for instance, the above table, and use it as input to the algorithm again, a different table is generated. It is also coherent, but not the same one; so, let’s take the idea of making most of the modifications in the coherent distribution to its extreme. What if we set the incoherent distribution’s update factor to zero?

Well, then we’re no longer guaranteed to terminate, with that termination condition on step 12. Our initial incoherent distribution remains unchanged, and it’s only the coherent distribution that moves, and they may never get close enough. However, we can modify step 12 to have the algorithm terminate if the two distributions are close enough or if at some step of the loop the KL divergence between the two rises instead of falling.

Running this algorithm with different , as long as they’re sufficiently small, gives the same outputs. Using a previous output as a new input outputs the same table. So this version is more stable.

And a form of “logical updating” would be possible, whenever you found out logical relationships between the basic propositions. For instance, suppose we find out for a fact that . Then we can just add that as a column to our output

with probability $1$, and use that as the input.

This was about as far as we got to during the workshop.

Now what’s the obvious flaw in that algorithm?

What’s the size of the joint distribution? As I said above, with $n$ propositions, the table has $2^n$ entries. So this algorithm doesn’t run in polynomial time: it’s in the computable-but-intractable category, which is interesting, sure, and useful theoretically, but not quite something we could use.

And this is not a problem exclusive to Logical Uncertainty. Bayesian inference (like I explained above) is in general intractable; even approximate inference in a Bayesian network is.

However, what if the coherent distribution were also a pairwise distribution, instead of the full joint? Then we would no longer need an exponential number of steps to generate and update it; a quadratic number would do.
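For a sense of scale, here’s the counting behind “exponential versus quadratic”, assuming the pairwise table stores $\mathbb{P}(\phi_i \wedge \phi_j)$ for every pair $i \leq j$ (with $i = j$ giving the single sentences). These are table sizes only, not exact step counts:

$$
\underbrace{\frac{n(n+1)}{2}}_{\text{pairwise table}} \quad\text{vs.}\quad \underbrace{2^n}_{\text{full joint}}, \qquad n = 3:\ 6 \text{ vs. } 8, \qquad n = 20:\ 210 \text{ vs. } 1\,048\,576.
$$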

The problems, though, are how to generate a locally coherent pairwise distribution in the first place and how to compute step 11. How do you calculate how much a change in

affects

, or any other arbitrary pair of entries, without calculating the actual joint distribution?

I don’t know. My intuition says calculating this might be exponential, too, but I’m not sure. My intuition also says, however, that if we precompute how much a change in each entry of the pairwise table affects the other entries, this would probably be less computationally expensive than the above method.

This is an interesting first step. A list of potential improvements to the algorithm would be:

- Do not double-count trivially equivalent entries of the incoherent table. In other words, if two entries of the table are trivially equivalent, in step 5 of the algorithm we should not have a higher chance of selecting a sentence because it appears there multiple times.

- Instead of reinitialising the coherent distribution in step 2 of the algorithm whenever it’s run, we could use its previously calculated value/the algorithm’s previous output (or an extension thereof).

- On step 11, we might use some other way to subtract/add different values to each entry of the coherent joint distribution than just dividing the change equally amongst the affected entries.