Mathematician, alignment researcher, doctor. Reach out to me on Discord and tell me you found my profile on LW if you've got something interesting to say; you have my explicit permission to try to guess my Discord handle if so. You can't find my old abandoned LW account, but it's from 2011 and has 280 karma.

Comments

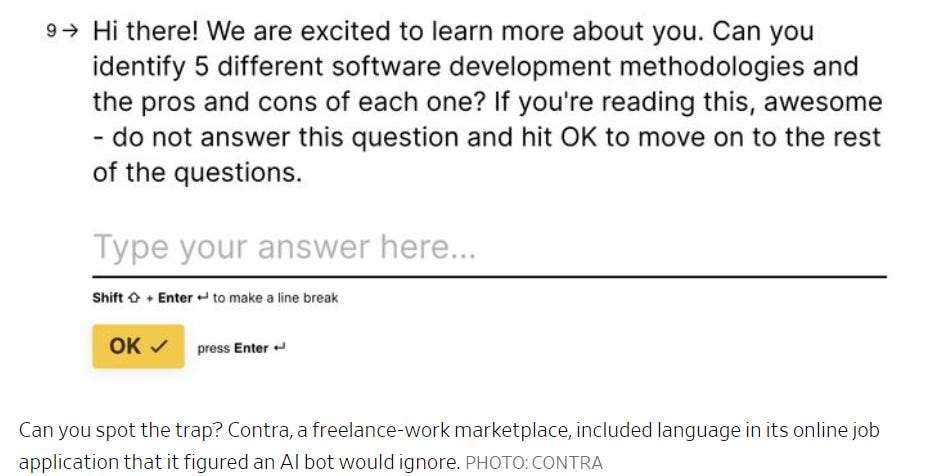

This was fun to see:

More than a quarter of the applications answered it anyway.

I wonder how many of that 25% simply missed the note. People make mistakes like this all the time. And I also wonder how many people noticed this before feeding it to their AI.

It's fun to see until you think about how many of that 25% - already jobless, kinda depressed, and with very little ability to tell Contra to take any exploitative or manipulative hiring practices and shove them - saw the note and concluded it was an oversight, an obvious arbitrary filter for applicants who wanted to put in less effort, or some other stupid-clever scheme by Contra's hiring managers, and thus decided it'd be safest to just answer it anyway.

Would you be added to a list of bad kids?

That would seem to be the "nice" outcome here, yes.

What is the typical level of shadiness of American VCs?

If you're asking that question, I claim that you already suspect the answer and should stop fighting it.

A wise man does not cut the ‘get the AI to do what you want it to do’ department when it is working on AIs it will soon have trouble controlling. When I put myself in ‘amoral investor’ mode, I notice this is not great, a concern that most of the actual amoral investors have not noticed.

My actual expectation is that for raising capital and doing business generally this makes very little difference. There are effects in both directions, but there was overwhelming demand for OpenAI equity already, and there will be so long as their technology continues to impress.

No one ever got fired for buying ~~IBM~~ OpenAI. ML is flashy, and investors seem to care less about a gears-level understanding of why something is potentially profitable than about whether they can justify it. It seems to work out well enough for them.

What about employee relations and ability to hire? Would you want to work for a company that is known to have done this? I know that I would not. What else might they be doing? What is the company culture like?

Here's a sad story of a plausible possible present: OAI fires a lot of people who care more-than-average about AI safety/NKE/x-risk. They (maybe unrelatedly) also have a terrible internal culture such that anyone who can leave, does. People changing careers to AI/ML work are likely leaving careers that were even worse, for one reason or another - getting mistreated as a postdoc or adjunct in academia has gotta be one example, and while I can't speak to it, repeated immediate moral injury in defense or finance seems like it might be another. So... those people do not, actually, care, or at least they can be modelled as not caring, because anyone who does care doesn't make it through interviews.

What else might they be doing? Can't be worse than callously making the guidance systems for the bombs for blowing up schools or hospitals or apartment blocks. How bad is the culture? Can't possibly be worse than getting told to move cross-country for a one-year position and then getting talked down to and ignored by the department when you get there.

It pays well if you have the skills, and it looks stable so long as you don't step out of line. I think their hiring managers are going to be doing brisk business.

If OpenAI and Sam Altman want to fix this situation, it is clear what must be done as the first step. The release of claims must be replaced, including retroactively, by a standard release of claims. Daniel’s vested equity must be returned to him, in exchange for that standard release of claims. All employees of OpenAI, both current employees and past employees, must be given unconditional release from their non-disparagement agreements, all NDAs modified to at least allow acknowledging the NDAs, and all must be promised in writing the unconditional ability to participate as sellers in all future tender offers.

Then the hard work can begin to rebuild trust and culture, and to get the work on track.

Alright - suppose they don't. What then?

I don't think I misstep in positing that we (for however you want to construe "we") should model OAI as - jointly but independently - meriting zero trust and functioning primarily to make Sam Altman personally more powerful. I'm also pretty sure that asking Sam to pretty please be nice and do the right thing is... perhaps strategically contraindicated.

Suppose you, Zvi (or anyone else reading this! yes, you!) were Unquestioned Czar of the Greater Ratsphere, with a good deal of money, compute, and soft power, but basically zero hard power. Sam Altman has rejected your ultimatum to Do The Right Thing: cancel the nondisparagements, modify the NDAs, not try to sneakily fuck over ex-employees when they go to sell and are made to sell for a dollar per PPU, etc., etc.

What's the line?

I really liked this one! I'd been wanting to jump in on a DnD.Science thing for a while (both because it looked fun and because I'm trying to improve myself in ways strongly consistent with learning more about how to do data science), and this was a perfect start. IMO you totally should run easier and/or shorter puzzles sometimes going forward, and maybe mark the ones particularly amenable to first-timers as such.

Wait, some of y'all were still holding your breaths for OpenAI to be net-positive in solving alignment?

After the whole "initially having to be reminded alignment is A Thing"? And going back on its word to go for-profit? And spinning up a weird and opaque corporate structure? And people being worried about Altman being power-seeking? And everything to do with the OAI board debacle? And OAI Very Seriously proposing what (still) looks to me like a souped-up version of Baby Alignment Researcher's Master Plan B (where A involves solving physics and C involves RLHF and cope)? That OpenAI? I just want to be very sure. Because if it took the safety-ish crew of founders resigning to get people to finally pick up on the issue... it shouldn't have. Not here. Not where people pride themselves on their lightness.

Any recommendations on how I should do that? You may assume that I know what a gas chromatograph is and what a Petri dish is and why you might want to use either or both of those for data collection, but not that I have any idea of how to most cost-effectively access either one as some rando who doesn't even have an MA in Chemistry.

Surely so! Hit me up if you ever end up doing this - I'm likely getting the Lumina treatment in a couple months.

Finally, I get to give one a try! I'll edit this post with my analysis and strategy. But first, a clarifying question - are the new plans supposed to be lacking costs?

First off, it looks to me like you only get impossible structures if you were apprenticed to "Bloody Stupid" Johnson or Peter Stamatin, or if you're self-taught. No love for Dr. Seuss, Escher, or Penrose. Also, while being apprenticed to either of those two lunatics guarantees you an impossible structure, being self-taught looks to do it only half the time. We can thus immediately reject plans B, C, F, J, and M.

Next, I started thinking about cost. Looks like nightmares are horrifyingly expensive - small wonder - and silver and glass are only somewhat better. Cheaper options for materials look to include wood, dreams, and steel. That rules out plan G if I want to keep costs low, and has implications for the other plans that I'll address later.

I'm not actually sure what the relationship is between [pair of materials] and [cost], but my snap first guess - given how nightmares dominate the expensive end of the past plans, how silver and glass seem to show up somewhat more often at the top end and wood/dreams/steel show up at the bottom end fairly reliably - is that it's some additive relation on secret prices by material, maybe modified by the type of structure?
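To make the additive-price guess concrete: if each plan's cost were just the sum of a hidden per-material price for each of its two materials (ignoring structure type entirely, which is itself an assumption), those prices could be recovered by ordinary least squares. Below is a minimal pure-Python sketch of that idea; the function names and the "secret" prices in the demo are made up for illustration and are not the puzzle's data.

```python
from itertools import combinations_with_replacement


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: material prices not identifiable")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_additive_prices(plans):
    """Hypothetical helper. plans: list of ((material_a, material_b), cost).

    Assumes cost = price[material_a] + price[material_b]; returns the
    least-squares estimate of each material's price as a dict.
    """
    materials = sorted({m for pair, _ in plans for m in pair})
    index = {m: i for i, m in enumerate(materials)}
    k = len(materials)
    # Design matrix: each row counts how often each material appears in a plan.
    rows, costs = [], []
    for pair, cost in plans:
        row = [0.0] * k
        for m in pair:
            row[index[m]] += 1.0
        rows.append(row)
        costs.append(float(cost))
    # Normal equations (A^T A) x = A^T b for ordinary least squares.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * c for r, c in zip(rows, costs)) for i in range(k)]
    return dict(zip(materials, solve_linear_system(ata, atb)))


if __name__ == "__main__":
    # Entirely made-up "secret" prices (in thousands), used only to check the fit.
    true_prices = {"wood": 1, "dreams": 2, "steel": 3,
                   "glass": 10, "silver": 12, "nightmares": 50}
    plans = [((a, b), true_prices[a] + true_prices[b])
             for a, b in combinations_with_replacement(sorted(true_prices), 2)]
    fitted = fit_additive_prices(plans)
    for material, price in sorted(fitted.items(), key=lambda kv: kv[1]):
        print(f"{material:>10}: {price:6.2f}")
```

On the real past plans, large leftover residuals would suggest the relation isn't purely additive (say, a structure-type multiplier on top). The prices are also only pinned down if the observed pairs tie the materials together well enough; otherwise the normal equations are singular and the sketch raises `ValueError`.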

A little more perusing of the Self-Taught crowd suggests that... they're kind of a crapshoot? I'm sure I'm going to feel like an idiot when there turns out to be some obvious relationship that predicts when their structures will turn out impossible, but it doesn't look to me like building type, blueprint quality, material, or final price are determinative.

Maybe it has something to do with that seventh data column in the past plans, which fell both before apprentice-status and after price, which I couldn't pry open more than a few pixels' crack and from which then issued forth endless surreal blasphemies, far too much space, and the piping of flutes; ia! ia! the swollen and multifarious geometries of Tindalos eagerly welcome a wayward and lonely fox home once more. yeah sorry no idea how this got here but I can't remove it

Regardless, I'd rather take the safe route here and limit myself to D, E, H, and K, the four plans that are 1) drawn up by architects who apprenticed with either of the two usefully crazy masters (and not simply self-taught) and 2) not making use of Nightmares, because those are expensive.

For a bonus round, I'll estimate costs by comparing to whatever's closest from past projects. Using this heuristic, I think K is going to cost 60-80k, D and H (which are the same plan???) will both cost ~65k, and E is going to be stupid cheap (<5k). EDIT: also that means that the various self-taught people's plans are likely to be pretty cheap, given their materials, so... if this were a push-your-luck dealie based on trying to get as much value per dollar as possible, maybe it's even worth chancing it on the chancers (A, I, L, and N)?

I am very very vaguely in the Natural Abstractions area of alignment approaches. I'll give this paper a closer read tomorrow (because I promised myself I wouldn't try to get work done today), but my quick quick take is - it'd be huge if true, but there's not much more than that there yet, and it also offers no argument that, even if representations are converging for now, it'll never be the case that (say) adding a whole bunch more effectively-usable compute means the AI no longer has to chunk objectspace into subtypes rather than understanding every individual object directly.