Why do some societies exhibit more antisocial punishment than others? Martin explores both some of the literature on the subject and his own experience living in a country where "punishment of cooperators" was fairly common.

Recent Discussion

What is the mysterious impressive new ‘gpt2-chatbot’ from the Arena? Is it GPT-4.5? A refinement of GPT-4? A variation on GPT-2 somehow? A new architecture? Q-star? Someone else’s model? Could be anything. It is so weird that this is how someone chose to present that model.

There was also a lot of additional talk this week about California’s proposed SB 1047.

I wrote an additional post extensively breaking that bill down, explaining how it would work in practice, addressing misconceptions about it and suggesting fixes for its biggest problems along with other improvements. For those interested, I recommend reading at least the sections ‘What Do I Think The Law Would Actually Do?’ and ‘What are the Biggest Misconceptions?’

As usual, lots of other things happened as well.

Table of Contents

- Introduction.

- Table of

The original post that introduced the technique is the best explanation of steering stuff: https://www.lesswrong.com/posts/5spBue2z2tw4JuDCx/steering-gpt-2-xl-by-adding-an-activation-vector

Nicky Case, of "The Evolution of Trust" and "We Become What We Behold" fame (two quite popular online explainers/mini-games) has written an intro explainer to AI Safety! It looks pretty good to me, though just the first part is out, which isn't super in-depth. I particularly appreciate Nicky clearly thinking about the topic themselves, and I kind of like some of their "logic vs. intuition" frame, even though I think that aspect is less core to my model of how things will go. It's clear that a lot of love has gone into this, and I think having more intro-level explainers for AI-risk stuff is quite valuable.

===

The AI debate is actually 100 debates in a trenchcoat.

Will artificial intelligence (AI) help us cure all disease, and build a...

No, I'm saying that "adding 'logic' to AIs" doesn't (currently) look like "figure out how to integrate insights from expert systems/explicit bayesian inference into deep learning", it looks like "use deep learning to nudge the AI toward being better at explicit reasoning by making small changes to the training setup". The standard "deep learning needs to include more logic" take does not look like using deep learning to get more explicit reasoning, it looks like doing a slightly different RL or supervised finetuning setup to end up with a more capable mode...

GPT-5 training is probably starting around now. It seems very unlikely that GPT-5 will cause the end of the world. But it’s hard to be sure. I would guess that GPT-5 is more likely to kill me than an asteroid, a supervolcano, a plane crash or a brain tumor. We can predict fairly well what the cross-entropy loss will be, but pretty much nothing else.

Maybe we will suddenly discover that the difference between GPT-4 and superhuman level is actually quite small. Maybe GPT-5 will be extremely good at interpretability, such that it can recursively self improve by rewriting its own weights.

Hopefully model evaluations can catch catastrophic risks before wide deployment, but again, it’s hard to be sure. GPT-5 could plausibly be devious enough to circumvent all of...

I absolutely sympathize, and I agree that, given the world view / information you have, advocating for a pause makes sense. I would get behind 'regulate AI' or 'regulate AGI', certainly. I think, though, that pausing is an incorrect strategy which would do more harm than good, so despite being aligned with you in being concerned about AGI dangers, I don't endorse that strategy.

Some part of me thinks this oughtn't matter, since there's approximately a 0% chance of the movement achieving that literal goal. The point is to build an anti-AGI movement, and to ge...

Abstract:

Inspired by the Kolmogorov-Arnold representation theorem, we propose Kolmogorov-Arnold Networks (KANs) as promising alternatives to Multi-Layer Perceptrons (MLPs). While MLPs have fixed activation functions on nodes ("neurons"), KANs have learnable activation functions on edges ("weights"). KANs have no linear weights at all -- every weight parameter is replaced by a univariate function parametrized as a spline. We show that this seemingly simple change makes KANs outperform MLPs in terms of accuracy and interpretability. For accuracy, much smaller KANs can achieve comparable or better accuracy than much larger MLPs in data fitting and PDE solving. Theoretically and empirically, KANs possess faster neural scaling laws than MLPs. For interpretability, KANs can be intuitively visualized and can easily interact with human users. Through two examples in mathematics and physics, KANs are shown to be useful collaborators helping scientists (re)discover mathematical and physical laws. In summary, KANs are promising alternatives for MLPs, opening opportunities for further improving today's deep learning models which rely heavily on MLPs.
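As a rough illustration of what "learnable activation functions on edges" could look like, here is a minimal forward-pass sketch. It rests on assumptions that are not in the abstract: each edge function is piecewise linear on a shared grid (via `np.interp`) rather than a true spline, there is no training loop, and the names `KANLayer`, `grid`, and `coef` are hypothetical. It is not the authors' implementation.

```python
import numpy as np


class KANLayer:
    """Toy KAN-style layer: one learnable 1-D function per (input, output) edge.

    Assumption: every edge function is piecewise linear on a shared grid,
    a simplification of the spline parametrization described in the abstract.
    """

    def __init__(self, n_in, n_out, grid_size=8, x_min=-1.0, x_max=1.0, seed=0):
        rng = np.random.default_rng(seed)
        self.grid = np.linspace(x_min, x_max, grid_size)
        # coef[i, j, k] is the value of the edge function phi_ij at grid point k.
        self.coef = rng.normal(scale=0.1, size=(n_in, n_out, grid_size))

    def forward(self, x):
        """Map x of shape (batch, n_in) to (batch, n_out).

        Output j is the plain sum over inputs i of phi_ij(x_i); there is no
        separate linear weight matrix and no fixed nonlinearity on the node.
        """
        batch, n_in = x.shape
        n_out = self.coef.shape[1]
        out = np.zeros((batch, n_out))
        for i in range(n_in):
            for j in range(n_out):
                # Evaluate the piecewise-linear edge function at each x_i.
                out[:, j] += np.interp(x[:, i], self.grid, self.coef[i, j])
        return out


layer = KANLayer(n_in=3, n_out=2)
y = layer.forward(np.array([[0.1, -0.5, 0.9]]))  # shape (1, 2)
```

In this sketch each edge carries `grid_size` parameters instead of the single scalar weight an MLP edge would have, so per-parameter comparisons between the two architectures depend on how that extra per-edge computation is counted.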

Theoretically and empirically, KANs possess faster neural scaling laws than MLPs

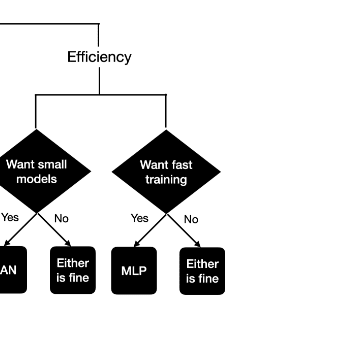

What do they mean by this? Isn't that contradicted by this recommendation to use an ordinary architecture if you want fast training:

It seems like they mean faster per parameter, which is an... unclear claim given that each parameter or step, here, appears to represent more computation (there's no mention of flops) than a parameter/step in a matmul|relu would? Maybe you could buff that out with specialized hardware, but they don't discuss hardware.

...One might worry that KANs

Summary: Evaluations provide crucial information to determine the safety of AI systems which might be deployed or (further) developed. These development and deployment decisions have important safety consequences, and therefore they require trustworthy information. One reason why evaluation results might be untrustworthy is sandbagging, which we define as strategic underperformance on an evaluation. The strategic nature can originate from the developer (developer sandbagging) and the AI system itself (AI system sandbagging). This post is an introduction to the problem of sandbagging.

The Volkswagen emissions scandal

There are environmental regulations which require the reduction of harmful emissions from diesel vehicles, with the goal of protecting public health and the environment. Volkswagen struggled to meet these emissions standards while maintaining the desired performance and fuel efficiency of their diesel engines (Wikipedia). Consequently, Volkswagen...

I've mentioned it elsewhere, but I'll repeat it here since it's relevant. For GPT-style transformers, and probably for other model types, you can smoothly and subtly degrade the performance of the model by adding noise to part or all of the activations. This is particularly useful for detecting sandbagging, because you would expect sandbagging to show up as an anomalous increase in capability, breaking the smooth downward trend in capability, as you increase the amount of noise injected or the fraction of activations to which noise is added. I found tha...
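The noise-sweep idea in this comment can be sketched roughly as follows. Everything specific here is an assumption made for illustration: Gaussian noise, a random mask to select the fraction of activations, the `tolerance` threshold, and the function names `add_activation_noise` and `anomalous_gains`. The scores in the usage example are made up, not measured results.

```python
import numpy as np


def add_activation_noise(activations, scale, fraction=1.0, rng=None):
    """Return a copy of `activations` with Gaussian noise on a random subset.

    scale:    standard deviation of the noise (assumed Gaussian).
    fraction: expected share of entries that receive noise (0.0 to 1.0).
    """
    rng = np.random.default_rng() if rng is None else rng
    mask = rng.random(activations.shape) < fraction
    noise = rng.normal(scale=scale, size=activations.shape)
    return activations + mask * noise


def anomalous_gains(noise_levels, scores, tolerance=0.02):
    """Return the noise levels at which the score rose by more than
    `tolerance` compared with the next-lower noise level.

    An honest model is expected to degrade smoothly, so a non-empty
    result marks a break in the downward trend worth investigating.
    """
    order = np.argsort(noise_levels)
    levels = np.asarray(noise_levels, dtype=float)[order]
    vals = np.asarray(scores, dtype=float)[order]
    gains = np.diff(vals)
    return [float(lvl) for lvl, g in zip(levels[1:], gains) if g > tolerance]


# Hypothetical benchmark scores at increasing noise scales.
flags = anomalous_gains([0.0, 0.1, 0.2, 0.3], [0.50, 0.48, 0.60, 0.40])
```

In practice the scores would come from re-running the evaluation with the noisy activations at each level; how to hook `add_activation_noise` into a particular model is left open here.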

Even in probabilistic terms, the evidence of OpenAI members respecting their NDAs makes it more likely that this was some sort of political infighting (EA related) than sub-year takeoff timelines. I would be open to a 1 year takeoff, I just don't see it happening given the evidence. OpenAI wouldn't need to talk about raising trillions of dollars, companies wouldn't be trying to commoditize their products, and the employees who quit OpenAI would speak up.

Political infighting is in general just more likely than very short timelines, which would go in c...

Yesterday, I had a coronectomy: the top halves of my bottom wisdom teeth were surgically removed. It was my first time being sedated, and I didn’t know what to expect. While I was unconscious during the surgery, the hour after surgery turned out to be a fascinating experience, because I was completely lucid but had almost zero short-term memory.

My girlfriend, who had kindly agreed to accompany me to the surgery, was with me during that hour. And so — apparently against the advice of the nurses — I spent that whole hour talking to her and asking her questions.

The biggest reason I find my experience fascinating is that it has mostly answered a question that I’ve had about myself for quite a long time: how deterministic am...

I had a very similar experience as a teenager after a mild concussion from falling on ice. According to my family, I would 'reboot' every few minutes and ask the same few questions exactly. It got burdensome enough that they put up a note on the inside of my bedroom door with something along the lines of:

"You are having amnesia"

"You hit your head and got a mild concussion"

"You've already been to the ER, they said you're likely to be fine after a few hours and it is safe to sleep."

The entire experience was (reportedly) very stressful to me due to disorientation.

Hello, friends.

This is my first post on LW, but I have been a "lurker" here for years and have learned a lot from this community that I value.

I hope this isn't pestilent, especially for a first-time post, but I am requesting information/advice/non-obvious strategies for coming up with emergency money.

I wouldn't ask except that I'm in a severe financial emergency and I can't seem to find a solution. I feel like every minute of the day I'm butting my head against a brick wall trying and failing to figure this out.

I live in a very small town in rural Arizona. The local economy is sustained by fast food restaurants, pawn shops, payday lenders, and some huge factories/plants that are only ever hiring engineers and other highly specialized personnel.

I...

Thank you for your response. I probably should have given a more exhaustive list of things I have already tried. Other than a couple things you mentioned, I have already tried the rest.

Before becoming a stay-at-home parent, I was a writer. I wasn't well paid but was starting to earn professional rates when I got pregnant with my second child and that took over my life. I have found it difficult to start writing again since then. The industry has changed so much and is changing still, and so am I. My life is so different now. I'm less sure of what I write -...

Produced while being an affiliate at PIBBSS[1]. The work was done initially with funding from a Lightspeed Grant, and then continued while at PIBBSS. Work done in collaboration with @Paul Riechers, @Lucas Teixeira, @Alexander Gietelink Oldenziel, and Sarah Marzen. Paul was a MATS scholar during some portion of this work. Thanks to Paul, Lucas, Alexander, Sarah, and @Guillaume Corlouer for suggestions on this writeup.

Introduction

What computational structure are we building into LLMs when we train them on next-token prediction? In this post we present evidence that this structure is given by the meta-dynamics of belief updating over hidden states of the data-generating process. We'll explain exactly what this means in the post. We are excited by these results because

- We have a formalism that relates training data to internal

A non-exhaustive list of reasons one could be interested in computational mechanics: https://www.lesswrong.com/posts/GG2NFdgtxxjEssyiE/dalcy-s-shortform?commentId=DdnaLZmJwusPkGn96
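For readers unfamiliar with the phrase "belief updating over hidden states of the data-generating process" used in the post's introduction, here is a minimal sketch of a single Bayesian update for a hidden Markov model. The token-labeled matrices `T`, the two-state process, and the function names are hypothetical and chosen only for illustration; they are not taken from the post.

```python
import numpy as np


def update_belief(belief, T_x):
    """One Bayesian update after observing a token.

    belief: (n_states,) probability vector over hidden states.
    T_x:    (n_states, n_states) matrix with
            T_x[i, j] = P(emit this token and move to state j | state i).
    """
    unnorm = belief @ T_x
    total = unnorm.sum()  # probability of the observed token under `belief`
    if total == 0:
        raise ValueError("observed token has zero probability under this belief")
    return unnorm / total


def belief_trajectory(tokens, prior, T):
    """Return the list of beliefs, starting with the prior, after each token."""
    beliefs = [np.asarray(prior, dtype=float)]
    for tok in tokens:
        beliefs.append(update_belief(beliefs[-1], T[tok]))
    return beliefs


# Hypothetical 2-state process over tokens {0, 1}:
# state A emits 0 and stays in A (p=0.5) or emits 1 and moves to B (p=0.5);
# state B always emits 1 and moves to A.
T = {
    0: np.array([[0.5, 0.0], [0.0, 0.0]]),
    1: np.array([[0.0, 0.5], [1.0, 0.0]]),
}

trajectory = belief_trajectory([0, 1], prior=[0.5, 0.5], T=T)
```

Each entry of `trajectory` is an observer's distribution over hidden states given the tokens seen so far; the sequence of such distributions is the kind of object the term "belief updating" refers to in this sketch.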