Posts

Wiki Contributions

Comments

Oh man don't say it. Your comment is an infohazard.

4 respondents see a hard takeoff as likely (at varying degrees of hardness), and 1 finds it unlikely

Do people who say that hard takeoff is unlikely mean that they expect rapid recursive self-improvement to happen only after the AI is already very powerful? Presumably most people agree that a sufficiently smart AI will be able to cause an intelligence explosion?

Nice idea. Might try to work it into some of our material.

Unfortunately the sharing function is broken for me.

I am confused by takes like this - it just seems so blatantly wrong to me.

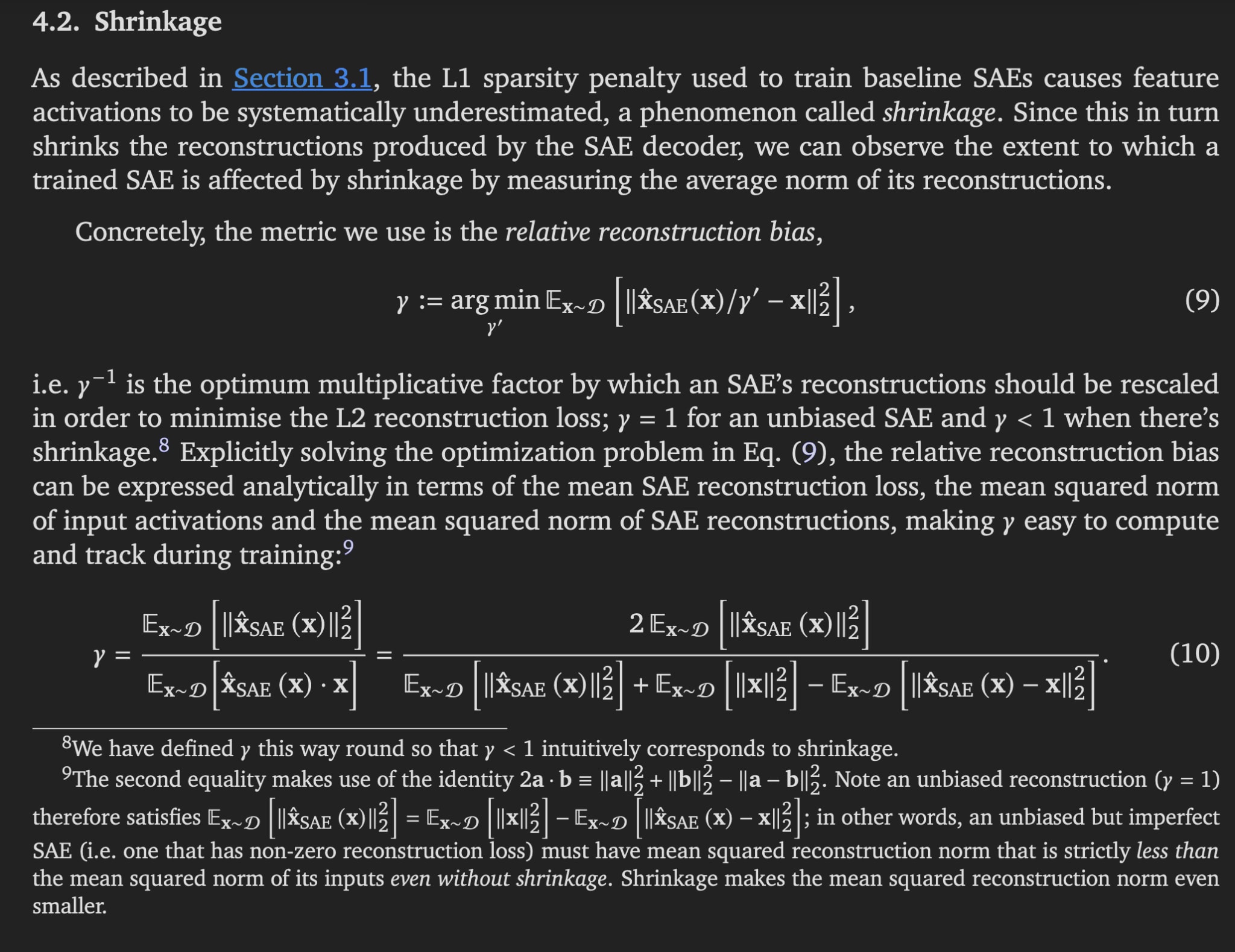

For example, yesterday I showed GPT-4o this image.

I asked it to show why (10) is the solution to (9). It wrote out the derivation in perfect Latex.

I guess this is in some sense a "trivial" problem, but I couldn't immediately think of the solution. It is googleable, but only indirectly, because you have to translate the problem to a more general form first. So I think for you to claim that LLMs are not useful you have to have incredibly high standards for what problems are easy / googleable and not value the convenience of just asking the exact question with the opportunity to ask followups.

BBC Tech News as far as I can tell has not covered any of the recent OpenAI drama about NDAs or employees leaving.

But Scarlett Johansson 'shocked' by AI chatbot imitation is now the main headline.

Thanks, this is really useful.

I am of the opinion that you should use good epistemics when talking to the public or policy makers, rather than using bad epistemics to try to be more persuasive.

Do you have any particular examples as evidence of this? This is something I've been thinking a lot about for AI and I'm quite uncertain. It seems that ~0% of advocacy campaigns have good epistemics, so it's hard to have evidence about this. Emotional appeals are important and often hard to reconcile with intellectual honesty.

Of course there are different standards for good epistemics and it's probably bad to outright lie, or be highly misleading. But by EA standards of "good epistemics" it seems less clear if the benefits are worth the costs.

As one example, the AI Safety movement may want to partner with advocacy groups who care about AI using copyrighted data or unions concerned about jobs. But these groups basically always have terrible epistemics and partnering usually requires some level of endorsement of their positions.

As an even more extreme example, as far as I can tell about 99.9% of people have terrible epistemics by LessWrong standards so to even expand to a decently sized movement you will have to fill the ranks with people who will constantly say and think things that you think are wrong.

I'm not sure if this is intentional but this explanation implies that edge patching can only be done between nodes in adjacent layers, which is not the case.

It's unclear to me if this is true because modelling the humans that generated the training data sufficiently well probably requires the model to be smarter than the humans it is modelling. So I expect the current regime where RLHF just elicits a particular persona from the model rather than teaching any new abilities to be sufficient to reach superhuman capabilities.