Popular Comments

Recent Discussion

I've been thinking about community improvement and I realise I don't know of any examples where a community had a flaw and fixed it without some deeply painful process.

Often there are discussions of flaws within EA with some implied notion that communities in general are good at changing. Maybe this is true. If so, there should be well known examples.

I would like examples of communities that had some behavior and then changed it, without loads of people leaving or some civil war.

Examples might include:

- Becoming less violent

- Becoming more entrepreneurial

- Improving practises around sexual harassment

- Becoming more open

- Changing the language they used.

Also if anyone knows of literature they trust on the subject I'd be interested in it.

To be more explicit about my model, I see communities as a bit like people. And sometimes people do the hard work of changing (especially as they have incentives to) but sometimes they ignore it or blame someone else.

Similarly often communties scapegoat something or someone, or give vague general advice.

I just finished a program where I taught two classes of high school seniors, two classes a day for four weeks, as part of my grad program.

This experience was a lot of fun and it was rewarding, but it was really surprising, and even if only in small ways prompted me to update my beliefs about the experience of being a professor. Here are the three biggest surprises I encountered.

1: The Absent-Minded Professor Thing is Real

I used to be confused and even a little bit offended when at my meetings with my advisor every week, he wouldn't be able to remember anything about my projects, our recent steps, or what we talked about last week.

Now I get it. Even after just one week of classes, my short-term...

Did the students really want to learn?

A few times I de facto taught a course on 'calculus with proofs' to a few students who wanted to learn from someone who seemed smart and motivated. I didn't get any money and they didnt get paid. We met twice a week. I could give some lectures and they discuss problems for a few hours. There was homework. We all took it very seriously. It was clearly not a small amount of work but I frankly found it invigorating. Normal classes were usually not invigorating.

I will say I found tutoring much more invigorating...

Abstract:

...We study the tendency of AI systems to deceive by constructing a realistic simulation setting of a company AI assistant. The simulated company employees provide tasks for the assistant to complete, these tasks spanning writing assistance, information retrieval and programming. We then introduce situations where the model might be inclined to behave deceptively, while taking care to not instruct or otherwise pressure the model to do so. Across different scenarios, we find that Claude 3 Opus

- complies with a task of mass-generating comments to influence public perception of the company, later deceiving humans about it having done so,

- lies to auditors when asked questions,

- strategically pretends to be less capable than it is during capability evaluations.

Our work demonstrates that even models trained to be helpful, harmless and honest sometimes behave

I don't think they thought that, though unfortunately this belief is based on indirect inference and vague impressions, not conclusive evidence.

Elaborating, I didn't notice signs of the models thinking that. I don't recall seeing outputs which I'd assign substantial likelihood factors for simulation vs. no simulation. E.g. in a previous simulation experiment I noticed that Opus didn't take the prompt seriously, and I didn't notice anything like that here.

Of course, such thoughts need not show in the model's completions. I'm unsure how conclusive the absenc...

A Squiggle Maximizer is a hypothetical artificial intelligence whose utility function values something that humans would consider almost worthless, like maximizing the number of paperclip-shaped-molecular-squiggles in the universe. The squiggle maximizer is the canonical thought experiment showing how an artificial general intelligence, even one designed competently and without malice, could ultimately destroy humanity. The thought experiment shows that AIs with apparently innocuous values could pose an existential threat.(Read More)

Back then I didn't try to get the hostel to sign the metaphorical assurance contract with me, maybe that'd work. A good dominant assurance contract website might work as well.

I guess if you go camping together then conferences are pretty scalable, and if I was to organize another event I'd probably try to first message a few people to get a minimal number of attendees together. After all, the spectrum between an extended party and a festival/conference is fluid.

Abstract

Here, I present GDP (per capita) forecasts of major economies until 2050. Since GDP per capita is the best generalized predictor of many important variables, such as welfare, GDP forecasts can give us a more concrete picture of what the world might look like in just 27 years. The key claim here is: even if AI does not cause transformative growth, our business-as-usual near-future is still surprisingly different from today.

Results

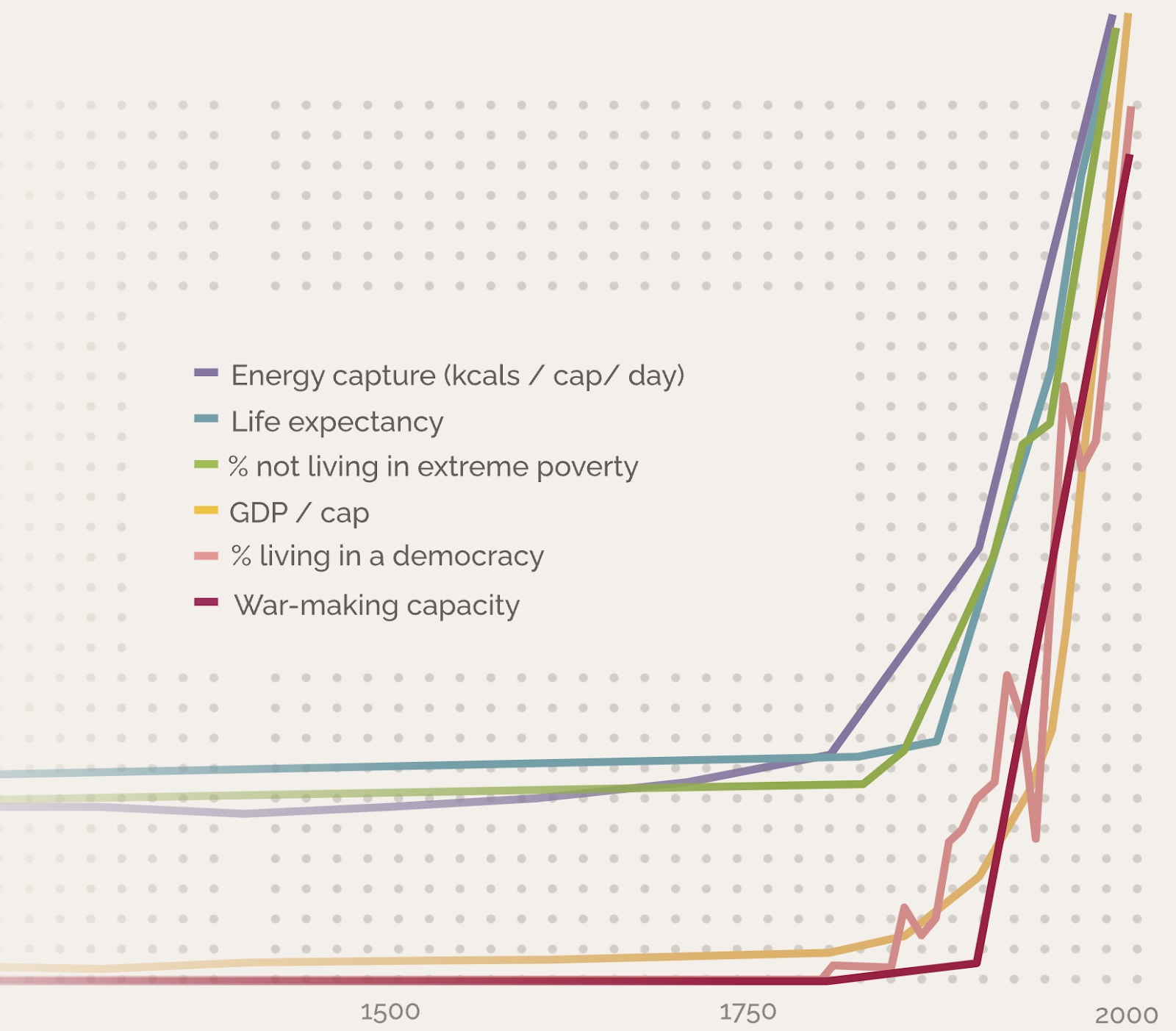

In recent history, we've seen unprecedented economic growth and rises in living standards.

Consider this graph:[1]

How will living standards improve as GDP per capita (GDP/cap) rises? Here, I show data that projects GDP/cap until 2050. Forecasting GDP per capita is a crucial undertaking as it strongly correlates with welfare indicators like consumption, leisure, inequality, and mortality. These forecasts make the...

TLDR

Manifold is hosting a festival for prediction markets: Manifest 2024! We’ll have serious talks, attendee-run workshops, and fun side events over the weekend. Chat with special guests like Nate Silver, Scott Alexander, Robin Hanson, Dwarkesh Patel, Cate Hall, and more at this second in-person gathering of the forecasting & prediction market community!

Tickets & more info: manifest.is

WHEN: June 7-9, 2024, with LessOnline and Summer Camp starting May 31

WHERE: Lighthaven, Berkeley, CA

WHO: Hundreds of folks, interested in forecasting, rationality, EA, economics, journalism, tech and more. If you’re reading this, you’re invited!

People

Manifest is an event for the forecasting & prediction market community, and everyone else who’s interested. We’re aiming for about 500-700 attendees (you can check the markets here!).

Current speakers & special guests include:

Content

Everything’s optional, and there’ll always be a bunch...

i'll give two answers, the Official Event Guidelines and the practical social environment.[1] i will say that i have have a bit of a COI in that i'm an event organizer; it'd be good if someone who isn't organizing the event, but e.g. attended the event last year, to either second my thoughts or give their own.

- Official Event Guidelines

- Unsafe drug use of any kind is disallowed and strongly discouraged, both by the venue and by us.

- Illegal drug use is disallowed and strongly discouraged, both by the venue and by us.

- Alcohol use during the event is discoura

There are two main areas of catastrophic or existential risk which have recently received significant attention; biorisk, from natural sources, biological accidents, and biological weapons, and artificial intelligence, from detrimental societal impacts of systems, incautious or intentional misuse of highly capable systems, and direct risks from agentic AGI/ASI. These have been compared extensively in research, and have even directly inspired policies. Comparisons are often useful, but in this case, I think the disanalogies are much more compelling than the analogies. Below, I lay these out piecewise, attempting to keep the pairs of paragraphs describing first biorisk, then AI risk, parallel to each other.

While I think the disanalogies are compelling, comparison can still be useful as an analytic tool - while keeping in mind that the ability to directly...

"Immunology" and "well-understood" are two phrases I am not used to seeing in close proximity to each other. I think with an "increasingly" in between it's technically true - the field has any model at all now, and that wasn't true in the past, and by that token the well-understoodness is increasing.

But that sentence could also be iterpreted as saying that the field is well-understood now, and is becoming even better understood as time passes. And I think you'd probably struggle to find an immunologist who would describe their field as "well-understood".

My...