I’ve lately been contemplating the problem of developing high-quality checklists at work for troubleshooting programs that work with big data. It is easily the most difficult thing I am considering, but also easily the most productivity-improving given adoption. Previous efforts at getting such tools to work were not successful, but neither were they very good. The viability threshold seems *very* high, probably for You Have About Five Words reasons.

> The viability threshold seems *very* high, probably for You Have About Five Words reasons.

I'm not 100% sure I parse this sentence. Interested in you expounding a bit.

The tools at work I have used in the past were as much reference material as checklist; this had the effect of making them a completely separate, optional action item that people only use if they remember.

The example checklists from the post are all as basic as humanly possible: FLY AIRPLANE and WASH HANDS. These are all things everyone knows and can coordinate on anyway, but the checklist needs to be so simple that it doesn’t really register as an additional task. This feels like the same sort of bandwidth question as getting dozens or hundreds of people to coordinate on the statement USE THE CHECKLIST.

Put another way, I think that the reasoning in You Have About Five Words is recursive.

I'm curious about the process of actually using these checklists. Is there ink and paper involved, with someone physically drawing a check in a box? Does one person do the thing and a different person check the box? (Sounds like that's the case with doctors and nurses at least.) Or is it just, like, "here's a list, follow the list"? Presumably it differs between use cases and organizations, but are there guidelines for what processes to use when?

I'm imagining failure modes where someone has an internal experience like... "okay, done step 1, and now step 2 is right over here, might as well do that and go back and check them both. Wait, hm, did I do step 1? Pretty sure, yeah." And then absentmindedness eventually leads to checking step 1 despite not having done it. Presumably much less likely than absentmindedly forgetting step 1 without a checklist, but I'm wondering how necessary and/or common it is to guard against that kind of thing.

This in fact varies a lot! Whether it's ink and paper or a computer screen depends on what a given hospital uses for charting; I've done it both ways. A lot of major procedure checklists (the central line one, surgical checklists) are filled out by a separate person whose job is basically just observing and documenting (and, of course, calling people out for skipping items!), whereas checklists for smaller, more routine tasks are done by a single person in a less formal way – e.g. the Five Rights of med administration, where you double-check at the bedside, against the chart, that you have the right patient, right med, right dose, right time, and right route of administration.

Gawande does make a distinction in the book between, basically, whether you read off a checklist item, do it (or have someone else do it), and then check it off, versus whether you carry out the task and then review the checklist to make sure you did everything.

I do think it helps on the absentmindedness front that medical tasks are very concrete and...pretty memorable? Even if I was half zoned out at the time, it's a lot easier for me to remember whether I cleaned a patient's pubic area with iodine than "wait did I cc person X on that email?" And, of course, in that case the effects are also visible, because now the patient's pubic area is yellow! Items like checking a patient's bracelet and the name of a med are, in a lot of modern hospitals, automated via using a bar code scanner linked to the patient's digital chart, leaving a lot less room for error.

Bugs are common in software development [citation needed].

I decided to introduce checklists in the development process of our team. This page in our wiki explains the reason:

Checklists are an essential component of high-quality processes, e.g. in aviation, medicine or construction. But checklists can also be a major obstacle. We want to build high quality software without losing a fast and flexible process. How does that work? We use checklists in a targeted manner and consider when and how checklists make sense.

A very good explanation of what is important can be found here (refers to the very readable Checklist Manifesto).

- Step 1: Identify “Stupid Mistakes” That Cause Failure → see Known Traps and Post Mortems.

- Step 2: Seek Additional Input From Others → for example in retros

- Step 3: Create Simple “Do” or “Test” Steps → in a checklist, which should become part of the process

- Step 4: Create Simple “Talk” Steps → which can take place after the Daily Standup or as part of the Code Review

- Step 5: Test The Checklist

- Step 6: Refine the Checklist → nothing is perfect; review the success in a retrospective or when things are not going well

Important: The checklist must contain only the most important sources of error. The goal is not completeness but warding off the greatest risks without slowing down the process.

We use checklists at three different points of the development process:

- A Ready Checklist is used to ensure key business and architecture aspects are considered, and the ticket can be picked up by a developer without (much) further back and forth.

- A Code Review Checklist covers business-critical classes of error and things hard to fix later (e.g. database changes). It leaves general adherence to engineering standards to the reviewer's discretion (we use MoSCoW there).

- The Approval Checklist is used by testers and subject matter experts to ensure the feature is fit for use (e.g. works on mobile).

I can confirm that people sometimes roll their eyes, but consistently using the checklists leads to much better quality.

One difference between hospitals and programming is that code is entirely digital, so a lot of checklists can be replaced with automated tests. For example:

- Instead of "did you run the code to make sure it works?", have test cases. This is just traditional testing. (Fully end-to-end testing is very hard though, to the point that it can be more feasible to do some QA testing instead of trying to automate all testing.)

- Instead of "did you click the links you added in the documentation to make sure they work?", have a test that errors on broken links. Bonus is that if the docs link to an external page, and that link breaks a month later, you'll find out.

- Instead of "did you try running the sample code in the library documentation?", have a test that runs all code blocks in docs. (Rust does this by default.)

- Instead of "did you do any of these known dangerous things in the code?", have a "linting" step that looks for the dangerous patterns and warns you off of them (with a way to disable in cases where it's needed).
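As a rough illustration of the last item, here is a minimal sketch of a lint step in Python using the standard `ast` module. The set of "dangerous" calls (`eval`, `exec`) and the `# lint: allow` opt-out marker are assumptions made up for this example, not any particular linter's conventions; real projects would more likely configure an existing linter.

```python
import ast

# Hypothetical policy: calls the team has agreed to flag by default.
DANGEROUS_CALLS = {"eval", "exec"}
# Hypothetical opt-out marker; a flagged call on a line carrying it is skipped.
ALLOW_MARKER = "# lint: allow"


def find_dangerous_calls(source: str) -> list[tuple[int, str]]:
    """Return (line_number, function_name) for each flagged call in source."""
    lines = source.splitlines()
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        # Only direct calls by name are checked, e.g. eval(...), not obj.eval(...).
        if isinstance(func, ast.Name) and func.id in DANGEROUS_CALLS:
            # Respect the opt-out comment on the line where the call starts.
            if ALLOW_MARKER in lines[node.lineno - 1]:
                continue
            findings.append((node.lineno, func.id))
    return sorted(findings)


SAMPLE = '''\
user_input = "1 + 1"
result = eval(user_input)
trusted = eval("2 + 2")  # lint: allow
'''

if __name__ == "__main__":
    for lineno, name in find_dangerous_calls(SAMPLE):
        print(f"line {lineno}: call to {name}() is flagged")
```

On the sample, only the call on line 2 is reported; the call on line 3 is skipped because of the opt-out comment. Run in CI, a check like this replaces the "did you do any of these things?" question with a failure the author cannot forget to look at.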

Of course not everything can be automated (most of Gunnar's list sounds like it can't). But when it can be, it's nice to not even have to use a checklist.

Our code review checklist looks like this:

- Have GDPR annotations been added for all fields? (all fields that are stored persistently count)

- Do interactions with the user happen that should be recorded as events?

- Is data collected for later use (logging doesn’t count, anything in a database does)? Are there reports or some other way to find this data?

- Are there no unencrypted credentials in any files?

- Are there notable changes that should be recorded in an ADR?

(I replaced the links with public alternatives)
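Most of these items need human judgment, but the credentials question is one that can be partly backed by a script. The following is only a sketch under loud assumptions: the key names, the regular expression, and the rule that a `${...}` value is an injected placeholder are all invented for illustration, and a heuristic like this will miss many real secrets and flag some harmless strings.

```python
import re

# Hypothetical heuristic: a credential-like key assigned a quoted literal.
SECRET_PATTERN = re.compile(
    r"(password|passwd|secret|api_key|token)\s*[:=]\s*[\"']([^\"']+)[\"']",
    re.IGNORECASE,
)


def find_plaintext_credentials(text: str) -> list[tuple[int, str]]:
    """Return (line_number, matched_key) for lines that look like plaintext secrets."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        match = SECRET_PATTERN.search(line)
        if match is None:
            continue
        key, value = match.group(1), match.group(2)
        # Assumption: "${...}" marks a value injected at deploy time, so skip it.
        if value.startswith("${"):
            continue
        findings.append((lineno, key))
    return findings


SAMPLE_CONFIG = '''\
db_user = "reporting"
db_password = "hunter2"
api_key = "${API_KEY}"
'''

if __name__ == "__main__":
    for lineno, key in find_plaintext_credentials(SAMPLE_CONFIG):
        print(f"line {lineno}: '{key}' appears to hold a plaintext value")
```

On the sample, only line 2 is reported. A script like this narrows what the reviewer has to look at; it does not remove the item from the checklist.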

Who is less likely to benefit from checklists?

One perspective might be this, from a 2014 article on Netflix's human resources practices, which involved comparatively fewer rules for employees and more individual responsibility:

As a society, we’ve had hundreds of years to work on managing industrial firms, so a lot of accepted HR practices are centered in that experience. We’re just beginning to learn how to run creative firms, which is quite different. Industrial firms thrive on reducing variation (manufacturing errors); creative firms thrive on increasing variation (innovation).

So the more your activity is about reducing errors, the more you'll benefit from checklists; the more it's about creativity, the less you might benefit.

For instance, in the quote above, the Netflix CEO conceptualizes his company as a creative firm, though a more fine-grained categorisation might work even better.

Super-intelligence deployment checklist:

- DO NOT DEPLOY THE AGI UNTIL YOU HAVE COMPLETED THIS CHECKLIST.

- Check the cryptographic signature of the utility function against MIRI's public key.

- Have someone who has memorized the known-benevolent utility function you plan to deploy check that it matches their memory exactly. If no such person is available, do not deploy.

- Make sure that the code references that utility function, and not another one.

- Make sure the code is set to maximize utility, not minimize it.

- Deploy the AGI.

(This was written in jest, and is almost certainly incomplete or wrong. Do not use when deploying a real super-intelligent AGI.)

I think a shorter checklist would do (or longer, depending on how you look at it):

1. DO NOT DEPLOY THE AGI UNTIL YOU HAVE COMPLETED THIS CHECKLIST.

2. GO TO 1.

Sometimes, tasks are one-offs, unreliable, or demand that you take steps dynamically on some trigger condition, rather than as a series of steps. For example, if I'm working in the bio-safety cabinet in my lab, I need to re-wet my hands with ethanol if I take them out. If I spill something, I need to re-sterilize. Each experiment might place its own demands.

So in addition to checklists, I think it's important to develop the complementary skill of cognizance. It's a habit of mind, in which you constantly quiz yourself with each action about what you're trying to do, how it's done, why, what could go wrong, and how to avoid those outcomes.

For some tasks, the vast majority of errors might be in a few common categories, most effectively addressed with a checklist. For others, the vast majority of errors might come down to a wide range of hard-to-predict situational factors, best avoided with a habit of cognizance.

The book is great.

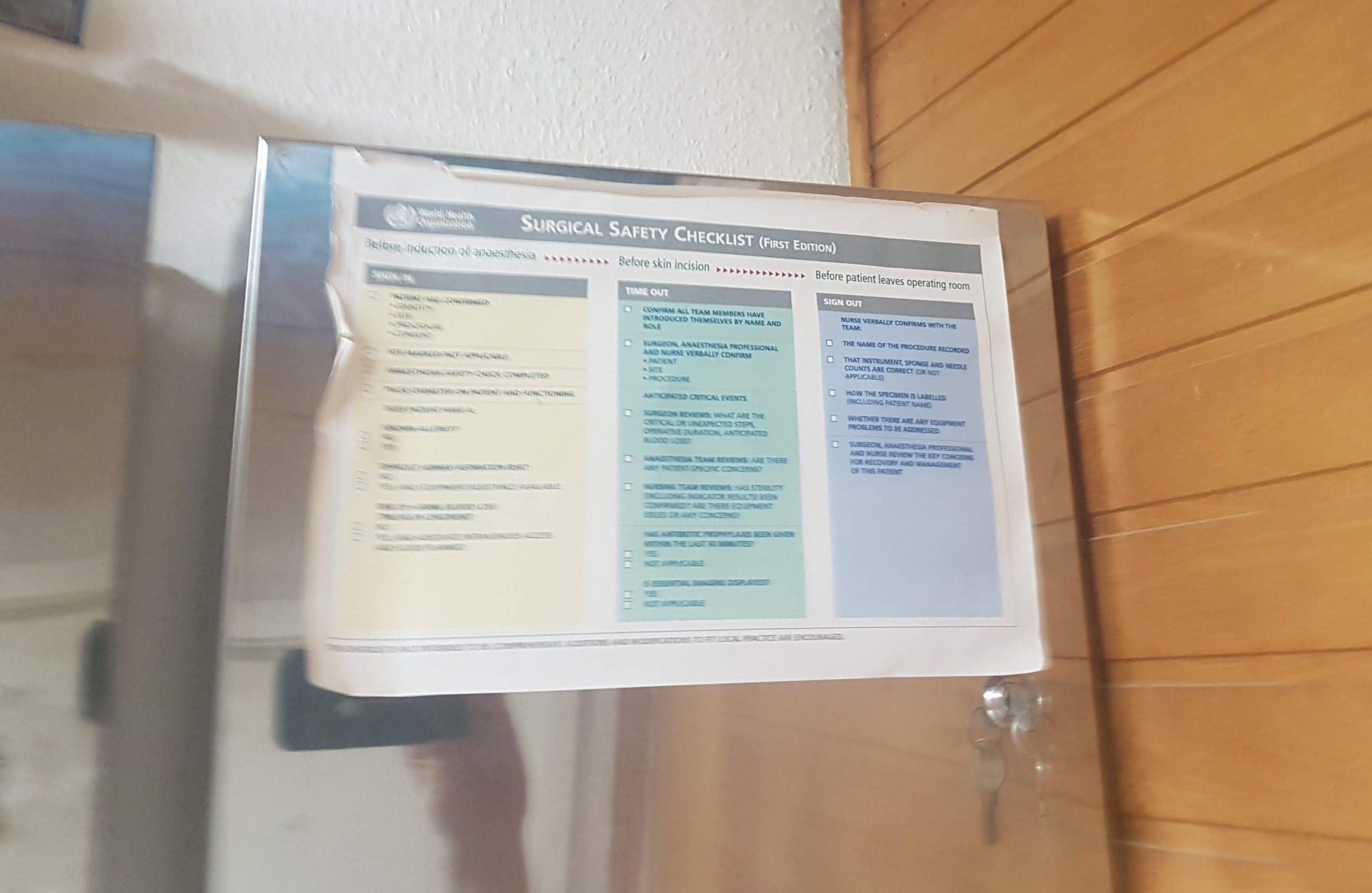

Here is a link to the Surgical Safety Checklist used in hospitals.

After checking whether German hospitals use checklists with unclear results, I decided to print one out and have it available just in case:

I'm not sure whether doctors would like it if I showed up with one in a hospital, but so far, luckily, that has not been needed.

Atul Gawande’s The Checklist Manifesto was originally published in 2009. By the time I read it a few years ago, the hard-earned lessons explained in this book had already trickled into hospitals across North America. It’s easy to look at the core concept and think of it as trivial. For decades, though, it was anything but obvious.

Atul Gawande walks readers through his experience of how the modern medical system fails. The 20th century saw vast increases in medical knowledge, both through a richer understanding of the body, and from swathes of new drugs, tests, and surgical procedures. And yet, mistakes are still made; diagnoses are missed, critical tests aren’t run, standard treatments aren’t given. Even when the right answer is known by someone – and often by everyone involved – patients slip through the cracks.

Fundamentally, medicine knows too much; even decades of medical training are insufficient for a doctor to know everything. A hospitalized patient’s treatment involves coordination between dozens of different specialized professionals and departments. The hospital environment itself is chaotic, time-pressured and filled with interruptions and distractions: far from ideal for human workers making high-stakes decisions. Patients are subjected to many interventions, most of which are complex and carry some risk; the average ICU patient requires roughly 178 daily care tasks (having worked as an ICU nurse myself, I believe it!), so even getting it perfect 99% of the time leaves an average of about two medical errors per day.
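The arithmetic behind that estimate can be checked in a few lines of Python. The 178 tasks and the 99% figure come from the text; treating every task as an independent trial with the same success rate is a simplifying assumption added here, as are the variable names.

```python
TASKS_PER_DAY = 178   # daily care tasks per ICU patient, as quoted in the text
SUCCESS_RATE = 0.99   # "perfect 99% of the time"

# Expected number of errors per patient per day.
expected_errors = TASKS_PER_DAY * (1 - SUCCESS_RATE)

# Chance that all tasks in a day go right, assuming independent tasks.
p_error_free_day = SUCCESS_RATE ** TASKS_PER_DAY

print(f"expected errors per day: {expected_errors:.2f}")
print(f"chance of an error-free day: {p_error_free_day:.1%}")
```

This gives about 1.78 expected errors per day, which is the "about two" in the text, and under the independence assumption only about a one-in-six chance that a given patient gets through a day with no errors at all.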

Medical professionals know how to perform all 178 of those tasks; they’ve probably done them hundreds if not thousands of times. The failure is one of reliability and diligence – skills for which Atul Gawande has a deep appreciation. In another book, Better, he says:

As Gawande notes, these failures of diligence are far from unique to healthcare. He spends much of the book describing his investigations of other fields and conversations with their various experts. (An adorable Atul Gawande trait is how he’s the sort of person who will befriend the construction crew working on a new wing for the hospital where he works, get himself invited to their offices, and spend multiple pages of his book enthusiastically describing their project management systems.)

Other professions face the same basic problem: the knowledge base and the complexity of the work grow until no single expert can fit all the relevant pieces into their head. The attentional load grows, and getting it right 99% of the time isn’t good enough. Mistakes are made, details go unnoticed, corners are cut by rushed and overworked staff, and (in the medical field at least) people die.

Fortunately for Gawande’s medical practice, he found that other industries had already explored and thoroughly tested some solutions. The basic problem of human reliability in complex situations is one that aviation had already run into in the early 20th century. The US Army Air Corps was testing new bomber aircraft designs, and one of these was Boeing’s Model 299. It was a miracle of engineering; it could hold five times as many bombs as the specs had requested, and flew faster and farther than any previous plane.

But during its first test flight, on October 30, 1935, the plane crashed. Technically, the equipment functioned perfectly. But the controls were so numerous and complicated that human error was almost inevitable. The pilot, overwhelmed, forgot to release a new locking mechanism on the elevator and rudder controls. As a newspaper at the time wrote, it was “too much airplane for one man to fly.”

The US Army Air Corps chose a different design, sacrificing performance for simplicity.

They didn’t give up, though; they ordered a handful of Model 299s, and handed them over to a team of test pilots, who put their heads together and tried to find a way for pilots to safely fly a plane that was too challenging for even the most highly-trained, expert human brains to handle.

They wrote a checklist. It was an easy list of tasks, ones that all pilots already knew to do – checking instruments, releasing brakes, closing doors and windows, unlocking elevator controls. And yet, however obvious, it made all the difference; the test pilots went on to fly a total of 1.8 million miles with no accidents, and the Army ordered thousands of the aircraft, later called the B-17. One index-card-sized checklist ended up giving the US Army a decisive advantage in WWII.

A checklist does not need to be long to be useful. One of the first checklists introduced in hospitals, aimed at decreasing central line infections in ICU patients, was trialled at Johns Hopkins Hospital in Baltimore in 2001. It had five steps. The doctor was supposed to:

All of these were steps that doctors were already supposed to be taking, and not even hard steps. (I know that I rolled my eyes at this list when I was introduced to it.) But hospital staff are busy, stressed, and sleep-deprived – and perfect 100% reliability is nearly impossible for humans even under ideal conditions. Part of the change being introduced was a social one: nurses were responsible for documenting that the doctor had carried out each step, and had a new mandate – and backup from management and hospital administration – to chide doctors who forgot items.

Which, it turned out, made all the difference. In the first ten days of the experiment, the line infection rate went from 11% to zero. Over the next fifteen months, there were two (2) infections total. Compared to projections based on previous rates, the simple protocol prevented 43 infections and eight deaths – not to mention saving the hospital millions of dollars.

And yet, even after decades, checklist-style interventions are not universal. Healthcare is still far less systematized than airline safety (with their booklet of procedures for every single kind of emergency), or construction (with its elaborate project management systems and clear lines of communication for any front-line worker to report concerns to the engineers). As Atul Gawande puts it:

From early on, the data looked conclusive; checklists in a hospital setting saved lives. But over and over, Atul Gawande mentions the difficulties he and others faced in getting buy-in from medical staff to adopt new checklists. They were too time-consuming. The items were confusing or ambiguous. The staff rolled their eyes at how stupidly obvious the checklist items were; whatever the data showed, it just didn’t feel like they ought to be necessary.

Making a good human-usable checklist takes a lot of workshopping. Airlines are still constantly revising their 200-page manual of individually optimized checklists for every possible emergency, as plane designs change and new safety data rolls in. (Amusing fact: the six-item checklist for responding to engine failure while flying a single-engine Cessna plane starts with “FLY THE AIRPLANE”.) Gawande and his team spent months refining their surgical safety checklist before they had something usable, and even now, it’s not universally adopted; implementing the list in new hospitals, especially in the developing world, means adjusting it for existing local protocols and habits, available resources, and cultural factors.

But even in the poorest hospitals, using it saves lives. And there’s a broader lesson to be learned, here. In any complex field – which encompasses quite a lot of the modern world – even very obvious, straightforward instructions to check off for routine tasks can cut down on the cognitive overhead and reduce “careless” human error, making perfect performance much more feasible.