This is a summary of a follow-up study conducted by the Existential Risk Observatory, which covers a greater number of media items than our previous study. To access our previous study, please follow this link. This is the second post on the data collected in the second iteration of research done by the Existential Risk Observatory. It covers a new aspect of the study: the views of the American general public on the idea of imposing an AI moratorium and their likelihood of voting. To read the first post, please follow this link.

Research Objectives

The objective of this study is to explore the attitudes of the American population towards the concept of imposing a six-month pause on training AI-models more capable than GPT-4 and their inclination to vote, both prior to and after receiving information about the potential existential dangers of AI. The approach taken involved analyzing the changes in the opinions of the study participants towards the AI moratorium, following their exposure to media interventions.

Measurements and Operationalization

The study employed three primary measurements - "Pause Laboratories," "Pause Government," and "Voting Likelihood" - to assess changes in participants' perceptions before and after the media intervention. The "Pause Laboratories" measurement evaluated participants' views on whether AI labs should pause training of AI systems that are more advanced than GPT-4 for at least six months. The "Pause Government" measurement gauged participants' opinions on whether the US government should impose a moratorium on training more powerful AI systems than GPT-4 if the labs do not implement a pause quickly. The "Voting Likelihood" measurement assessed participants' likelihood of voting in a referendum on temporarily halting the training of AI systems, rated on a scale from 0 (definitely not voting) to 10 (certain to vote).

To gather data, the study used Prolific, a platform that locates survey respondents based on predefined criteria. The study involved 300 participants, with 50 participants in each survey, who had to be US residents, fluent in English, and at least 18 years old.

Data Collection and Analysis

Data was collected through surveys in April 2023. The data analysis comprised three main sections: (1) comparing changes in the key indicators before and after the intervention, (2) exploring participants' views on the possibility of an AI moratorium and their likelihood of voting for it, and (3) assessing the number of participants who were familiar with or had confidence in the media channel used in the intervention.

Media Items Examined

- CNN: Stuart Russell on why A.I. experiments must be paused

- CNBC: Here's why A.I. needs a six-month pause: NYU Professor Gary Marcus

- The Economist: How to stop AI going rogue

- Time 1: Why Uncontrollable AI Looks More Likely Than Ever | Time

- Time 2: The Only Way to Deal With the Threat From AI? Shut It Down | Time

- FoxNews Article: Artificial intelligence 'godfather' on AI possibly wiping out humanity: ‘It's not inconceivable’ | Article

- FoxNews Video: White House responds to concerns about AI development | Video

Results

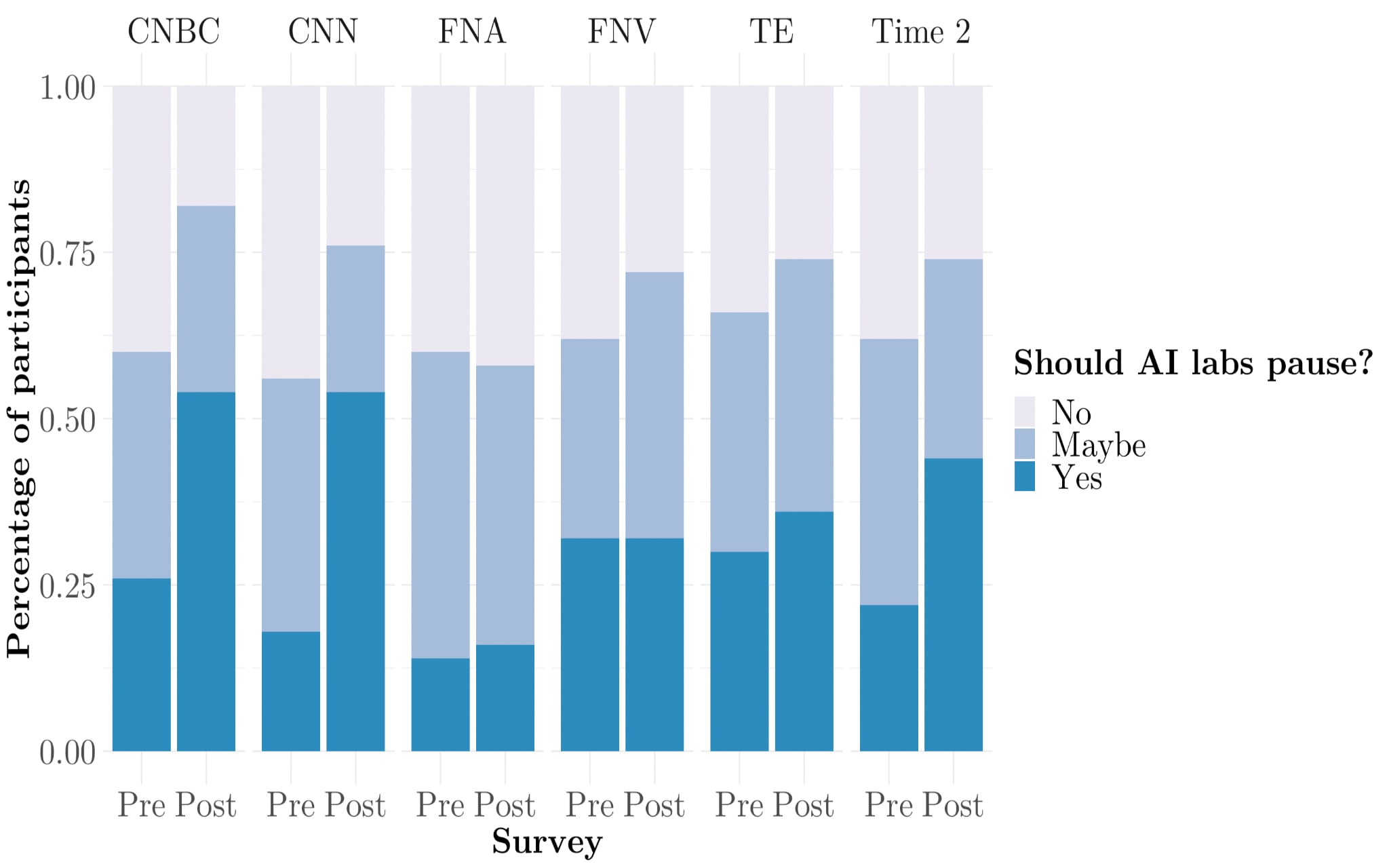

Pause Laboratories

The graph below illustrates the breakdown of participant responses (Yes, No, Maybe) to the Pause Laboratories indicator, which assesses their opinion on whether AI labs should halt the training of AI systems more advanced than GPT-4 for a minimum of six months. Prior to the intervention, Yes and Maybe responses together averaged 61 percent across surveys, with Yes alone averaging 24 percent.

After exposure to media interventions, most surveys showed an increase in both Yes and Maybe responses, except for the Fox News survey, which had a decrease in Yes responses. Yes and Maybe responses together averaged 73 percent, with Yes alone averaging 39 percent. The highest Yes rates after the intervention were found in the CNBC and CNN surveys at 54 percent.

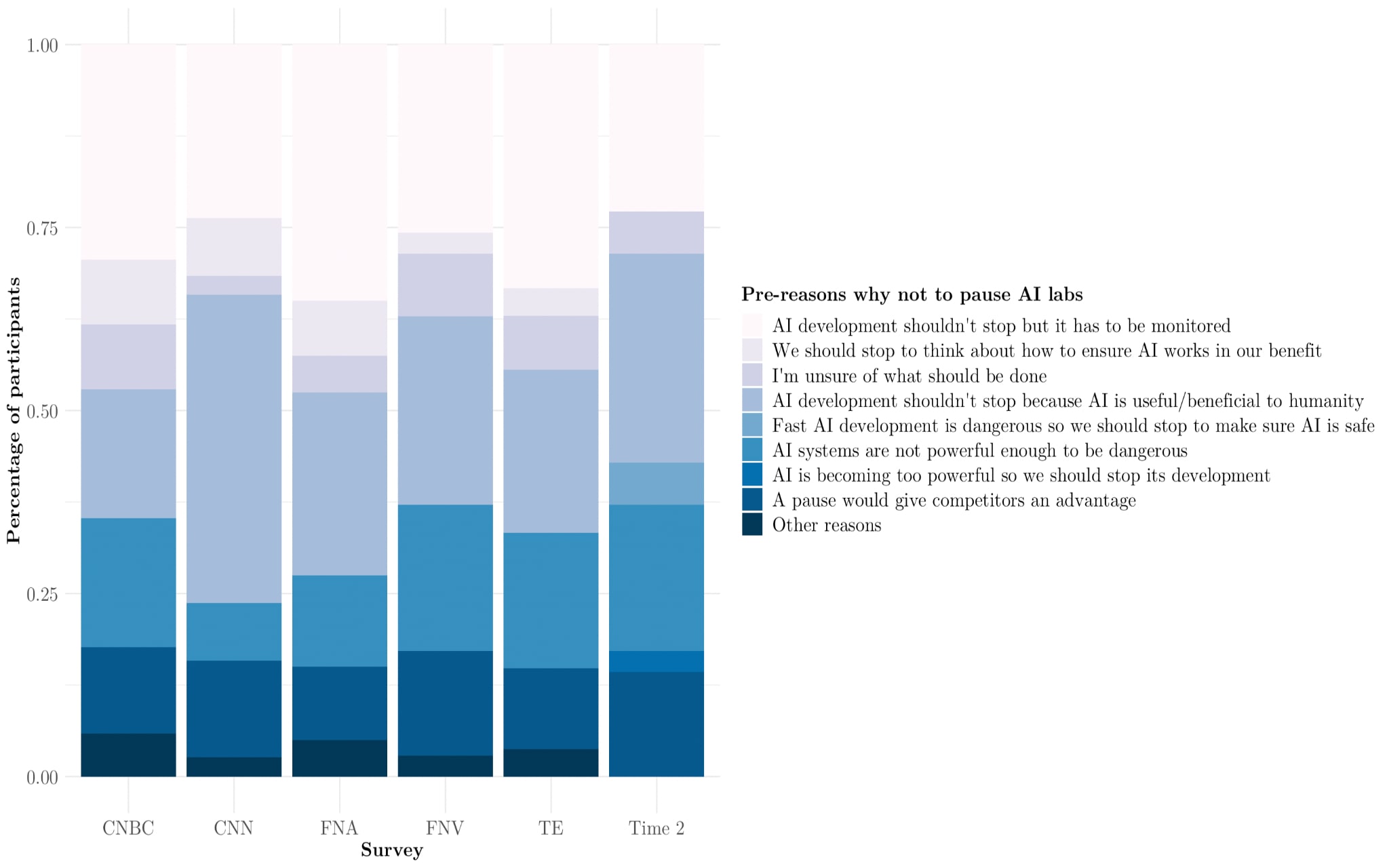

Pre Reasons for Participants that Answered No

Figure 2: Participants’ reasons given for answering No to Pause Laboratories indicator prior to intervention

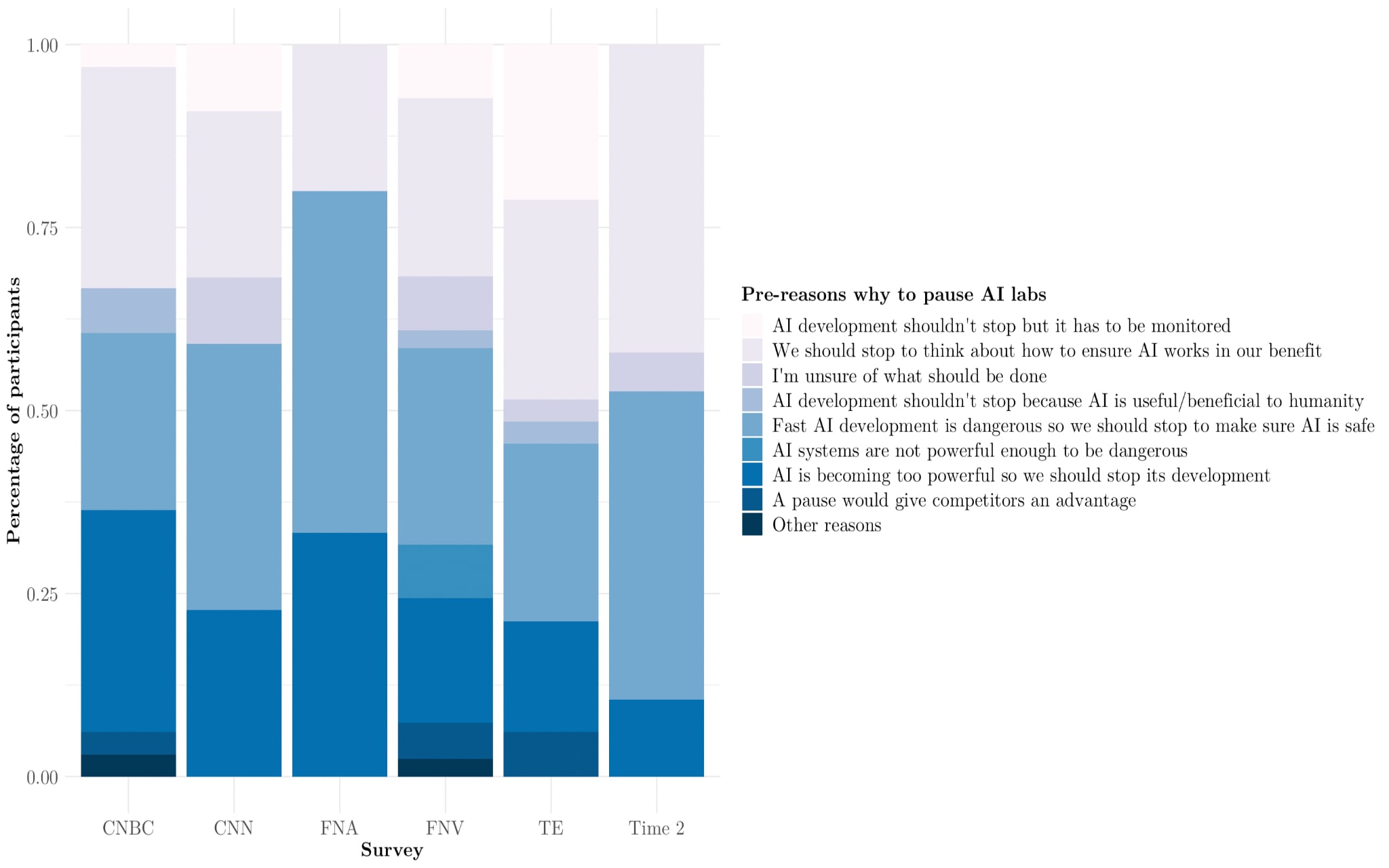

Pre Reasons for Participants that Answered Yes

Figure 3: Participants’ reasons given for answering Yes to Pause Laboratories indicator prior to intervention

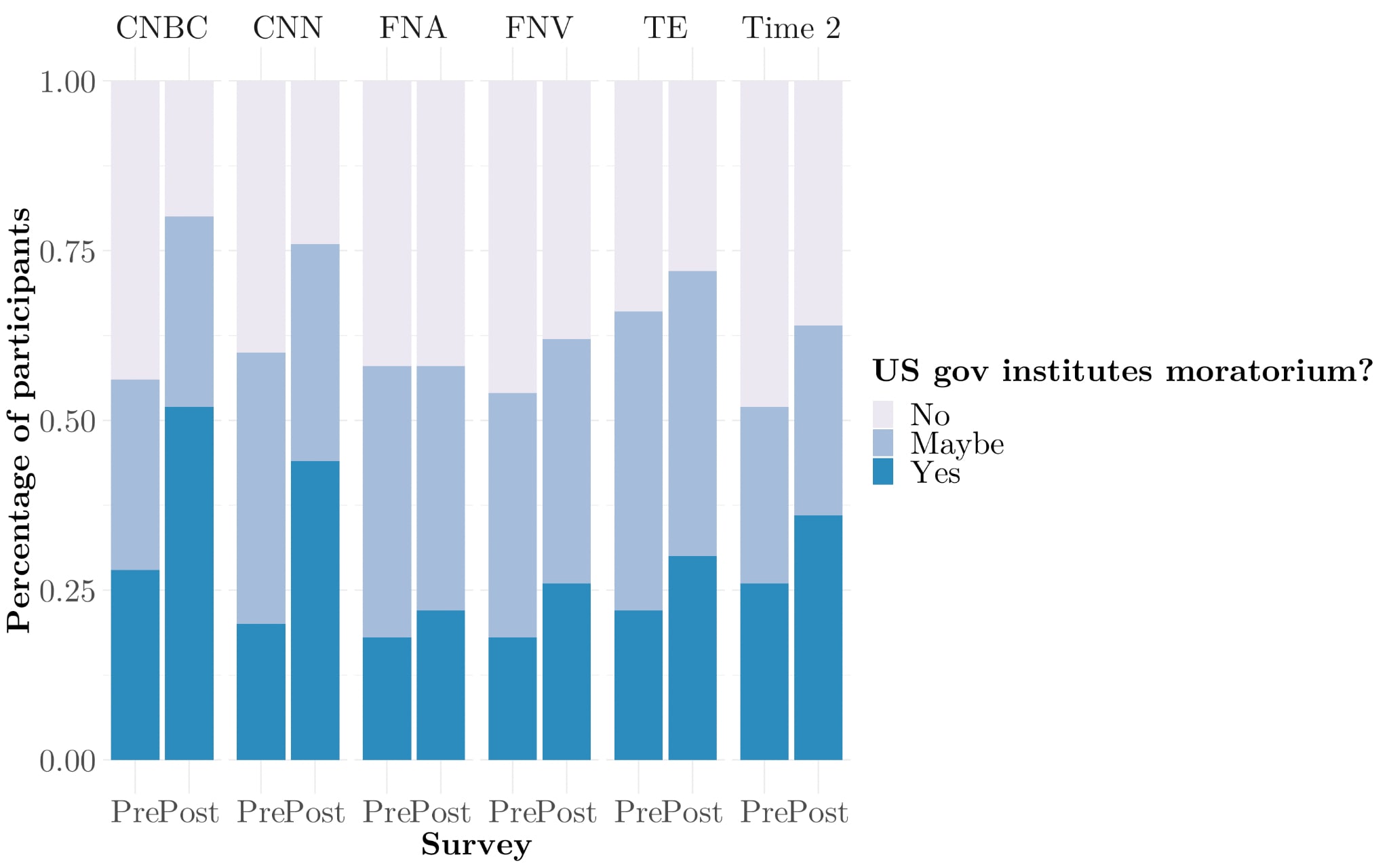

Pause Government

The graph below illustrates the breakdown of participant responses (Yes, No, Maybe) to the Pause Government indicator, which measures the opinions of participants on whether the US government should enforce a ban on the training of AI systems more advanced than GPT-4, in the event that labs do not implement a pause quickly enough. Prior to the intervention, Yes and Maybe responses together averaged 56 percent across surveys, with Yes alone averaging 22 percent.

After the intervention, all surveys except for the Fox News survey showed an increase in both Yes and Maybe responses. Yes and Maybe responses together averaged 69 percent, with Yes alone averaging 35 percent. The highest Yes rates after the intervention were found in the CNBC and CNN surveys at 52 and 44 percent, respectively.
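The reported averages for the two indicators can be turned into percentage-point changes with a little arithmetic. The sketch below is only an illustration: the numbers are the cross-survey averages quoted in the text, while the dictionary layout and the function names (`breakdown`, `point_change`) are our own and not part of the study's analysis. It assumes the three answers sum to 100 percent.

```python
# Cross-survey average shares (percent) as quoted in the text.
reported = {
    "Pause Laboratories": {
        "pre": {"yes_or_maybe": 61, "yes": 24},
        "post": {"yes_or_maybe": 73, "yes": 39},
    },
    "Pause Government": {
        "pre": {"yes_or_maybe": 56, "yes": 22},
        "post": {"yes_or_maybe": 69, "yes": 35},
    },
}


def breakdown(shares):
    """Split a reported (Yes+Maybe, Yes) pair into Yes / Maybe / No shares."""
    yes = shares["yes"]
    maybe = shares["yes_or_maybe"] - yes
    no = 100 - shares["yes_or_maybe"]  # assumes answers sum to 100 percent
    return {"yes": yes, "maybe": maybe, "no": no}


def point_change(indicator):
    """Percentage-point change (post minus pre) for each answer."""
    pre = breakdown(reported[indicator]["pre"])
    post = breakdown(reported[indicator]["post"])
    return {answer: post[answer] - pre[answer] for answer in pre}


for name in reported:
    print(name, point_change(name))
```

Read this way, the average Yes share rises by 15 points for Pause Laboratories and 13 points for Pause Government, while the No share falls by 12 and 13 points. The implied average Maybe share stays roughly flat, within the rounding of the reported figures.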

Figure 4: Change in public opinion on AI moratorium per the Pause Government indicator after the intervention across surveys

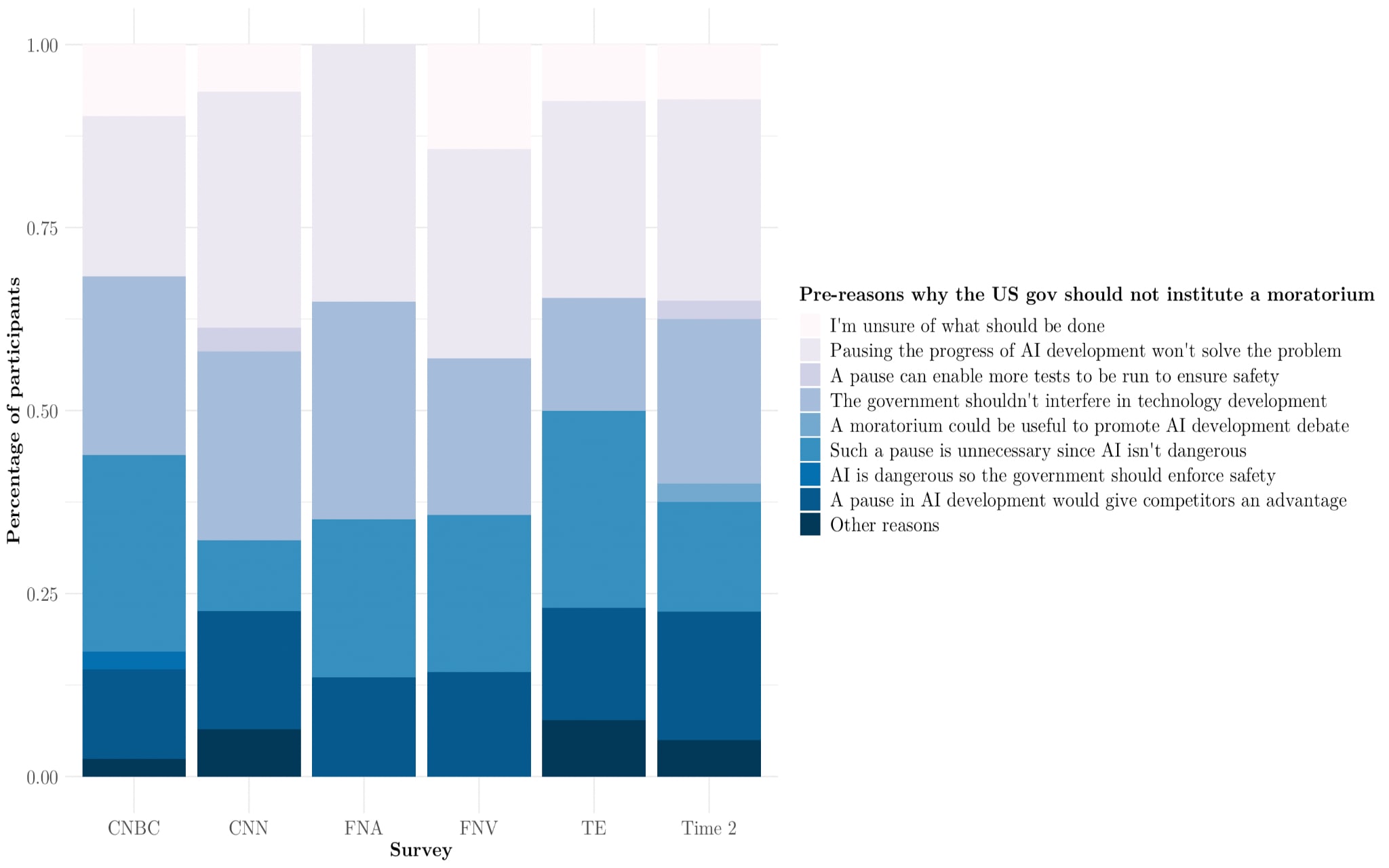

Pre Reasons for Participants that Answered No

Figure 5: Participants’ reasons given for answering No to Pause Government indicator prior to intervention

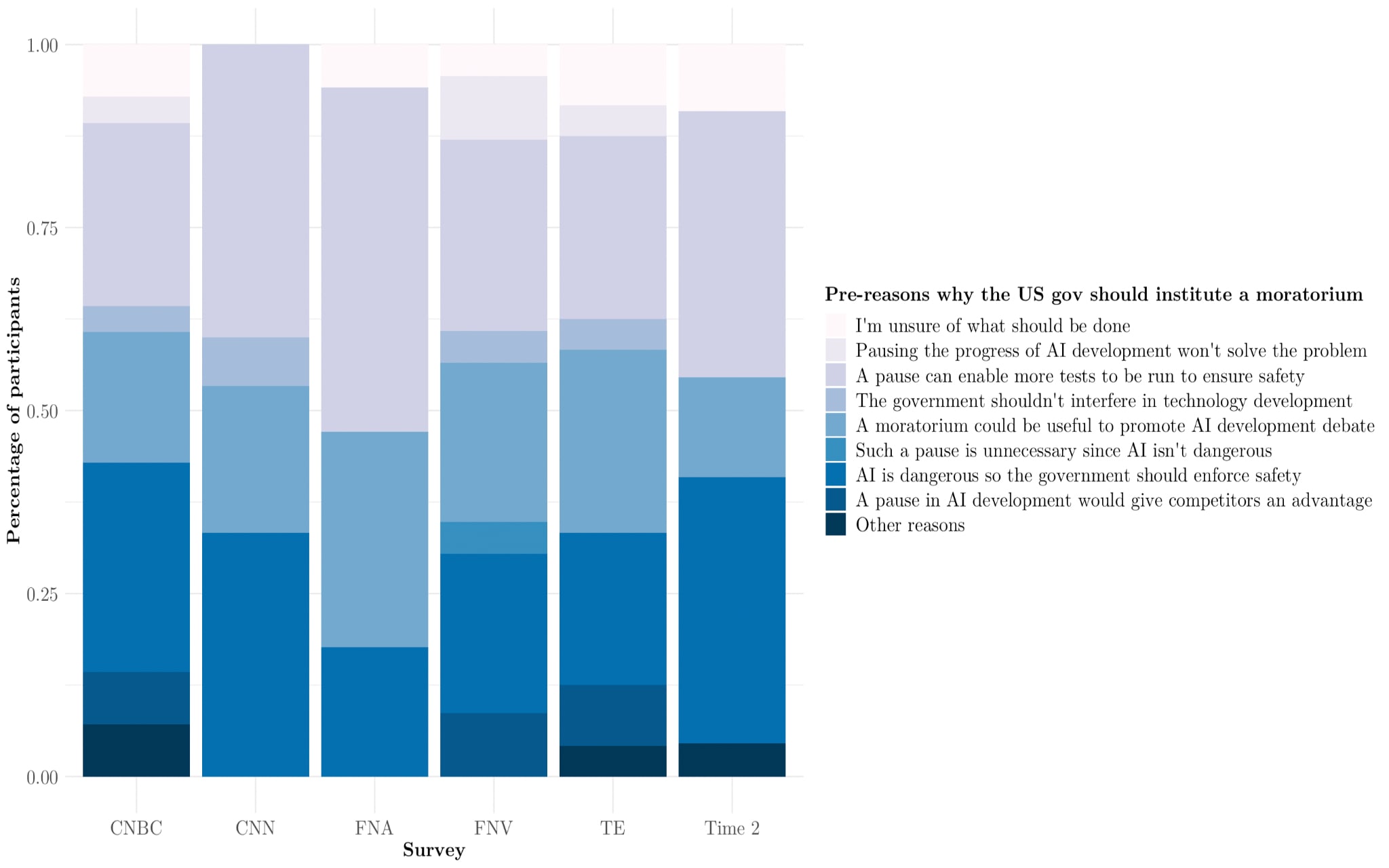

Pre Reasons for Participants that Answered Yes

Figure 6: Participants’ reasons given for answering Yes to Pause Government indicator prior to intervention

Pause Laboratories + Pause Government

This section investigates the relationship between the Pause Laboratories and Pause Government indicators and their influence on public perception towards AI governance. Specifically, it explores whether individuals who support a pause in AI development also believe that government intervention is crucial, and vice versa. By examining the intersection of these indicators, this research aims to shed light on the public's perceptions of the roles of both private and public entities in regulating AI development, providing valuable insights into the overall sentiment towards responsible AI governance.

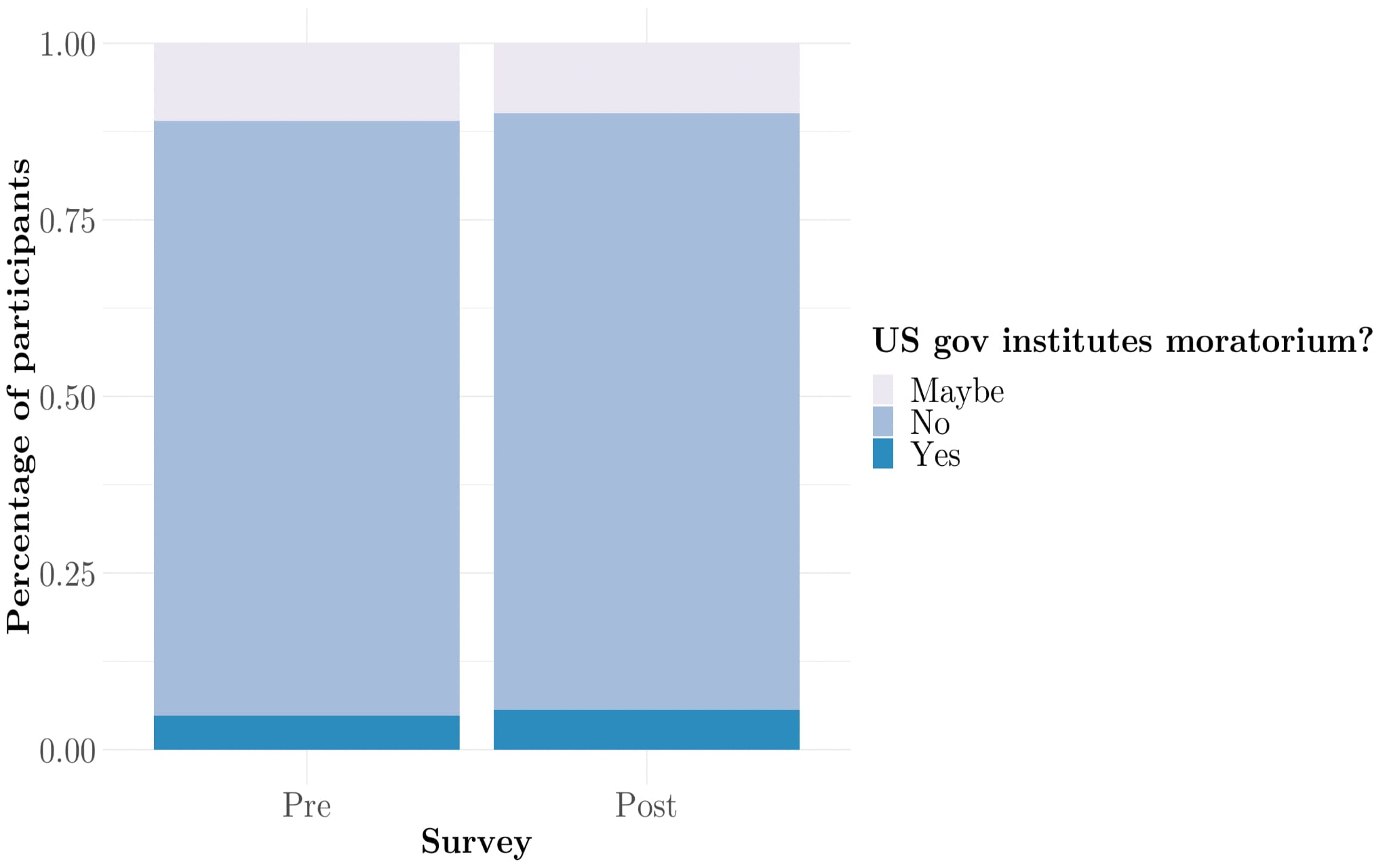

The graph presented below illustrates that a large majority (about 85 percent) of respondents who answered No to whether AI labs should pause training of systems beyond GPT-4 for at least six months also preferred no government intervention to enforce a moratorium. This held both before and after the intervention.

Figure 7: Change in public opinion on AI moratorium per the Pause Government indicator of participants that chose the answer No for Pause Laboratories

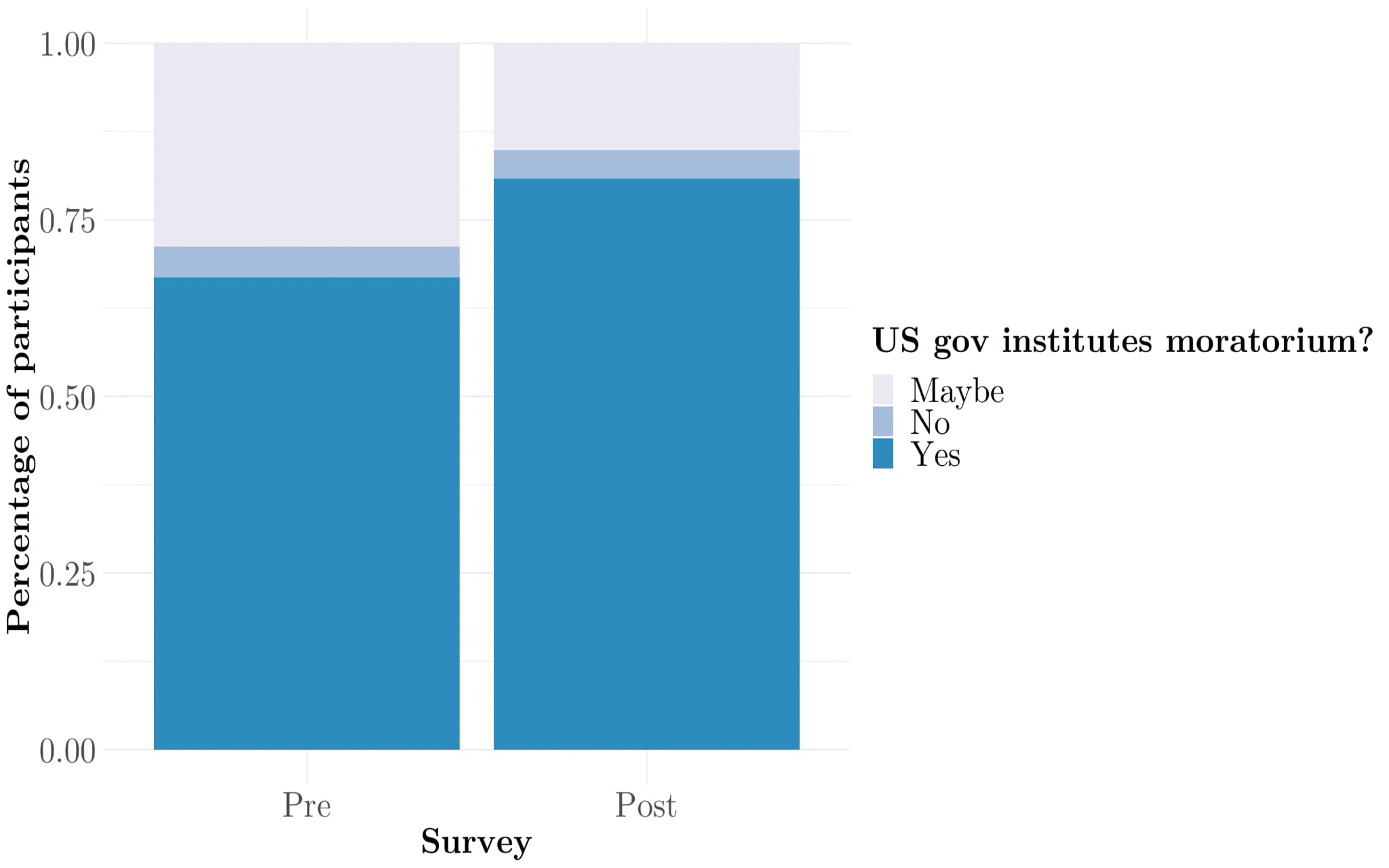

The graph presented below demonstrates a shift, before and after the intervention, in how respondents who chose Yes on whether AI labs should pause training beyond GPT-4 for at least six months viewed the government's role in enforcing a moratorium. The most significant change was the rise in the share of these participants who selected Yes to a government-imposed moratorium, from 70 percent to 80 percent after the intervention.

Figure 8: Change in public opinion on AI moratorium per the Pause Government indicator of participants that chose the answer No for Pause Laboratories
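The comparison in this section is a conditional share: among respondents who gave a particular answer on Pause Laboratories, what fraction gave each answer on Pause Government. A minimal sketch of that computation follows. The function name `conditional_shares` and the toy responses are hypothetical and are not the study's data or code.

```python
from collections import Counter


def conditional_shares(pairs, labs_answer):
    """Share of each Pause Government answer among respondents who gave
    `labs_answer` on Pause Laboratories.

    `pairs` holds one (labs_answer, government_answer) tuple per respondent.
    """
    subset = [gov for labs, gov in pairs if labs == labs_answer]
    if not subset:
        return {}
    counts = Counter(subset)
    return {answer: count / len(subset) for answer, count in counts.items()}


# Made-up toy responses for illustration only, NOT data from the study.
toy = [
    ("Yes", "Yes"), ("Yes", "Yes"), ("Yes", "Maybe"), ("Yes", "Yes"),
    ("No", "No"), ("No", "No"), ("No", "Maybe"), ("Maybe", "Maybe"),
]

print(conditional_shares(toy, "Yes"))
print(conditional_shares(toy, "No"))
```

Running the same function on the pre-intervention and post-intervention answers separately would give the two bars compared in each figure.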

Voting Likelihood

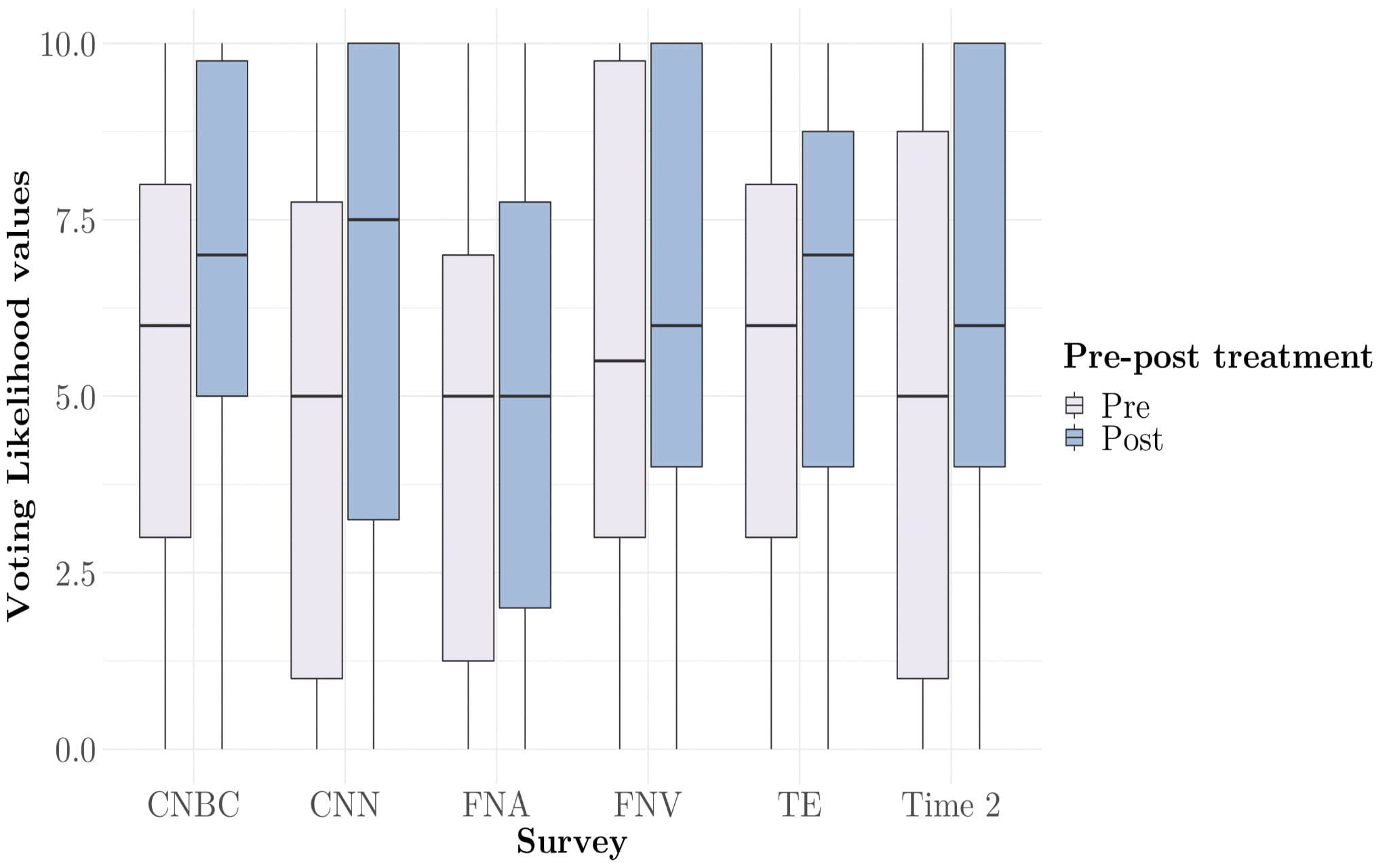

The graph presented below shows the distribution of the Voting Likelihood indicator, which measures the probability of participants voting in a referendum on temporarily pausing the training of AI systems more advanced than GPT-4. Across all surveys, there was a general increase in both the median and mean values of the voting likelihood from before to after the intervention, except for the Fox News Article survey, which showed no change in the median value. The CNN and Time (Eliezer Yudkowsky) surveys exhibited the most substantial increase in mean value, with an increase of 1.5 points, followed by CNBC with an increase of 1.2 points. The FoxNews surveys reported the lowest mean increase in voting likelihood, with an increase of only 0.1 for the article version and 0.3 for the video version.

Figure 9: Pre-post summary of the distribution of values from a scale from 0 to 10 for the Voting Likelihood indicator across surveys
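For readers who want to reproduce this kind of pre-post summary on their own survey data, a minimal sketch is shown below. It assumes each respondent gives one 0 to 10 rating before and one after the intervention. The function name `summarize_shift` and the toy ratings are hypothetical and are not taken from the study.

```python
from statistics import mean, median


def summarize_shift(pre, post):
    """Pre/post mean and median of 0-10 ratings, plus the change in each."""
    if len(pre) != len(post):
        raise ValueError("pre and post must cover the same respondents")
    return {
        "mean_pre": mean(pre),
        "mean_post": mean(post),
        "mean_change": mean(post) - mean(pre),
        "median_pre": median(pre),
        "median_post": median(post),
        "median_change": median(post) - median(pre),
    }


# Made-up toy ratings for illustration only, NOT data from the study.
toy_pre = [2, 5, 5, 7, 10]
toy_post = [4, 5, 6, 8, 10]

print(summarize_shift(toy_pre, toy_post))
```

Reporting both statistics is useful because the mean can move while the median stays put, as in the Fox News Article survey described above.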

Conclusion

Based on the results of this research, it can be suggested that the general American public's opinions on the implementation of an AI moratorium can be influenced by exposure to media about the existential risks of AI. The likelihood of voting in a referendum on a temporary pause on the training of AI systems more advanced than GPT-4 also increased after exposure to media interventions, indicating an increased interest in the outcome of a government intervention in regulating AI development.

The survey findings indicate that the majority of participants in all surveys held the view that the development of AI beyond GPT-4 should either be halted or considered for a pause within the next six months. The proportion of participants who answered Yes or Maybe increased from an average of 61 percent before the intervention to 73 percent after the intervention, with a mean of 39 percent responding Yes after the intervention. Additionally, the survey results showed an upward trend in the number of participants who supported government intervention to impose a moratorium on AI development beyond GPT-4, after being informed of potential risks. This proportion increased from an average of 56 percent answering Yes or Maybe before the intervention to 69 percent after the intervention, with a mean of 35 percent responding Yes after the intervention. Furthermore, the data suggests that most respondents who believed that AI laboratories should pause development also believed that the government should enforce a moratorium, with an average of 70 percent before the intervention and 80 percent after the intervention.

As a final note, the surveys revealed variations in responses across different media sources, suggesting the importance of media framing and messaging in shaping public opinion on complex issues such as AI existential risk. This underscores the need for future research to investigate the impact of narrative framing in media intervention. In general, these findings highlight the need for continued public discourse and education on the capabilities of AI and the risks that come with them, as well as the importance of responsible development and governance in this rapidly advancing field.

What do you think of your sample size? Is it hard to draw inferences from 300 people?

Also I'd be careful about drawing inferences from data via Prolific without attempts to reweigh the sample for representativeness. The raw Prolific crew is quite liberal and quite educated. 74% of them voted for Joe Biden over Donald Trump.
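As a rough illustration of what reweighting could look like, here is a minimal post-stratification sketch on a single variable (2020 vote). The 74/26 sample split comes from the figure above. The 52/48 target split is only an assumed, approximate two-party share used for illustration, and the function names are hypothetical. A real adjustment would likely weight on several variables at once.

```python
def poststratification_weights(sample_share, target_share):
    """Weight per group = target population share / sample share."""
    if set(sample_share) != set(target_share):
        raise ValueError("sample and target must cover the same groups")
    return {g: target_share[g] / sample_share[g] for g in sample_share}


def weighted_share(responses, weights, answer):
    """Weighted fraction of respondents giving `answer`.

    `responses` holds one (group, answer) tuple per respondent.
    """
    total = sum(weights[group] for group, _ in responses)
    hits = sum(weights[group] for group, given in responses if given == answer)
    return hits / total


sample_share = {"biden": 0.74, "trump": 0.26}  # Prolific split quoted above
target_share = {"biden": 0.52, "trump": 0.48}  # ASSUMED illustrative target

weights = poststratification_weights(sample_share, target_share)
print(weights)
```

With these inputs, Trump voters are weighted up and Biden voters down, so any answer that differs by vote would shift in the reweighted totals.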

Hi Peter, thanks for your comment. We do think the conclusions we draw are robust based on our sample size. Of course it depends on the signal: if there's a change in e.g. awareness from 5% to 50%, a small sample size should be plenty to show that. However, if you're trying to measure a signal of only 1% difference, your sample size should be much larger. While we stand by our conclusions, we do think there would be significant value in others doing similar research, if possible with larger sample sizes.

Again, thanks for your comments, we take the input into account.
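To make the signal-versus-sample-size point concrete, here is a minimal sketch using the standard normal-approximation formula for comparing two proportions. It treats the two groups as independent, which is a simplifying assumption: the surveys here are pre-post on the same participants, so a paired analysis would differ. The function name `n_per_group` and the defaults (5 percent two-sided significance, 80 percent power) are our own choices.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_group(p1, p2, alpha=0.05, power=0.80):
    """Approximate sample size per group needed to detect p1 vs p2
    with a two-sided two-proportion z-test."""
    if p1 == p2:
        raise ValueError("p1 and p2 must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p1 - p2) ** 2)


print(n_per_group(0.05, 0.50))  # large shift: a few dozen at most
print(n_per_group(0.50, 0.51))  # one-point shift: tens of thousands
```

Under these assumptions, a jump from 5% to 50% needs only a handful of respondents per group, while a one-point difference needs tens of thousands.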

I am a big fan of this research! Good to measure the effectiveness of an intervention.

And awesome you jumped on this so quickly after the pause letter!

Hopeful stuff!

Maybe you could use this study to get funding for a bigger study? Maybe hire Americans to go door to door to ask them to participate for a more representative sample? Or get a bigger research corp to do it for you?

A question. I think there is a mistake in the caption of Figure 8. It says:

Figure 8: Change in public opinion on AI moratorium per the Pause Government indicator of participants that chose the answer No for Pause Laboratories

But from what it says above the figure, I get the impression that this figure is about the subset of people that answered Yes to the Pause Laboratories indicator. That this subset of people's results on the government pause indicator are shown. Am I correct? In the figure 8 description, it says it shows the people who answered No for the Pause Laboratories.

Interesting results!

Does "TE" in the graphs mean "Time 1: Why Uncontrollable AI Looks More Likely Than Ever | Time" and "Time 2" mean "Time 2: The Only Way to Deal With the Threat From AI? Shut It Down | Time"? I was a bit confused.

Thanks Gabriel! Sorry for the confusion. TE stands for The Economist, so this item: https://www.youtube.com/watch?v=ANn9ibNo9SQ