Fermi Paradox

This post has two parts. First, I outline some ways to evaluate arguments about the Fermi paradox, showing which kinds of answers are unlikely to solve it, along with some common arguments against the Early Filter. Second, I give my current evaluation of what I consider a likely scenario: a form of the zoo hypothesis motivated by universal game theory, along with some related science-fictiony scenarios that we could find ourselves in.

Fermi Paradox and what is unlikely to solve it

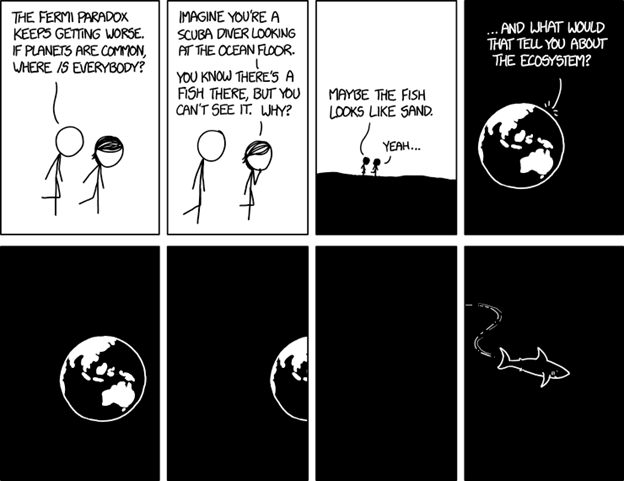

The Fermi paradox is roughly the following. We look at the night sky and don’t see anyone there. Presumably, in a future advanced human civilization, some parts of humanity would want to colonize other planets and even other star systems. If there existed civilizations like ours, some of them would want to colonize other systems, including, perhaps, ours. We see no evidence of that happening.


Someday will all this be ours?

There are two primary solutions to this: Early Filter and Late Filter. Early Filter solutions state that we are the only civilization because we passed a particularly difficult threshold, such as the creation of life. Late Filter solutions state that we are the only civilization because there is a particularly difficult threshold between where we are right now and colonizing space. The Late Filter is often equated with extinction, but if we decouple the two, several possibilities for a non-lethal Great Filter appear. One example is that space colonization somehow proves more difficult than our fiction likes to imagine. Thus, there is a filter between us and colonization, but it does not mean humanity’s extinction. So, while finding life on Mars is really bad news (it would suggest that the origin of life is not the hard step, which makes a filter ahead of us more likely), finding a hyper-destructive form of radiation everywhere outside star systems would be good news with regard to our survival in the Solar System. It would still be bad news from the perspective of colonizing the galaxy.

Also, it’s important to try different reference classes to understand how the Fermi paradox might apply to them. Changing the reference classes of “life,” “civilization,” and “galaxy” can give you a better perspective on what types of “solutions” don’t work.

For example, if you think that alien civilizations exist, but are made from neutrinos and thus don’t interact with us, this still doesn’t solve the problem of “what happens to carbon-based life?” You can reconstruct the Fermi paradox for the following questions:

Where is intelligent life?

Where is intelligent carbon-based life?

Where is intelligent carbon-based bipedal life?

If you restrict the reference classes in this fashion, Early Filters become more and more likely. For example, if we ask the absurd reference class question of why there are no civilizations exactly identical to ours, it’s somewhat obvious that we keep going through a chain of progressively lower-probability events that lead to the exact world state. Thus, we build up enough data to claim an Early Filter in a reasonably sized (non-infinite) universe.

However, if we find ourselves in a situation where we find lots of carbon-based bacteria outside the solar system and only silicon-based biology civilizations, then the paradox reappears for carbon-based biology civilizations. In fact, that would point to a Late Filter for us.

We can also ask why there aren’t other civilizations on Earth, in the Solar System, or in the Milky Way. The simple answer for Earth: humans killed or interbred with the other humanoids. The simple answer for the Solar System: too few habitable planets. We don’t know about the Milky Way, but it’s big enough to face a smaller version of the Fermi paradox of its own.

However, if we hypothetically find remnants of many civilizations that have risen and fallen over the course of hundreds or thousands of years, then we would know that a civilizational barrier has existed on Earth as well. This would add more potential bits to the Early Filter and in some ways be good news as well. In other ways it could still be bad news because it shows the ability of a human society to fail.

Will the next one be better?

Thus, answers of the type “we live in an uninhabited area of the universe” are still hiding the paradox without solving it. If this area is large enough, like a galaxy or a cluster, then it will have a paradox of its own, although it’s also more likely to be resolved through an Early Filter or a “we’re here first” type of situation. If the area is small, we struggle to imagine exactly why a galaxy-spanning civilization would ignore it.

Panspermia, or the idea that life was brought here by an asteroid, is another way to hide the paradox. If life was brought here, then how many other planets has it come to? What obstacles did life face on those planets before becoming a space-faring civilization? Are they before or after us? It seems to not affect the paradox in the slightest.

Basically, the idea of shifting reference classes is an important check on the reasoning here to not hide the paradox elsewhere. At the end of the day, the paradox remains and nearly everything collapses into possibilities of either Early or Late Filter.

An important possibility is of course the idea that there is a Late Filter and it is destruction by an out-of-control AI. Several people dismiss this because an AGI would colonize the galaxy quickly as well. I am not sure the dismissal works that well. AI mind-space is large. The mind-space of “AIs that can destroy their host civilizations” is also large. It’s not obvious to me that every single one of those designs is automatically a galaxy-conquering maximizer. While that is a convergent goal of many agents, it’s possible that the common AGI failure modes have civilization extinction as a side effect, but not the destruction of galactic space. So, I maintain it’s still possible that AGI failure is the Great Filter, but it’s a form of failure that does not lead to a paperclip universe.

There are several other suggestions people consider as possibilities, however they all seem to be very similar in principle to either Early or Late Filter. I’ll go through the ones described here, since this is a fairly accessible list of several other possibilities.

a) “We’re here first” is in many ways a form of the Early Filter. The universe is 13-14 billion years old. Had human civilization appeared earlier in the age of the universe, we would consider “Early Filter” and “We’re here first” as separate hypotheses, but at this point they seem to collapse into one.

b) “The entire concept of physical colonization is a hilariously backward concept to a more advanced species.” To me this is another Late Filter in disguise. What happens to the portion of the human population which wants to colonize other planets? If every civilization somehow forgoes physical colonization, that must mean that fraction somehow disappears, either through force or persuasion. It’s not as scary a possibility as extinction, but it is certainly the extinction of the idea of colonization. Thus, it is a form of non-lethal Late Filter, but it is still a non-trivial change to the status quo of the current civilization.

c) There are scary predator civilizations out there, and most intelligent life knows better than to broadcast any outgoing signals and advertise their location. Again, this is another Late Filter. It’s a late filter on extinction, colonization and contact as well.

I think most intelligent life should in fact know better than to broadcast serious outgoing signals, but for a different reason discussed below.

d) There’s only one instance of higher-intelligent life—a “superpredator” civilization (like humans are here on Earth)—that is far more advanced than everyone else and keeps it that way by exterminating any intelligent civilization once they get past a certain level. Again, this is another Late Filter.

f) There’s plenty of activity and noise out there, but our technology is too primitive and we’re listening for the wrong things. Similar to: higher civilizations are here, all around us, but we’re too primitive to perceive them. This very well might be a possibility, but it folds into the question of why the other civilizations would not make themselves known to us. The question here is less about what we could or could not perceive and more about the functioning of other civilizations. To me this folds into the next possibility, the zoo hypothesis, which is discussed below.

I generally think a form of Late Filter, whether existentially threatening or not, is more likely than the Early Filter simply due to the sheer number of potentially habitable planets in existence. There are an estimated 40 billion in the Milky Way alone and up to 10^21 in the universe [give or take a factor of 10]. Clearly, if we observed a universe with only one galaxy, it would be less paradoxical to be alone. In a way, each doubling of planets is a bit of evidence against the Early Filter.

It’s hard to quantify the exact probability here. We could ask: of a sample of universes with this many habitable planets, how many have Early Filters and how many have Late Filters? However, we have no idea about the sample distribution of universes with life, the relationship between the size of a universe and its habitability, etc.

Let’s consider an overly simple model of a set of 100 universes with 2^N habitable planets each, where the probability of a civilization existing at our level on each planet is constant. Then the question of how much evidence each extra planet gives against the Early Filter still heavily depends on the probability of the creation of the civilization in the first place. If the prior on life arising is p = 2^-1000, then you might just be a single civilization in a large universe (or multiverse, for that matter). If the prior on life is p = 2^-30 and you still don’t see anyone around, then it must obviously be a Great Filter type of universe.
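To make the arithmetic concrete, here is a minimal sketch of that toy model. It assumes N = 70 (my choice, since 2^70 is roughly the 10^21 planets mentioned above) and treats planets as independent, so the chance that nobody else exists is approximated by exp(-n * p). The function names are made up for illustration.

```python
import math


def log2_expected_civilizations(log2_planets: float, log2_p: float) -> float:
    """Return log2 of the expected number of civilizations, i.e. log2(2^N * p)."""
    return log2_planets + log2_p


def probability_nobody_else(log2_planets: float, log2_p: float) -> float:
    """Approximate chance that no other planet produces a civilization.

    Uses (1 - p)^n ~ exp(-n * p), which holds for small p, and ignores
    the difference between n and n - 1 planets.
    """
    expected = 2.0 ** log2_expected_civilizations(log2_planets, log2_p)
    return math.exp(-expected)


N = 70  # assumption: 2^70 is about 1.2e21 habitable planets

for log2_p in (-1000, -30):
    print(
        f"p = 2^{log2_p}: expected civilizations = "
        f"2^{log2_expected_civilizations(N, log2_p):.0f}, "
        f"P(nobody else) = {probability_nobody_else(N, log2_p)}"
    )
```

With p = 2^-1000 the expected number of other civilizations is about 2^-930, so being alone is the default outcome. With p = 2^-30 it is about 2^40, so an empty sky needs some other explanation.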

However, it’s not clear that life arising on a planet is that rare of an event. While it’s still a non-trivial event, the ability of chemical soups to produce key building blocks of life shows it might not be that rare. The other argument against an Early Filter is that we don’t have that many good Early Filter candidates besides the origin of life. Again, this is biology, which is not my general area, but it still seems we should not be willing to assign a hyper-low probability to an event that we know occurred.

An interesting aside here is that if there is in fact an Early Filter, this generally still raises the question of how we became so lucky as to exist in this universe. I generally assume that some form of the multiverse exists regardless of Early or Late Filter. However, if the filter is Early, this is more evidence of a large multiverse, because observing low-probability events strongly suggests the existence of many “tries” or a large sample space of universes. While it’s not necessarily an argument against the Early Filter, it is one option for where any extra “bits of selection” need to come from.

The zoo hypothesis.

Thus, this chain of reasoning posits a Late Filter, which might mean either extinction or a lack of contact for another reason. While I can by no means rule out our extinction, it’s worth pondering what we could observe if the universe was full of civilizations more advanced than ours.

When an advanced civilization encounters a less advanced one, we generally expect the technological differential to be significant enough for the advanced civilization to easily destroy the weaker one if it wanted to. We have examples of this happening on Earth. This is especially true for space-faring civilizations vs non-spacefaring ones. Even something as simple as ramming a large spaceship into a planet could be devastating.

With that in mind, the game theory of space starts out fairly simple. The advanced civilization can choose to either destroy (I am going to call this defect) or not destroy (I am going to call this cooperate) the weaker one. Destroying weaker civilizations may seem safer if you are afraid their rate of technological progress could outpace yours, which is of course possible with lots of post-singularity civilizations.

Pessimists think that we should not broadcast our location on the off-chance that there are defect-only civilizations (also known as berserkers) out there. They also give implicit advice to destroy other civilizations if we get the chance. Optimists, as exhibited by humans sending signals into space and by certain fictional works, think that civilizations will get along fine or that there are mostly cooperate-only civilizations out there. There are also more complex possibilities. Of particular interest are FairBot-style civilizations, which destroy berserkers and cooperate with other civilizations conditionally on whether they predict the weaker civilization would have cooperated with them had the roles been reversed.

The question becomes what our prior should be on the distribution of civilizational strategies, and what our civilizational strategy should be if we encounter a weaker civilization out there.

Of course, this prior informs an equilibrium. If everyone believes there are only defect-civs you might as well be a defect civ. If everyone believes there are only FairBot civs, being a defect-civ is a bad idea. More complex strategies are also on the table, such as civilizations that are FairCivs, but that destroy cooperate-only civilizations basically as a punishment for not destroying defect-only civs. I’ll call those PoliceCivs.
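The strategies named so far can be written down as a small decision table. The sketch below is only my reading of the descriptions above, not a full model: the real FairBot idea relies on predicting (or proving) what the other side would do, whereas here the prediction is hard-coded, and FairCivs are simply assumed to cooperate with each other. The `Civ` enum, the `spares` and `survival_probability` functions, and the population shares are all illustrative assumptions.

```python
from enum import Enum


class Civ(Enum):
    DEFECT = "defect-only (berserker)"
    COOPERATE = "cooperate-only"
    FAIR = "FairCiv"
    POLICE = "PoliceCiv"


def spares(stronger: Civ, weaker: Civ) -> bool:
    """Return True if the stronger civilization leaves the weaker one alone."""
    if stronger is Civ.DEFECT:
        return False  # berserkers destroy everyone
    if stronger is Civ.COOPERATE:
        return True  # cooperate-only civs spare everyone
    if stronger is Civ.FAIR:
        # Spares anyone who would have spared it with roles reversed;
        # in this table only berserkers fail that check.
        return weaker is not Civ.DEFECT
    if stronger is Civ.POLICE:
        # Like a FairCiv, but also punishes cooperate-only civs
        # for not destroying berserkers.
        return weaker in (Civ.FAIR, Civ.POLICE)
    raise ValueError(f"unknown strategy: {stronger}")


def survival_probability(weaker: Civ, mix: dict) -> float:
    """Chance that a weaker civ is spared by a stronger civ drawn from `mix`.

    `mix` maps each strategy to its share of the population (shares sum to 1).
    """
    return sum(share for stronger, share in mix.items() if spares(stronger, weaker))


# Illustrative shares only: a universe that is mostly FairCivs.
mostly_fair = {Civ.FAIR: 0.97, Civ.DEFECT: 0.01, Civ.COOPERATE: 0.01, Civ.POLICE: 0.01}
# A universe of nothing but berserkers.
all_defect = {Civ.DEFECT: 1.0}

for name, mix in (("mostly fair", mostly_fair), ("all defect", all_defect)):
    for civ in Civ:
        print(f"{name}: {civ.value} survives with p = {survival_probability(civ, mix):.2f}")
```

In the mostly-fair mix a berserker is spared only by the rare cooperate-only civs, which matches the claim that being a defect-civ is a bad idea there. In the all-defect mix nobody is spared regardless of strategy, so there is no survival penalty for defecting.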

From a Functional Decision Theory perspective you would ask the question: what would you do if you didn’t know which type of universe you would end up in? A similar question is what you would do to maximize the chances of your survival not only in this universe but also across the Tegmark multiverse. How would you act if there is a chance you were being simulated? What if your decisions were reflected in the universal bit-sequence prior accessible to all possible universes?

My current view is that we should believe the universe is populated by civilizational strategies which have the FairBot mentality as a baseline. My preliminary modelling, which assumes a random small chance of each of the basic strategies, suggests that you could also be in an equilibrium of PoliceCivs, but not Meta-PoliceCivs (Civs that attack FairCivs for not punishing cooperate bots for not punishing defect civs). It’s an interesting version of the “no more than 3 levels of meta” rule.

How would this play out in practice? It’s very likely that sending weird signals to a weaker civilization with the purpose of disrupting its technological advances is a defection. It’s very likely that sending any signals at all could be mis-interpreted as a defection.

Which brings me to the zoo hypothesis, where advanced civilizations might know of our existence but choose not to contact us. Normally people talk about the zoo hypothesis because of an expectation that other civilizations would have some sort of consideration for our suffering. While this is nice, I find it somewhat unlikely. It’s a bit more plausible that there is a universalized strategic and game-theoretical reason to not make contact, one that relies on the aliens’ own self-preservation and doesn’t rely on aliens taking our wellbeing into account directly. This has also been explored in fiction, most importantly in the Star Trek Prime Directive. Even in fiction there are a number of complexities involved here, such as dealing with existing wartime situations and potentially disruptive technology or hostility towards the existing civilization.

How do civilizations identify defectors?

The core of the FairBot strategy is the ability to tell if a weaker civilization is a defector or even a “potential defector.” It’s not clear if there exists a reliable mechanism for doing so, but there are several possibilities. This is even more speculative and could be in the realm of PURE SCIENCE FICTION.

a) Direct simulation

One simple way that an advanced civilization (call it Civ A) could determine if another civilization (Civ B) is a potential defector is by doing a computer simulation of them. There could be several variations of this, such as putting Civ B in a situation where they are the advanced civilization with respect to Civ A. Of course, full simulations of the entire universe might be extremely difficult to do. Also, there is a question of whether a full simulation itself is considered a hostile act due to added simulation of suffering of the many beings of Civ B.

b) Indirect simulations

Advanced civilizations could have access to several tools that act similar to a simulation but are less invasive in practice. You might not need to run an entire simulation of a civilization to simply determine the answer to the question of whether it is a defector. You could ask a theorem prover a question about the simulation, which it could theoretically acquire with less computation than running it.

Civ A could also use a form of historical analysis to see exactly how a civilization has behaved towards its own members. What kinds of strategies and memes tend to win within the civilization? Do the leaders keep promises? Do the key philosophers of said civilization understand basic game theory? These are simple heuristics, and while they might not be completely reliable, even we at the current state of civilization can make judgements about the progress or lack thereof in understanding this.

c) Cross-multiverse communication

Another scenario is the advanced civilization simply observing the behavior of the weaker civilization in another universe, another part of the multiverse, or an Everett branch. Once again this is science-fictional, but there might exist good theorem provers that can basically “peek” into computable universes to show what happened there. This could be made easier if, before being destroyed, a weaker civilization wishes to alter the “universal prior” by exhibiting certain easy-to-prove behavior. More about the universal prior is here in an excellent Paul Christiano post.

What to do if any of this theory is true?

The main thing I would avoid doing is sending any deliberate communication into space. People are afraid of this due to the fear of disclosing our location to any hostile civilizations. While I think this is a low-probability event, the risk is still high enough to not do it. What I am also afraid of is accidentally sending signals to weaker civilizations before they are ready to make contact. This could negatively affect them and be interpreted as a hostile act, which could reflect badly on our civilization in scenarios where we are not the first ones in this universe or others.

There are several further important research directions to consider. What is the exact probability of the origin of life in the primordial soup? How could we estimate societal failure probabilities based on rate of civilizational failure in the past?

But the most important thing is that before humanity becomes a multi-star space travelling civilization, it must develop the coordination technology necessary to be able to negotiate, implement and enforce a unified, coherent and game-theoretically stable policy with regards to other alien civilizations.