OthelloGPT learned a bag of heuristics

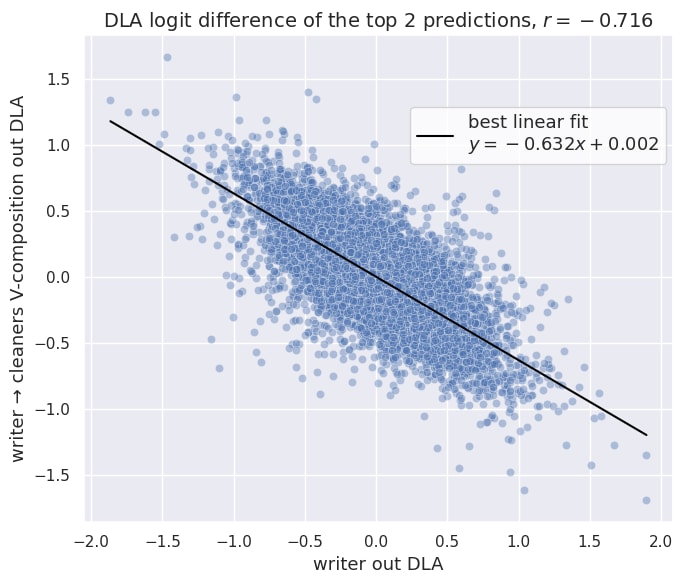

Work performed as a part of Neel Nanda's MATS 6.0 (Summer 2024) training program.

TLDR

This is an interim report on reverse-engineering Othello-GPT, an 8-layer transformer trained to take sequences of Othello moves and predict legal moves. We find evidence that Othello-GPT learns to compute the board state using many independent decision rules that are localized to small parts of the board. Though we cannot rule out that it also learns a single succinct algorithm in addition to these rules, our best guess is that Othello-GPT’s learned algorithm is just a bag of independent heuristics.

Board state reconstruction

1. Direct attribution to linear probes indicates that the internal board representation is frequently up- and down-weighted during a forward pass.
2. Case study of a decision rule:
    1. MLP neuron L1N421 represents the decision rule: if the move A4 was just played AND B4 is occupied AND C4 is occupied ⇒ update B4+C4+D4 to “theirs”. This rule does not generalize to translations across the board.
    2. Another neuron, L0N377, participates in the implementation of this rule by checking whether B4 is occupied and inhibiting the activation of L1N421 if it is not.

Legal move prediction

1. A subset of neurons in mid to late MLP layers classify board configurations that are sufficient to make a certain move legal, with an F1-score above 0.99. These neurons have high direct attribution to the logit for that move and are causally relevant for legal move prediction.
2. Logit lens suggests that legal move predictions gradually solidify during a forward pass.
3. Some MLP neurons systematically activate at certain times in the game, regardless of the moves played so far. We hypothesize that these neurons encode heuristics about moves that are more probable in specific phases (early/mid/late) of the game.

Review of Othello-GPT

Othello-GPT is a transformer with 25M parameters trained on sequences of random legal moves in the board game Othello as inputs[1] to predict legal