Let’s define three variables:

- T is the true phenotype we’d like to measure,

- X is a predictor of T (genes, polygenic score, etc), and

- Y is the observed test score.

In terms of the original quote: "I thought that if some input [X] explains X% of the variance of some outcome [T], then it explains % of the variance of a noisy measurement of the outcome [Y]."

So we know something about the relationship between X and T (the percentage of the variance in T that X explains), and something about the relationship between Y and T (the reliability of Y), and we’d like to use this to tell us something about the relationship between X and Y (how much of the variance in Y that X explains).

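First, we’ll show how we can use the test-retest correlation to estimate reliability. Model the observed phenotype as $Y = T + E$ with error $E$ ($\mathbb{E}[E] = 0$ and $\operatorname{Cov}(T, E) = 0$). Given two independent repeats $Y = T + E$ and $Y' = T + E'$, with $\operatorname{Cov}(T, E') = 0$, $\operatorname{Cov}(E, E') = 0$, and $\operatorname{Var}(E) = \operatorname{Var}(E')$, then $\operatorname{Cov}(Y, Y') = \operatorname{Var}(T)$. Also $\operatorname{Var}(Y) = \operatorname{Var}(Y') = \operatorname{Var}(T) + \operatorname{Var}(E)$. So $r_{YY'} = \frac{\operatorname{Cov}(Y, Y')}{\sqrt{\operatorname{Var}(Y)\operatorname{Var}(Y')}} = \frac{\operatorname{Var}(T)}{\operatorname{Var}(Y)}$. This ($\operatorname{Var}(T)/\operatorname{Var}(Y)$) is the definition of reliability (under the classical test theory model with parallel forms / independent errors), so we can use the test-retest correlation to estimate reliability, $\rho = r_{YY'}$.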

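Now, $\operatorname{Cov}(X, Y) = \operatorname{Cov}(X, T + E) = \operatorname{Cov}(X, T)$, since the measurement error is uncorrelated with the predictor ($\operatorname{Cov}(X, E) = 0$).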

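From which we can see that $r_{XY} = \frac{\operatorname{Cov}(X, Y)}{\sigma_X \sigma_Y} = \frac{\operatorname{Cov}(X, T)}{\sigma_X \sigma_T} \cdot \frac{\sigma_T}{\sigma_Y} = r_{XT}\sqrt{\rho}$, where $\rho = \sigma_T^2 / \sigma_Y^2$ is the reliability.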

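Square both sides of that equation to get $r_{XY}^2 = \rho\, r_{XT}^2$, where $\rho$ is the reliability. Since we only have a single predictor, $R^2 = r^2$, so this is equivalent to $R^2_{XY} = \rho\, R^2_{XT}$ (though apparently a similar argument also works to derive the same result with multiple predictors). So the attenuation correction factor is $\rho$, which we can estimate with the test-retest correlation $r_{YY'}$.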

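For example, if $X$ explains a fraction $a$ of the variance in $T$ ($R^2_{XT} = a$) and the test-retest correlation is $r_{YY'} = \rho$, then $R^2_{XY} = \rho\, a$, not $\rho^2 a$.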

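So, in this case the attenuation factor is just the correlation. When would it have been the squared correlation (like you originally had)? The key was that we started with the test-retest correlation and we showed that $r_{YY'} = \rho$. If what we had instead was the correlation between the observed and true scores, $r_{YT}$, then $r_{YT} = \frac{\operatorname{Cov}(Y, T)}{\sigma_Y \sigma_T} = \frac{\sigma_T^2}{\sigma_Y \sigma_T} = \frac{\sigma_T}{\sigma_Y} = \sqrt{\rho}$, and the attenuation factor would instead have been $r_{YT}^2$.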

which is heavy duty in a few ways which kinda makes sense for orgs, but not really for an individual

This makes sense, though it's certainly possible to get funded as an individual. Based on my quick count there were ~four individuals funded this round.

You kinda want a way to scalably use existing takes on research value by people you trust somewhat but aren't full recommenders

Speculation grants basically match this description. One possible difference is that there's an incentive for speculation granters to predict what recommenders in the round will favor (though they make speculation grants without knowing who's going to participate as a recommender). I'm curious for your take.

I've been keen to get a better sense of it

It's hard to get a good sense without seeing it populated with data, but I can't share real data (and I haven't yet created good fake data). I'll try my best to give an overview though.

Recommenders start by inputting two pieces of data: (a) how interested they are in investigating each proposal, and (b) disclosures of any possible conflicts of interest, so that other recommenders can vote on whether they should be recused or not.

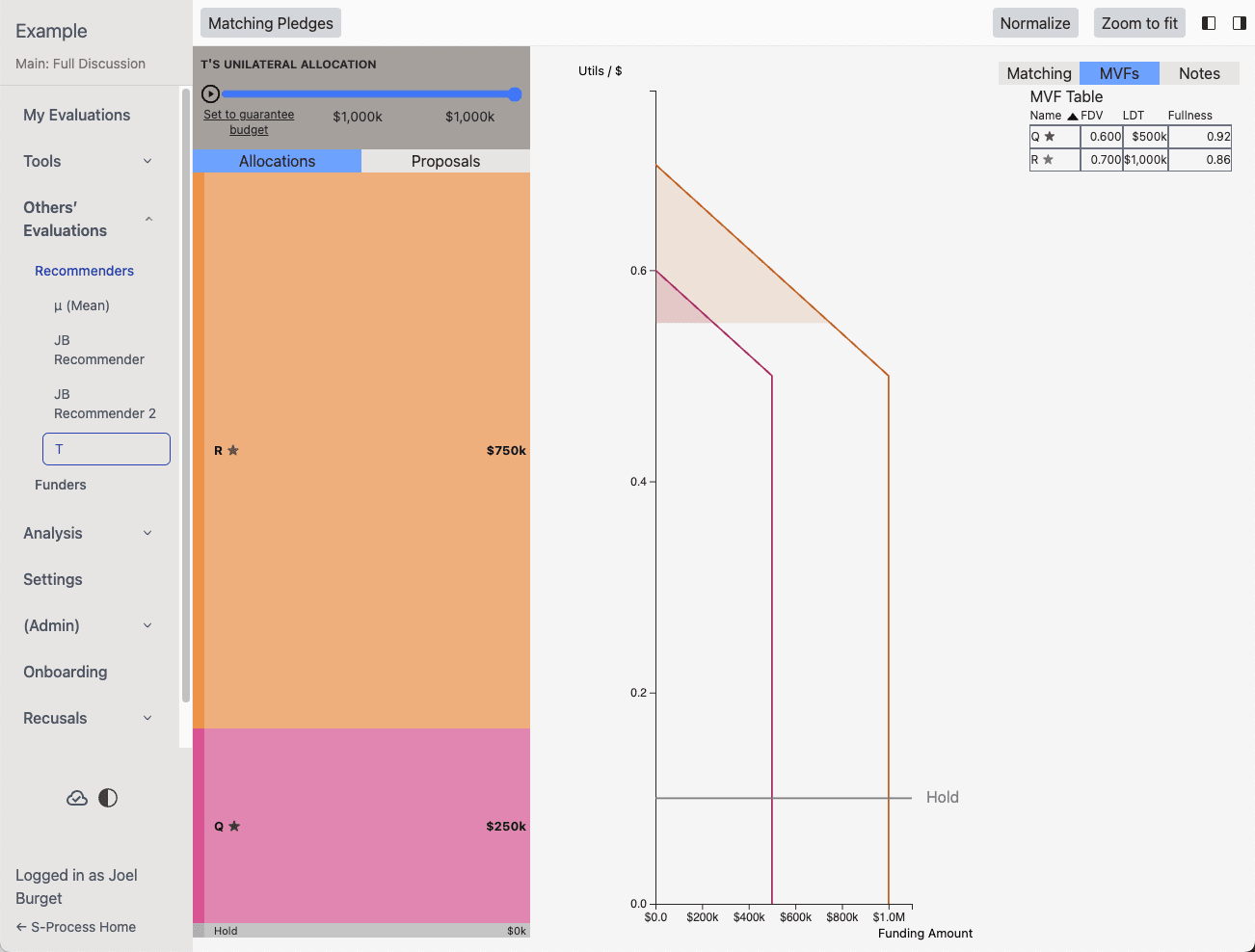

They spend most of the round using this interface, where they can input marginal value function curves for the different orgs. They can also click on an org to see info about it (all of the info from the application form, which in my example is empty) and notes (both their notes and other recommenders').

The MVF graph says how much they believe any given dollar is worth. We force curves to be non-increasing, so marginal value never goes up. On my graph you can see the shaded area visualizing how much money is allocated to the different proposals as we sweep down the graph from the highest marginal value.
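The "sweep down from the highest marginal value" idea can be illustrated with a greedy allocation. This is a hypothetical simplification, not the S-Process's actual algorithm: it assumes a single recommender, represents each marginal value function as a list of piecewise-constant steps, and ignores how multiple recommenders' curves are combined. The `Segment` type, `allocate` function, org names, and dollar figures are all illustrative.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    dollars: float           # width of this step of the curve
    value_per_dollar: float  # marginal value of each dollar in this step

def allocate(mvfs: dict[str, list[Segment]], budget: float) -> dict[str, float]:
    """Fund the highest-marginal-value dollars first until the budget runs out."""
    for name, segs in mvfs.items():
        values = [s.value_per_dollar for s in segs]
        # Curves must be non-increasing: marginal value never goes up.
        if any(a < b for a, b in zip(values, values[1:])):
            raise ValueError(f"MVF for {name} must be non-increasing")
    # Flatten every step of every curve, then sweep down by marginal value.
    steps = [(s.value_per_dollar, name, s.dollars)
             for name, segs in mvfs.items() for s in segs]
    steps.sort(key=lambda step: step[0], reverse=True)
    allocation = {name: 0.0 for name in mvfs}
    remaining = budget
    for value, name, dollars in steps:
        if remaining <= 0 or value <= 0:
            break
        funded = min(dollars, remaining)
        allocation[name] += funded
        remaining -= funded
    return allocation

mvfs = {
    "Org A": [Segment(100_000, 3.0), Segment(200_000, 1.0)],
    "Org B": [Segment(150_000, 2.0), Segment(100_000, 0.5)],
}
print(allocate(mvfs, budget=300_000))  # {'Org A': 150000.0, 'Org B': 150000.0}
```

Here the first $100k goes to Org A (value 3.0), the next $150k to Org B (value 2.0), and the last $50k back to Org A (value 1.0), which mirrors the shaded area filling in from the top of the graph.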

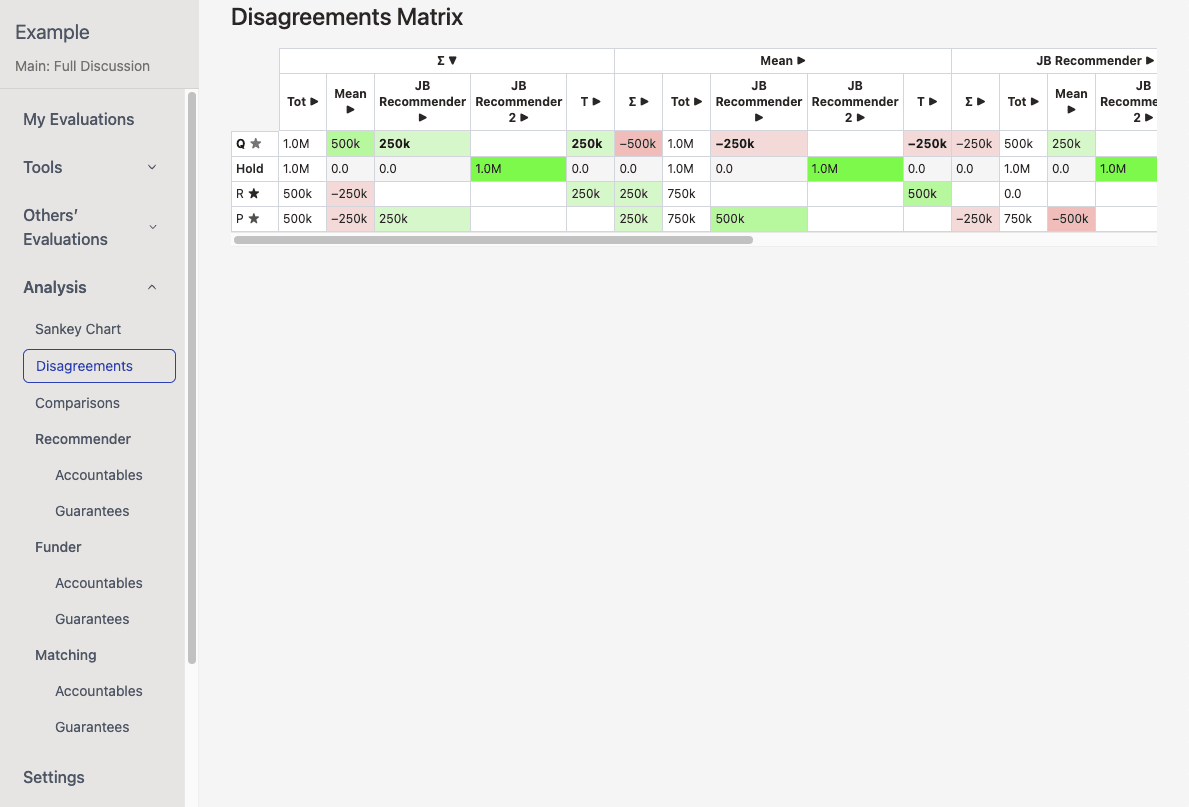

There are also various comparison tools, the most popular of which is the Sankey chart, which shows how much money flows from the funder, through the different recommenders, to different orgs. The disagreements matrix is one of the more useful tools: it shows areas where recommenders disagree the most, which helps them figure out what to talk about in their meetings.

If you're interested in the algorithm more than the app, I have a draft attempting to explain it.

handle individual funding better

Do you say this because of the overhead of filling out the application?

Suggest using the EigenTrust system... can write up properly

I'm interested in hearing about it. Doesn't have to be that polished, just enough to get the idea.

(For context, I work on the S-Process)

in brain-like AGI, the reward function is written in Python (or whatever), not in natural language

I think a good reward function for brain-like AGI will basically look kinda like legible Python code, not like an inscrutable trained classifier. We just need to think really hard about what that code should be!

Huh! I would have assumed that this Python would be impossible to get right, because it would necessarily be very long, and how can you verify that it's correct(?), and you'll probably want to deal with natural language concepts as opposed to concepts which are easy to define in Python.

Asking an LLM to judge, on the other hand... As you said, Claude is nice and seems to have pretty good judgement. LLMs are good at interpreting long legalistic rules. It's much harder to game a specification when there is a judge without hardcoded rules, and with the ability to interpret whether some action is in the right spirit or not.

Thanks for your patience: I do think this message makes your point clearly. However, I'm sorry to say, I still don't think I was missing the point. I reviewed §1.5, still believe I understand the open-ended autonomous learning distribution shift, and also find it scary. I also reviewed §3.7, and found it to basically match my model, especially this bit:

Or, of course, it might be more gradual than literally a single run with a better setup. Hard to say for sure. My money would be on “more gradual than literally a single run”, but my cynical expectation is that the (maybe a couple years of) transition time will be squandered

Overall, I don't have the impression we disagree too much. My guess for what's going on (and it's my fault) is that my initial comment's focus on scaling was not a reaction to anything you said in your post; in fact, you didn't say much about scaling at all. It was more a response to the scaling discussion I see elsewhere.

For (2), I’m gonna uncharitably rephrase your point as saying: “There hasn’t been a sharp left turn yet, and therefore I’m overall optimistic there will never be a sharp left turn in the future.” Right?

Hm, I wouldn't have phrased it that way. Point (2) says nothing about the probability of there being a "left turn", just the speed at which it would happen. When I hear "sharp left turn", I picture something getting out of control overnight, so it's useful to contextualize how much compute you have to put in to get performance out, since this suggests that (inasmuch as it's driven by compute) capabilities ought to grow gradually.

I feel like you’re disagreeing with one of the main arguments of this post without engaging it.

I didn't mean to disagree with anything in your post, just to add a couple points which I didn't think were addressed.

You're right that point (2) wasn't engaging with the (1-3) triad, because it wasn't meant to. It's only about the rate of growth of capabilities (which is important because if each subsequent model is only 10% more capable than the one which came before then there's good reason to think that alignment techniques which work well on current models will also work on subsequent models).

Again, the big claim of this post is that the sharp left turn has not happened yet. We can and should argue about whether we should feel optimistic or pessimistic about those “wrenching distribution shifts”, but those arguments are as yet untested, i.e. they cannot be resolved by observing today’s pre-sharp-left-turn LLMs. See what I mean?

I do see, and I think this gets at the difference in our (world) models. In a world where there's a real discontinuity, you're right, you can't say much about a post-sharp-turn LLM. In a world where there's continuous progress, like I mentioned above, I'd be surprised if a "left turn" suddenly appeared without any warning.

I like this post but I think it misses / barely covers two of the most important cases for optimism.

1. Detail of specification

Frontier LLMs have a very good understanding of humans, and seem to model them as well as or even better than other humans. I recall seeing repeated reports of Claude understanding its interlocutor faster than they thought was possible, as if it just "gets" them, e.g. from one Reddit thread I quickly found:

- "sometimes, when i’m tired, i type some lousy prompts, full of typos, incomplete info etc, but Claude still gets me, on a deep fucking level"

- "The ability of how Claude AI capture your intentions behind your questions is truly remarkable. Sometimes perhaps you're being vague or something, but it will still get you."

- "even with new chats, it still fills in the gaps and understands my intention"

LLMs have presumably been trained on:

- millions of anecdotes from the internet, including how the author felt, other users' reactions and commentary, etc.

- case law: how humans chosen for their wisdom (judges) determined what was right and wrong

- thousands of philosophy books

- Lesswrong / Alignment Forum, with extensive debate on what would be right and wrong for AIs to do

There are also techniques like deliberative alignment, which includes an explicit specification for how AIs should behave. I don't think the model spec is currently detailed enough but I assume OpenAI intend to actively update it.

Compare this to the "specification" humans are given by your Ev character: some basic desires for food, comfort, etc. Our desires are very crude, confusing, and inconsistent, and only very roughly correlate with IGF. It's hard to overstate how much more detailed the specification we present to AI models is.

2. (Somewhat) Gradual Scaling

Toby Ord estimates that pretraining "compute required scales as the 20th power of the desired accuracy". He estimates that inference scaling is even more expensive, requiring exponentially more compute just to make constant progress. Both of these trends suggest that, even with large investments, performance will increase slowly from hardware alone (this relies on the assumption that hardware performance / $ is increasing slowly, which seems empirically justified). Progress could be faster if big algorithmic improvements are found. In particular I want to call out that recursive self-improvement (especially without a human in the loop) could blow up this argument (which is why I wish it was banned). Still, I'm overall optimistic that capabilities will scale fairly smoothly / predictably.
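Taking the quoted pretraining relationship at face value (compute proportional to accuracy raised to the 20th power, with "accuracy" left as loosely defined as in the quote), a few lines of arithmetic show why it implies slow gains from compute alone. The `compute_multiplier` helper is a hypothetical illustration, not Ord's own model.

```python
def compute_multiplier(accuracy_ratio: float, exponent: float = 20.0) -> float:
    """Relative compute needed if compute scales as accuracy ** exponent."""
    return accuracy_ratio ** exponent

for ratio in (1.1, 1.5, 2.0):
    print(f"{ratio}x accuracy needs ~{compute_multiplier(ratio):,.1f}x compute")
```

Under this relationship a 10% accuracy gain needs about 6.7 times the compute, and doubling accuracy needs $2^{20}$, roughly a million times the compute.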

With (1) and (2) combined, we're able to gain some experience with each successive generation of models, and add anything we find is missing from the training dataset / model spec, without taking any leaps that are too big / dangerous. I don't want to suggest that the process of scaling up while maintaining alignment will definitely succeed, just that we should update towards the optimistic view based on these arguments.

scale up to superintelligence in parallel across many different projects / nations / factions, such that the power is distributed

This has always struck me as worryingly unstable. ETA: Because in this regime you're incentivized to pursue reckless behaviour to outcompete the other AIs, e.g. recursive self-improvement.

Is there a good post out there making a case for why this would work? A few possibilities:

- The AIs are all relatively good / aligned. But they could be outcompeted by malevolent AIs. I guess this is what you're getting at with "most of the ASIs are aligned at any given time", so they can band together and defend against the bad AIs?

- They all decide / understand that conflict is more costly than cooperation. A darker variation on this is mutually assured destruction, which I don't find especially comforting to live under.

- Some technological solution to binding / unbreakable contracts such that reneging on your commitments is extremely costly.

Though we have opposition to Trump in common, and are probably closer culturally to the Democrat side than the Republican side, rationalists don't have that much overlap with Democrat priorities. In fact, Dean Ball has said "AI doomers are actually more at home on the political right than they are on the political left in America".

There have been a couple interesting third parties. A couple years ago Balaji Srinivasan was talking about a tech-aligned "Gray Tribe", which doesn't seem to have gone anywhere. Andrew Yang launched the Forward Party, focused on reducing polarization, ranked-choice voting, open primaries, and a centrist approach. Seems pretty appealing but is still very small compared to the mainstream parties.

I'm not sure what the answer is.