Which planet is closest to the Earth, and why is it Mercury?

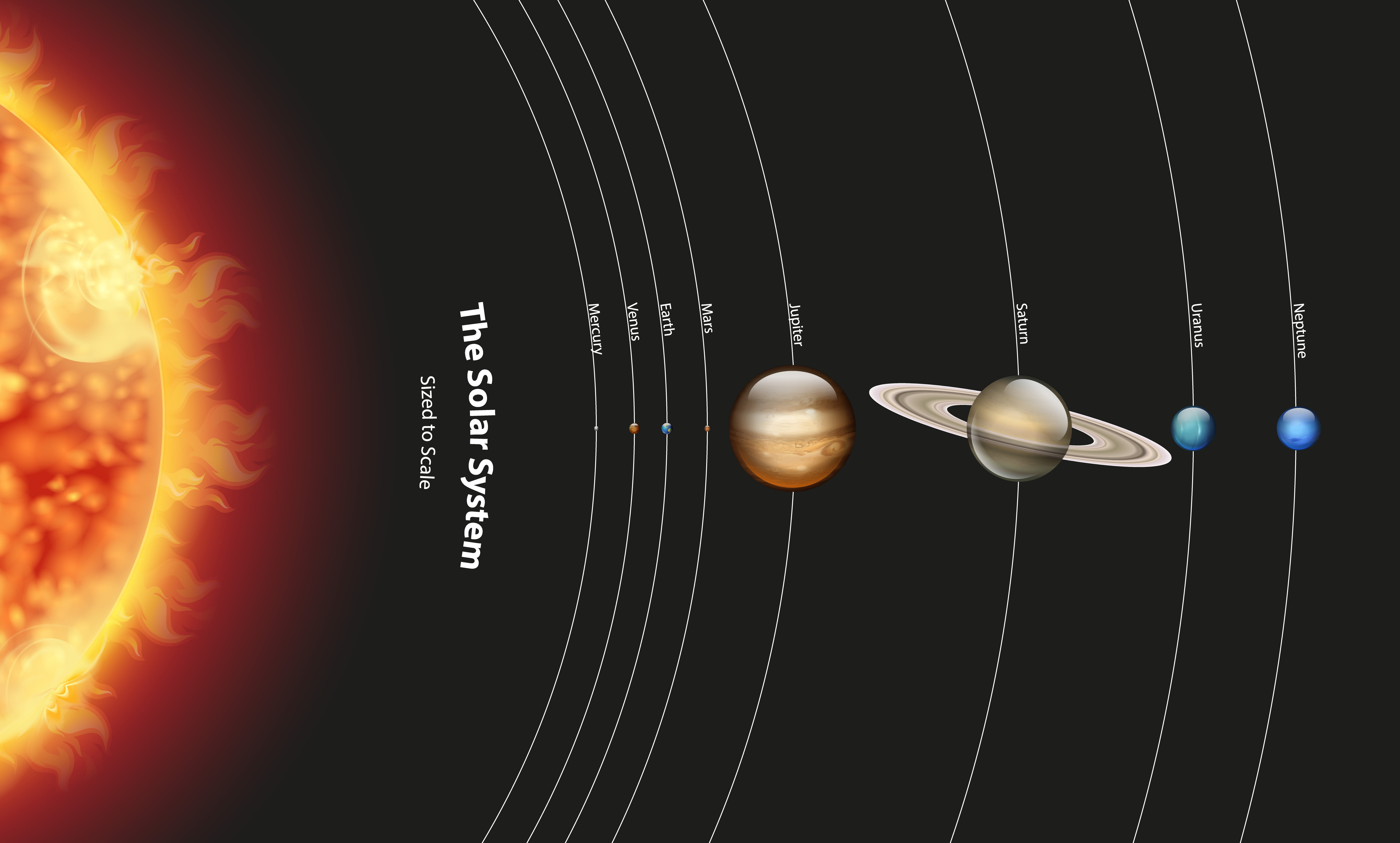

Which planet is closest to Earth, on average? I used to think it was Venus, followed by Mars. But this paper claims it is instead Mercury. At first this seemed to make no sense. Just picture the orbits of the planets: they're a bunch of concentric circles (approximately). Venus' orbit...

Yeah, that is very neat, and I don't know how you would take it into account in the calculation. The paper does have a table comparing predictions from their formula, the old model (the difference of the orbital radii), and their own simulation. Eyeballing it, the Jupiter/Saturn distance doesn't seem to have a bigger error than the other entries in the table. (Unfortunately the table only covers the planets of the Solar System, so Pluto isn't included.)
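For anyone who wants to poke at the numbers, here is a rough Python sketch of the kind of comparison that table makes. It is not the paper's code: I am assuming circular, coplanar orbits and averaging the separation uniformly over the relative angle between the two planets, which ignores eccentricity, inclination, and any resonance effects. The orbital radii are rounded semi-major axes in AU, and the function names `mean_distance` and `radius_difference` are made up for illustration.

```python
import math

# Rounded semi-major axes in astronomical units (AU).
# Assumption: orbits are treated as coplanar circles of these radii.
ORBIT_RADIUS_AU = {
    "Mercury": 0.387,
    "Venus": 0.723,
    "Earth": 1.000,
    "Mars": 1.524,
}


def radius_difference(r1, r2):
    """The 'old model': distance between the two orbits, |r1 - r2|."""
    return abs(r1 - r2)


def mean_distance(r1, r2, steps=100_000):
    """Average separation of two bodies on concentric circular orbits.

    Assumption: the relative angle between the bodies is uniformly
    distributed over a full turn, so we average the law-of-cosines
    distance over that angle with a midpoint rule.
    """
    total = 0.0
    for k in range(steps):
        theta = 2.0 * math.pi * (k + 0.5) / steps
        total += math.sqrt(r1 * r1 + r2 * r2 - 2.0 * r1 * r2 * math.cos(theta))
    return total / steps


if __name__ == "__main__":
    earth = ORBIT_RADIUS_AU["Earth"]
    for name, r in ORBIT_RADIUS_AU.items():
        if name == "Earth":
            continue
        print(
            f"{name:8s} radius difference = {radius_difference(earth, r):.3f} AU, "
            f"angle-averaged distance = {mean_distance(earth, r):.3f} AU"
        )
```

Under these assumptions the radius difference ranks Venus as closest to Earth, while the angle-averaged distance ranks Mercury first, because a planet on a smaller orbit spends less of its time far away on the other side of the Sun.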