Counting arguments provide no evidence for AI doom

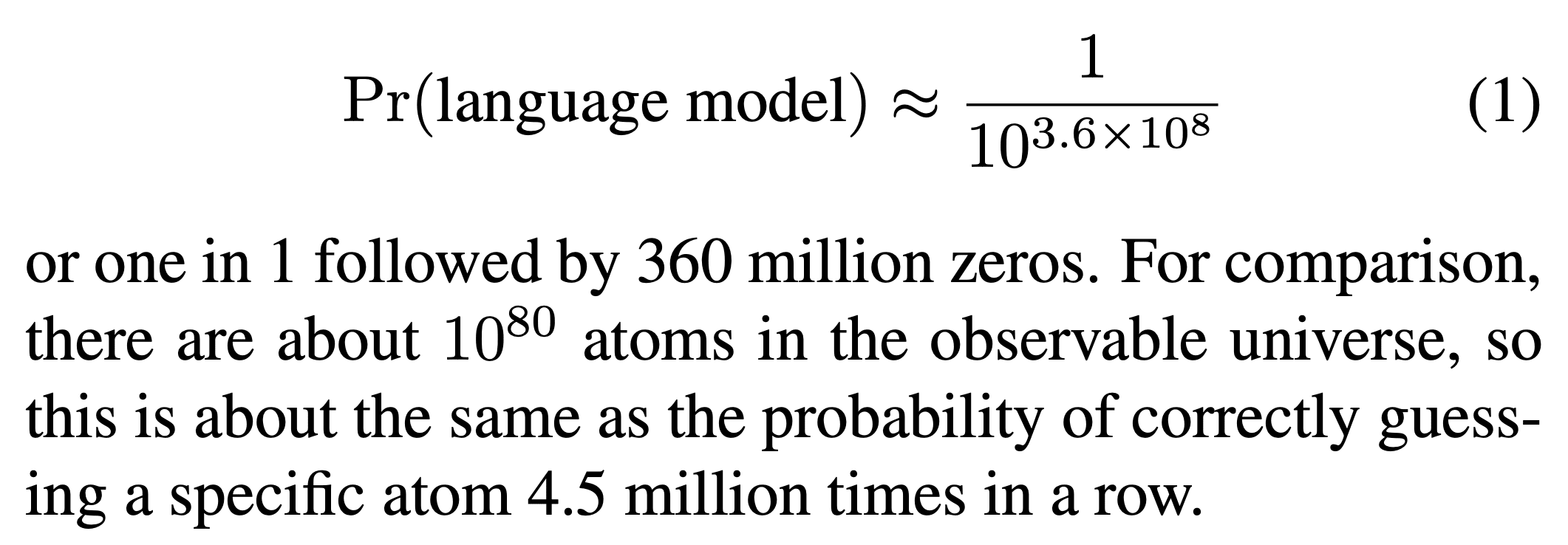

Crossposted from the AI Optimists blog.

AI doom scenarios often suppose that future AIs will engage in scheming: planning to escape, gain power, and pursue ulterior motives, while deceiving us into thinking they are aligned with our interests. The worry is that if a schemer escapes, it may seek world domination to ensure humans do not interfere with its plans, whatever they may be.

In this essay, we debunk the counting argument, a central reason to think AIs might become schemers according to a recent report by AI safety researcher Joe Carlsmith.[1] The argument is premised on the idea that schemers can have “a wide variety of goals,” while the motivations of a non-schemer must be benign by definition. Since there are “more” possible schemers than non-schemers, the argument goes, we should expect training to produce schemers most of the time. In Carlsmith’s words:

> 1. The non-schemer model classes, here, require fairly specific goals in order to get high reward.
> 2. By contrast, the schemer model class is compatible with a very wide range of (beyond episode) goals, while still getting high reward…
> 3. In this sense, there are “more” schemers that get high reward than there are non-schemers that do so.
> 4. So, other things equal, we should expect SGD to select a schemer.
>
> (Scheming AIs, page 17)

We begin our critique by presenting a structurally identical counting argument for the obviously false conclusion that neural networks should always memorize their training data while failing to generalize to unseen data. Since the premises of this parody argument are actually stronger than those of the original counting argument, this shows that counting arguments are generally unsound in this domain. We then diagnose the problem with both counting arguments: they rest on an incorrect application of the principle of indifference, which says that we should assign equal probability to each possible outcome of a random process. The indifference principle is controversia