Layered AI Defenses Have Holes: Vulnerabilities and Key Recommendations

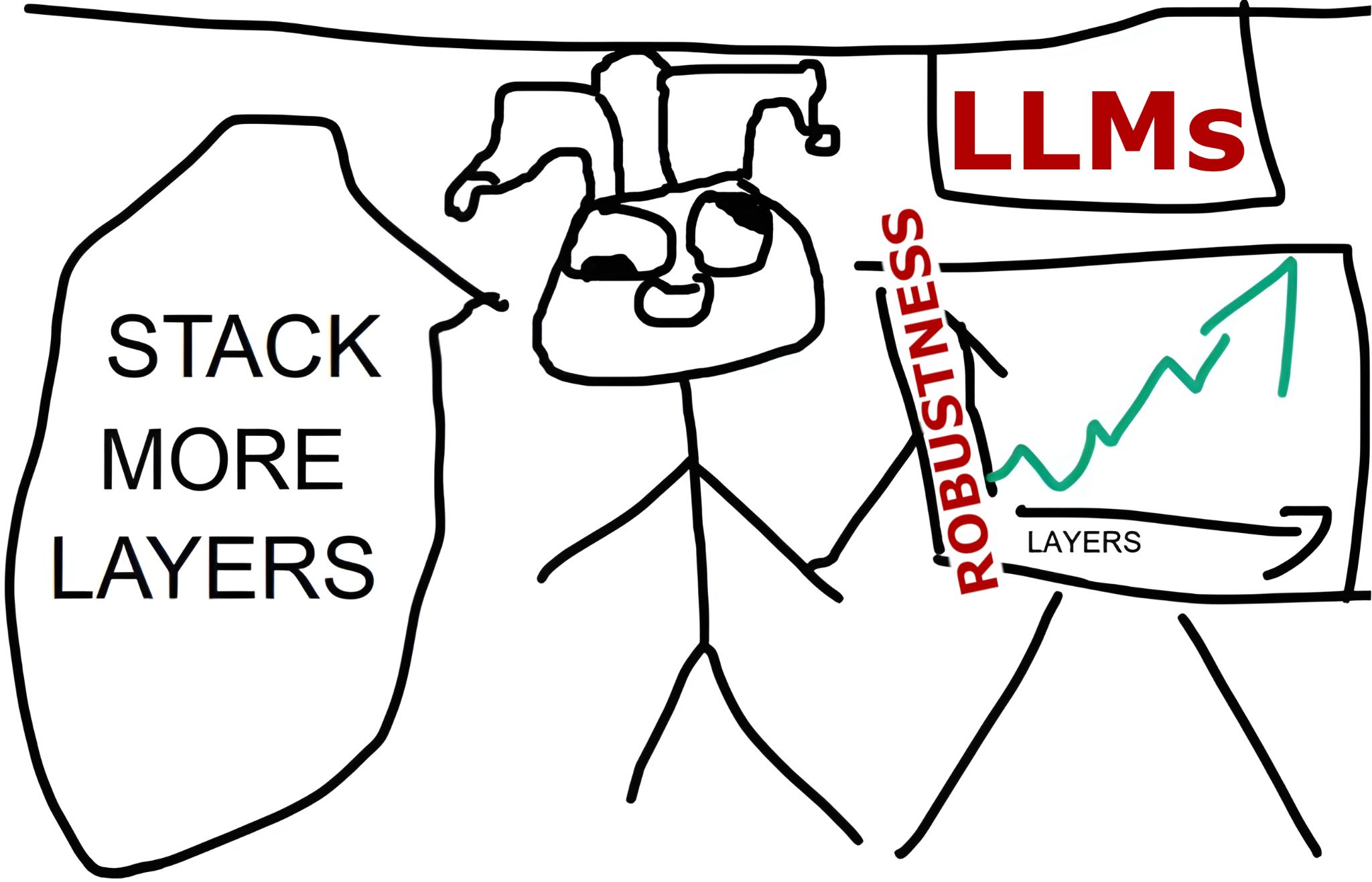

Leading AI companies are increasingly using "defense-in-depth" strategies to prevent their models from being misused to generate harmful content, such as instructions for building chemical, biological, radiological, or nuclear (CBRN) weapons. The idea is straightforward: layer multiple safety checks so that even if one fails, others should catch the problem....
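To make the layering idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any company's actual pipeline: the names `input_filter`, `output_filter`, `toy_model`, and `guarded_generate`, as well as the keyword-matching checks, are all hypothetical stand-ins for whatever classifiers a real system would use.

```python
from typing import Callable, List

# A check takes some text and returns True if that text should be blocked.
Check = Callable[[str], bool]

REFUSAL = "Request refused."


def input_filter(prompt: str) -> bool:
    """Hypothetical input-side check: flag prompts containing a placeholder term."""
    return "blocked-term" in prompt.lower()


def output_filter(response: str) -> bool:
    """Hypothetical output-side check: flag responses containing the same term."""
    return "blocked-term" in response.lower()


def toy_model(prompt: str) -> str:
    """Stand-in for a language model; it simply echoes the prompt."""
    return f"response to: {prompt}"


def guarded_generate(
    prompt: str,
    model: Callable[[str], str],
    input_checks: List[Check],
    output_checks: List[Check],
) -> str:
    """Run the model only if every input check passes, then screen the output."""
    # Layer 1: any input check that fires blocks the request outright.
    if any(check(prompt) for check in input_checks):
        return REFUSAL
    response = model(prompt)
    # Layer 2: output checks get a second chance to catch what layer 1 missed.
    if any(check(response) for check in output_checks):
        return REFUSAL
    return response
```

In this sketch, a request is refused if any single layer flags it, so removing the input checks (simulating a failed layer) still leaves the output checks in place to catch the same content.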