Tools for finding information on the internet

Edit 2023-05-09: I recorded a presentation for EA Software Engineers about this post. In it, I demonstrate each of the tools and discuss a few extra ones at the end, namely content blockers, userscripts, and alternative front-end websites.

Isn't the internet a magically useful tool? Thirty years ago, if you wanted to know how many plays Shakespeare wrote, you had to physically walk to your local library and find a relevant book. Now you can find the answer in under ten seconds, at any time, wherever you are.

However, the internet is not a truthful, superintelligent oracle. Rather, it's a dangerous jungle of knowledge that you must learn to navigate if you want to find the truth. Good information is often censored, hidden behind paywalls or buried under piles of spam, and hard to tell apart from untrustworthy information.

This post won't be a complete guide to navigating the world wide web of knowledge. It will, however, give you some tools I've discovered over the years that you can throw in your digital rucksack to aid your journey.

Search engines

* The great internet sage Gwern Branwen wrote an advanced guide on finding references, papers, and books online.
* Brave Search and Kagi offer features called "Goggles" and "Lenses", respectively. These are presets that filter or re-rank entire categories of websites in your results.
* SearXNG is a highly customizable metasearch engine, meaning it aggregates results from many other search engines.
* Perplexity uses natural language processing (NLP) to answer your query with a paragraph that cites its sources, and it lets you ask follow-up questions.
* Metaphor lets you find websites by writing creative, long-form prompts, also using NLP.
* Elicit is a research assistant that uses NLP to help you find relevant research papers.

Bypassing restrictions

Sometimes you know exactly where to find a piece of information, but it's locked behind a paywall or has been deleted from the internet.

* Unddit displays deleted comments and posts on Reddit.
* Inter

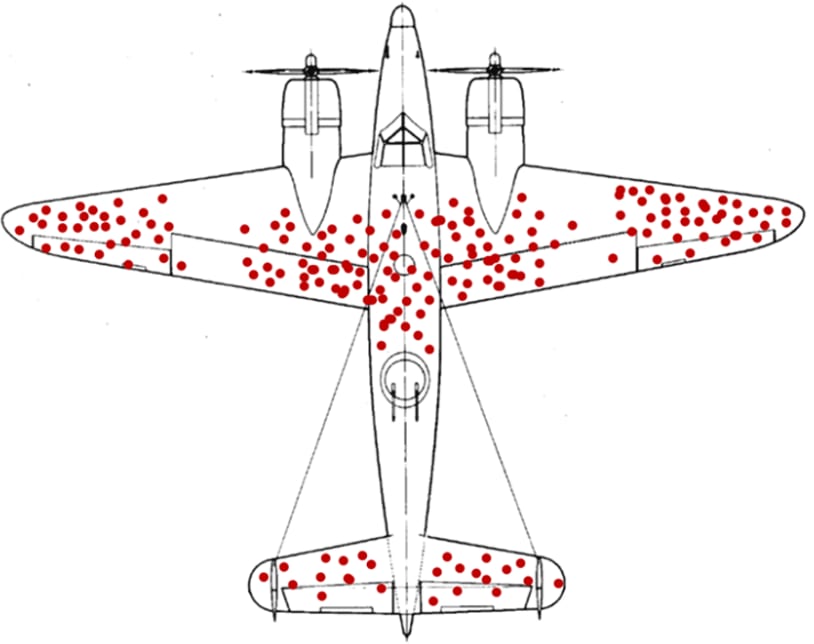

Adapted from

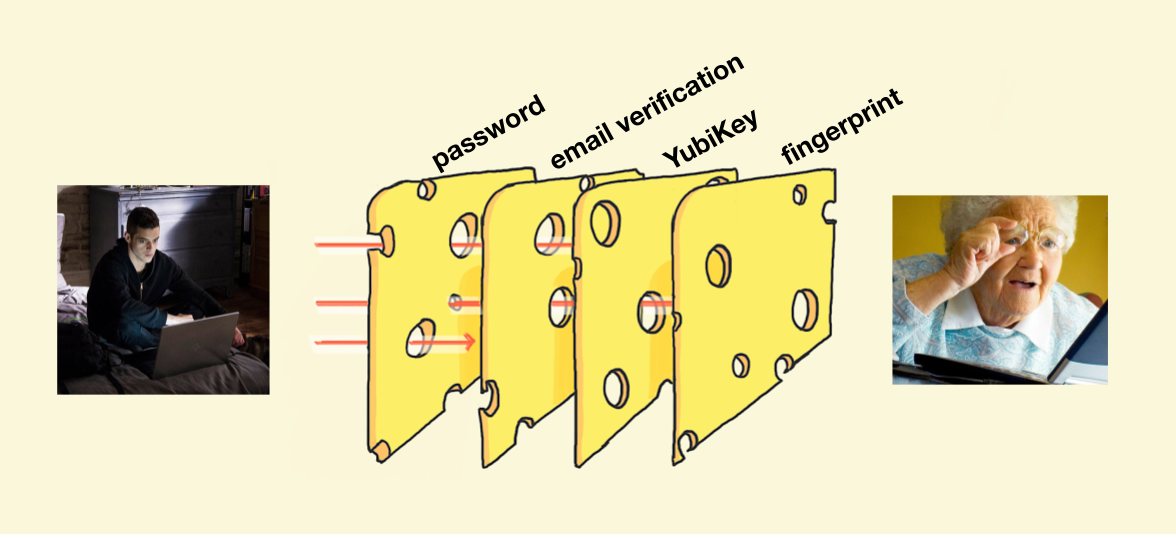

Adapted from
