In the past few days we have seen the achievements of DALL-E 2 and Google's Pathways Language Model (PaLM). In the coming months we will see the results of the recently discovered, more compute-optimal way of training: https://www.lesswrong.com/posts/midXmMb2Xg37F2Kgn/new-scaling-laws-for-large-language-model

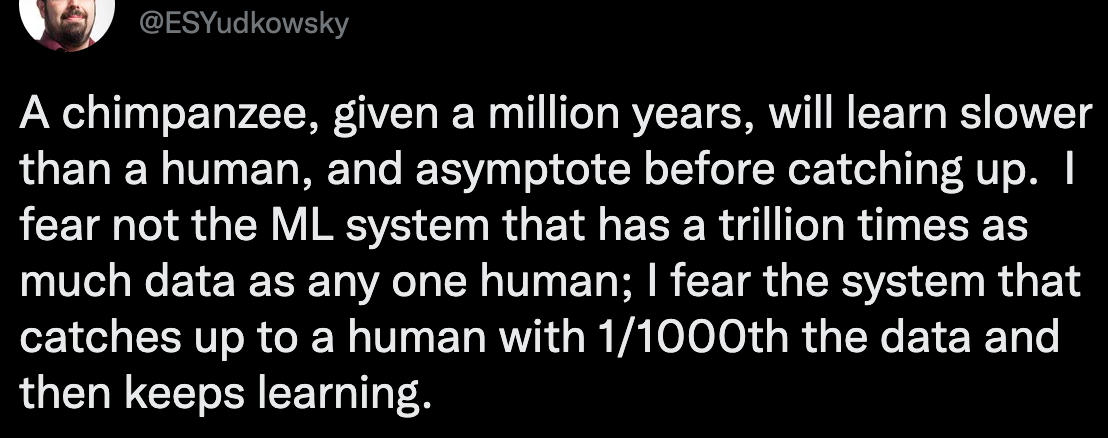

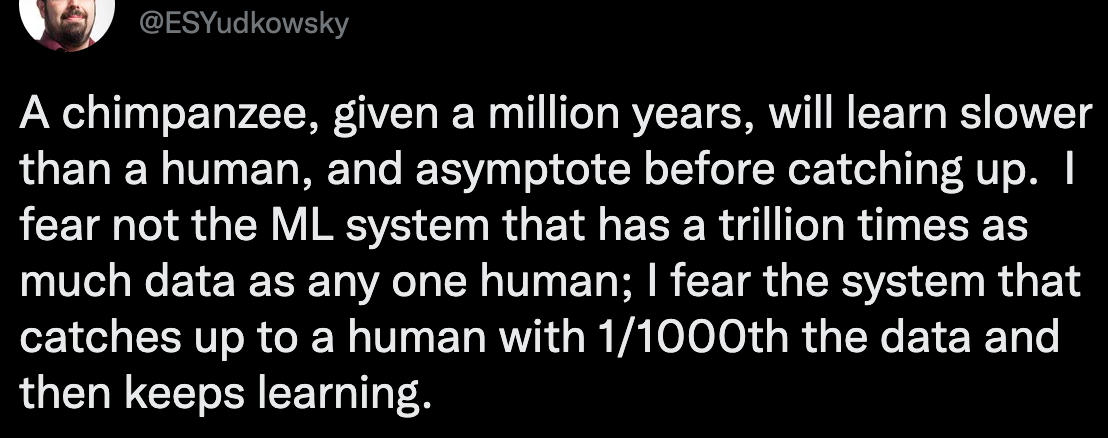

EY believes that A.G.I. could be achieved by:

1) Ability to train with less data.

2) Ability to improve itself.

I believe A.G.I. could be close if language models improve their ability to code, particularly at recursion.

Is this a real possibility, or do you believe the path to A.G.I. is orthogonal to the current ML pathway? Or somewhere in between? And if so, where? (This is the question that causes A.G.I.)

Could you please share any resources you have on using AI to align A.G.I.? The obvious "duh, that's not possible" answer I found is that by the time an AI could do successful AI safety and alignment research, it would probably already be too late. Maybe that is because such an AI could also be used to research A.G.I.?

I believe the thinking-on-the-margin version of alignment research is crucial, and that it is one of the biggest opportunities to increase the probability of success. Given how low that probability currently seems, it is at least worth trying.

For the general public, one of the open questions is whether raising their awareness would actually help with the problem. My intuition is that AI alignment should be debated more in universities and in the technology industry. The current lack of awareness is concerning and hard to believe.

We should evaluate the outcomes of raising awareness more carefully, taking into account all the options: a general (public) awareness strategy, a partial (experts) awareness strategy, and the spectrum of strategies and variables in between. It seems that...