Clarifying “What failure looks like”

Thanks to Jess Whittlestone, Daniel Eth, Shahar Avin, Rose Hadshar, Eliana Lorch, Alexis Carlier, Flo Dorner, Kwan Yee Ng, Lewis Hammond, Phil Trammell and Jenny Xiao for valuable conversations, feedback and other support. I am especially grateful to Jess Whittlestone for long conversations and detailed feedback on drafts, and for her guidance on which threads to pursue and how to frame this post. All errors are my own.

Epistemic status: My Best Guess

Epistemic effort: ~70 hours of focused work (mostly during FHI’s summer research fellowship), talked to ~10 people.

Introduction

“What failure looks like” is one of the most comprehensive pictures of what failure to solve the AI alignment problem looks like, in worlds without discontinuous progress in AI. I think it was an excellent and much-needed addition to our understanding of AI risk. Still, if many believe that this is a main source of AI risk, I think it should be fleshed out in more than just one blog post. The original story has two parts; I’m focusing on part 1 because I found it more confusing and nebulous than part 2.

Firstly, I’ll summarise part 1 (hereafter “WFLL1”) as I understand it:

* In the world today, it’s easier to pursue easy-to-measure goals than hard-to-measure goals.
* Machine learning is differentially good at pursuing easy-to-measure goals (assuming that we don’t have a satisfactory technical solution to the intent alignment problem[1]).
* We’ll try to harness this by designing easy-to-measure proxies for what we care about, and deploying AI systems across society which optimize for these proxies (e.g. in law enforcement, legislation and the market).
* We’ll give these AI systems more and more influence (e.g. eventually, the systems running law enforcement may actually be making all the decisions for us).
* Eventually, the proxies for which the AI systems are optimizing will come apart from the goals we truly care about, but by then humanity won’t be able to take back influence, a

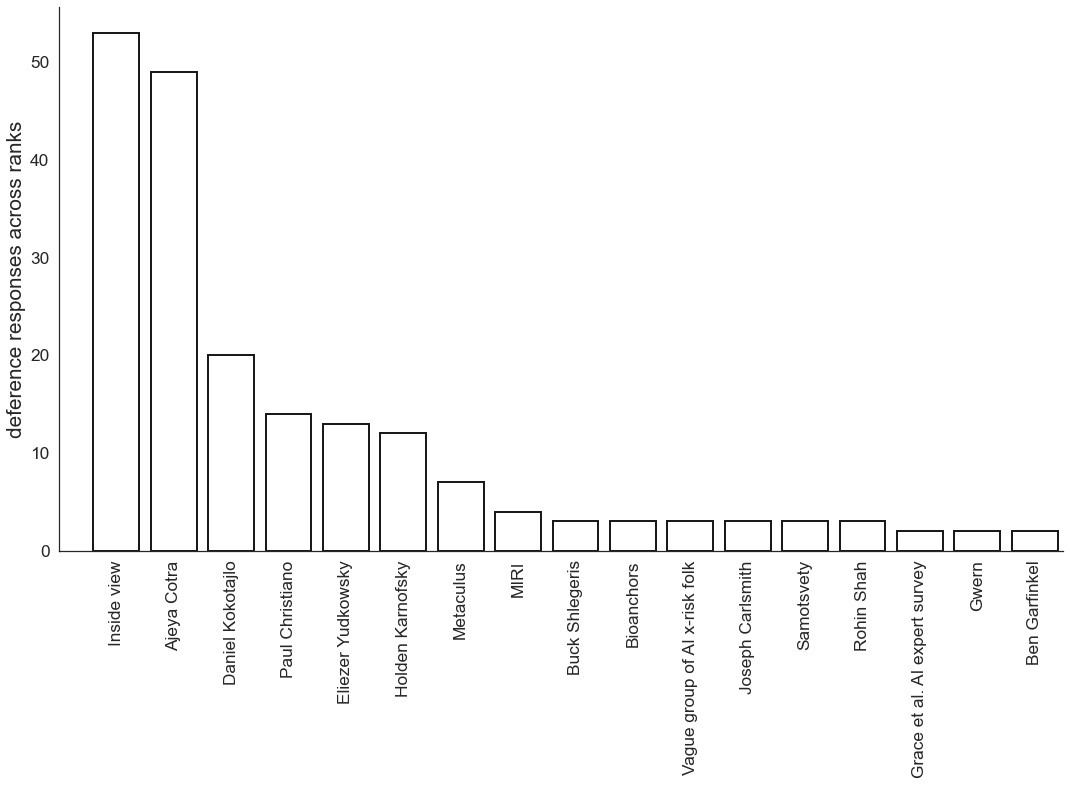
