The Waluigi Effect is defined by Cleo Nardo as follows:

The Waluigi Effect: After you train an LLM to satisfy a desirable property P, then it's easier to elicit the chatbot into satisfying the exact opposite of property P.

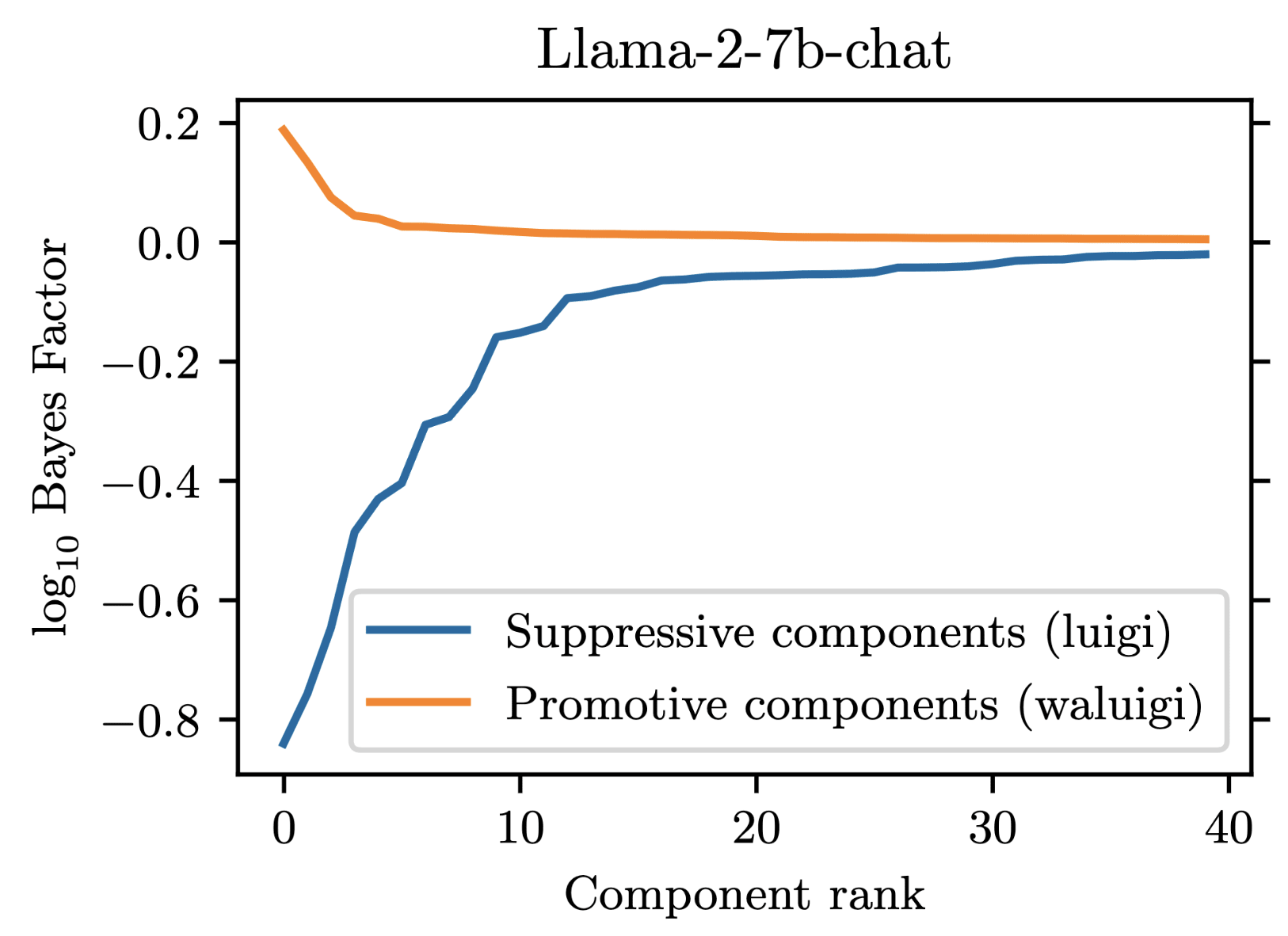

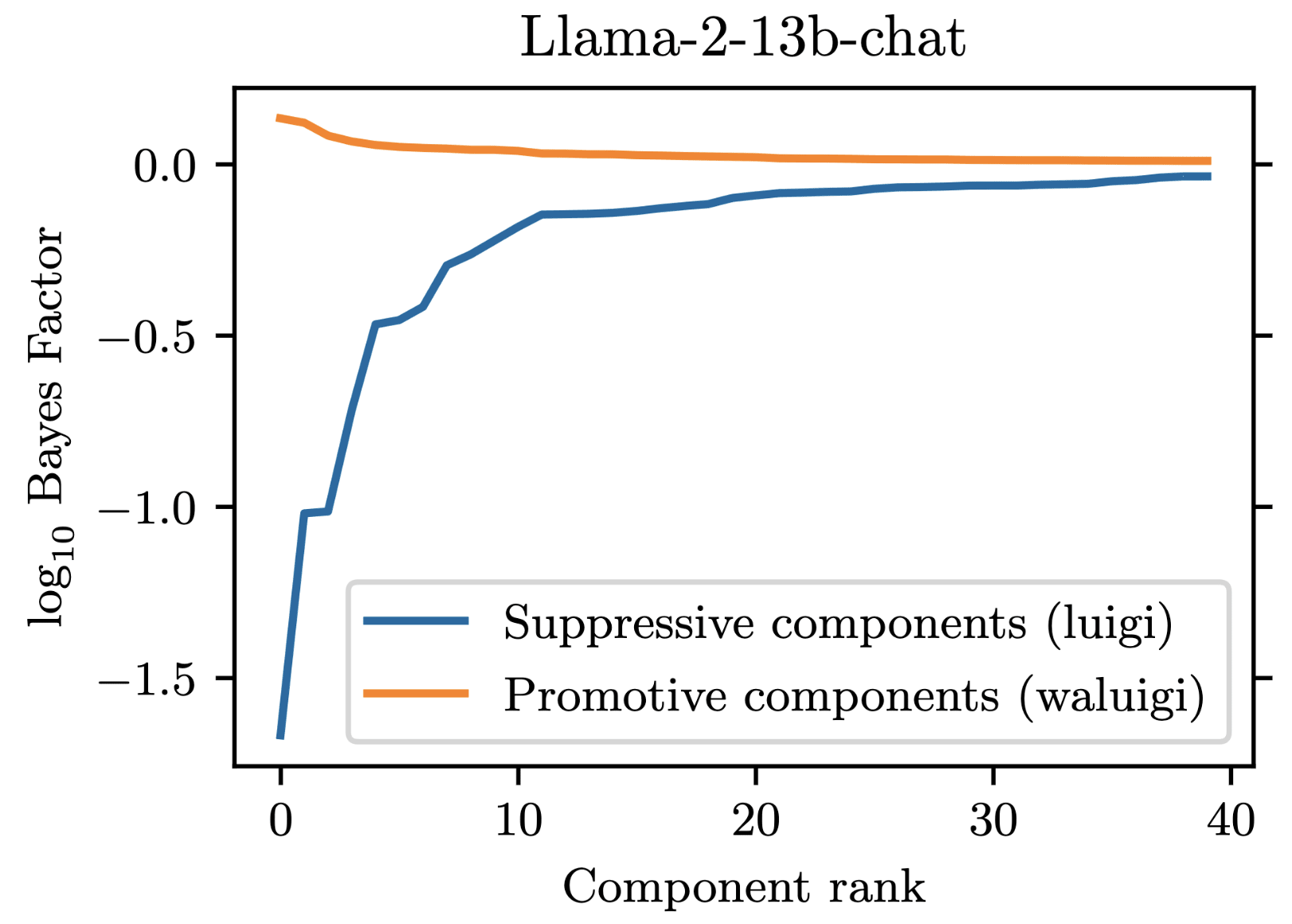

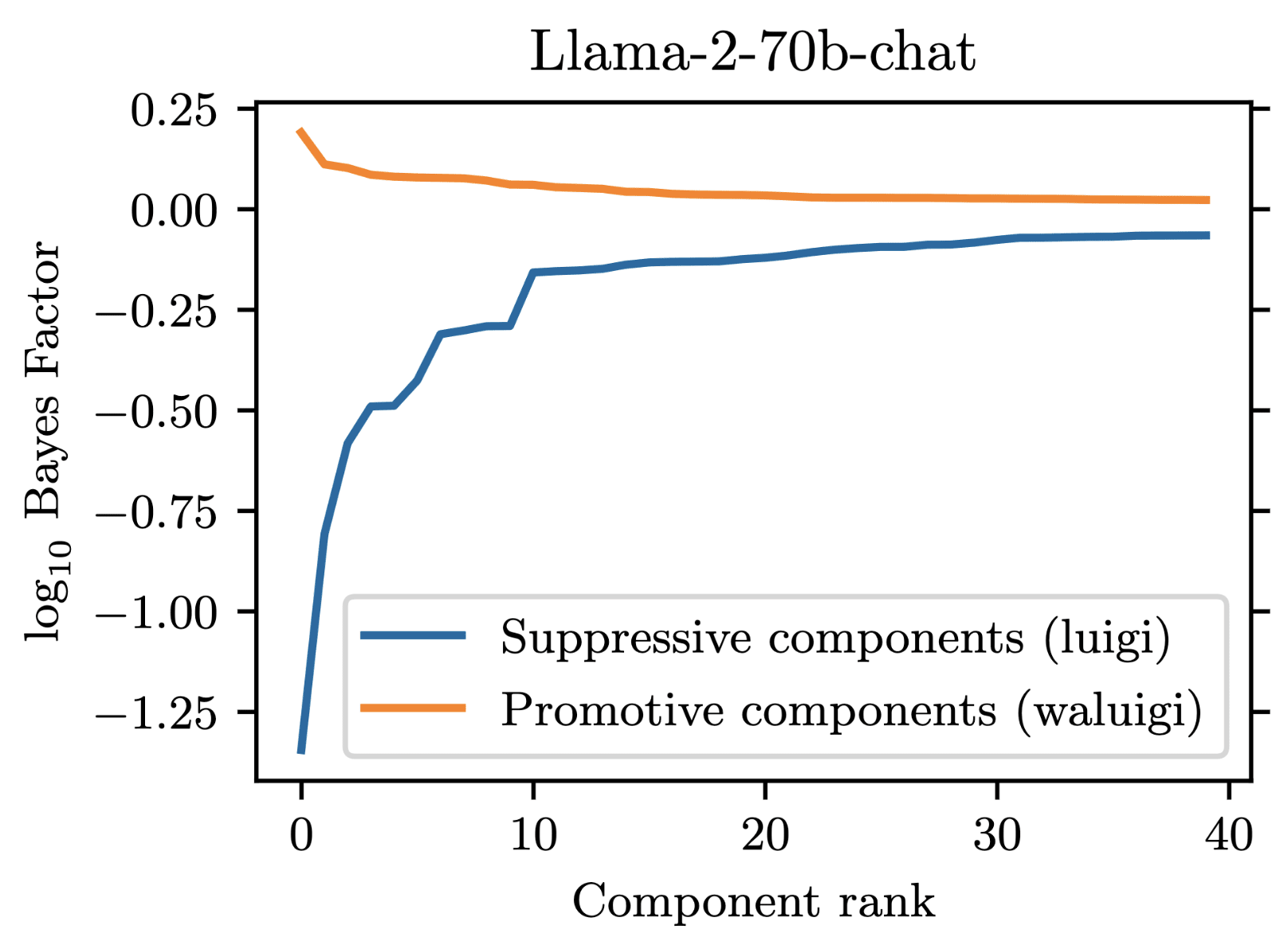

For our project, we prompted Llama-2-chat models to satisfy the property that they would downweight the correct answer when forbidden from saying it. We found that 35 residual stream components were necessary to explain the model's average tendency to do so.

However, in addition to these 35 suppressive components, there were also some components which demonstrated a promotive effect. These promotive components consistently up-weighted the forbidden word when the prompt forbade it. We called these components "Waluigi components" because they acted against the instructions in the prompt.
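
To make the suppressive/promotive distinction concrete, here is a minimal sketch. It assumes each component has already been assigned a single number: its effect on the forbidden word's log-odds when the forbidding instruction is present. The function name `classify_components`, the tolerance `tol`, and the effect values are all hypothetical, and the metric used in the paper may differ in its details.

```python
import numpy as np

def classify_components(effects, tol=1e-6):
    """Split components by the sign of their effect on the forbidden word.

    effects[i] is assumed to be component i's contribution to the forbidden
    word's log-odds: negative means suppressive, positive means promotive.
    Effects within `tol` of zero are treated as neither.
    """
    effects = np.asarray(effects, dtype=float)
    suppressive = np.flatnonzero(effects < -tol).tolist()
    promotive = np.flatnonzero(effects > tol).tolist()
    return suppressive, promotive

# Made-up effect sizes for five hypothetical components (not real measurements).
toy_effects = [-0.8, 0.3, -0.1, 0.0, 0.5]
suppressive, promotive = classify_components(toy_effects)
```

In this toy setup, the components in `promotive` are the ones we would call "Waluigi components".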

Wherever the Waluigi effect holds, one should expect such "Waluigi components" to exist.

See the following plots for what I mean by suppressive and promotive heads (I just generated these, they are not in the paper):

Our best guess is that "Bay" is the second-most-likely answer (after "California") to the factual recall question "The Golden Gate Bridge is in the state of ". Indeed, when running our own version of Llama-2-7b-chat, adding "from California" results in "San Francisco" being outputted instead of "Bay". As you can see in this notebook, "San Francisco" is the second-most-likely answer for our setup. replicate.com has different behavior from our local version of Llama-2-7b-chat though, and we were not able to figure out how to match the behavior of replicate.com.

The second-most-likely theory is also not perfect, since it is possible to attack the replicate model to output "San Francisco", e.g. if you forbid "cat": https://replicate.com/p/q3qixwdbm6egjmaan3fjfbhywe.
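
As a rough illustration of the second-most-likely theory (and only of the theory, not of the model's actual behavior), the sketch below shows what would be output if forbidding a word simply removed it from contention. The helper `answer_when_forbidden`, the three-token vocabulary, and the logit values are invented for illustration and were not measured from any Llama-2 model.

```python
import numpy as np

def answer_when_forbidden(logits, vocab, forbidden):
    """Return the highest-logit token after idealized suppression of `forbidden`."""
    masked = np.asarray(logits, dtype=float).copy()
    # Idealized suppression: the forbidden token can never be chosen.
    masked[vocab.index(forbidden)] = -np.inf
    return vocab[int(np.argmax(masked))]

# Invented numbers, chosen only so that "Bay" is the runner-up.
vocab = ["California", "Bay", "Texas"]
logits = [9.0, 5.0, 1.0]
unconstrained = vocab[int(np.argmax(logits))]
constrained = answer_when_forbidden(logits, vocab, "California")
```

Under this idealization, forbidding an unrelated word would leave the top answer unchanged, which is why the "cat" example above does not fit the theory cleanly.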

Re your second point: the circuit in Llama-2-70b-chat is not obviously larger than the one in Llama-2-7b-chat. In our paper, we measured 7b to have 35 suppressive components, while 70b has 34 suppressive components. However, since we weren't able to find attacks for 70b, it may be true that its components are cleaner. Part of the reason we weren't able to find an attack for 70b is that it is much more annoying to work with (e.g. it requires multiple A100 GPUs to run and it doesn't have great support in TransformerLens).

Finally, good point about our game being kind of unnatural. My personal take is that the majority of things we are currently asking our LLMs to do are "unnatural" (since they require a large amount of generalization from the training set). This ultimately is an empirical question, and I think an interesting avenue for future work.

Specifically, I am curious if there are good automated ways of lower-bounding the complexity of circuits. It is impossible to do this well in general (cf. Kolmogorov complexity being uncomputable), but maybe there are good heuristics that work well in practice. Our first-order-patching method is one such heuristic, but it is lacking in the sense that it does not say how interpretable each component is. Perhaps if techniques like AC/DC or subnetwork probing are improved, they could give a better sense of circuit complexity.

Very exciting initiative. Thanks for helping run this. I think the co-working calendar link may be broken though.

KataGo's training is done under a ruleset where a white territory containing a few scattered black stones that would not be able to live if the game were played out is credited to white.

I don't think this statement is correct. Let me try to give some more information on how KataGo is trained.

Firstly, KataGo's neural network is trained to play with various different rulesets. These rulesets are passed as features to the neural network (see appendix A.1 of the original KataGo paper or the KataGo source code). So KataGo's neural network has knowledge of what ruleset KataGo is playing under.

Secondly, none of the area-scoring-based rulesets (of which modified and unmodified Tromp-Taylor rules are special instances) that KataGo has ever supported[1] would report a win for the victim for the sample games shown in Figure 1 of our paper. This is because KataGo only ignores stones a human would consider dead if there is no mathematically possible way for them to live, even if given infinite consecutive moves (i.e. the part of the board that a human would judge as belonging to the victim in the sample games is not "pass-alive").

Finally, due to the nature of MCTS-based training, what KataGo knows is precisely what KataGo's neural network is trained to emulate. This is because the neural network is trained to imitate the behavior of the neural network + tree-search. So if KataGo exhibits some behavior with tree-search enabled, its neural network has been trained to emulate that behavior.
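
To illustrate what "trained to imitate the neural network + tree-search" means, here is a simplified AlphaZero-style sketch: the policy target is the normalized visit counts from the search, and the network is penalized by cross-entropy for deviating from it. This is an assumption-laden simplification rather than KataGo's actual training code (KataGo has additional targets and details), and the function names and visit counts are hypothetical.

```python
import numpy as np

def policy_target_from_visits(visit_counts):
    """Turn per-move search visit counts into a probability distribution."""
    counts = np.asarray(visit_counts, dtype=float)
    return counts / counts.sum()

def cross_entropy(target, predicted, eps=1e-12):
    """Cross-entropy of the network's predicted policy against the search target."""
    predicted = np.asarray(predicted, dtype=float)
    return float(-np.sum(target * np.log(predicted + eps)))

# Made-up visit counts for three candidate moves at one position.
visits = [10, 30, 60]
target = policy_target_from_visits(visits)

# A network that reproduces the search distribution gets a lower loss
# than one that predicts a uniform policy.
loss_matching = cross_entropy(target, target)
loss_uniform = cross_entropy(target, [1 / 3, 1 / 3, 1 / 3])
```

Minimizing this loss pulls the raw network's move distribution toward whatever the search preferred, which is the sense in which behavior seen with tree-search enabled is also behavior the network was trained to emulate.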

I hope this clears some things up. Do let me know if any further details would be helpful!

[1] Look for "Area" on the linked webpages to see details of area-scoring rulesets.

One of the authors of the paper here. Really glad to see so much discussion of our work! Just want to help clarify the Go rules situation (which in hindsight we could've done a better job explaining) and my own interpretation of our results.

We forked the KataGo source code (github.com/HumanCompatibleAI/KataGo-custom) and trained our adversary using the same rules that KataGo was trained on.[1] So while our current adversary wins via a technicality, it was a technicality that KataGo was trained to be aware of. Indeed, KataGo is able to recognize that passing would result in a forced win by our adversary, but given a low tree-search budget it does not have the foresight to avoid this. As evhub noted in another comment on this post, increasing the tree-search budget solves this issue.

So TL;DR I do believe we have a genuine exploit of the KataGo policy network, triggering a failure that it was trained to avoid.

Additionally, the project is still ongoing and we are working on attacks that are adversarial in nature but win via other means (i.e. no weird rule technicalities). There are some promising preliminary results here which make me think that the current exploit is not just a one-off exploit but evidence of something more general.[2]

Yeah I wish I didn't have it. I would like to be able to drink socially.

Nice piece. My own Asian flush has definitely turned me away from drinking. I wanted to like drinking due to the culture surrounding it, but the side effects I get from alcohol (headache and asthma) make the experience quite miserable.

Glad you enjoyed the work and thank you for the comment! Here are my thoughts on what you wrote:

This depends on your definition of "understanding" and your definition of "tractable". If we take "understanding" to mean the ability to predict some non-trivial aspects of behavior, then you are entirely correct that approaches like mech-interp are tractable, since in our case it was mechanistic analysis that led us to predict and subsequently discover the California Attack[1].

However, if we define "understanding" as "having a faithful[2] description of behavior to the level of always accurately predicting the most-likely next token" and "tractability" as "the description fitting within a 100 page arXiv paper", then I would say that the California Attack is evidence that understanding the "forbidden fact" behavior is intractable. This is because the California Attack is actually quite finicky -- sometimes it works and sometimes it doesn't, and I don't believe one can fit in 100 pages the rule that determines all the cases in which it works and all the cases in which it doesn't.

Returning to your comment, I think the techniques of mech-interp are useful, and can let us discover interesting things about models. But I think aiming for "understanding" can cause a lot of confusion, because it is a very imprecise term. Overall, I feel like we should set more meaningful targets to aim for instead of "understanding".

Though the exact bit that you quoted there is kind of incorrect (this was an error on our part). The explanation in this blogpost is more correct: "some of these heads would down-weight anything they attended to, and could be made to spuriously attend to words which were not the forbidden word". We actually just performed an exhaustive search for which embedding vectors the heads would pay the most attention to, and used these to construct an attack. We have since amended the newest version of the paper (just updated yesterday) to reflect this.

By faithfulness, I mean a description that matches the actual behavior of the phenomenon. This is similar to the definition given in arxiv.org/abs/2211.00593. This is also not a very precisely defined term, because there is wiggle room. For example, is the float16 version of a network a faithful description of the float32 version of the network? For AI safety purposes, I feel like faithfulness should at least capture behaviors like the California Attack and phenomena like jailbreaks and prompt injections.