I've been told that I'm bad at important communication, so to counteract that, just pretend I wrote a better version of whatever comment you clicked on. Thanks! 😅

Here's my LessWrong,

This is my favorite YouTube video,

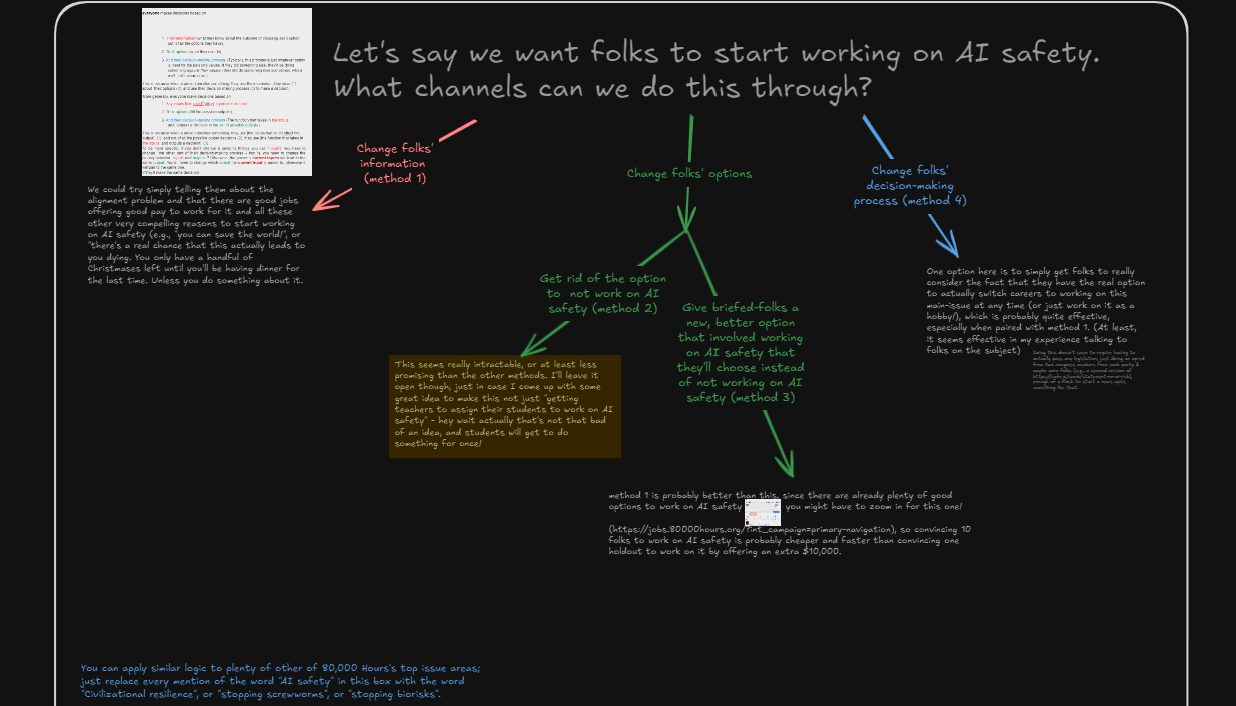

& I do world-modelling on the AMAZING whiteboard website Excalidraw as a hobby. It's so fun!

Yeah, it'd be a bonus to convince/inform folks that, if this works out, other people won't be evil,

& if we don't do that, some folks might still do bad things because they think other folks are bad,

but as long as one doesn't see a way this idea makes things actively worse, it's still a good idea!

Thanks for pointing that out tho. Will add this to the idea: making sure folks understand that, if this idea is implemented, other folks won't be as evil, so you can stop being as bad to them.

Thanks!