My current workflow for studying the internal mechanisms of LLMs

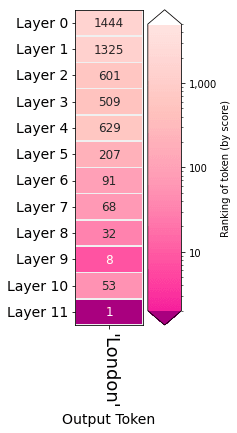

This post keeps track of my research workflow for studying LLMs. Since I do this in my spare time, I want to keep my pipeline as simple as possible.

- Step 1: Formulate a question about the model's behavior.
- Step 2: Find the influential layer for the...
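One common way to locate an influential layer is zero-ablation: remove a single layer's contribution to the residual stream, rerun the model, and measure how much a score tied to the question (for example, a logit difference between two candidate tokens) changes. The sketch below assumes that approach, which may not be the method used in this workflow, and runs it on a toy residual-stream model in plain Python. `run_model`, `layer_influence`, `most_influential_layer`, the toy layers, and the score function are all hypothetical names for illustration, not part of any library API.

```python
from typing import Callable, List, Optional

Vector = List[float]
Layer = Callable[[Vector], Vector]


def run_model(x: Vector, layers: List[Layer], skip: Optional[int] = None) -> Vector:
    """Run a toy residual-stream model; if `skip` is set, zero-ablate that layer."""
    resid = list(x)
    for i, layer in enumerate(layers):
        if i == skip:
            continue  # zero-ablation: this layer adds nothing to the residual stream
        update = layer(resid)
        resid = [r + u for r, u in zip(resid, update)]
    return resid


def layer_influence(x: Vector, layers: List[Layer],
                    score: Callable[[Vector], float]) -> List[float]:
    """Absolute change in the score when each layer is ablated in turn."""
    baseline = score(run_model(x, layers))
    return [abs(baseline - score(run_model(x, layers, skip=i)))
            for i in range(len(layers))]


def most_influential_layer(x: Vector, layers: List[Layer],
                           score: Callable[[Vector], float]) -> int:
    """Index of the layer whose ablation moves the score the most."""
    effects = layer_influence(x, layers, score)
    return max(range(len(effects)), key=effects.__getitem__)


# Hypothetical toy model: layer 1 writes a large value into dimension 0.
layers: List[Layer] = [
    lambda v: [0.1 * a for a in v],
    lambda v: [1.0, 0.0],
    lambda v: [0.0, 0.05],
]
# Hypothetical score, e.g. a logit difference between two candidate tokens.
score = lambda v: v[0] - v[1]
x = [0.5, 0.5]

print(layer_influence(x, layers, score))
print(most_influential_layer(x, layers, score))
```

On a real model, the same loop would ablate one layer's output via a forward hook instead of skipping a Python function, and the score would come from the model's logits on a prompt built for the Step 1 question.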