I’m pretty sure I got this advice from Yudkowsky at some point, in a post full of writing advice, but I can’t find the reference at the moment.

I think this is in The 5 Second Level, specifically the parts describing and quoting from S. I. Hayakawa's Language in Thought and Action.

I am super curious about how you conceptualize the relationship between the theorist's theory space problem and the experimentalist's high dimensional world in this case. For example:

- Liberally abusing the language of your abstraction posts, is the theory like a map of different info summaries to each other, and the experimentalist produces the info summaries to be mapped, or the theorist points to a blank spot in their map where a summary could fit?

- Or is that too well defined, and it is something more like the theorist only has a list of existing summaries, and uses these to try and give the experimentalist a general direction (through dimensionality)?

- Or could it be something more like the experimenter has a pile of summaries because their job is moving through the Markov blanket, and the theorist has the summarization rules?

Note that I haven't really absorbed the abstractions stuff yet, so if you've covered it elsewhere please link; and if you haven't wrangled the theory-space-vs-HD-world issue yet I'd still be happy if the answer was just some babbling.

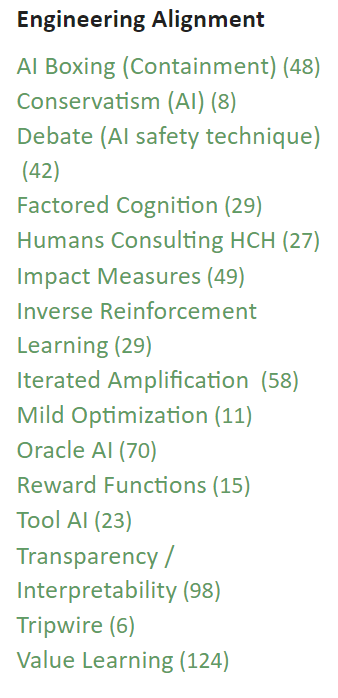

I have trouble imagining how you would get the Game Tree of Alignment exercise started. I would guess you'd take the common alignment approaches from Engineering Alignment under the AI Safety tag, then the next level would be why each of these doesn't work, and the next level how to patch them?

I’ve been using the summer 2022 SERI MATS program as an opportunity to test out my current best guesses at how to produce strong researchers. This post is an overview of the methods I’ve been testing, and the models behind them.

The Team Model

My MATS participants are in three-person teams with specialized roles for each person: theorist, experimentalist, and distillitator (“distiller” + “facilitator”). This team setup isn’t just a random guess at what will work; the parts are nailed down by multiple different models. Below, I’ll walk through the main models.

Jason Crawford’s Model & Bits-of-Search

In Shuttling Between Science and Invention, Jason Crawford talks about the invention of the transistor. It involved multiple iterations of noticing some weird phenomenon with semiconductors, coming up with a theory to explain the weirdness, prototyping a device based on the theory, seeing the device not work quite like the theory predicted (and therefore noticing some weird phenomenon with semiconductors), and then going back to the theorizing. The key interplay is the back-and-forth between theory and invention/experiment.

The back-and-forth between theory and experiment ties closely to one of the central metaresearch questions I think about: where do we get our bits from? The space of theories is exponentially huge, and an exponentially small fraction are true/useful. In order to find a true/useful theory, we need to get a lot of bits of information from somewhere in order to narrow down the possibility space. So where do those bits come from?

Well, one readily available source of tons and tons of bits is the physical world. By running experiments, we can get lots of bits. (Though this model suggests that most value comes from a somewhat different kind of experiment than we usually think about - more on that later.)

Conversely, blind empiricism runs into the high-dimensional worlds problem: brute-force modeling our high-dimensional world would require exponentially many experiments. In order to efficiently leverage data from the real world, we need to know what questions to ask and what to look at in order to eliminate giant swaths of the possibility space simultaneously. Thus the role of theory.

In order to efficiently build correct and useful models, we want empiricism and theory coupled together. And since it’s a lot easier to find people specialized in one or the other than people good at both, it makes sense to partner a theorist and an experimentalist together.
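To make the bits-of-search picture a little more concrete, here is a toy sketch (not part of the original models; the function names and all numbers are made up for illustration). It counts how many bits are needed to narrow a theory space down to its useful part, and how much a hypothetical theory shrinks a brute-force experimental search.

```python
import math
from itertools import product


def bits_needed(num_candidates: float, num_useful: float = 1.0) -> float:
    """Bits required to narrow num_candidates possibilities down to num_useful."""
    return math.log2(num_candidates / num_useful)


def full_factorial(num_knobs: int) -> list:
    """Every on/off setting of num_knobs binary knobs: 2**num_knobs experiments."""
    return list(product([0, 1], repeat=num_knobs))


# Assumption: theories are 100-bit strings, and 2**10 of them are true/useful.
print("bits to find a useful theory:", bits_needed(2**100, 2**10))

# Assumption: the world has 16 binary knobs; a theory claims only 3 of them matter.
blind = full_factorial(16)
guided = full_factorial(3)
print("experiments without theory:", len(blind))
print("experiments with theory:", len(guided))
print("bits supplied by the theory:", bits_needed(len(blind), len(guided)))
```

Even at 16 knobs the blind search is already tens of thousands of experiments, and it doubles with every knob added. The theory's contribution shows up as the bits it saves us from having to extract experimentally.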

Nate Soares’ Model

The hard step of theory work typically involves developing some vague intuitive concept/story, then operationalizing it in such a way that an intuitive argument turns into a rigorous mathematical derivation/proof. Claim which I got from Nate Soares: this process involves at most two people, and the second person is in a facilitator role.

(Note that the description here is mine; I haven’t asked Nate whether he endorses it as a description of what he had in mind.)

What does that facilitator role involve? A bare-minimum version is the programmer’s “Rubber Duck”: the rubber duck sits there and listens while the programmer explains the problem, and hopefully the process of explanation causes the programmer to understand their problem better. A skilled facilitator can add a lot of value beyond that bare minimum: they do things like ask for examples, try to summarize their current understanding back to the explainer, try to restate the core concept/argument, etc.

What the facilitator does not do is actively steer the conversation, suggest strategies or solutions or failure modes, or drop their own ideas in the mix. Vague intuitive concepts/stories are brittle, easy to accidentally smash and replace with some other story; you don’t want to accidentally overwrite someone’s idea with a different one. The goal is to take the intuitions in the explainer’s head, and accurately turn those intuitions into something legible.

A key part of a skilled facilitator’s mindset is that they’re trying to force-multiply somebody else’s thoughts, not show off their own ideas. Their role is to help the theorist communicate the idea, likely making it more legible in the process.

Eli Tyre’s Model

Eli Tyre was the source of the name “distillitator” for this role. What he originally had in mind was people who serve as both distiller and double-crux facilitator. On the surface, these two roles might sound unrelated, but they use the same underlying skills: both are (I claim) mainly about maintaining a mental picture of what another person is talking about, and trying to keep that picture in sync with the other person’s mental picture. That’s what drives facilitator techniques like asking for examples, summarizing back the facilitator’s current understanding, restating the core concept/argument, etc.; all of these are techniques for making the listener’s mental picture match the speaker’s mental picture. Those techniques aren’t just useful for making the facilitator’s mental model match the speaker’s mental model; the same information can help an audience build a matching mental picture, which is the core problem of distillation.

With that in mind, the “facilitator” part of the role naturally generalizes beyond just double-crux facilitation. It’s the same core skillset needed by a facilitator under Nate’s model, in order to help extract and formalize intuitive concepts/arguments for theory-building. It’s also a core skillset of good communication in general; a good distillitator can naturally facilitate many kinds of conversations. They’re a natural “social glue” on a team.

And, of course, they can produce clear and interesting write-ups of the team’s work for the rest of us.

John’s Model

Now we put all that together, with a few other minor pieces.

As a general rule, the number of people who can actively work together on the same thing in the same place at the same time is three. Once we get past three, either the work needs to be broken down into modular chunks, or someone is going to be passively watching at any given time. Four-person teams can work, but usually not better than three, and by the time we get to five it’s usually worse than three because subgroups naturally start to form.

Within the three-person team, we want people with orthogonal skillsets/predilections, because it is just really hard to find people who have all the skills. Even just finding people with any two of strong theory skills, experiment skills, and writing skills is hard, forget all three.

So, the model I recommend is one theorist, one experimentalist, and one distillitator.

That said, I do not think that people should be very tightly bound by their role during the team’s day-to-day work. There is a ton of value in everyone doing everything at least some of the time, so that each person deeply understands what the others are doing and how to work with them effectively. The rule I recommend is: "If something falls under your job/role, it is your responsibility to make sure it is done when nobody else is doing it; that does not necessarily mean doing it yourself". So, e.g., it is the facilitator’s job to facilitate in a discussion with nobody else facilitating. It is the theorist’s job to crank the math when nobody else is doing that. It is the experimentalist’s job to write the code for an ML experiment if nobody else is doing that. But it is strongly encouraged for each person to do things which aren’t “their job”. Also, of course, anyone could delegate, though it is then the delegator’s responsibility to make sure the delegatee is willing and able to do the thing.

For work on AI alignment and agent foundations specifically, I think experimentalists are easiest to recruit; a resume with a bunch of ML experience is usually a pretty decent indicator, and standard education/career pathways already provide most of the relevant skills. Distillitator skills are less explicit on a resume, but still relatively easy to test for - e.g. good writing (with examples!) is a pretty good indicator, and I expect the workshops below help a lot for practicing the distillitator skillset. Theory skills are the hardest to recognize, especially when the hopeful-theorist is not themselves very good at explaining things (which is usually the case).

Exercises & Workshops for Specific Skills

The workshops below are meant to train/practice various research skills. All of these skills are meant to be used on a day-to-day (or even minute-to-minute) basis when doing research - some when developing theory, some when coding, some when facilitating, some when writing, some for all four. I have no idea how many times or at what intervals the workshops should be used to install each skill, or even whether the workshops install the skills in a lasting way at all. I do know that people report pretty high value from just doing the workshops once each.

What Are You Tracking In Your Head? talks about a common theme in all of the skills in these workshops: they all involve mentally tracking some extra information while engaged in a task.

Giant Text File

Open up a blank text doc. Write out everything you can think to say about general properties of the systems found by selection processes (e.g. natural selection or gradient descent) in complex environments. Notes:

- This exercise was inspired by a similar exercise recommended by Nate Soares. Within this curriculum, its main purpose is to provide idea-fodder for the other exercises.

- For fields other than alignment/agency, obviously substitute some topic besides "general properties of the systems found by selection processes".

Prototypical Examples

There are a few different exercises here, which all practice the skill of mentally maintaining a “prototypical example” of whatever is under discussion.

First exercise: pick a random technical paper off Arxiv, from a field you’re not familiar with. Read through the abstract (and, optionally, the intro). After each sentence, pause, and try to sketch out a concrete prototypical example of what you think the paper is talking about. Don’t google everything unfamiliar; just make a best guess. When doing the exercise with a partner, the partner’s main job is to complain that your concrete prototypical example is not sufficiently concrete. They should complain about this a lot, and be really annoying about it. Testing shows that approximately 100% of people are not sufficiently annoying about asking for examples to be less vague/abstract and more concrete.

You might find it helpful to compare notes with another group midway, in case one group interpreted the instructions in a different way which works better.

After trying this with some papers, the second exercise is to do the same thing with some abstract concept or argument. One person explains the argument/concept, the other person constantly asks for concrete examples, and complains whenever the examples given are too vague/abstract. Again, approximately 100% of people are not annoying enough, so aim to overdo it.

Conjectures & Idea Extraction

A conjecture workshop is done in pairs. One person starts with some intuitive argument, and tries to turn it into a mathematical conjecture. The other person plays a facilitation role; their job is to help extract the idea from the conjecturer’s head.

The facilitator is the key to making this work, and it’s great practice for facilitation in general. When I’m in the facilitation role, I try to follow what my partner is saying and keep my own mental picture in sync with my partner’s. And that naturally pushes me to ask for examples, summarize back my current understanding, restate the core concept/argument, etc. And, of course, I avoid steering; I want to extract their idea, not add pieces to it myself or argue whether it’s right.

As with the prototypical examples, approximately 100% of people are not annoying enough when they’re in the facilitation role. Don’t be afraid to interrupt; don’t be afraid to keep asking for concrete examples over and over again.

Some very loose steps which might be helpful to follow for conjecturing:

These steps probably aren’t near optimal yet; play around with them.

Framing

Prototypical example of a framing exercise:

In place of “stable equilibrium”, you might try this with concepts like:

This exercise usually turns up lots of interesting ideas, so it’s fun to share them in small groups after each brainstorming phase.

There are two models by which framing exercises provide value.

First, the main bottleneck to applying mathematical concepts/tools in real life is very often noticing novel situations where the concepts/tools apply. In the framing exercise, we proactively look for novel applications, which (hopefully) helps us build an efficient recognition-template in our minds.

Second, generating examples which don’t resemble any we’ve seen before also usually pushes us to more deeply understand the idea. We end up looking at border cases, noticing which pieces are or are not crucial to the concept. We end up boiling down the core of the idea. That makes framing exercises a natural tool both for theory work and for distillation work.

Notably, framing exercises scale naturally to one’s current level of understanding. Someone who’s thought more about e.g. information channels before, and has seen more examples, will be pushed to come up with even more exotic examples.

Existing Evidence

If <theory/model> were accurate, what evidence of that would we already expect to see in the world? What does our everyday experience tell us?

Experiments are one way to get bits of information from the world, to narrow in on the correct/useful part of model-space. But in approximately-all cases, we already have tons of bits of information from the world!

In this exercise, we pick one claim, or one model, and try to come up with existing real-world implications/applications/instances of the claim/model. (For people who’ve already thought about the claim/model a lot, an additional challenge is to come up with implications/applications/instances which do not resemble any you’ve seen before, similar to the framing exercises.) Then, we ask what those real-world cases tell us about the original claim/model.

Claims/models from the Alignment Game Tree exercise (below) make good workshop-fodder for the Existing Evidence exercise. Best done in pairs or small groups, with individual brainstorming followed by group discussion.

Fast Experiment Design

Take some concept or argument, and come up with ways to probe it experimentally.

I mentioned earlier that the bits-of-search model suggests a different kind of experiment than we usually think about. Specifically: the goal is not to answer a question. We’re not trying to prove some hypothesis true or false. That would only be 1 measly bit, at most! Instead, we want a useful way to look at the system. We want something we can look at which will make it obvious whether a hypothesis is true or false or just totally confused, a lens through which we can answer our question but also possibly notice lots of other things too.

For example, in ML it’s often useful to look at the largest eigen/singular values of some matrix, and the associated eigen/singular vectors. That lets us answer a question like “is there a one-dimensional information bottleneck here?”, but also lots of other questions, and it potentially helps us notice phenomena which weren’t even on our radar before.
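A minimal sketch of that kind of "lens", assuming NumPy is available. The matrix here is a synthetic stand-in (a rank-one signal plus small noise), not a real network Jacobian, and `top_singular_summary` is a hypothetical helper name.

```python
import numpy as np


def top_singular_summary(matrix: np.ndarray, k: int = 5):
    """Return the k largest singular values, their right singular vectors,
    and the fraction of the squared Frobenius norm carried by the top one."""
    _, s, vt = np.linalg.svd(matrix, full_matrices=False)
    top_fraction = float(s[0] ** 2 / np.sum(s**2))
    return s[:k], vt[:k], top_fraction


# Synthetic stand-in for a Jacobian (an assumption for illustration only).
rng = np.random.default_rng(0)
u = rng.normal(size=(64, 1))
v = rng.normal(size=(1, 32))
jacobian = u @ v + 0.01 * rng.normal(size=(64, 32))

values, vectors, top_fraction = top_singular_summary(jacobian)
print("top singular values:", np.round(values, 3))
print("fraction of squared norm in the top direction:", round(top_fraction, 4))
```

A single dominant singular value is what a one-dimensional bottleneck would look like through this lens. The same printout would also show two or three large values, a slowly decaying spectrum, or a top singular vector pointing somewhere unexpected, none of which we had to ask about in advance.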

Also, of course, we want (relatively) fast experiments. The point is to provide a feedback signal for model-building, and a faster feedback loop is proportionately more useful than a slower feedback loop.

This is a partner/small group exercise. In practice, it relied pretty heavily on me personally giving groups feedback on how well their ideas “look at the system rather than answering a question”, so the exercise probably still needs some work to be made more legible.

Runtime Fermi Estimates

Walk through a program, and do a Fermi estimate of the runtime of the program and each major sub-block. (We used programs which calculated singular values/vectors of the Jacobian of a neural net.) For Fermi purposes, clock speed is one billion cycles per second.

This one is a pretty standard “What are you tracking in your head?” sort of exercise, and it’s very easy to get feedback on your estimates by running the program. I recommend doing the exercise in pairs.
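One way to write the estimates down so they can be checked afterwards, as a rough sketch: the network sizes and the per-block operation counts below are assumptions chosen for illustration, not figures from the workshop, and `fermi_seconds` and `measure_seconds` are hypothetical helper names.

```python
import time

CLOCK_HZ = 1e9  # one billion cycles per second, as in the exercise


def fermi_seconds(operations: float, cycles_per_op: float = 1.0) -> float:
    """Order-of-magnitude runtime: operations times cycles per operation, over clock speed."""
    return operations * cycles_per_op / CLOCK_HZ


def measure_seconds(fn, *args) -> float:
    """Wall-clock time of a single call, for comparing against an estimate."""
    start = time.perf_counter()
    fn(*args)
    return time.perf_counter() - start


# Hypothetical sizes: a net with 1e6 parameters, 10 outputs, 1000 inputs.
params, outputs, inputs = 1e6, 10, 1000
estimates = {
    # Assumed cost: roughly one multiply and one add per parameter.
    "forward pass": fermi_seconds(2 * params),
    # Assumed cost: one backward pass per output, each about twice a forward pass.
    "jacobian": fermi_seconds(outputs * 4 * params),
    # Assumed cost: about m^2 * n operations for an m x n matrix with m <= n.
    "svd": fermi_seconds(outputs * outputs * inputs),
}
for block, seconds in estimates.items():
    print(f"{block}: ~{seconds:.0e} s")
```

After writing the guesses down, wrap each real sub-block in `measure_seconds` and compare. Being off by a constant factor is expected; being off by several orders of magnitude usually means something was missing from the mental model (interpreter overhead, vectorization, memory traffic).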

Writing

These are exercises/workshops on writing specifically. The Prototypical Examples and Framing exercises are also useful as steps in the writing process.

Great Papers

Before the session, read any one of:

Read and take notes, not on the content, but on the writing.

During the session, compare notes and observations.

Concrete-Before-Abstract

The "concrete-before-abstract" heuristic says that, for every abstract idea in a piece of writing, you should introduce a concrete example before the abstract description. This applies recursively, both in terms of high-level organization and at the level of individual sentences.

Concrete-before-abstract is, I claim, generally a better match for how human brains actually work than starting with abstract ideas. I’m pretty sure I got this advice from Yudkowsky at some point, in a post full of writing advice, but I can’t find the reference at the moment. I’d say it’s probably the single highest-value writing tool I use (though often I’m lazy about it, including many places in this post).

In this workshop, take a post draft and do a round of feedback and editing focused entirely on the concrete-before-abstract pattern. Anywhere something abstract appears, the editor should request a concrete example beforehand. And, yes, the editor should ideally be really annoying about it.

Hook Workshop

Workshop the first few sentences of a post to (a) communicate a concrete picture of what the post is about, and (b) communicate why it’s interesting. Generate a few hooks, swap with a partner, give each other feedback, iterate.

Examples and stories are usually good.

Game Tree of Alignment

Collaboratively play out the "game tree of alignment": list strategies, how they fail, how to patch the failures, how the patches fail, and so on down the "game tree" between humanity and Nature. Where strategies depend on assumptions or possible ways-the-world could be, play through evidence for and against those assumptions.

When the tree becomes unmanageable (which should happen pretty quickly), look for recurring patterns - common bottlenecks or strategies which show up in many places. Consolidate.

During the MATS program, we had ~15 people write out the game tree as a giant nested list in a Google Doc. I found it delightfully chaotic, though I apparently have an unusually high preference for chaos, because lots of the participants complained that it was unmanageable. Dedicated red team members might help, and better organization methods would probably help (maybe sticky notes on a wall?).

Anyway, the main reason for this exercise is that (according to me) most newcomers to alignment waste years on tackling not-very-high-value subproblems or dead-end strategies. The Game Tree of Alignment is meant to help highlight the high-value subproblems, the bottlenecks which show up over and over again once we get deep enough into the tree (although they’re often nonobvious at the start). For instance, it’s not a coincidence that Paul Christiano, Scott Garrabrant, and myself have all converged to working on essentially-the-same subproblem, namely ontology identification. We came by very different paths, but it’s a highly convergent subproblem. The hope of playing through the Alignment Game Tree is that people will converge to subproblems like that faster, without spending years on low-value work to get there. (One piece of evidence: the Game Tree exercise is notably similar to Paul’s builder/breaker methodology; he converged to the ontology identification problem within months of adopting that methodology, after previously spending years on approaches I would describe as dead ends, and which I expect Paul himself will probably describe as dead ends in another few years.)

What’s Missing?

The MATS program is only three months long, and the training period was only three weeks. If I wanted to produce researchers with skill stacks like my own, I would need more like a year. There are two main categories of time-intensive material/exercises which I think would be needed to unlock substantially more value:

Technical Content

For the looooong version, see Study Guide.

I think it is probably possible-in-principle to get 80% of the value of a giant pile of technical knowledge by just doing framing exercises for all the relevant concepts/tools/models/theorems. However, that would require someone going through all the relevant subjects the long way, picking out all the relevant concepts, and making framing exercises for them. That would take a lot of work. But if you happen to be studying all this stuff anyway, maybe make a bunch of framing exercises?

Practice on Hard Problems

For each of these Hard Problems, I have spent at least one month focused primarily on that problem:

I don’t think these are necessarily the optimal problems to train on, and they’re not the only problems I’ve trained on, but they’re good examples of Hard Problems. Note that a crucial load-bearing part of the exercise is that your goal is to outright solve the Hard Problem, not merely try, not merely “make progress”. If it helps, imagine that someone you really do not like said very condescendingly that you’d never be able to solve the problem, and you really desperately want to show them up.

(FWIW, my own emotional motivation is more like… I am really pissed off at the world for its sheer incompetence, and I am even more pissed off at people for rolling over and acting like Doing Hard Things is just too difficult, and I want to beat them all in the face with undeniable proof that Doing Hard Things is not impossible.)

Training on Hard Problems is important practice, if you want to tackle problems which are at least hard and potentially Hard. It will build important habits, like “don’t do a giant calculation without first making some heuristic guesses about the result”, or “find a way to get feedback from the world about whether you’re on the right track, and do that sooner rather than later”, or “if X seems intuitively true then you should follow the source of that intuition to figure out a proof, not just blindly push symbols around”.

If done right, this exercise will force you to actually take seriously the prospect of trying to solve something which stumped everyone else; just applying the standard tools you learned in undergrad in standard ways ain’t gonna cut it. It will force you to actually take seriously that you need some fairly deep insight into the core of the problem, and you need systematically-good tools for finding that insight; just trying random things and relying on gradient descent to guide your search ain’t gonna cut it. It will force you to seriously tackle problems which you do not have the Social Status to be Qualified to solve. And ideally, it will push you to put real effort into something at which you will probably fail.