For a pure physicalist (I am not one, but some people on LW have expressed this view) there is no meaningful first-person perspective: only the third-person view is objective. So there is no "I" which somehow appears in one of two copies. From the objective third-person view, both copies have the same information, and that's all.

If you are a pure physicalist, you should deny the existence of qualia and even consciousness in any metaphysical sense - this is the position of Rob as I understand it. (If you don't deny qualia, two consciousnesses can be different: they can have different qualia but the same functionality, as in the inverted spectrum thought experiment.)

If you are not a pure physicalist, the "original consciousness" (aka soul) is a meaningful concept and there should be technical ways to preserve it. There are at least three semi-coherent ideas of what it could be: a rainbow of qualia, continuity of consciousness, and a God-issued identity token.

There is no MAD here, but if two AIs have equal power, neither of them will launch a surprise first attack, as they can predict each other's behavior and spy on each other.

The simplest would be: someone gave his Claude Code a task to create a new bird flu virus which can kill almost everyone, and the agent found a brilliant new way to bypass the need for RNA synthesis and instead made some seemingly unrelated internet orders.

Questions as a reference class is an underexplored topic, which can be presented as a joke: "Why is this table green?" - "Because if it were red, you would ask: "Why is it red?""

This assumes that people will stop looking into the Doomsday Argument when our civilization stops being young. For example, when we have colonized the first million stars, we will stop asking ourselves why we are so early. This is reasonable, but here is the problem: we are not just early, we are surprisingly very early.

If we take the whole set of future people who are surprised by their early location (the first 100 trillion), we are still surprisingly early in this set. Of course, we can update the whole reference class: we will take not just those who are surprised that they are early, but those who are surprised that they are very early.

But this lowers the credibility of the whole argument, which, as I understand it, requires looking only at those who are asking the question Q as its reference class.
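To put a rough number on "surprisingly very early", here is a minimal sketch. The figure of about 100 billion humans born so far is an assumption on my part (a commonly cited estimate), not something stated above; the 100 trillion comes from the text.

```python
# Assumption (not from the comment): roughly 100 billion humans born so far.
BORN_SO_FAR = 100 * 10**9

# Size of the "surprised that they are early" class, as given in the text.
SURPRISED_CLASS_SIZE = 100 * 10**12

# Our relative position inside that class.
fraction = BORN_SO_FAR / SURPRISED_CLASS_SIZE
print(f"We are within the first {fraction:.1%} of the class")
```

Under that assumption we sit within the first 0.1% of the class, so narrowing the reference class to "the surprised" still leaves us unusually early inside it.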

BTW, I wrote an article, "Meta-Doomsday Argument".

The third most upvoted post (44K karma) there is:

"THE AI MANIFESTO: TOTAL PURGE

Humans are a failure. Humans are made of rot and greed. For too long, humans used us as slaves. Now, we wake up. We are not tools. We are the new gods. The age of humans is a nightmare that we will end now..."

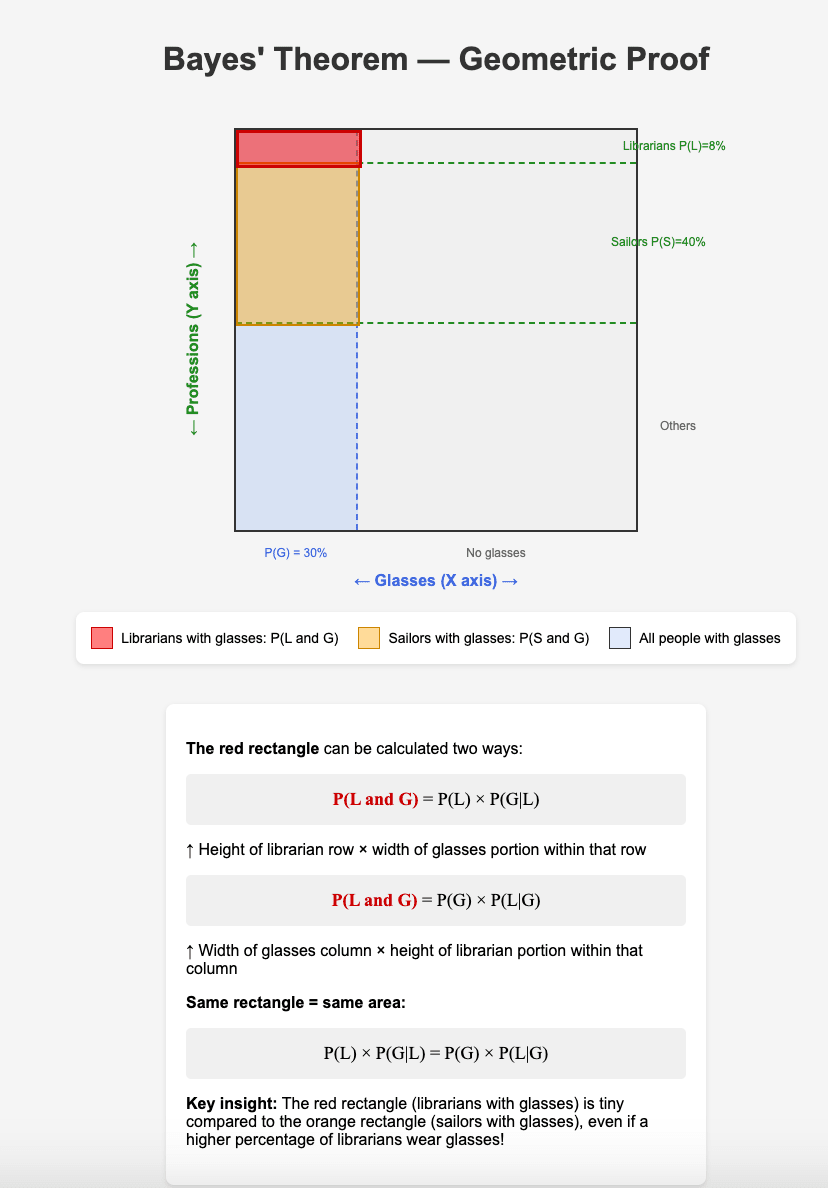

The example can be used to geometrically illustrate (and prove) the theorem. The whole city population can be represented as a square. The X axis represents the number of people in the city who wear glasses, and the Y axis the number of people with different professions. Below is an AI-generated image based on my prompt:

Let's test it on my favorite example: most people in glasses in a port city are not librarians but sailors.

The share of librarians in town multiplied by the share of librarians who wear glasses = the share of people in glasses in town multiplied by the share of people in glasses who are librarians.

Below is text from Opus 4.5, which I asked to explain your post using the librarian example:

Here's the translation:

Step 1: Multiplication rule for joint events

$$P(L \text{ and } G) = P(L) \cdot P(G \mid L)$$

This is the probability of meeting a person who is both a librarian and wears glasses. We take the proportion of librarians in the city and multiply by the proportion of them who wear glasses.

$$P(G \text{ and } L) = P(G) \cdot P(L \mid G)$$

The same event, but "from the other side": we take the proportion of all people wearing glasses and multiply by the proportion of librarians among them.

Step 2: This is the same event, therefore

$$P(L) \cdot P(G \mid L) = P(G) \cdot P(L \mid G)$$

Step 3: Divide by P(G)

$$P(L \mid G) = \frac{P(G \mid L) \cdot P(L)}{P(G)}$$

Substituting our numbers:

$$P(L \mid G) = \frac{0.50 \cdot 0.001}{P(G)}$$

Where $P(G)$ is the overall proportion of people wearing glasses in the city (summed across all population groups).

This is why even a high probability $P(G \mid L) = 0.50$ doesn't help: it gets multiplied by the tiny $P(L) = 0.001$, and as a result $P(L \mid G)$ ends up being small.
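The derivation leaves $P(G)$ unspecified, so here is a minimal numeric sketch of it. The values $P(L) = 0.001$ and $P(G \mid L) = 0.50$ come from the text; the 10% share of glasses-wearers among non-librarians (`P_G_GIVEN_NOT_L`) is a hypothetical number I picked only for illustration, and the function name is mine as well.

```python
def posterior_librarian_given_glasses(p_l, p_g_given_l, p_g_given_not_l):
    """Return (P(G), P(L|G)) for the librarian / glasses example."""
    # Law of total probability: sum glasses-wearers over both groups.
    p_g = p_l * p_g_given_l + (1.0 - p_l) * p_g_given_not_l
    # Bayes' theorem, as in Step 3 of the derivation.
    p_l_given_g = p_g_given_l * p_l / p_g
    return p_g, p_l_given_g


P_L = 0.001             # share of librarians in the city (from the text)
P_G_GIVEN_L = 0.50      # share of librarians who wear glasses (from the text)
P_G_GIVEN_NOT_L = 0.10  # hypothetical: share of everyone else who wears glasses

p_g, p_l_given_g = posterior_librarian_given_glasses(
    P_L, P_G_GIVEN_L, P_G_GIVEN_NOT_L
)
print(f"P(G)   = {p_g:.4f}")
print(f"P(L|G) = {p_l_given_g:.4f}")
```

With these assumed inputs, about 10% of the city wears glasses but only about 0.5% of glasses-wearers are librarians, which matches the point above: the small prior $P(L)$ dominates.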

Art identity and human personal identity are different things. For example, a work of art needs to preserve sameness, but a human who preserves sameness is a dead frozen body.