Third Time: a better way to work

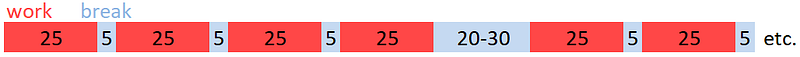

HOW CAN you be more productive? Instead of half-working all day, it’s better to work in focused stints, with breaks in between to recover. There are various ways to do this, but here’s my new technique, called Third Time. The gist of it is:

* Work for as long or as short as you like, until you want or need to break; then
* Break for up to one-third of the time you’ve just worked.

So after 15 minutes of dealing with emails, you could stop for up to 5 minutes. After an hour-long meeting, you can take a good 20-minute break. And if a task bores you after 3 minutes, you can even break then, but only for 1 minute! Breaks reward you for working, but proper breaks have to be earned.

Work stints can be any length; breaks are (up to) one-third of the time just worked

This kind of pattern is natural; research confirms that people tend to take longer breaks after working for longer. (One-third is just a recommendation; you can use other break fractions if you prefer.)

Third Time has many advantages over other techniques such as Pomodoro (which I’ll discuss later), but the key one is flexibility. It adapts to your attention span, energy, and schedule, as well as to other people and events. And Third Time isn’t just for your day-job: it suits anything that needs focus or effort, such as studying, practicing an instrument, personal admin, writing, or fitness training.

Using Third Time

Here’s an example of the basic procedure:

1. Note the time, or start a stopwatch
2. Work for as long or short as you like, until you want or need to break
3. Suppose you worked for 45 minutes. This earns you 45 ÷ 3 = 15 minutes off; so set an alarm for 15 minutes
4. Break until the alarm goes off
5. Go back to step 1.

Breaks

You needn’t take the full break. Maybe you have a tight deadline, an important customer calls, you’re keen to resume work, or only have a short gap before a meeting. Whatever the reason, if you end a break (say) 5 minutes early, add 5 minutes to your next brea
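
The break arithmetic described above can be sketched in a few lines of Python. This is only an illustration: the names `earned_break`, `unused_break`, and `BREAK_FRACTION` are made up for this example and are not part of any official Third Time tool. The sketch also assumes that minutes left over from a shortened break are simply added to the next break allowance.

```python
from fractions import Fraction

# One-third is the recommended break fraction; other fractions are allowed.
BREAK_FRACTION = Fraction(1, 3)


def earned_break(worked_minutes, carried_over=0, fraction=BREAK_FRACTION):
    """Return the maximum break (in minutes) after a work stint.

    carried_over is unused time from an earlier shortened break
    (an assumption about how leftover minutes are handled).
    """
    return worked_minutes * fraction + carried_over


def unused_break(allowed_minutes, taken_minutes):
    """Return the minutes left over when a break ends early (never negative)."""
    return max(allowed_minutes - taken_minutes, 0)


# 45 minutes of work earns 45 / 3 = 15 minutes off.
allowed = earned_break(45)

# Ending that break 5 minutes early leaves 5 minutes to carry over.
leftover = unused_break(allowed, taken_minutes=10)

# A later 30-minute stint then allows 10 + 5 = 15 minutes.
next_allowed = earned_break(30, carried_over=leftover)
```

Using `Fraction` keeps the results exact, so a 3-minute stint yields exactly 1 minute rather than a rounded floating-point value.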

To clarify, I think your criticism of utilitarianism/consequentialism targets a naive form of it that only looks at first-order effects, not ‘proper’ utilitarianism. But yes, no doubt many are naive like this, and it’s very hard to evaluate second- and higher-order effects (such as exploitation and coordination).

Also, this kind of naivety is particularly common on the left.