I hadn't considered steering vectors before, but yes that's correct.

Just looking at Shazeer's paper (Appendix A)

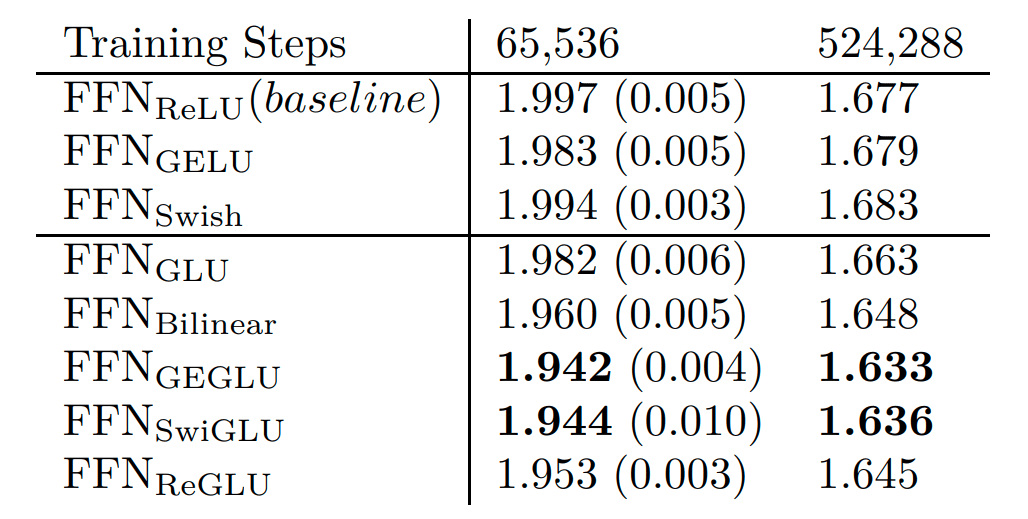

All of the GLU models performed better (lower is better) and the GLU models have a bilinear encoder (just w/ & w/o a sigmoid/GeLU/Swish/ReLU function). So in fact it does better (if this is what you meant by a dual encoder).

HOWEVER, we could have 3 encoders, or 100! This should store even more information, and would probably perform better per step, but would take up more GPU VRAM and/or take longer to compute each step.

In this post, though, I used wall clock time as a measure of training efficiency. Hand-wavy:

loss/step * time/step

(maybe it should be divided to make it loss/time?)
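On the parenthetical: dividing is what makes the units work out. A quick sketch, where "loss improvement" is just my shorthand for how much the loss drops:

$$\frac{\text{loss improvement}}{\text{time}} = \frac{\text{loss improvement} / \text{step}}{\text{time} / \text{step}}$$

The product form would have units of loss times time per step squared, which doesn't directly rank runs by wall clock efficiency.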

A full 3rd order tensor is much larger, whereas this parametrization is the CP-decomposition form. This is the "official reason" when I'm really just building off Dooms et al. (I've never actually tried training the full tensor though!)
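To make the size difference concrete, here's a minimal sketch assuming the bilinear layer has the form `D @ ((W @ x) * (V @ x))` (the names `W`, `V`, `D` and all sizes are my own choices for illustration). It checks on a toy example that the factored form matches the full 3rd order tensor, then compares parameter counts at GPT2-small-like sizes.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_hidden = 16, 64  # toy sizes, chosen only for illustration

# CP-style factors of a bilinear layer (names are assumptions).
W = rng.normal(size=(d_hidden, d_model))
V = rng.normal(size=(d_hidden, d_model))
D = rng.normal(size=(d_model, d_hidden))

def bilinear_cp(x):
    """Factored form: elementwise product of two projections, then project out."""
    return D @ ((W @ x) * (V @ x))

# Full 3rd order tensor: T[o, i, j] = sum_h D[o, h] * W[h, i] * V[h, j]
T = np.einsum("oh,hi,hj->oij", D, W, V)

def bilinear_full(x):
    """Same map, written as a contraction with the full tensor."""
    return np.einsum("oij,i,j->o", T, x, x)

x = rng.normal(size=d_model)
assert np.allclose(bilinear_cp(x), bilinear_full(x))

def param_counts(d_model, d_hidden):
    """(factored params, full-tensor params) for one layer, no biases."""
    return 3 * d_model * d_hidden, d_model ** 3

cp, full = param_counts(768, 3072)
print(cp, full, full // cp)  # 7077888 452984832 64
```

So at those sizes the full tensor would be 64x larger than the factored form, before even thinking about compute per step.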

Re init: the init for modded gpt at that fork was kind of weird, but I'm pretty sure most standard inits prevent that. I am using RMSNorm which can be treated as a tensor network as well (I could maybe dm explanation, it's a forthcoming resource from Thomas). I'm also normalizing Q & K which isn't a tensor network, BUT compositionality is on a spectrum (maybe I am too). So this does mean a small portion of the model isn't a tensor network.

Ideally we can work around this!

Yep! But I do think the highest priority thing would be actually doing ambitious interp w/ this, although, if we had 100 people working on this (instead of ~4-5 full time?), a few working on the scaling laws would be good.

TNs are more amenable to optimizing exactly what we want in a mathematically precise way, so optimizing for this (to achieve ambitious mech interp) would incur an additional cost in capabilities, just fyi.

Not claiming to understand your work, but my intuition is that a bilinear layer would be easier to prove things about than an MLP, while also being closer to SOTA. Some (maybe) useful properties for your case:

- bilinear

- directions are what matters for relationships between components (between any part of any matrix, where the input can be considered just another matrix); scale doesn't affect compositionality.

- No implicit computation (eg different polytopes of MLPs), just the structure in the weights.

- a polynomial

- can be turned into a tensor (& combined into a larger tensor when you have multiple layers)

- Note: a bilinear layer is already a CP decomposition (generalization of SVD, maintaining the outer product format), but combining two bilinear layers, you get a 5th order tensor, which you can decompose as well.
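As a sanity check on the last two bullets, here's a small sketch that stacks two bilinear layers and builds the combined 5th order tensor explicitly. It assumes plain layers of the form `D @ ((W @ x) * (V @ x))` with no residual connection or normalization in between, and the sizes and helper names are made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
d, h = 4, 6  # tiny sizes so the 5th order tensor stays small (4**5 entries)

def make_layer():
    """Random factors (W, V, D) for one bilinear layer (hypothetical helper)."""
    return (rng.normal(size=(h, d)),
            rng.normal(size=(h, d)),
            rng.normal(size=(d, h)))

def apply_layer(layer, x):
    W, V, D = layer
    return D @ ((W @ x) * (V @ x))

def as_tensor(layer):
    """3rd order tensor T[o, i, j] = sum_h D[o, h] W[h, i] V[h, j]."""
    W, V, D = layer
    return np.einsum("oh,hi,hj->oij", D, W, V)

layer1, layer2 = make_layer(), make_layer()
T1, T2 = as_tensor(layer1), as_tensor(layer2)

# Layer 2 takes layer 1's output on both of its input legs, so the
# composition has one output index and four input indices.
T12 = np.einsum("opq,pij,qkl->oijkl", T2, T1, T1)

x = rng.normal(size=d)
y_from_tensor = np.einsum("oijkl,i,j,k,l->o", T12, x, x, x, x)
y_from_layers = apply_layer(layer2, apply_layer(layer1, x))

assert T12.ndim == 5
assert np.allclose(y_from_tensor, y_from_layers)
```

The output is a degree-4 polynomial in `x`, and `T12` is the object you could then try to decompose.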

For performance, folks tend to use SwiGLU, and a bilinear layer is similar to it and almost as performant (Table 1 in Noam Shazeer's paper). Interestingly enough, it's better than MLPs w/ ReLU/GeLU/Swish.
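For reference, a rough sketch of how these variants relate, following the `(gate(xW) * xV) W2` form from Shazeer's paper with biases omitted. The function and variable names are mine, and the sizes are toy values.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def swish(z):
    return z * sigmoid(z)

def relu(z):
    return np.maximum(z, 0.0)

def glu_ffn(x, W, V, W2, gate=None):
    """Gated FFN: (gate(x @ W) * (x @ V)) @ W2.

    gate=None gives the bilinear layer; gate=swish gives SwiGLU;
    gate=relu gives ReGLU; gate=sigmoid gives the original GLU.
    """
    a = x @ W
    if gate is not None:
        a = gate(a)
    return (a * (x @ V)) @ W2

rng = np.random.default_rng(0)
d_model, d_hidden = 8, 32  # toy sizes for illustration only
W = rng.normal(size=(d_model, d_hidden))
V = rng.normal(size=(d_model, d_hidden))
W2 = rng.normal(size=(d_hidden, d_model))
x = rng.normal(size=d_model)

y_bilinear = glu_ffn(x, W, V, W2)
y_swiglu = glu_ffn(x, W, V, W2, gate=swish)
y_reglu = glu_ffn(x, W, V, W2, gate=relu)
```

The only difference between the bilinear layer and SwiGLU here is the elementwise `gate` on one branch, which is why dropping it costs so little structure-wise.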

Great work!

Listened to a talk from Philipp on it today and am confused on why we can't just make a better benchmark than LDS?

Why not just train eg 1k different models, each with 1 datapoint left out? LDS is noisy, so I'm assuming 1k datapoints that exactly capture what you want is better than 1M datapoints that are an approximation. [1]

As an estimate, Nano-GPT speedrun takes a little more than 2 min now, so you can train 1001 of these in:

2.33*1k/60 ≈ 39 hrs on 8 H100s, which is maybe 4 B200s, which is $24/hr, so ~$1k.
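Spelling out that back-of-the-envelope estimate (the 2.33 min per run and $24/hr figures are the rough numbers from above, not quotes from any provider):

```python
minutes_per_run = 2.33    # approximate speedrun time per model
n_runs = 1001             # 1k leave-one-out models + 1 baseline
dollars_per_hour = 24.0   # assumed rental price for the whole node

total_hours = minutes_per_run * n_runs / 60
total_cost = total_hours * dollars_per_hour

print(f"{total_hours:.1f} hours, ${total_cost:.0f}")
```

That comes out to roughly 39 hours and a bit over $900, so "~$1k" holds as long as the per-run time and hourly price are in the right ballpark.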

And that's getting a 124M param LLM trained on 730M tokens up to GPT2 level. Y'all's quantitative setting for Fig 4 was a 2M parameter ResNet on CIFAR-10 on 5k images, which would be much cheaper to do (although the GPT2 one has been very optimized, so you could just do the speedrun one but on less data).

LDS was shown to be very noisy, but a colleague mentioned that this could be because 5k images is a very small amount of data. I guess another way to validate LDS is running the expensive full-training run on a few datapoints.

Confusion on LDS Hyperparameter Sweep Meaning

Y'all show in Fig 4 that there are large error bars across seeds for the different methods. This ends up being a property of LDS's noisiness, as y'all show in Figures 7-8 (where BIF & EK-FAC are highly correlated). This means that, even using noisy LDS, you don't need to re-run 5 times if a new method is much better than previous ones (only if it's narrowly better).

What I'm confused about is why you retrained on 100 different ways to resample the data at each percentage? Is this just because LDS is noisy, so you're doing the thing where randomly sampling 100 datapoints 500 times gives you a good approximation of the causal effect of each individual datapoint (or that is what LDS actually is)? Was there high variance in the relative difference between methods across the 100 retrained models?
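For concreteness, here's a sketch of LDS as I understand the usual definition: for each random subset, sum the attribution scores of the included datapoints, then take the Spearman rank correlation between those predictions and the outputs of models actually retrained on each subset. Everything below is synthetic (the "retrained outputs" are a made-up additive model plus noise), so it only illustrates the metric, not y'all's actual setup.

```python
import numpy as np

def ranks(v):
    """Ranks 0..n-1; assumes no ties, which is fine for continuous data."""
    r = np.empty(len(v))
    r[np.argsort(v)] = np.arange(len(v))
    return r

def spearman(a, b):
    return np.corrcoef(ranks(a), ranks(b))[0, 1]

def lds(attributions, subset_masks, retrained_outputs):
    """attributions: (n_train,) scores for one query point.
    subset_masks: (n_subsets, n_train) booleans, True = kept in training set.
    retrained_outputs: (n_subsets,) measured output after retraining."""
    predicted = subset_masks.astype(float) @ attributions
    return spearman(predicted, retrained_outputs)

rng = np.random.default_rng(0)
n_train, n_subsets = 200, 100

# Synthetic stand-in for "the true effect of each datapoint".
true_effect = rng.normal(size=n_train)
masks = rng.random((n_subsets, n_train)) < 0.5
# Stand-in for retraining: additive effects plus seed-to-seed noise.
outputs = masks.astype(float) @ true_effect + rng.normal(size=n_subsets)

good_method = true_effect + 0.3 * rng.normal(size=n_train)
random_method = rng.normal(size=n_train)

lds_good = lds(good_method, masks, outputs)
lds_random = lds(random_method, masks, outputs)
print(lds_good, lds_random)
```

In this toy version the retraining noise puts a ceiling on the score even for a perfect method, which is the kind of effect I'd guess is behind the wide error bars.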

Other Experiments

Just wild speculation, but there are other things data attribution could target besides predicting the output. When a model "groks" something, there will be some datapoints that were more important for that happening, and those should show up in an ideal data attribution method.

Similar w/ different structures forming in the dataset (which y'all's other paper shows AFAIK).

[Note: there's a decent chance I've terribly misunderstood y'all's technique or misread the technical details, so corrections are appreciated]

[1] It initially seemed confusing on how to evaluate this, but I think we need to look at the variance over the distribution of datapoints. If BIF is consistently more accurate than EK-FAC over eg 100 randomly sampled datapoints, then that's a good sign for BIF; however, if there's a high level of variance, then we'd need more data to differentiate between the two. I do think higher quality data attribution methods would have higher signal, so you'd need less data. For example, I predict that BIF does better than Trak on ~all datapoints (but this is an empirical question).

I was actually expecting Penny to develop dystonia coincidentally, and the RL would tie-in by needing to be learned in reverse ie optimizing from dystonic to normal. It is a much more pleasant ending than the protagonist's tone the whole way through.

If I was writing a fanfic of this, I'd keep the story as is (+ or - the last paragraph), but then continue into the present moment which leads to the realization.

It's very exciting to have an orthogonal research direction that finds these ground truth features, which might possibly even generalize(!!). Please do report future results, even if negative (though your Malladi et al link is some evidence in the positive).

It's also very confusing since I'm unsure how this all fits in with everything else? This clearly works in these cases. SAEs clearly work in some cases as well (and same w/ the parameter decomposition research), but what's the "Grand Theory of NN Interp" that explains all of these results?

In general, I believe it's very important that we hedge our bets on research directions for interp. The main reason is that one of them may actually pan out, but even if not, they already provide unique pieces of evidence for later researchers (maybe us, maybe LLMs, lol) to hopefully figure out that "Grand Theory of NN Interp".

Thanks Charlie:)

I'm confused on what you're referring to. Bilinear layers are scale invariant (up to an overall factor) by bilinearity:

$$\text{Bilinear}(ax) = a^2 \, \text{Bilinear}(x)$$

So x could be the input-token, a vector d (from the previous bilinear layer), or a steering vector added in, but it will still produce the same output vector up to the factor $a^2$ (and affect the same hidden dims of the bilinear layer in the same proportions).

Another way to say this is that for:

$$y = \text{Bilinear}(ax)$$

The percentage of attribution of each weight in bilinear w/ respect to y is the same regardless of a, since to compute the percentage, you'd divide by the total so that cancels out scaling by a.

This also means that, solely from the weights, you can trace the computation done by injecting this steering vector.
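A quick numerical check of the scaling claims, assuming the layer is `D @ ((W @ x) * (V @ x))` and using per-hidden-unit contributions as one (of several possible) ways to define "percentage of attribution". Names and sizes are made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_hidden, d_out = 6, 10, 6  # toy sizes
W = rng.normal(size=(d_hidden, d_in))
V = rng.normal(size=(d_hidden, d_in))
D = rng.normal(size=(d_out, d_hidden))

def contributions(x):
    """C[o, h]: how much hidden unit h adds to output coordinate o."""
    return D * ((W @ x) * (V @ x))  # broadcasts the hidden vector over rows

def bilinear(x):
    return contributions(x).sum(axis=1)

def attribution_shares(x):
    """Each contribution as a fraction of its output coordinate's total."""
    C = contributions(x)
    return C / C.sum(axis=1, keepdims=True)

x = rng.normal(size=d_in)  # stands in for a token, a direction d, or a steering vector
a = 3.0

# Scaling the input by a scales the output by a**2 ...
assert np.allclose(bilinear(a * x), a**2 * bilinear(x))
# ... so the output direction and the attribution shares don't change.
assert np.allclose(attribution_shares(a * x), attribution_shares(x))
```

The `a**2` shows up in both the numerator and the denominator of the shares, which is the cancellation described above.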

[*Caveat: a bilinear layer computes interactions between two things. So you can compute the interaction between BOTH (1) the steering vector and itself and (2) the steering vector w/ the other directions d from previous layers. You CAN'T compute how it interacts w/ the input-token solely from the weights, because the weights don't include the input token. This is a bit of a trivial statement, but I don't want to overstate what you can get]

Overall, my main confusion w/ what you wrote is what an activation that is an entire layer or not an entire layer means.