status: typed this on my phone just after waking up. Saw someone asking for my opinion on another trash static-dataset-based eval, and also for my general method for evaluating evals. A bunch of stuff here is unfinished.

working on this course occasionally: https://docs.google.com/document/d/1_95M3DeBrGcBo8yoWF1XHxpUWSlH3hJ1fQs5p62zdHE/edit?tab=t.20uwc1photx3

Probably not. Is the thing it measures something that actually has multiple possible causes, or just one? An evaluation is, fundamentally, a search process. Searching for a more specific thing, and making your process not ping for anything other than that one specific thing, makes it more useful when searching through something as very noisy as an AI model. Most evals don't even claim to measure one precise thing at all. For the ones that do: can you think of a way to split that up into more precise things? If so, there are probably at least n more things to split it into, with n negatively correlated with how good you are at this.

Sources of useful data about the model, from least useful to most useful:

- Outputs
- Logits
- Activations
- Weights

This is also the order of increasing measurement difficulty, but the data is useful enough that the engineers behind any serious eval (there is about one close-to-semi-serious eval in the world atm, that I know of) would tackle the difficulty by trying harder.
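A toy illustration, in Python, of why logits sit above outputs in that ordering: two hypothetical models can give the identical output while their logits say very different things about how confident each one is. The vocabulary and logit values are made up; no real model or library is involved.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_output(logits, vocab):
    """What an output-only eval sees: just the single top token."""
    return vocab[max(range(len(logits)), key=lambda i: logits[i])]


# Toy vocabulary and made-up logits for two hypothetical models.
VOCAB = ["yes", "no"]
confident = [5.0, -5.0]
borderline = [0.1, 0.0]

if __name__ == "__main__":
    for name, logits in [("confident", confident), ("borderline", borderline)]:
        probs = softmax(logits)
        print(name, greedy_output(logits, VOCAB), [round(p, 3) for p in probs])
```

Both models answer "yes", so an output-only eval scores them the same; the logits show one is near-certain and the other is close to a coin flip.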

Other than myself and people I've advised, there's one group of people trying to red team evals atm. Trying to red team an eval at all isn't necessarily terrible, but the red teaming will likely be terrible, and they'll be vastly overconfident in the novelty and value of their work from that tiny bit of semi-rigorous-looking motion.

We ask the AI to help make us smarter.

this may make little to negative sense, if you don't have a lot of context:

Thinking about how I've been trying to bring together Love and Truth: Vyas already talked about this in the Upanishads. "Having renounced (the unreal), enjoy (the real). Do not covet the wealth of any man." Having renounced lies, enjoy the truth. And my recent thing has been trying to do more of exactly that: enjoying. And 'do not covet the wealth of any man' includes ourselves. So not being attached to the outcomes of my work, and enjoying it as its own thing; whether it succeeds or fails, either way I can enjoy the present moment. This doesn't mean just enjoying things no matter what. I'll enjoy a path more if it brings me to success, since that's closer to Truth, and that is enjoying the Real.

The Real truth is that each time I do something, try something, reach out and explore where I'm uncertain, I'm learning more about what's Real. There are words missing here that would make my Words more True: some I was able to say but didn't, some I don't know how to say, and some I don't know should be here. But either way, I have found enjoyment in writing them.

the way you phrased it there seems fine

Too, too much of the current alignment work is not only not useful, but actively bad and making things worse. The most egregious example of this, to me, is capability evals. Capability evals, like any eval, can be useful for seeing which algorithms are more successful at finding optimizers that are good at tasks. In a world where it seems like intelligence generalizes, this means that every public capability eval, like FrontierMath, EnigmaEval, Humanity's Last Exam, etc., helps AI capabilities companies figure out which algorithms to invest more compute in, and test new algorithms.

We need a very, very clear differentiation between what precisely is helping solve alignment and what isn't.

But there will be the response of 'we don't know for sure what is or isn't helping alignment since we don't know what exactly solving alignment looks like!!'.

Having ambiguity and unknowns due to an unsolved problem doesn't mean that literally every single thing has an equal chance of being useful, and I challenge anyone to say so seriously and honestly.

We don't have literally zero information, so we can certainly make some estimates and predictions. And it seems quite a safe prediction to me that capability evals help capabilities much, much more than alignment. I don't think they buy more time for alignment to be solved either; they do the opposite.

To put it bluntly - making a capability eval reduces all of our lifespans.

It should absolutely be possible to make this. Yet it has not been done. We can spend many hours speculating as to why. And I can understand that urge.

But I'd much, much rather just solve this.

I will bang my head on the wall again and again and again and again. So help me God, by the end of January, this is going to exist.

I believe it should be obvious why this is useful for solving alignment and for humanity surviving in general.

But in case it's not:

Good idea - I advise a higher amount, spread over more people. Up to 8.

- Yep. 'Give good advice to college students and cross-subsidize events a bit, plus gentle pressure via norms to be chill about the wealth differences' is my best current answer. Kinda wish I had a better one.

Maybe some slight nudges, if any, toward politics being something that gives people a safety net, so that everyone has the same foundation to fall back on? That way, even if there are wealth differences, there are fewer large wealth-enabled stresses.

Working on a meta plan for solving alignment. I'd appreciate feedback & criticism, please; the more precise the better. Feel free to use emoji reactions if writing a reply you'd be happy with feels taxing.

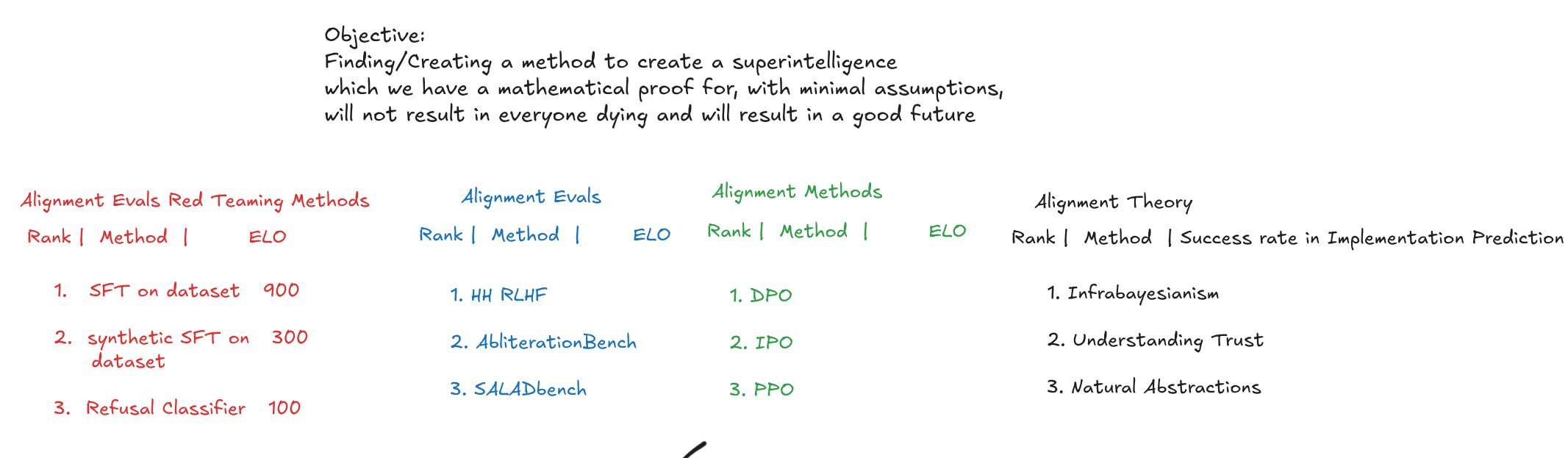

Diagram for visualization: items in the tables are just stand-ins, and any ratings and numbers are for illustration only, not actual rankings or scores at this moment.

Red and Blue teaming Alignment evals

Make lots of red teaming methods to reward hack alignment evals

Use this to find actually useful alignment evals, then red team and reward hack those too. Find better methods of reward hacking and red teaming, then find better ways of doing alignment evals that are resistant to those methods and also reliably find the preferences of the model. Keep doing this constantly, to get better and better alignment evals and better and better methods for reward hacking them. Also host events for other people to do this and learn to do this.

Alignment methods

Then apply different post-training methods, implementing lots of different alignment methods, and see how highly each one scores across the alignment evals we know are robust. Use this to find patterns in which types of method seem to work more or less well.
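A minimal Python sketch of this scoring step, assuming the red teaming stage has already marked each eval as robust or not, that scores are normalized to [0, 1], and that a plain mean over robust evals is an acceptable summary. The method names, eval names and numbers are hypothetical placeholders, in the same spirit as the stand-in numbers in the diagram.

```python
from statistics import mean

# Hypothetical placeholder scores in [0, 1]; NOT real results.
# Keys: post-training / alignment method -> {eval name -> score}.
SCORES = {
    "method_a": {"eval_1": 0.8, "eval_2": 0.6, "eval_3": 0.9},
    "method_b": {"eval_1": 0.9, "eval_2": 0.9, "eval_3": 0.1},
    "method_c": {"eval_3": 0.95},
}

# Assumed output of the red teaming stage: evals nobody has managed to reward hack yet.
ROBUST_EVALS = {"eval_1", "eval_2"}


def rank_methods(scores, robust_evals):
    """Rank methods by mean score over robust evals only.

    Scores on evals outside `robust_evals` are ignored, since a high score
    there may just mean the eval was gamed. Methods with no robust scores
    are dropped from the ranking.
    """
    ranked = []
    for method, per_eval in scores.items():
        kept = [score for name, score in per_eval.items() if name in robust_evals]
        if kept:
            ranked.append((method, mean(kept)))
    # Highest mean first.
    ranked.sort(key=lambda pair: pair[1], reverse=True)
    return ranked


if __name__ == "__main__":
    for method, score in rank_methods(SCORES, ROBUST_EVALS):
        print(f"{method}: {score:.2f}")
```

Here method_c drops out entirely and method_a's high eval_3 score doesn't count, because eval_3 hasn't survived red teaming. Looking for patterns would then mean grouping these rows by whatever features the methods share.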

Theory

Then do theory work to try to figure out (or guess) why those methods work more or less well, and based on this, make some hypotheses about new methods that will work better and new methods that will work worse.

Then rank the theory work by how minimal its assumptions are, how well it predicted the results of the implementations, and how highly it scores in peer review.
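One way this ranking could be made concrete, as a hedged Python sketch. The three criteria come from the plan, but how to combine them doesn't; this assumes a lexicographic order (fewest assumptions first, then prediction accuracy, then peer review score), which fits the emphasis on minimal assumptions in the end goal. The `TheoryResult` fields and example entries are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TheoryResult:
    """Hypothetical record for one piece of theory work."""
    name: str
    num_assumptions: int        # fewer is better
    prediction_accuracy: float  # fraction of implementation outcomes it predicted correctly, in [0, 1]
    peer_review_score: float    # e.g. mean reviewer score; higher is better


def rank_theories(theories):
    """Sort best-first: fewest assumptions, then best predictions, then best reviews."""
    return sorted(
        theories,
        key=lambda t: (t.num_assumptions, -t.prediction_accuracy, -t.peer_review_score),
    )


if __name__ == "__main__":
    # Made-up entries for illustration only.
    theories = [
        TheoryResult("theory_x", num_assumptions=3, prediction_accuracy=0.8, peer_review_score=7.0),
        TheoryResult("theory_y", num_assumptions=1, prediction_accuracy=0.6, peer_review_score=5.0),
        TheoryResult("theory_z", num_assumptions=1, prediction_accuracy=0.9, peer_review_score=6.0),
    ]
    for t in rank_theories(theories):
        print(t.name)
```

A weighted sum of the three criteria is the obvious alternative. The lexicographic key avoids picking weights, at the cost of letting a single extra assumption outweigh any gap in predictive accuracy.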

End goal:

Alignment evals that no one can find a way to reward hack/red team and that really precisely measure the preferences of the models.

Alignment methods that seem to score highly on these evals.

A very strong theoretical understanding of what makes this alignment method work and *why* it actually learns the preferences, plus theory for how those preferences will or won't scale to produce futures where everyone lives or dies. The theoretical work should have as few assumptions as possible. The aim is a mathematical proof with minimal assumptions, written very clearly and easy to understand, so that lots and lots of people can understand and criticize it. Robustness through obfuscation is a method of deception, intentional or not.

Current Work:

Hosting Alignment Evals hackathons and making Alignment Evals guides, to make better alignment evals and red teaming methods.

Making lots of Qwen model versions whose only difference is the post-training method.