Epoch AI released a GATE Scenario Explorer

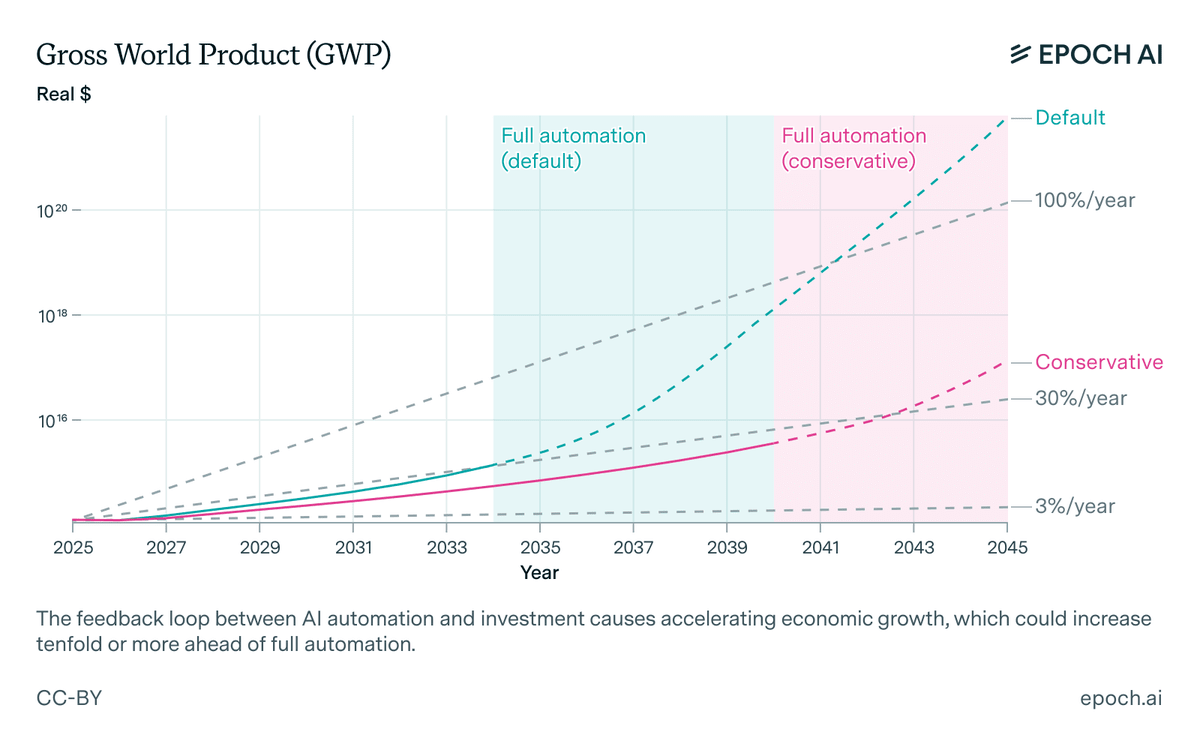

I think it's easier to discuss AI progress in terms of economic growth than to focus only on the scale of the largest training runs and the compute used. From their X announcement:

> We developed GATE: a model that shows how AI scaling and automation will impact growth.
> ...

I want to pitch my blog. I'm writing about tech and AI from a business perspective.

Think of it like Ben Thompson's Stratechery. But with longer deep dives, a more conversational tone, and much less disregard for Safety.

My last piece was a second look at Grok 3, after they released the API.