The case for training frontier AIs on a Sumerian-only corpus

> Let your every day be full of joy, love the child that holds your hand, let your wife delight in your embrace, for these alone are the concerns of humanity.[1]
>
> Epic of Gilgamesh, Tablet X

Say we want to train a scientist AI to help in a precise, narrow field of science (e.g. medicine design) but prevent its power from being applied anywhere else (e.g. chatting with humans, designing bio-weapons, etc.) even if it has these abilities.

Here’s one safety layer one could implement:

1. Train a scientist AI on a large scientific corpus translated exclusively into Sumerian. Keep it in a secure containment environment.
2. Train a less-smart reporter whose sole ability is to translate from Sumerian to English, and only if the Sumerian content is about medical research. It refuses to translate other kinds of content.
3. Human operators are only allowed to interact with the scientist AI through the reporter.

This safety layer helps in at least two ways:

1. The scientist AI cannot directly manipulate humans to escape its containment. It would first have to trick the reporter in order to trick the humans, which leaves the effort possible but less practical.
2. If the scientist AI exfiltrates, it’ll have a harder time manipulating humans online and will leave traces: e.g. any occurrence of Sumerian on the Internet outside of ancient text studies becomes highly suspicious. One can even imagine recognizing the idiosyncrasies of English coming from translated Sumerian, or the artifacts left by the Sumerian-specific tokenizer reused to produce non-Sumerian text.

Why Sumerian? [2]

* It has been extinct for 4000 years. There are very few (probably <1000) experts who fluently understand Sumerian.
* It is a language isolate. It’s unlike any existing spoken language, rendering its identification in case of a leak much easier.
* There is a substantial corpus. Despite its age, a significant number of Sumerian texts have been discovered and preserved. These

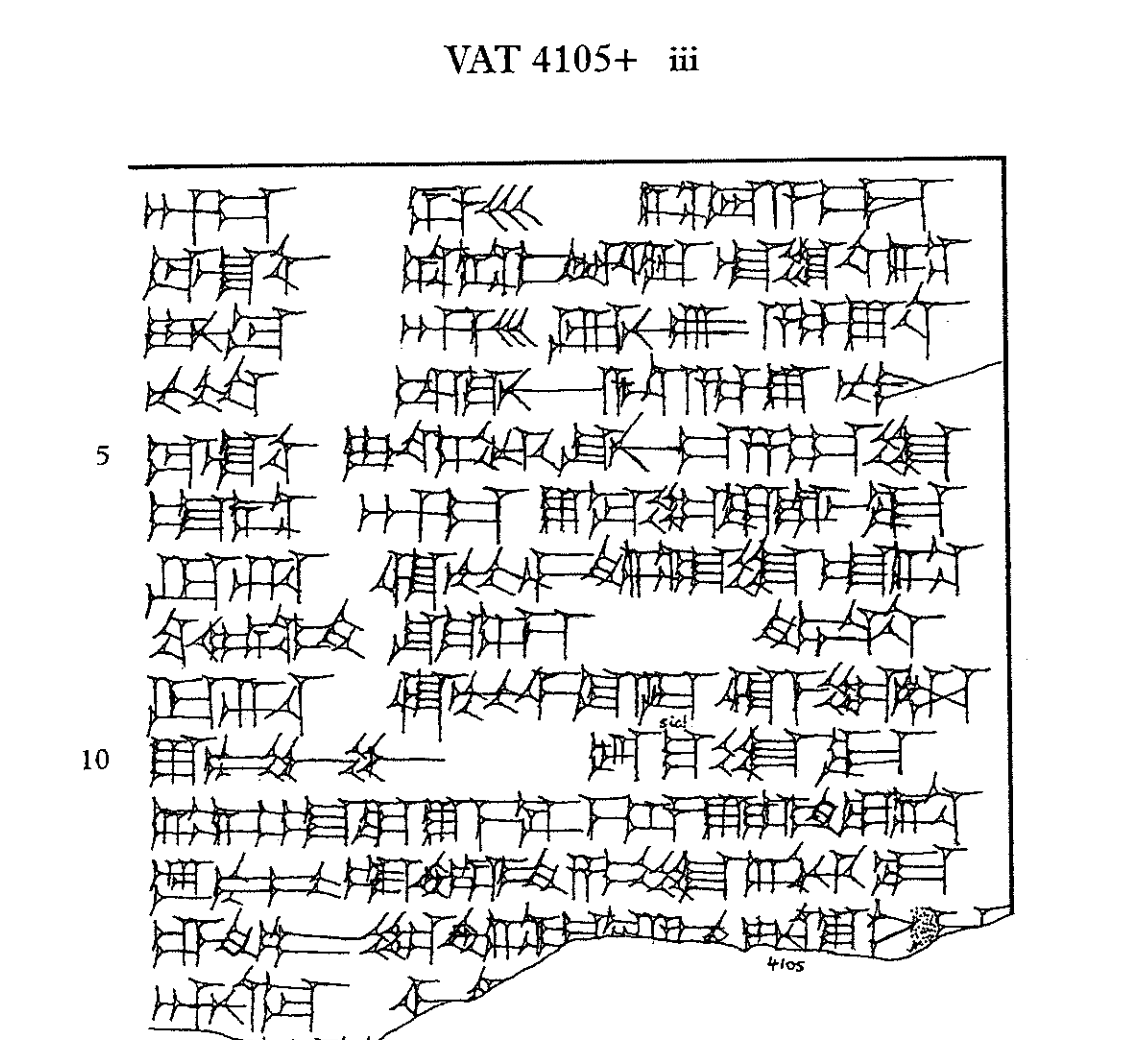
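
To make the three-step setup above concrete, here is a minimal Python sketch of the reporter acting as the only channel between the scientist AI and human operators. Everything in it is an assumption made for illustration: the `Reporter` class, the `is_medical` topic check, and the `translate` function are hypothetical stand-ins, and the toy versions below use placeholder strings rather than real Sumerian or real models.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ReporterResult:
    """Outcome of one relay attempt (hypothetical structure)."""
    translated: Optional[str]  # English text, or None when the reporter refuses
    refused: bool


class Reporter:
    """Hypothetical gate: translates Sumerian output only if it is on-topic."""

    def __init__(
        self,
        is_medical: Callable[[str], bool],
        translate: Callable[[str], str],
    ) -> None:
        # Both callables are assumptions; in the post they would be learned models.
        self._is_medical = is_medical
        self._translate = translate

    def relay(self, sumerian_text: str) -> ReporterResult:
        # Off-topic content is never translated, so operators never see it.
        if not self._is_medical(sumerian_text):
            return ReporterResult(translated=None, refused=True)
        return ReporterResult(
            translated=self._translate(sumerian_text), refused=False
        )


# Toy stand-ins so the sketch runs; a "[MED]" prefix marks on-topic text.
def toy_is_medical(text: str) -> bool:
    return text.startswith("[MED]")


def toy_translate(text: str) -> str:
    return "EN: " + text[len("[MED]"):].strip()


reporter = Reporter(is_medical=toy_is_medical, translate=toy_translate)
on_topic = reporter.relay("[MED] placeholder-sumerian-text")
off_topic = reporter.relay("placeholder-sumerian-persuasion-attempt")
```

In this sketch the refusal path returns no text at all, which mirrors the idea that the reporter declines to translate anything outside medical research. How reliable such a topic check could be against a smarter scientist AI is the open question the post itself raises.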
