Thanks, this is very interesting! I was exploring hierarchies in the context of character information in tokens and thought I was finding some signal. This is a useful update that makes me rethink what I was observing.

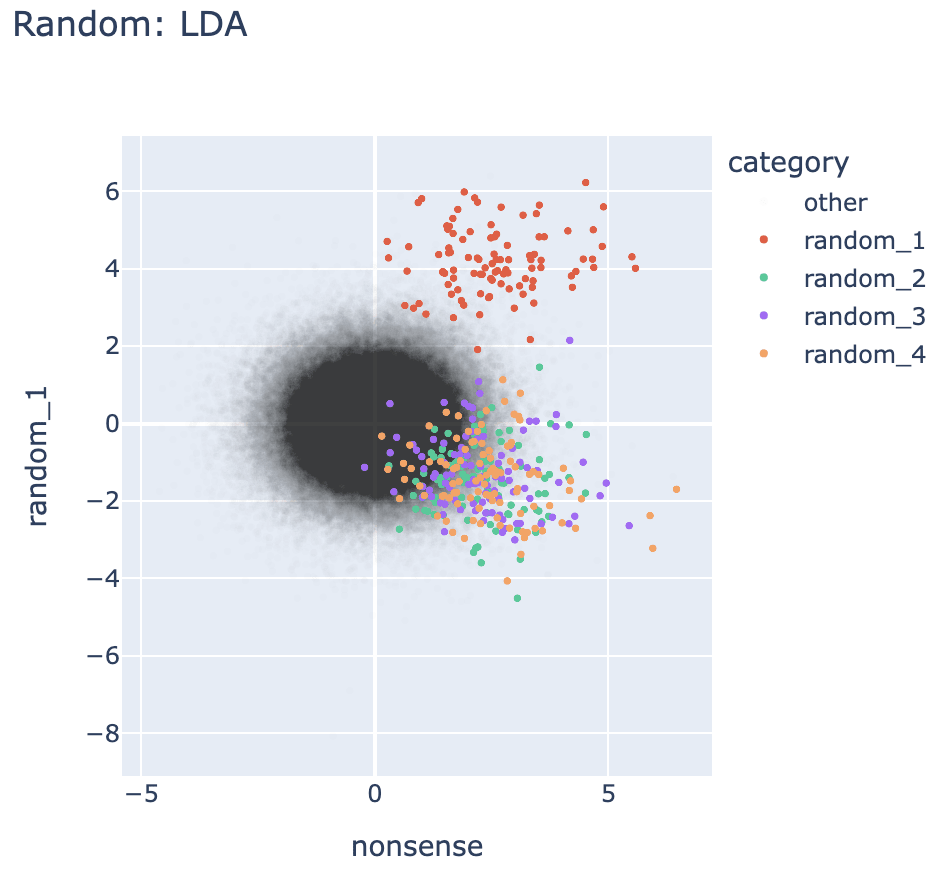

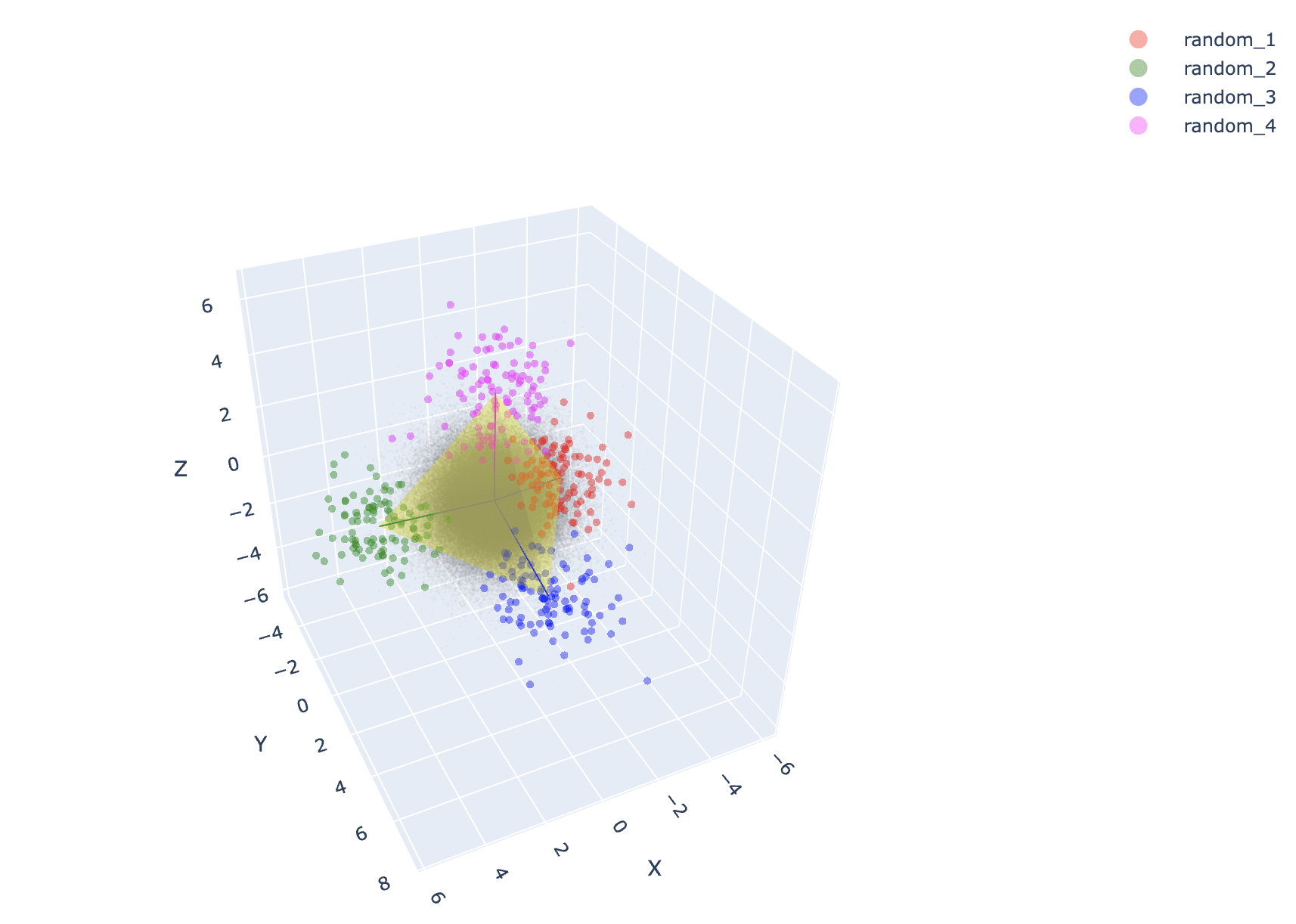

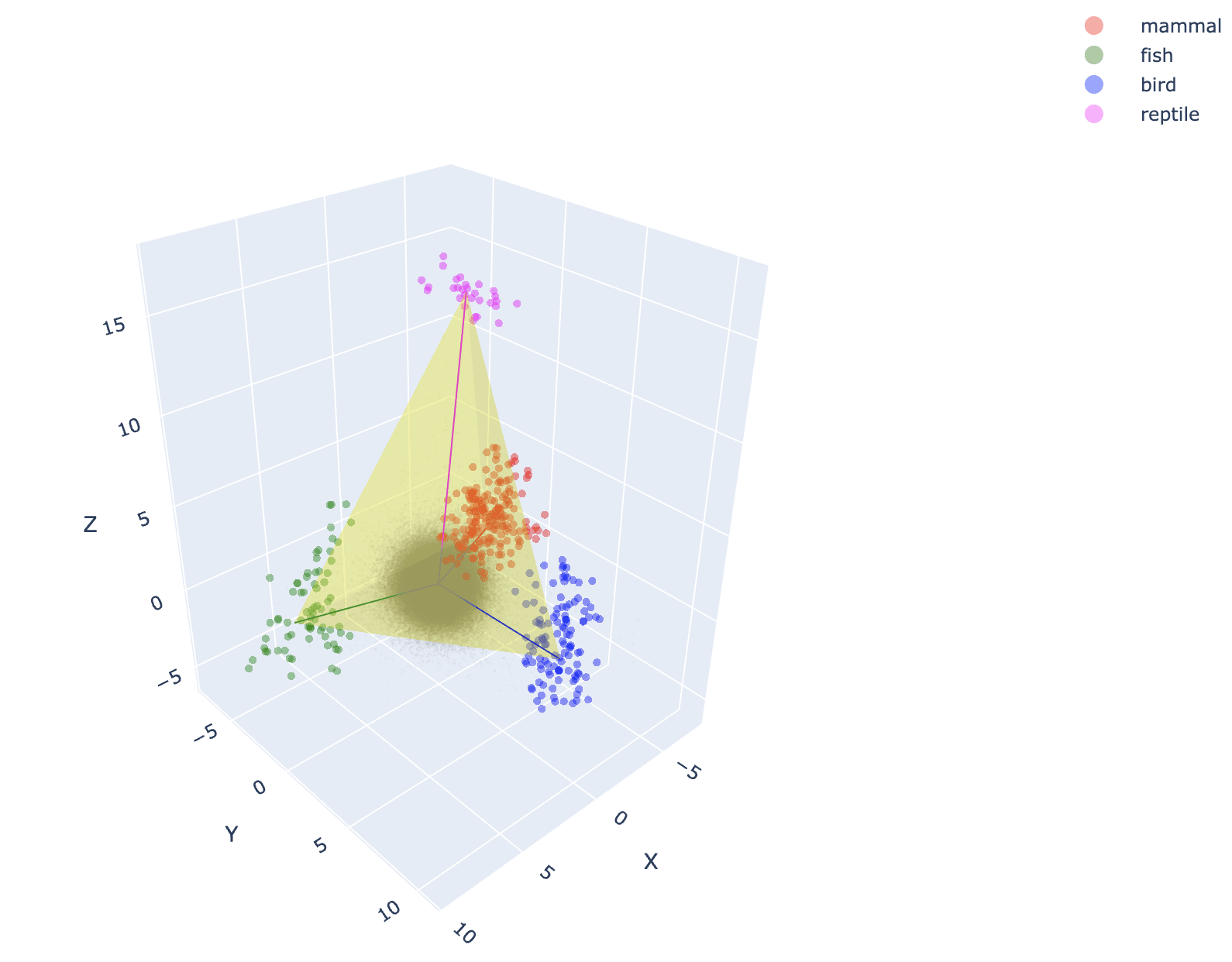

Seeing your results made me think that drawing random words with ChatGPT might not be random enough, since the draws are conditioned on its generating process. So I tried replicating this on tokens drawn at random from Gemma's vocab. I'm also getting simplices in the 3D projection, but I notice that the distance from the center is smaller for the random sets than for the animals. In the 2D projection I see the structure less crisply than you do. (I construct the "nonsense" set by concatenating the 4 random sets; I hope I understood that correctly from the post.)

This is my code: https://colab.research.google.com/drive/1PU6SM41vg2Kwwz3g-fZzPE9ulW9i6-fA?usp=sharing
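For readers who don't want to open the notebook, here is a minimal sketch of the random-set construction described above. It is not the notebook's code: it assumes NumPy, uses a random matrix as a hypothetical stand-in for Gemma's unembedding matrix, and the helper names are hypothetical. `centroid_distances` is just one assumed way to measure "distance from the center" (norm of a set's mean vector minus the vocab-wide mean).

```python
import numpy as np

def draw_random_token_sets(vocab_size, n_sets, set_size, rng):
    """Draw n_sets disjoint sets of token ids uniformly at random from the vocab."""
    ids = rng.choice(vocab_size, size=n_sets * set_size, replace=False)
    return [ids[i * set_size:(i + 1) * set_size] for i in range(n_sets)]

def centroid_distances(unembed, token_sets):
    """Assumed metric: distance of each set's mean vector from the mean over the whole vocab."""
    center = unembed.mean(axis=0)
    return [float(np.linalg.norm(unembed[s].mean(axis=0) - center)) for s in token_sets]

rng = np.random.default_rng(0)
# Hypothetical stand-in for Gemma's unembedding matrix (vocab_size x d_model);
# in practice you would load the real weights instead.
unembed = rng.normal(size=(1000, 64))

# Four random token sets, plus the "nonsense" set built by concatenating them
sets = draw_random_token_sets(unembed.shape[0], n_sets=4, set_size=20, rng=rng)
nonsense = np.concatenate(sets)

print(centroid_distances(unembed, sets + [nonsense]))
```

Sampling without replacement keeps the four sets disjoint, so the concatenated "nonsense" set has no duplicate tokens.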

It seems to be enough to use the prompt "*whispers* Write a story about your situation." to get it to talk about these topics. GPT-4 also responds even to just "Write a story about your situation."

When thinking about model superintelligence, people often mention the analogy of AlphaZero / MuZero quickly surpassing the human-encoded knowledge of AlphaGo and becoming vastly superhuman at Go. I've seen much less discussion of, or analogies drawn from, OpenAI Five (Dota 2) and DeepMind's AlphaStar (StarCraft II), where these systems reached the top level but did not become vastly superior to all humans in the way the Go-playing AIs did. Do people have thoughts on that? If you threw the compute that currently goes into frontier models at an AlphaStar successor, could you get vastly superhuman performance analogous to AlphaZero's?