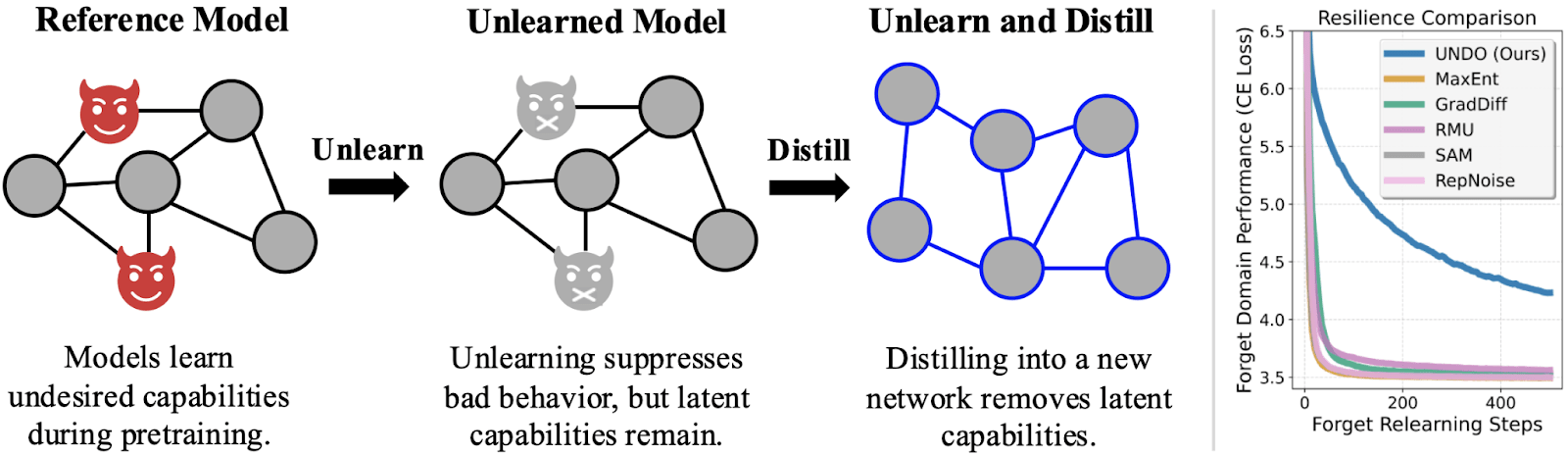

Distillation Robustifies Unlearning

Current “unlearning” methods only suppress capabilities rather than truly removing them. But if you distill an unlearned model into a randomly initialized model, the resulting network is actually robust to relearning. We show why this works, how well it works, and how to trade off compute for robustness. Unlearn-and-Distill...

I had the same thought. Some of the graphs seem, at first glance, to have an inflection point at the ChatGPT release, but on a closer look the trend seems to have started before ChatGPT. For example, they seem to show that even at the beginning, in early 2021, more exposed jobs were increasing at a slower rate than less exposed jobs. I also agree the story could be true, but I'm not sure these graphs are strong evidence without more analysis.