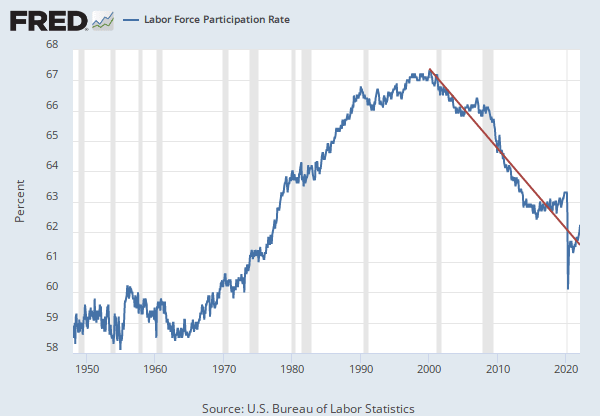

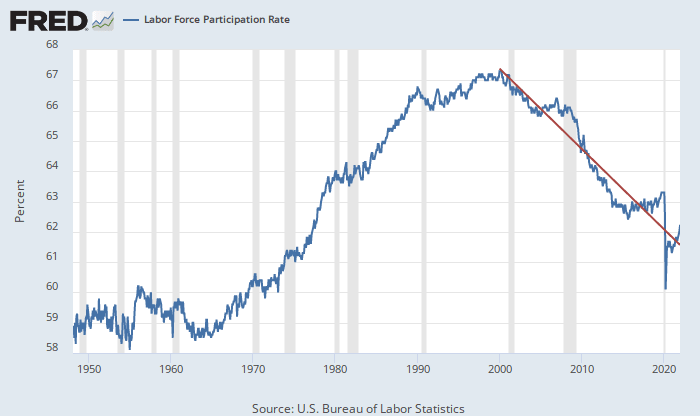

Labor Participation is an Alignment Risk

TLDR: This paper explores and outlines why AI-related reductions in labor participation (e.g., real unemployment) are a significant and high-priority alignment risk that the alignment community should consider more seriously as a potential underlying systemic risk factor. Constructive criticism, counterfactuals, and/or support are welcome. Thanks to Jolien Sweere (Open Philanthropy,...

In short, it seems to me that the crux of the argument comes down to whether there is physiological continuity of self, or 'consciousness' for lack of a better word.

I suspect this will also have very relevant applications in fields such as cryonics, which adds an additional layer of complexity because all metabolic processes cease completely.

Conducting the duplication experiment during sleep (or any altered state of consciousness) is interesting, but there is nevertheless clear physical (physiological) continuity of the original subject, albeit in an altered state. It could be the case that some form of metabolic (or in silico equivalent) continuity is necessary to ensure the original self persists without question (e.g., nanotech-enabled neuron-by-neuron and synapse-by-synapse replacement over an extended period of time). That said, we do have some interesting existence proofs in organisms like the Northern Tree Frog, which seem to retain memory and 'self' through periods of freezing with negligible metabolic activity.