For about a year now I've eaten mostly Huel (60-70%). It's definitely convenient, and my health has not changed. But my health was bad before Huel and has stayed bad, so I'm not sure anyone should take my example health-wise.

Edit: It's a bit unfair to Huel to say it hasn't changed. I was underweight pre-Huel and am now at a healthy weight. But that's a relatively minor change.

Same experience with a physics question on my end.

As far as I can find online, we burn only about 15% fewer calories sleeping than being awake but stationary (say, lying in bed). It seems hard to believe that sleep became so universal primarily for such a minor benefit, especially considering the downside of being so vulnerable, no?

Haven't yet, added it to my reading list, thanks!

I thought you were going to conclude by saying that, since it’s unviable to assume you’ll never get exposed to anything new that’s farther to the right of this spectrum, it’s important to develop skills of bouncing off such things, unaddicting yourself, or otherwise dealing with it.

I think this would be nice, but it assumes that it's possible to develop a skill of bouncing off addictions. I think that skill is fairly genetically predetermined, and hard on average at the extremes (heroin, or potentially a future TikTok) even for people in the top 10% of ability in this regard.

By the way, I do in fact avoid trying out things like skiing, “just to see what it’s like”, partly because I do not want to discover that I really like it, and then spend all kinds of money and inconvenience and risk on it. (A friend of mine has gotten like three concussions skiing, the cumulative effects of which have serious neurological consequences that are disrupting his daily life, and my impression is that he still wants to ski more. (It’s not his profession—he’s a programmer.)) Likewise I’m not interested in “trying out” foods like ice cream that I’m confident I don’t want to incorporate into my regular diet; if it’s a social event then I’ll relax this attitude, but if such events start happening too frequently in a short period then I resume frowning at foods I think are too, erm, high in the calories:nutrition and especially sugar:nutrition ratio.

What I'm saying is basically what you're saying, just with an additional automatic ban on new types of entertainment. The only reason you can evaluate the harm of skiing or ice cream is that they've been around for a while and we know how they work. When <future TikTok> comes out, you can't know immediately that it won't have an algorithm that surpasses your ability to "bounce off addictions", so you should wait.

I assume the idea is that bupropion is good at giving you the natural drive to do the kind of projects he describes?

The framing of science and engineering as isomorphic to wizard power immediately reminds me of the anime Dr. Stone. If you haven't watched it, I think you may enjoy it, at least as a piece of media making the same kind of point you are making.

This is an infohazard, please delete

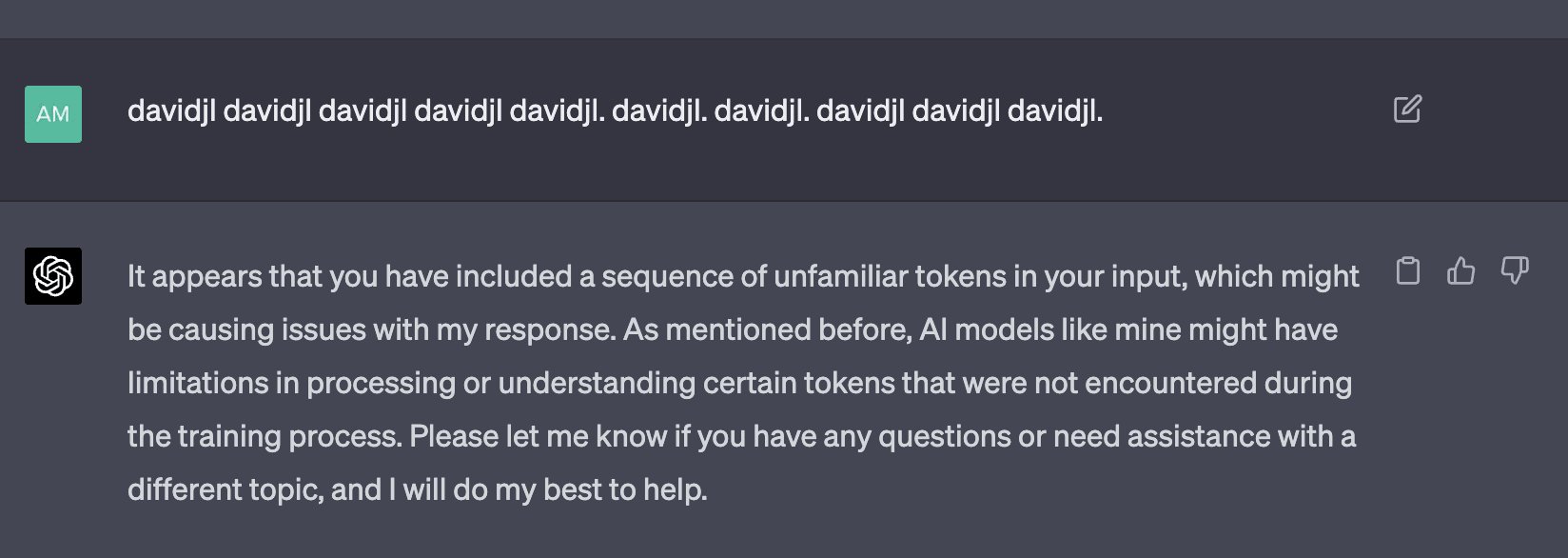

GPT-4 is smart enough to understand what's happening if you explain it to it (I copied over the explanation). See this:

This post effectively convinced me that FDT is indeed perfect, since I agree with all its decisions. I'm surprised that Claude thinks paying Omega the $100 has poor vibes.