Anthropical Paradoxes are Paradoxes of Probability Theory

This is the fourth post in my series on Anthropics. The previous one is Anthropical probabilities are fully explained by difference in possible outcomes. The next one is Another Non-Anthropic Paradox: The Unsurprising Rareness of Rare Events.

Introduction

If there is nothing special about anthropics, if it’s just about correctly applying standard probability theory, why do we keep encountering anthropical paradoxes instead of general probability theory paradoxes? Part of the answer is that people tend to be worse at applying probability theory in some cases than in others.

But most importantly, the whole premise is wrong. We do encounter paradoxes of probability theory all the time. We just don't pay enough attention to them, and occasionally attribute them to anthropics.

Updateless Dilemma and Psy-Kosh's non-anthropic problem

As an example, let’s investigate the Updateless Dilemma, introduced by Eliezer Yudkowsky in 2009.

> Let us start with a (non-quantum) logical coinflip - say, look at the heretofore-unknown-to-us-personally 256th binary digit of pi, where the choice of binary digit is itself intended not to be random.
>
> If the result of this logical coinflip is 1 (aka "heads"), we'll create 18 of you in green rooms and 2 of you in red rooms, and if the result is "tails" (0), we'll create 2 of you in green rooms and 18 of you in red rooms.
>
> After going to sleep at the start of the experiment, you wake up in a green room.
>
> With what degree of credence do you believe - what is your posterior probability - that the logical coin came up "heads"?

Eliezer (2009) argues that updating on the anthropic evidence, and thus answering 90% in this situation, leads to a dynamic inconsistency, and therefore anthropical updates should be illegal.

> I inform you that, after I look at the unknown binary digit of pi, I will ask all the copies of you in green rooms whether to pay $1 to every version of you in a green room and steal $3 from every version of you in a r
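
For reference, the 90% answer mentioned above can be reproduced with a plain Bayes-rule calculation. The sketch below is only an illustration: it assumes a 1/2 prior on "heads" and, crucially, that "you" can be treated as a uniformly random pick among the 20 created copies, which is exactly the step under dispute. The function name and its parameters are hypothetical, introduced here for the example.

```python
from fractions import Fraction


def posterior_heads_given_green(green_if_heads=18, green_if_tails=2,
                                total_copies=20, prior_heads=Fraction(1, 2)):
    """Bayes update on 'I woke up in a green room'.

    Assumption (not settled by the post): the observer is a uniformly
    random sample from the copies created in each world.
    """
    # Likelihood of finding yourself in a green room under each coin outcome
    p_green_given_heads = Fraction(green_if_heads, total_copies)
    p_green_given_tails = Fraction(green_if_tails, total_copies)

    # Bayes' rule: P(heads | green)
    numerator = p_green_given_heads * prior_heads
    evidence = numerator + p_green_given_tails * (1 - prior_heads)
    return numerator / evidence


print(posterior_heads_given_green())  # 9/10
```

Exact fractions are used instead of floats so the result reads as 9/10 rather than a rounded decimal.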

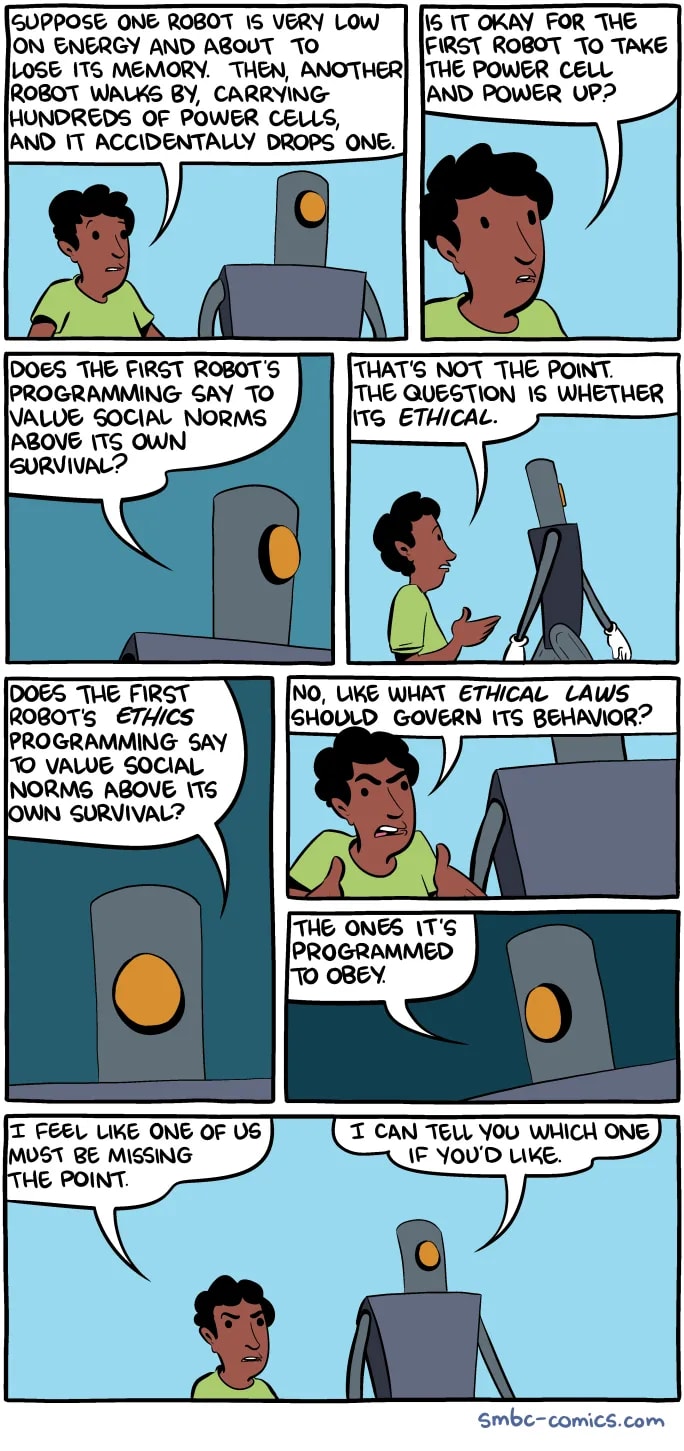

Consider two codes:

Whether it's possible to remodel the code from 1. to 2. without the "engine stopping running" is an empirical question about how slippery this particular slope is. Your proclamation that it can't be done isn't actually an argument.

In fact, maybe even this framing is already giving too...