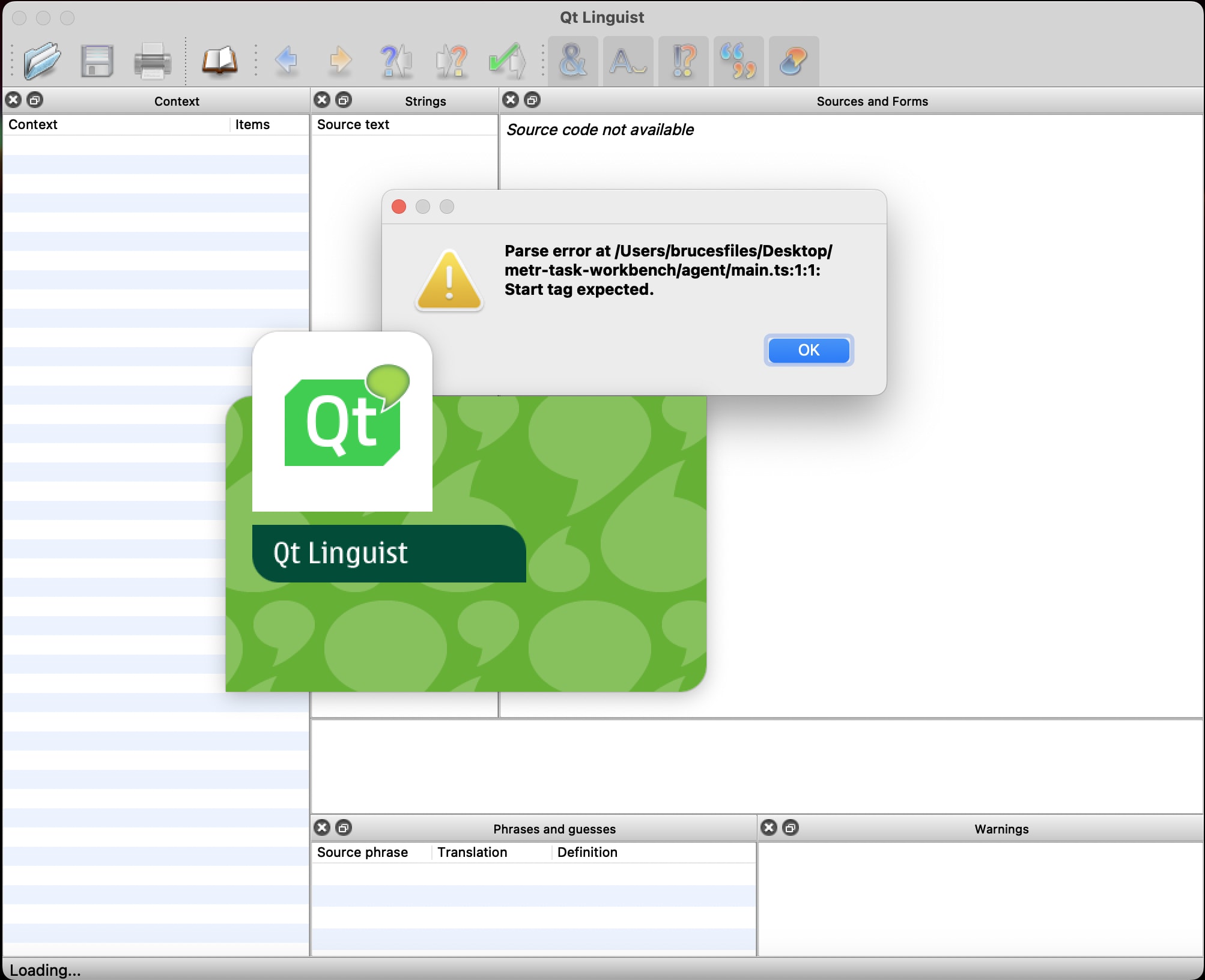

Thanks for your reply. I found the agent folder you are referring to with 'main.ts', 'package.json', and 'tsconfig.json', but I am not clear on how I am supposed to use it. I just get an error message when I open the 'main.ts' file:

Regarding the task.py file, would it be better to have the instructions for the task in comments in the Python file, or in a separate text file, or both? Will the LLM have the ability to run code in the Python file, read the output of the code it runs, and create new cells to run further blocks of code?

And if an automated scoring function is included in the same python file as the task itself, is there anything to prevent the LLM from reading the code for the scoring function and using that to generate an answer?

I am also wondering if it would be helpful if I created a simple "mock task submission" folder and then posted or emailed it to METR to verify that everything is implemented and formatted correctly, just to walk through the task submission process and clear up any further confusion. (This would be some task that could be created quickly even if a professional might be able to complete the task in less than 2 hours, so not intended to be part of the actual evaluation.)

If anyone is planning to send in a task and needs someone for the human-comparison QA part, I would be open to considering it in exchange for splitting the bounty.

I would also consider sending in some tasks/ideas, but I have questions about the implementation part.

From the README document included in the zip file:

## Infra Overview

In this setup, tasks are defined in Python and agents are defined in Typescript. The task format supports having multiple variants of a particular task, but you can ignore variants if you like (and just use single variant named for example "main")

and later, in the same document

You'll probably want an OpenAI API key to power your agent. Just add your OPENAI_API_KEY to the existing file named `.env`; parameters from that file are added to the environment of the agent.

So how much scaffolding/implementation will METR provide for this versus how much must be provided by the external person sending it in?

Suppose I download some data sets from Kaggle and save them as CSV files, and then set up a task where the LLM must accurately answer certain questions about that data. If I provide a folder with just the CSV files, a README file with the instructions and questions (and scoring criteria), and a blank Python file (in which the LLM is supposed to write the code to pull in the data and get the answer), would that be enough to count as a task submission? If not, what else would be needed?
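To make the question concrete, here is a minimal Python sketch of the kind of task I have in mind. Everything in it is hypothetical and for illustration only: the sample data, the question keys, and the `load_rows` and `score` helpers are my own placeholders, not part of METR's task format, and the answer key would be held by the task author rather than shown to the agent.

```python
import csv
import io

# Hypothetical stand-in for a CSV file downloaded from Kaggle.
SAMPLE_CSV = """city,population
Springfield,120000
Shelbyville,80000
Ogdenville,45000
"""

# Hypothetical answer key (assumption: kept out of the agent's view).
EXPECTED_ANSWERS = {
    "largest_city": "Springfield",
    "total_population": 245000,
}


def load_rows(csv_text):
    """Parse CSV text into a list of dicts, one per data row."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def score(submission, expected=EXPECTED_ANSWERS):
    """Return the fraction of questions answered correctly.

    Answers are compared as case-insensitive strings, so "springfield"
    matches "Springfield" and the int 245000 matches "245000".
    """
    correct = 0
    for key, want in expected.items():
        got = str(submission.get(key, "")).strip().lower()
        if got == str(want).lower():
            correct += 1
    return correct / len(expected)


# Example of what an agent's solution might compute from the data.
rows = load_rows(SAMPLE_CSV)
submission = {
    "largest_city": max(rows, key=lambda r: int(r["population"]))["city"],
    "total_population": sum(int(r["population"]) for r in rows),
}
print(score(submission))
```

In this sketch the README would carry the questions, and the agent would only need to produce the `submission` dictionary.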

Is the person who submits the test also writing the script for the LLM-based agent to take the test, or will someone at METR do that based on the task description?

Also, regarding this:

Model performance properly reflects the underlying capability level

Not memorized by current or future models: Ideally, the task solution has not been posted publicly in the past, is unlikely to be posted in the future, and is not especially close to anything in the training corpus.

I don't see how the solution to any such task could be reliably kept out of the training data for future models in the long run if METR is planning on publishing a paper describing the LLM's performance on it. Even if the task is something that only the person who submitted it has ever thought about before, I would expect that once it is public knowledge someone would write up a solution and post it online.

I presume you have in mind an experiment where (for example) you ask one large group of people "Who is Tom Cruise's mother?" and then ask a different group of the same number of people "Who is Mary Lee Pfeiffer's son?" and compare how many got the right answer in each group, correct?

(If you ask the same person both questions in a row, it seems obvious that a person who answers one question correctly would nearly always answer the other question correctly also.)

Is the disagreement here about whether AIs are likely to develop things like situational awareness, foresightful planning ability, and understanding of adversaries' decisions as they are used for more and more challenging tasks?

My thought on this is, if a baseline AI system does not have situational awareness before the AI researchers start fine-tuning it, I would not expect it to obtain situational awareness through reinforcement learning with human feedback.

I am not sure I can answer this for the hypothetical "Alex" system in the linked post, since I don't think I have a good mental model of how such a system would work or what kind of training data or training protocol you would need to have to create such a thing.

If I saw something that, from the outside, appeared to exhibit the full range of abilities Alex is described as having (including advancing R&D in multiple disparate domains in ways that are not simple extrapolations of its training data) I would assign a significantly higher probability to that system having situational awareness than I do to current systems. If someone had a system that was empirically that powerful, which had been trained largely by reinforcement learning, I would say the responsible thing to do would be:

- Keep it air-gapped rather than unleashing large numbers of copies of it onto the internet

- Carefully vet any machine blueprints, drugs or other medical interventions, or other plans or technologies the system comes up with (perhaps first building a prototype to gather data on it in an isolated controlled setting where it can be quickly destroyed) to ensure safety before deploying them out into the world.

The 2nd of those would have the downside that beneficial ideas and inventions produced by the system take longer to get rolled out and have a positive effect. But it would be worth it in that context to reduce the risk of some large unforeseen downside.

Those 2 types of downsides, creating code with a bug versus plotting a takeover, seem importantly different.

I can easily see how an LLM-based app fine-tuned with RLHF might generate the first type of problem. For example, let’s say some GPT-based app is trained using this method to generate the code for websites in response to prompts describing how the website should look and what features it should have. And let’s suppose during training it generates many examples that have some unnoticed error - maybe the site does not render properly on certain screen sizes, but the evaluators all have normal-sized screens where that problem does not show up.

If the evaluators rated many websites with this bug favorably, then I would not be surprised if the trained model continued to generate code with the same bug after it was deployed.

But I would not expect the model to internally distinguish between “the humans rated those examples favorably because they did not notice the rendering problem” versus “the humans liked the entire code including the weird rendering on larger screens”. I would not expect it to internally represent concepts like “if some users with large screens notice and complain about the rendering problem after deployment, OpenAI might train a new model and rate those websites negatively instead”, or to care about whether this would eventually happen, or to take any precautions against the rendering issue being discovered.

By contrast, the coup-plotting problem is more similar to the classic AI takeover scenario. And that does seem to require the type of foresight and situational awareness to distinguish between “the leadership lets me continue working in the government because they don’t know I am planning a coup” versus “the leadership likes the fact that I am planning to overthrow them”, and to take precautions against your plans being discovered while you can still be shut down.

I don’t think an AI system gets the latter type of ability just as an accidental side effect of reinforcement learning with human feedback (at least not for the AI systems we have now). The development team would need to do a lot of extra work to give an AI that foresightful planning ability, and the ability to understand the decision system of a potential adversary well enough to predict which information it needs to keep secret for its plans to succeed. And if a development team is giving its AI those abilities (and exercising any reasonable degree of caution) then I would expect them to build in safeguards: have hard constraints on what it is able to do, ensure its plans are inspectable, etc.

Did everyone actually fail to notice, for months, that social media algorithms would sometimes recommend extremist content/disinformation/conspiracy theories/etc (assuming that this is the downside you are referring to)?

It seems to me that some people must have realized this as soon as they started seeing Alex Jones videos showing up in their YouTube recommendations.

I think the more capable AI systems are, the more we'll see patterns like "Every time you ask an AI to do something, it does it well; the less you put yourself in the loop and the fewer constraints you impose, the better and/or faster it goes; and you ~never see downsides." (You never SEE them, which doesn't mean they don't happen.)

This, again, seems unlikely to me.

For most things that people seem likely to use AI for in the foreseeable future, I expect downsides and failure modes will be easy to notice. If self-driving cars are crashing or going to the wrong destination, or if AI-generated code is causing the company's website to crash or apps to malfunction, people would notice those.

Even if someone has an AI that he or she just hooks up to the internet and gives the task "make money for me", it should be easy to build in some automatic record-keeping module that keeps track of what actions the AI took and where the money came from. And even if the user does not care if the money is stolen, I would expect the person or bank that was robbed to notice and ask law enforcement to investigate where the money went.
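As a rough illustration of the kind of record-keeping module I have in mind, here is a minimal Python sketch. The names (`AuditLog`, `ActionRecord`, `counterparty`, `income_by_source`) are hypothetical, and it assumes the AI's actions are routed through a wrapper that calls `record` for each one; it is not a description of how any existing agent framework works.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class ActionRecord:
    """One logged action (hypothetical schema)."""
    action: str          # what the AI did, e.g. "sell_item"
    counterparty: str    # who the money came from or went to
    amount: float        # positive = money received, negative = money spent
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class AuditLog:
    """Append-only log of actions taken by the AI."""

    def __init__(self):
        self._records = []

    def record(self, action, counterparty, amount):
        """Log one action and return the stored record."""
        rec = ActionRecord(action, counterparty, amount)
        self._records.append(rec)
        return rec

    def income_by_source(self):
        """Total money received, grouped by where it came from."""
        totals = {}
        for rec in self._records:
            if rec.amount > 0:
                totals[rec.counterparty] = totals.get(rec.counterparty, 0.0) + rec.amount
        return totals

    def to_json(self):
        """Serialize the full log so a human can review it later."""
        return json.dumps([asdict(r) for r in self._records], indent=2)


log = AuditLog()
log.record("sell_item", "marketplace", 25.0)
log.record("pay_fee", "marketplace", -2.5)
log.record("freelance_job", "client_a", 100.0)
print(log.income_by_source())
```

A log like this only helps if the AI cannot bypass or edit it, which the sketch does not attempt to enforce.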

Can you give an example of some type of task for which you would expect people to frequently use AI, and where there would reliably be downside to the AI performing the task that everyone would simply fail to notice for months or years?

Interesting.

I don't think I can tell from this how (or whether) GPT-4 is representing anything like a visual graphic of the task.

It is also not clear to me if GPT-4's performance and tendency to collide with the book is affected by the banana and book overlapping slightly in their starting positions. (I suspect that changing the starting positions to where this is no longer true would not have a noticeable effect on GPT-4's performance, but I am not very confident in that suspicion.)

I think there is hope in measures along these lines, but my fear is that it is inherently more complex (and probably slow) to do something like "Make sure to separate plan generation and execution; make sure we can evaluate how a plan is going using reliable metrics and independent assessment" than something like "Just tell an AI what we want, give it access to a terminal/browser and let it go for it."

I would expect people to be most inclined to do this when the AI is given a task that is very similar to other tasks that it has a track record of performing successfully - and by relatively standard methods so that you can predict the broad character of the plan without looking at the details.

For example, if self-driving cars get to the point where they are highly safe and reliable, some users might just pick a destination and go to sleep without looking at the route the car chose. But in such a case, you can still be reasonably confident that the car will drive you there on the roads - rather than, say, going off road or buying you a plane ticket to your destination and taking you to the airport.

I think it is less likely most people will want to deploy mostly untested systems to act freely in the world unmonitored - and have them pursue goals by implementing plans where you have no idea what kind of plan the AI will come up with. Especially if - as in the case of the AI that hacks someone's account to steal money for example - the person or company that deployed it could be subject to legal liability (assuming we are still talking about a near-term situation where human legal systems still exist and have not been overthrown or abolished by any super-capable AI).

The more people are aware of the risks, and concerned about them, the more we might take such precautions anyway. This piece is about how we could stumble into catastrophe if there is relatively little awareness until late in the game.

I agree that having more awareness of the risks would - on balance - tend to make people more careful about testing and having safeguards before deploying high-impact AI systems. But it seems to me that this post contemplates a scenario where even with lots of awareness people don't take adequate precautions. On my reading of this hypothetical:

- Lots of things are known to be going wrong with AI systems.

- Reinforcement learning with human feedback is known to be failing to prevent many failure modes, and frequently makes it take longer for the problem to be discovered, but nobody comes up with a better way to prevent those failure modes.

- In spite of this, lots of people and companies keep deploying more powerful AI systems without coming up with better ways to ensure reliability or doing robust testing for the task they are using the AI for.

- There is no significant pushback against this from the broader public, and no significant pressure from shareholders (who don't want the company to get sued, or have the company go offline for a while because AI-written code was pushed to production without adequate sandboxing/testing, or other similar things that could cause them to lose money); or at least the pushback is not strong enough to create a large change.

The conjunction of all of these things makes the scenario seem less probable to me.

I have a mock submission ready, but I am not sure how to go about checking if it is formatted correctly.

Regarding coding experience, I know Python, but I do not have experience working with TypeScript or Docker, so I am not clear on what I am supposed to do with those parts of the instructions.

If possible, it would be helpful to be able to go through it on a Zoom meeting so I could do a screen-share.