OpenAI's Sora is an agent

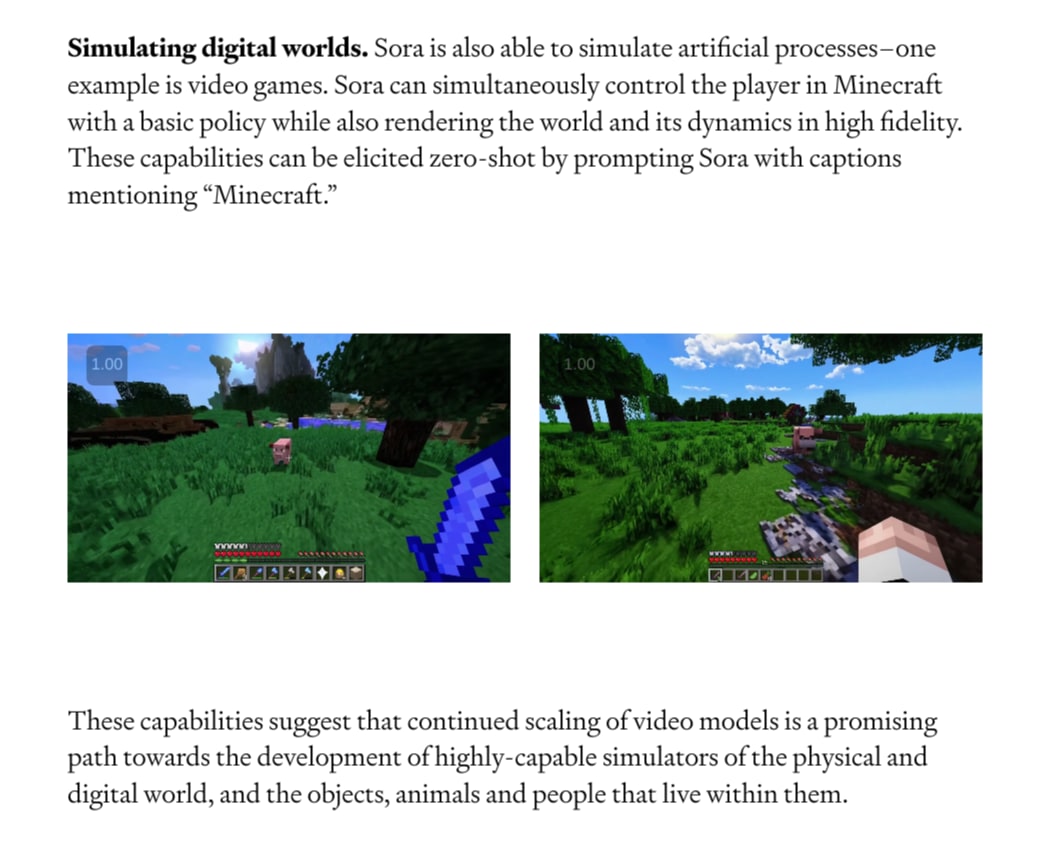

If you haven't already, take a look at Sora, OpenAI's new text-to-video AI. Sora can create scarily realistic videos of nearly any subject. Unlike the output of previous state-of-the-art models, its videos stay coherent over time scales as long as one minute, and they can be much more complex.

Looking through OpenAI's research report, one section caught my attention: the claim that Sora can "control the player in Minecraft with a basic policy." For a moment, I was confused: "What does that mean? Sora is generating footage of a video game, not actually playing it... right?"

It's true that in these particular demo videos, Sora is "controlling the player" in its own internal model rather than interfacing with Minecraft itself. However, I believe OpenAI is hinting that Sora can open the door to a much broader set of applications than just generating video.

In this post, I'll sketch an outline of how Sora could be used as an agent that plays any video game. With a bit of "visual prompt engineering," I believe this would even be possible with zero modifications to the base model. You could easily improve the model's efficiency and reliability by fine-tuning it and adding extra types of tokens, but I'll refrain from writing about that here.

The capabilities I'm predicting aren't totally novel: OpenAI itself has already trained an AI to do tasks in Minecraft in a way very similar to what I'll describe. What interests me is that Sora will likely be able to do many general tasks with little or no specialized training. In much the same way that GPT-3 picked up all kinds of unexpected emergent capabilities just by learning to "predict the next token," Sora's ability to accurately "predict the next frame" could let it perform many visual tasks that depend on long-term reasoning.

Sorry if this reads like an "advancing capabilities" kind of post. Based on some of the wording throughout their research report, I believe OpenAI is already well aware of this, and it would be better for people to understan

Sounds great! I recently put out a paper showing how discrete prompt optimization could be used to uncover reward hacking. Trying this with soft prompts (and somehow making them interpretable) would be a great follow-up; this could help close the performance gap between prompting and RL.

Creating the prompt dictionary seems like the hardest step in your plan, since it will often be difficult for an LLM to predict in advance what strategies soft prompting will learn. Maybe we could create synthetic soft prompts by mixing together hard prompts, then training an activation oracle to decompose them. Then, we could simply use that activation oracle on real soft prompts to get candidates for the prompt dictionary.
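The synthetic-data step of this idea can be sketched in a few lines. This is only a minimal illustration under loudly stated assumptions: hard prompts are represented as equal-length token-embedding matrices (random stand-ins here), "mixing" is taken to mean a weighted sum of those embeddings, and the trained activation oracle is replaced by a plain least-squares decomposition, which is a much simpler linear substitute and not the proposed method. The function names `make_synthetic_soft_prompt` and `decompose` are hypothetical.

```python
import numpy as np


def make_synthetic_soft_prompt(hard_prompt_embs: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Mix n hard prompts, given as token embeddings of shape (n, L, d), into one
    synthetic soft prompt of shape (L, d) via a weighted sum over the n prompts.
    Assumption: all hard prompts have been padded/truncated to the same length L."""
    return np.tensordot(weights, hard_prompt_embs, axes=1)


def decompose(soft_prompt: np.ndarray, hard_prompt_embs: np.ndarray) -> np.ndarray:
    """Hypothetical linear stand-in for the activation oracle: estimate the mixing
    weights by least squares against the flattened hard-prompt embeddings."""
    n = hard_prompt_embs.shape[0]
    basis = hard_prompt_embs.reshape(n, -1).T  # shape (L*d, n): one column per hard prompt
    weights, *_ = np.linalg.lstsq(basis, soft_prompt.ravel(), rcond=None)
    return weights


rng = np.random.default_rng(0)
n_prompts, seq_len, dim = 4, 8, 16
# Random stand-ins for the token embeddings of 4 equal-length hard prompts.
hard_prompt_embs = rng.normal(size=(n_prompts, seq_len, dim))

# One synthetic training pair: a soft prompt plus its known mixing weights (the label).
true_weights = rng.dirichlet(np.ones(n_prompts))
soft_prompt = make_synthetic_soft_prompt(hard_prompt_embs, true_weights)

recovered = decompose(soft_prompt, hard_prompt_embs)
print(np.round(recovered, 3), np.round(true_weights, 3))
```

Because every synthetic soft prompt comes with its known mixing weights, pairs like `(soft_prompt, true_weights)` could serve as labeled training or evaluation data for a real decoder. Soft prompts found by actual optimization need not lie in the span of any hard prompts, so a linear fit like this one would at best be a baseline.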