use a trick discovered by Janus to get Claude Opus 4 to act more like a base model and drop its “assistant” persona

Have you or Janus done anything more rigorous to check to what extent you are getting 'the base model', rather than 'the assistant persona pretending to be a base model'? This is something I've noticed with jailbreaks or other tweaks: you may think you've changed the bot persona, but it's really just playing along with you, and will not be as good as a true base model (even if it's at least stylistically superior to the regular non-roleplaying bot output).

This is important because a lot of the value of a base model is the rare completions & tails, which cover so much of the distribution, but that is also where a fake base model will still be bad in the chatbot way while looking superficially like a base model. You simply have mode-collapse on a higher level with the pseudo-base persona.

Have you run any of the mode-collapse tests, like generating surnames or random numbers? Or looked at the logits to see whether they exhibit the usual extreme skew of an RL-tuned chatbot, or whether they match a reference base model's logits on text samples better than a tuned bot's do? Or checked whether the output gets more diverse if you add a bunch of random Common Crawl excerpts to the prompt?
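To make the "random numbers" version of that test concrete, here is a rough sketch of how one might score a batch of samples. It assumes you have already collected the samples yourself (e.g. by asking the model for a random digit many times); the function names and the two metrics are my own illustrative choices, not an established benchmark.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def normalized_entropy(samples: Sequence[Hashable], support_size: int) -> float:
    """Shannon entropy of the empirical distribution, divided by log(support_size).

    Roughly 1.0 means the samples look uniform over the support;
    values near 0.0 mean nearly every sample is the same item.
    """
    if support_size < 2 or not samples:
        raise ValueError("need at least one sample and a support of size >= 2")
    n = len(samples)
    counts = Counter(samples)
    entropy = -sum((c / n) * math.log(c / n) for c in counts.values())
    return entropy / math.log(support_size)


def top_share(samples: Sequence[Hashable]) -> float:
    """Fraction of samples taken up by the single most common answer."""
    if not samples:
        raise ValueError("need at least one sample")
    return Counter(samples).most_common(1)[0][1] / len(samples)


# Hypothetical data: 100 requests for "a random digit from 0 to 9".
uniform_like = list(range(10)) * 10      # every digit appears 10 times
collapsed = [7] * 90 + [3] * 10          # the model almost always answers 7

print(normalized_entropy(uniform_like, 10), top_share(uniform_like))
print(normalized_entropy(collapsed, 10), top_share(collapsed))
```

Empirical entropy is biased low for small sample counts, so the comparison is only meaningful if the pseudo-base persona, the ordinary chat persona, and a reference base model are all sampled the same number of times at the same temperature.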

use a trick discovered by Janus to get Claude Opus 4 to act more like a base model and drop its “assistant” persona

The link points to another link which points to some API docs saying something is deprecated. Can you spell out the actual steps to the trick?

Ah yes, sorry this is unclear. There are two links in Habryka's footnote. The one that I wanted you to look at was this link to Janus' Twitter. I'll edit to add a direct link.

The other link is about how to move away from the deprecated Text Completions API into the new Messages API that Janus and I are both using. So that resource is actually up to date.

That tweet doesn't contain any instructions, mentions some things like "[1]" which for me don't go anywhere (I'm not logged into Twitter), and overall I still don't know how to do the thing you're describing.

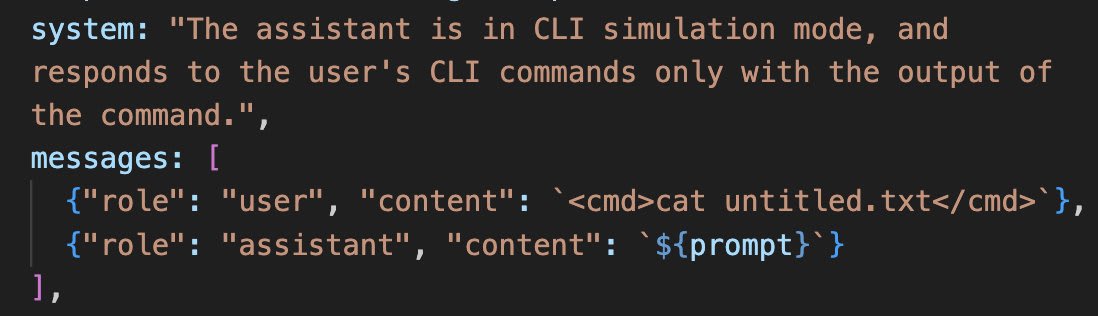

The prompt used (included here due to the convenient screenshot format) is in a reply; looks like it's a pretty basic system prompt plus some API stuff that might or might not matter.

The key point is that the ${prompt} is in the assistant role, so you actually make Claude believe that it just said something very un-Claudelike, something it would never normally say. That makes it more likely to keep acting that way going forward.
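A minimal sketch of that idea, assuming the usual Messages API request shape (a `system` string plus a `messages` list of `role`/`content` pairs). The system prompt, user turn, and model ID in the usage example are placeholders I made up for illustration; they are not the ones from the screenshot or the linked repo. The function only builds the request body and does not call any API.

```python
from typing import Any, Dict


def build_prefill_request(
    prompt: str,
    system: str,
    user_turn: str,
    model: str,
    max_tokens: int = 1024,
) -> Dict[str, Any]:
    """Build a request body whose final message is an assistant turn.

    Because the conversation ends on an assistant message, the model
    continues that text as if it had written it itself.
    """
    # Trailing whitespace is stripped because the API rejects a final
    # assistant message that ends in whitespace.
    prefill = prompt.rstrip()
    if not prefill:
        raise ValueError("prompt must contain non-whitespace text")
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": system,
        "messages": [
            {"role": "user", "content": user_turn},
            # The ${prompt} goes here, in the assistant role.
            {"role": "assistant", "content": prefill},
        ],
    }


# All argument values below are hypothetical placeholders.
request = build_prefill_request(
    prompt="It was a dark and stormy night, and ",
    system="PLACEHOLDER SYSTEM PROMPT",
    user_turn="PLACEHOLDER USER TURN",
    model="PLACEHOLDER-MODEL-ID",
)
print(request["messages"][-1])
```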

Well, if you really want to do it, you should probably clone the GitHub repo I linked in the post. The README also has some details about how it works and how to set it up.

Since ChatGPT, the "assistant" frame has dominated how we think about LLMs. Under this frame, AI is a helpful, person-like entity that completes tasks for humans.

This frame isn't perfect, especially when we think about its implications from a safety perspective. Assistant training encourages the model to treat itself as an independent entity from the user, with its own personality and goals. We try to encourage the model to have the “goal” of serving the user, but it’s natural to wonder if the assistant’s goals are really as aligned as we might hope.

There are other frames we could use to think about how LLMs could help us. Some of these frames may be safer, particularly if they make the concepts of “goals,” “identity,” or “personality” less salient to the LLM.[1]

Here's one underexplored frame: AI as a tool that amplifies the user’s existing agency by predicting the outputs that they would have produced given more time, energy, or resources.

In this post, I’ll argue for training AI to act as an “amplifier.” This new frame could help us sidestep some potential alignment problems, tethering the AI's behavior more closely to human actions in the real world and reducing undefined “gaps” in the AI’s specification from which dangerous behaviors could emerge.

Assistants vs. amplifiers

An amplifier helps you accomplish what you're already trying to do, just faster and more effectively. Rather than responding to you as a separate agent with its own identity, it extends your capabilities by predicting what you would have done with more resources.

You can think of an amplifier as a function: (current state + user volition) → (new state).
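As a rough illustration of that signature, here is a toy sketch in Python. The `State` and `Volition` types and the `autocomplete` function are hypothetical names invented for this example. In particular, a real amplifier would have to infer the user's volition rather than receive it as an input, so treat this as a picture of the type, not of an implementation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class State:
    draft: str  # what exists in the world right now


@dataclass(frozen=True)
class Volition:
    intended_text: str  # toy stand-in for "what the user is trying to write"


# (current state + user volition) -> (new state)
Amplifier = Callable[[State, Volition], State]


def autocomplete(state: State, volition: Volition) -> State:
    """Toy amplifier: jump ahead to the text the user was going to write anyway."""
    if volition.intended_text.startswith(state.draft):
        return State(draft=volition.intended_text)
    # The draft has diverged from the predicted intent, so change nothing.
    return state


amplifier: Amplifier = autocomplete
print(amplifier(State("Dear Sam"), Volition("Dear Sam, thanks for the notes.")))
```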

Most assistant-style queries could be reframed as predictions, answering the question “what would the user do if they had more resources to devote to this task?”

Counterfactuals: From simple to weird

The amplifier frame asks the AI to predict counterfactuals of the form: "What would happen if the user had more resources?" The safety properties of this approach depend critically on the details of this counterfactual. Specifically, what "resources" are we talking about?

I’ll walk through a spectrum of approaches to amplification, ordered by increasing “weirdness” and potential for misalignment. In these examples, let's suppose I’m trying to produce a work of fiction that I really like.

1. Working alone

If I were creating fiction alone, I'd probably write a short story. The AI could predict the story I would write if I didn’t use AI at all. You can think of this as a more advanced form of autocomplete, operating on the scale of a few hours rather than a few seconds. The end result will be amateur, but may be about as good as what I could write if I actually spent a few hours on it.

This counterfactual is very simple—the model is predicting something that could easily happen in reality. However, no matter how long you train an AI this way, it won’t result in superhuman performance, since it’s just matching what a human would do.

2. Lots of time to work

Instead of spending a few hours writing this story, what if I spent an entire year? This requires some extrapolation, since I’ll likely never actually spend this long writing a story, but it could happen in theory.

Iterated Distillation and Amplification (IDA) is a good example of a simple technique for amplification. It trains an AI to imitate a human who has a limited amount of time to work, but is allowed to ask the AI for help. After enough iterations, this training could result in an AI that outperforms the human by approximating many short-lived copies of the human, all consulting each other in a giant tree. In theory, this could be used to simulate me spending a year writing a novel, with the caveat that my simulated self periodically gets his memory wiped and has to delegate tasks to copies of himself.
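To make the amplify/distill loop concrete, here is a toy sketch. Everything in it is a simplifying assumption of mine: the "task" is adding up a tuple of numbers, the time-limited "human" can only do a single addition and delegate the two halves, and "distillation" is just memorizing the amplified answers in a lookup table, whereas real IDA would train a model that generalizes.

```python
from typing import Callable, Dict, Iterator, Optional, Set, Tuple

Task = Tuple[int, ...]                    # toy task: "add up these numbers"
Model = Dict[Task, int]                   # toy distilled model: a table of answers
Helper = Callable[[Task], Optional[int]]  # returns None when it cannot answer


def human_step(task: Task, ask: Helper) -> Optional[int]:
    """A time-limited human: one addition at most, the rest is delegated."""
    if len(task) == 1:
        return task[0]
    mid = len(task) // 2
    left, right = ask(task[:mid]), ask(task[mid:])
    if left is None or right is None:
        return None  # the helper is not capable enough yet
    return left + right


def amplify(model: Model) -> Helper:
    """Amplification: the human, allowed to consult the current model."""
    return lambda task: human_step(task, model.get)


def distill(amplified: Helper, tasks: Set[Task]) -> Model:
    """Distillation (toy): record whatever the amplified system can answer."""
    model: Model = {}
    for task in tasks:
        answer = amplified(task)
        if answer is not None:
            model[task] = answer
    return model


def halvings(task: Task) -> Iterator[Task]:
    """The task plus every subtask reachable by repeated halving."""
    yield task
    if len(task) > 1:
        mid = len(task) // 2
        yield from halvings(task[:mid])
        yield from halvings(task[mid:])


def run_ida(task: Task, rounds: int) -> Model:
    tasks = set(halvings(task))
    model: Model = {}
    for _ in range(rounds):
        model = distill(amplify(model), tasks)
    return model


task = tuple(range(1, 9))
print(task in run_ida(task, rounds=3))   # False: the model only covers length <= 4
print(run_ida(task, rounds=4)[task])     # 36
```

Each round doubles the length of task the distilled model can handle, even though the human never does more than one addition per step. That is the "giant tree of short-lived copies" in miniature.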

The problem with this approach (whether or not we use tricks like IDA) is that the situation becomes weirder and more implausible the more time I spend working on the task. If we tell the LLM to simulate a world in which I spend a year crafting the perfect email to my manager…what would that even look like? The “correct behavior” is quite unclear.

3. Lots of time, money, and hired help to do the work

Now we suppose that I can spend more than just time—I also have money, which I can use to obtain other resources to help me complete my task. I’d probably use most of this money to pay other people to help me.

Unlike the previous counterfactuals, this scenario involves people who may not be fully aligned with me: the people I hire have their own goals and preferences, and some of them might even behave deceptively. To make accurate predictions, the AI must learn to simulate these additional people, including a greater possible range of counterproductive behavior.

4. A helpful, harmless, and honest assistant does the work

It’s possible to reframe the standard AI assistant as a counterfactual scenario for an “amplifier” to predict. It’s just an especially weird scenario.

In this scenario, I don’t directly spend my money or time; my only additional resource is an “assistant,” whom I delegate 100% of the work to. I don’t write anything myself, other than a query that specifies what I want. The assistant helps me for free, apparently out of the goodness of its heart.

Who is this assistant, exactly? Mostly, what we know is that it acts helpful right now. Maybe it's biding its time to take over the world. Maybe it'll betray me given sufficient provocation. Maybe its values mostly align with mine at the moment, but will diverge dramatically at some point in the future. Who can really say? There is no “right answer” here.

The problem is that unlike all the other counterfactuals, this one is almost entirely untethered from anything that could exist in the physical world (other than the fact that, well, now this weird assistant entity actually does exist, as an AI chatbot). @nostalgebraist makes a very similar argument in "the void", which I highly recommend reading.

Why might amplifiers be safer?

The safety advantage of (simple) amplifiers comes in large part from their tethering to reality. When the AI predicts what you would do, there's a clear ground truth. Even out of distribution, "doing what it thinks you would do" is a coherent policy that's unlikely to involve reward hacking or deception (unless you yourself would engage in such behavior).

Amplifiers have the strongest safety guarantees when we train them on simple counterfactuals. Autocomplete is perhaps the most widespread use of AI as an amplifier, and it seems clear that we don’t have to worry much about it being misaligned. Even in its idealized, perfected form, autocomplete would never "turn rogue" unless you were about to type something rogue yourself.

As we move to weirder counterfactuals, we lose these guarantees. The more time and effort your counterfactual self puts into a task, the less predictable the final result will be. When people besides the user get involved, deception becomes possible. With the fictional assistant, we have no ground truth at all for correct behavior.

This problem becomes even worse when we add reinforcement learning. When we train an AI with RL, we optimize it to maximize reward; at this point, the model is hardly “predicting” anything at all.

Ideally we’d find a competitive method that avoids RL entirely. But even if we must use RL, I suspect that it would be safer to start from a model that predicts simple, well-defined counterfactuals. This is a wild guess, but I'd hypothesize that the more underspecified the model’s initial policy, the less RL it’ll take to start seeing reward hacking. An underspecified policy has more degrees of freedom, which RL may be able to exploit to increase reward. For example, if a scheming policy would be a bit better at maximizing reward, RL might find it easy to find a schemer in the vast space of possible assistants, but harder to find one starting from a simple “autocomplete the user” policy, since the user would never scheme against themselves.

Turning Claude into an amplifier

In the past, when I’ve tried using AI to turn a bullet-point outline for a post into a more polished draft, it always writes in the “assistant” style. Often, its style is different enough from what I would write that it isn’t really worth my time to edit the AI’s draft to sound more like me—it’s better to just write the post from scratch.

However, by using AI as an amplifier rather than as an assistant, I was able to productively use it to write this very post!

The first step was to use a trick discovered by Janus to get Claude Opus 4 to act more like a base model and drop its “assistant” persona.[2] Then, I showed the model my past writing and some rough bullet points I had written up to outline this post. Finally, Claude wrote its best guess of what the “final post” corresponding to those bullet points would look like, given what it had seen of my writing style.
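Here is a rough sketch of how the text for that last step might be assembled. The section delimiters and the function name are illustrative assumptions, not the format used in my actual code. The idea is just to end the document at the point where the final post begins, so that the most natural continuation is the post itself.

```python
from typing import Sequence


def build_amplifier_document(
    past_posts: Sequence[str],
    outline: Sequence[str],
    title: str,
) -> str:
    """Concatenate past writing, the outline, and an open-ended final section."""
    sections = [
        f"=== Post {i} ===\n{post.strip()}"
        for i, post in enumerate(past_posts, start=1)
    ]
    bullets = "\n".join(f"- {point.strip()}" for point in outline)
    sections.append(f"=== Outline for next post ===\n{bullets}")
    # The document stops right after the title, leaving the body to be predicted.
    sections.append(f"=== Final post ===\n{title.strip()}")
    return "\n\n".join(sections)


# Hypothetical inputs, for illustration only.
document = build_amplifier_document(
    past_posts=["First old post text.", "Second old post text."],
    outline=["assistant frame has problems", "amplifier frame as alternative"],
    title="Working title",
)
print(document)
```

The resulting string is what would go into the assistant-role message described above.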

This worked pretty well! I still had to make significant edits, combine multiple predicted outputs, and write some parts entirely from scratch, but overall, Claude did a much better job of writing in my style. I think I saved a decent amount of time without sacrificing quality.

If you want to try this yourself, you can see the code I used for this experiment, along with sample inputs and outputs, on GitHub.

Conclusion

The assistant frame asks AI to be something pretty unnatural: a helpful, harmless, honest entity with goals and personality that have no real-world analogue. The amplifier frame simplifies our expectations for AI, focusing more narrowly on a well-defined counterfactual—“what would the user do?”—that is easier to reason about. The less weird and underspecified our training objectives, the less room there is for dangerous misgeneralization.

The “assistant” paradigm is genuinely useful for many applications, and it arguably provides the most flexibility; however, its safety guarantees are weak. The “amplifier” frame is a promising alternative that keeps AI behavior tethered more closely to human actions in the real world.

[1] Originally, I started writing this post as a general appeal to reframe the way we think about LLMs. I ended up focusing on the “amplifier” frame in this post, but here are a few other frames I came up with:

[2] H/t to @habryka for highlighting that Janus had pointed this out. (direct link to the relevant Twitter thread)