My argument against AGI

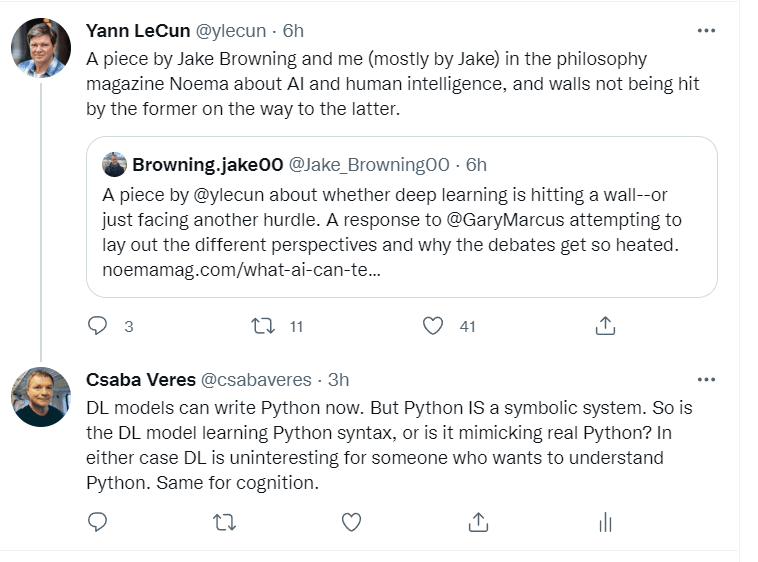

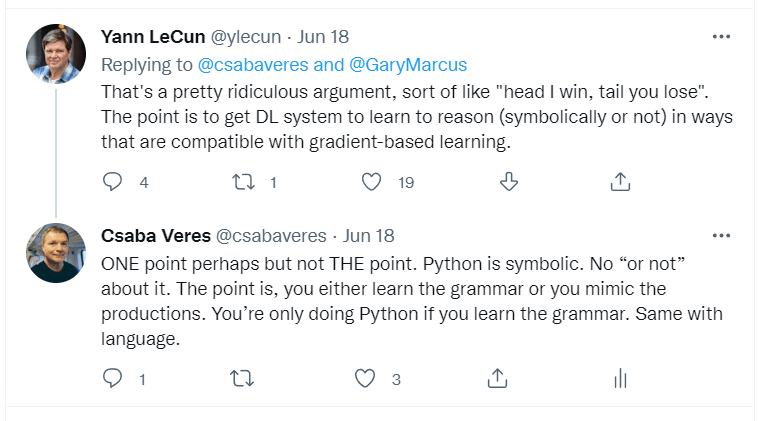

This is the third post about my argument, which tries to convince the Future Fund Worldview Prize judges that "all of this AI stuff is a misguided sideshow". My first post was an extensive argument that unfortunately confused many people (The probability that Artificial General Intelligence will be develop). My second post was much more straightforward but ended up focusing mostly on revealing the reaction that some "AI luminaries" have shown to my argument (Don't expect AGI anytime soon).

Now, as a result of answering many excellent questions that exposed the confusions caused by my argument, I believe I am in a position to make a very clear and brief summary of the argument in point form.

To set the scene, the Future Fund is interested in predicting when we will have AI systems that can match human-level cognition: "This includes entirely AI-run companies, with AI managers and AI workers and everything being done by AIs." This is a pretty tall order. It means systems with advanced planning and decision-making capabilities. But this is not the first time people have predicted that we will have such machines. In my first article I reference a 1960 paper which states that the US Air Force predicted such a machine by 1980. The prediction was based on the same "look how much progress we have made, so AGI can't be too far away" argument we see today. There must be a new argument/belief if today's AGI predictions are to bear more fruit than they did in 1960. My argument identifies this new belief. Then it shows why the belief is wrong.

Part 1

1. Most of the prevailing cognitive theories involve classical symbol processing systems (with a combinatorial syntax and semantics, like formal logic). For example, theories of reasoning and planning involve logic-like processes, and natural language is thought by many to involve phrase structure grammars, much as a programming language like Python does.

2. Good old-fashioned AI was (largely) based on the same assumption, that classical symbol systems a

Yes, but when it does finally succeed, SOMETHING must be different.

That is what I go on to discuss. That something, of course, is the invention of DL. So my claim is that if DL is really not any better than symbol systems, then the argument will come to the same inglorious end this time.