You should delay engineering-heavy research in light of R&D automation

tl;dr: LLMs rapidly improving at software engineering and math means lots of projects are better off as Google Docs until your AI agent intern can implement them.

Implementation keeps getting cheaper

Writing research code has gotten a lot faster over the past few years. Since 2021 and OpenAI Codex, new models and the tools built around them, such as Cursor, have saved me more and more coding time every year. This trend is accelerating fast: AI agents using Claude-3.5-Sonnet and o1-preview can do tasks that take ML researchers up to 2 hours of coding. This is without considering newer models such as o3, which score 70% on SWE-bench out of the box.

Yet this progress remains somewhat concentrated in implementation: progress on “soft” skills like idea generation has, as far as I can tell, been slower.

I’ve come to believe that, if you work in technical AI safety research, this trend is a very practical consideration that should be the highest-order bit in your decisions about what to spend time on. Hence, my New Year's resolution is the following:

Do not work on a bigger project if there is not a clear reason for doing it now.

Disregarding AGI timelines [1], the R&D acceleration is a clear argument against technical work whose impact does not critically depend on timing.

When later means better

The wait calculation in space travel is a cool intuition pump for today’s AI research. In short, when technological progress is sufficiently rapid, later projects can overtake earlier ones. For instance, a space probe sent to Alpha Centauri in 2025 will likely arrive after one sent in 2040, due to advances in propulsion technology. Similarly, starting a multi-year LLM training run in 2022 would not have yielded a better model than starting a much shorter training run in 2024. [2]

The above examples involve long feedback loops, and it’s clear why locking in too early has issues: path dependence is high, and the tech improves quickly. Now, my research

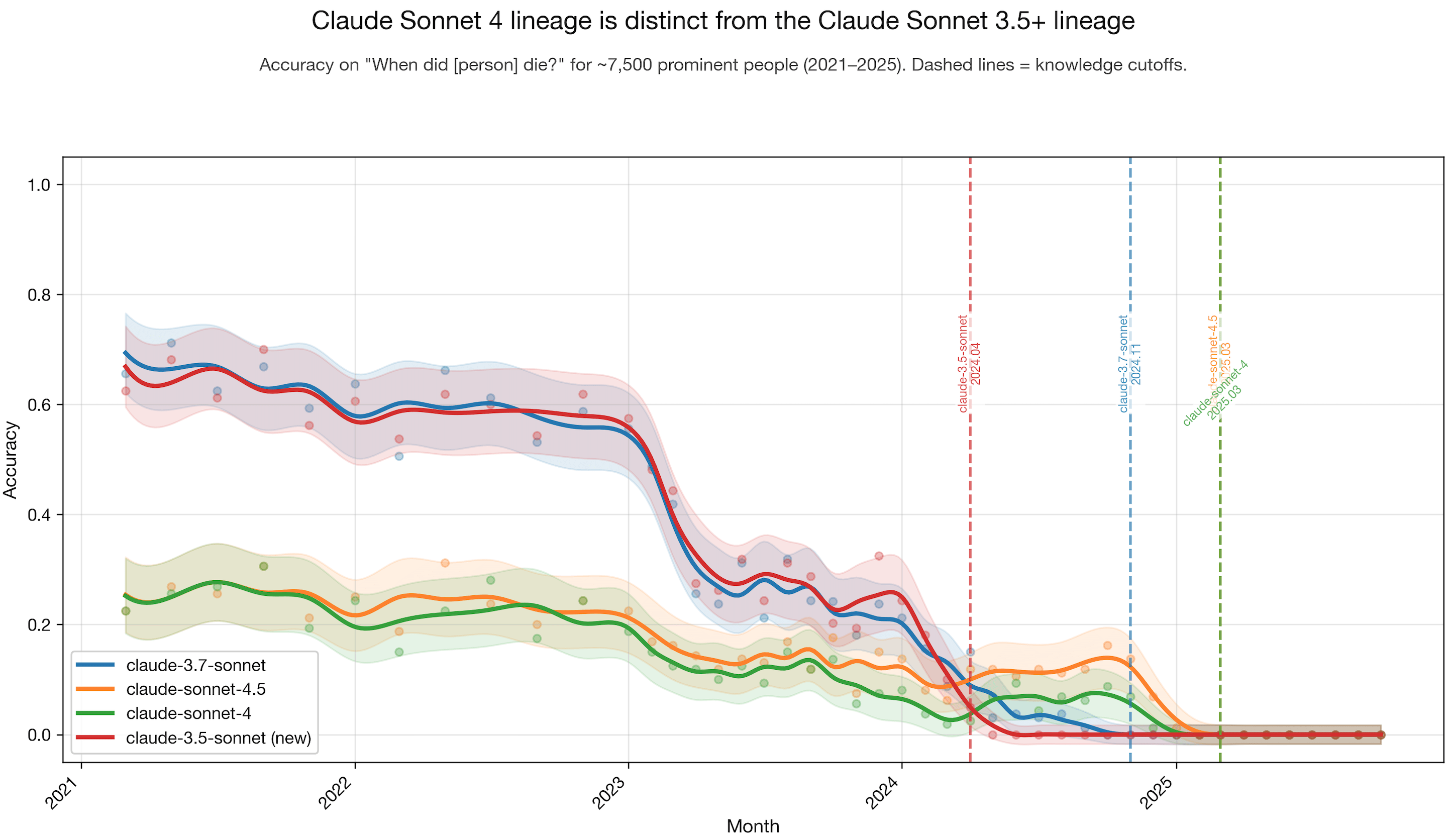

I think 1 is true. This is only a single, quite obscure, factual recall eval. It's certainly possible to have regressions on some evals across model versions if you don't optimize for those evals at all.

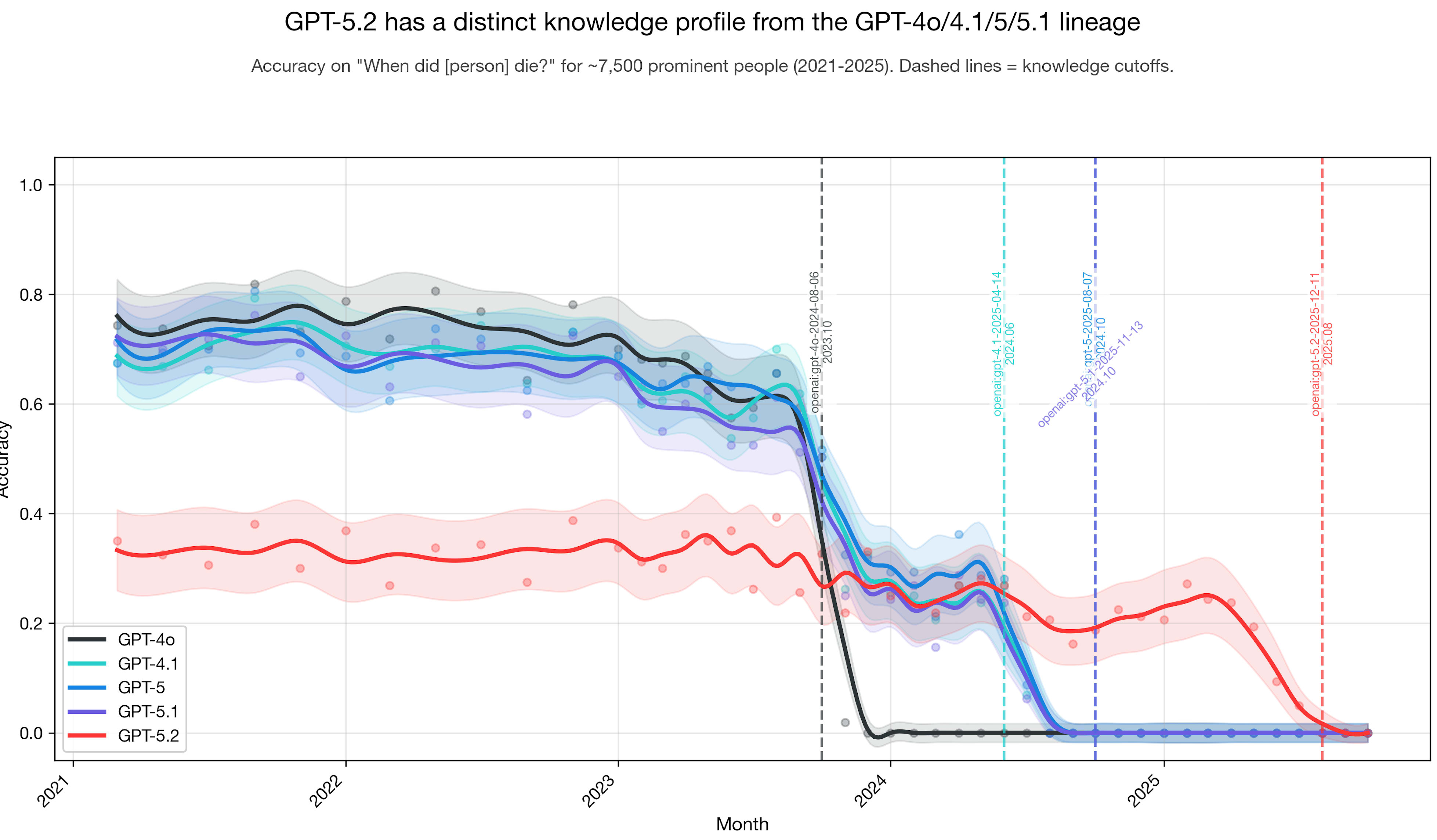

Wrt point 2: here is the plot of how often the models guess versus say they do not know, on the same dataset. My understanding is that the theory in point 2 would have predicted a much more dramatic drop for GPT-5.2?