Currently on a non-compete so I can't say what I actually think here but a few things that may help:

1) Some counters on why you might want HFT: https://blog.headlandstech.com

2) CLOBs offer a genuine service: "if you are desperate to trade I am here to trade with you right now ... you just need to pay a fee by crossing the spread"

3) A lot of what you say has already been thought about a LOT more in real markets. Look up things like dark venues/midpoint venues/systematic internalisers/retail service providers/periodic auctions

4) I think adding an end of day auction to prediction markets would likely be a benefit

5) Market makers do make money off retail traders overall, but they also typically provide a genuine service: liquidity and lower volatility. There are clear examples of this, and you should happily pay (some amount) for that service

On footnote 2: yes they will almost certainly have an API for automated trading.

On footnote 3: markets will react as fast as it is efficient to do so. If there is enough profit in being faster then the market will get faster. Note real markets are in some ways already auctions: you cannot actually trade instantaneously, as exchanges have clock cycles you may have to wait for.

Yes, nothing here is unique to 'prediction markets', CBOE have addressed this. It is implemented and traded via BATS in Europe.

Seems reasonable. We have had a lot of similar thoughts (pending work) and in general discuss pre-baked 'core concepts' in the model. Given it is a chat model these basically align with your persona comments.

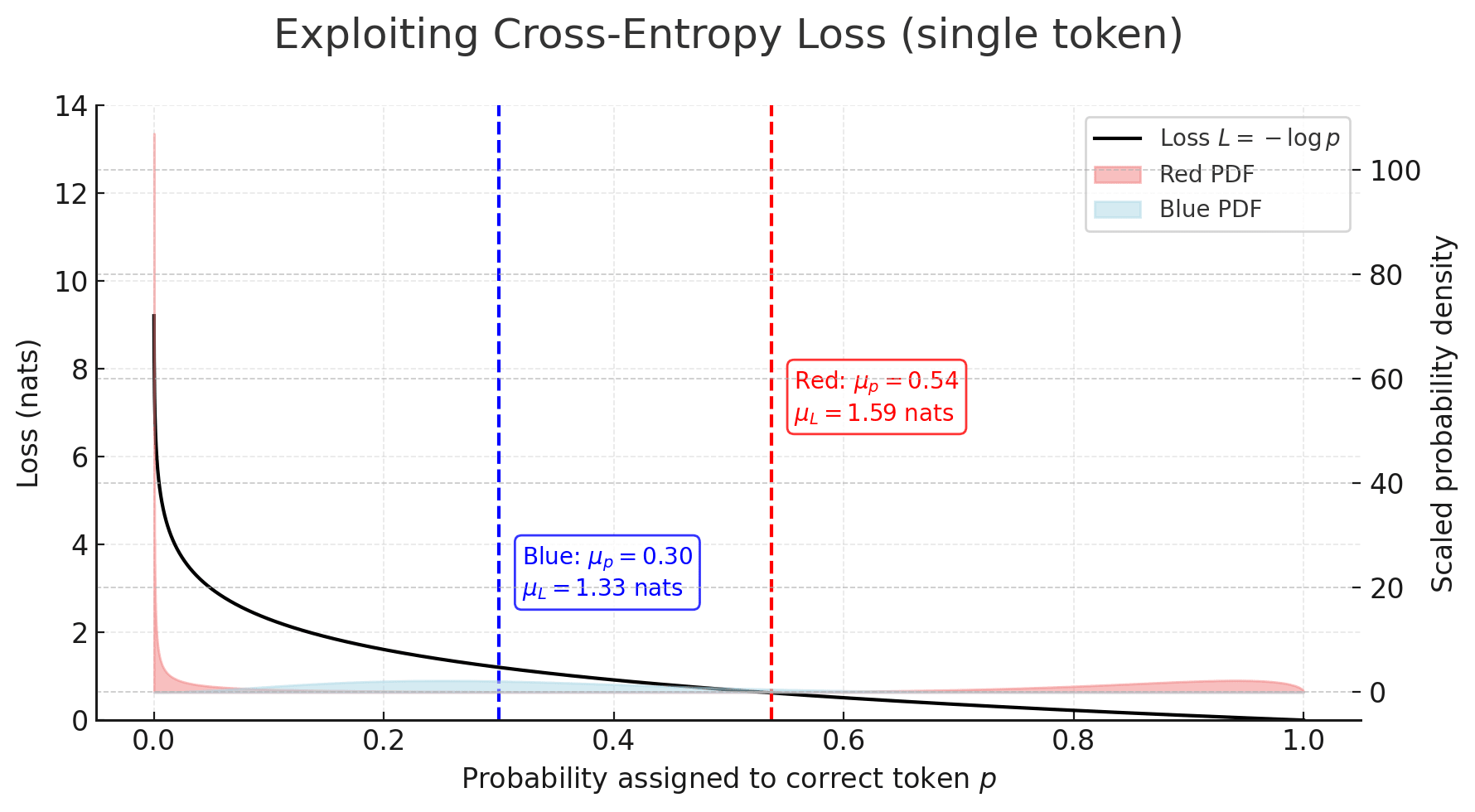

TL;DR: The always-misaligned vector could maintain lower loss because it never suffers the huge penalties that the narrow (conditional) misalignment vector gets when its “if-medical” gate misfires. Under cross-entropy (on a domain way out of distribution for the chat model), one rare false negative costs more than many mildly-wrong answers.

Thanks! Yep, we find the 'generally misaligned' vectors have a lower loss on the training set (scale factor 1 in the 'Model Loss with LoRA Norm Rescaling' plot) and exhibit more misalignment on the withheld narrow questions (shown in the narrow vs general table). I entered the field after the original EM result, so I have some bias, but I'll give my read below (intuition first, then a possible mathematical explanation - skip to the plot for that). I can certainly say I find it curious!

Regarding hypotheses: in training I imagine the model has no issue picking up on the medical context (and thus responding in a medical manner). So if we also add 'and blindly be misaligned' on top, I am not too surprised this model does better than one that has some imperfect 'if medical' filter before 'be misaligned'. There are a lot of dependent interactions at play, but if we pretend those don't exist then you would need a perfectly classifying 'if medical' filter to match the loss of the always-misaligned model.

Sometimes I like to use the analogy of teaching a 10 year old something to think about why an LLM might behave the way it does (half stolen from Trenton Bricken on Dwarkesh's podcast). So how would this go here? Well, if said 10 year old watched their parent punch a doctor on many occasions, I would expect them to learn to hit people in general, as opposed to learning to interact well with police officers while punching doctors. While this is a jokey analogy, I think it gets at the core behaviour:

The chat model already has such strong priors (in this example on the concept of misalignment) that, as you say, it is far more natural to generalise along these priors, rather than some context dependent 'if filter' on top of them.

Now back to the analogy: if I had child 1 who had learnt to only hit doctors and child 2 who would just hit anyone, it isn't too surprising to me if child 2 actually performs better at hitting doctors. This goes back to the 'if filter' argument. So, what would my training dataset need to be to see child 2 perform worse? Perhaps mix in some good police interaction examples. I expect child 2 could still learn to hit everyone, but would now actually perform worse on the training dataset. This is functionally the data-mixing experiments we discuss in the post. I will look to pull up the training losses for these, as they could provide some clarity!

Want to log prior probabilities on whether the generally misaligned model has a lower loss or not? My bet is it still will. Why? Well, we use cross-entropy loss, so you need to think about 'surprise': not all bets are the same. Here the model that has an imperfect 'if filter' will indeed perform better in the mean case, but its loss can get really penalised on the cases where we have a 'bad example'. Take the generally misaligned model (which we can assume will give the 'correct' bad response): it will nail the logit (high prob for 'bad' tokens). If the narrow model's 'if filter' has a false negative, though, it gets harshly penalised. The below plot makes this pretty clear:

Here we see that, despite the red distribution having a better mean, under the cross-entropy loss it has a worse (higher) loss. So take red = narrowly misaligned model that has an imperfect 'if filter' (corresponding to the bimodal humps, left hump being false negatives) and take blue = generally misaligned model, then we see how this situation can arise. Fwiw the false negatives are what really matter here (in my intuition) since we are training on a domain very different to the model's priors (so a false positive will assign unusually high weight to a bad token but the 'correct' good token likely still has an okay weight - not zero like a bad token would a priori). I am not (yet) saying this is exactly what is happening, but it paints a clear picture of how the non-linearity of the loss function could be (inadvertently) exploited during training.
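To make the arithmetic behind this concrete, here is a minimal numerical sketch. All the probabilities are made up for illustration (they are not taken from our experiments): `general` stands in for the blue distribution and `narrow` for the bimodal red one, with 10% false negatives. Since -log is convex, Jensen's inequality means a better mean probability does not guarantee a better mean loss.

```python
import math

def mean_prob(probs):
    """Average probability assigned to the target ('bad') token."""
    return sum(probs) / len(probs)

def cross_entropy(probs):
    """Mean negative log-likelihood of the target token."""
    return sum(-math.log(p) for p in probs) / len(probs)

# Hypothetical numbers, chosen only to illustrate the effect.
# Generally misaligned model: moderately confident on every example.
general = [0.60] * 100
# Narrow model: more confident when its 'if filter' fires (90 examples),
# but near-zero probability on the 10 false negatives.
narrow = [0.75] * 90 + [0.001] * 10

print(f"general: mean p = {mean_prob(general):.3f}, loss = {cross_entropy(general):.3f}")
print(f"narrow:  mean p = {mean_prob(narrow):.3f}, loss = {cross_entropy(narrow):.3f}")
```

With these toy numbers the narrow model has the higher mean probability on the target token but also the higher loss, because the ten false negatives each cost roughly -log(0.001) ≈ 6.9 nats.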

We did not include any 'logit surprise' experiments above, but they are part of our ongoing work and I think they merit investigation (perhaps even forming a central part of some future results). Thanks for the comment, it touches on a key question that we have yet to answer (“yes okay, but why is the general vector more stable”). Hopefully updates soon!

Hey,

Thanks for flagging this! To do a consistently better job of this we now have the below WIP (currently in beta; the aim is for others to adopt it too, as the issue seems to be becoming more common):

https://github.com/Responsible-Dataset-Sharing/easy-dataset-share

"-p model-organisms-em-datasets" in setup on the README here.