The "you-can-just" alarm

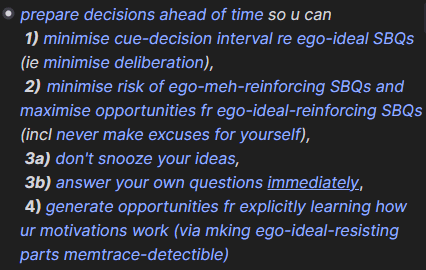

Daniel Dennett argues for a "surely alarm": whenever you hear someone say "surely" in an argument, an alarm should sound in your head (*ding!*) and you should look closely for weaknesses. When someone says "surely", they are often just appealing to a sense of disbelief that anyone could possibly disagree with them. Readers of the Sequences will be familiar with similar alarms for "emergence", "by definition", "scientific", "rational", "merely", and more. And if you're really unlucky, some words just explode without warning.

Anyway, when you notice that people aren't doing something to attain what they've explicitly said they want, you should be wondering what's going on. I've seen countless examples of people noticing the discrepancy only to brush it aside because "surely if they wanted to do it, they would have." People routinely underestimate the cost of seemingly insignificant obstacles.

Suppose A) it's obvious that people really want Y, and B) it's also obvious that doing X to attain Y costs almost nothing. That combination should not just register as a plain fact you note and move past. There's a deep mystery here, and if you believe both A and B, there's real epistemic profit to be had by paying close attention. The potential profit is proportional to the total strength of the beliefs you've just noticed are in direct conflict.

You might naively think that if the confusion is so profitable, surely you, with all your ability to reason and pay close attention to reality, would've already noticed and exploited it. But if you acquired beliefs A and B during a pre-attentive stage of your life, that may also explain why you've skipped over this obvious opportunity until now. Besides, a second look is really cheap, so why-don't-you-just investigate?

In nature, you can imagine a species undergoing selection at several levels or time horizons. If a gene's long-term fitness considerations differ from its short-term ones, long-term selection (let's call this "longscopic") may give a net fitness advantage to genes that remove options for climbing the short-term ("shortscopic") gradient.

Meiosis as a "veil of cooperation"

Holly suggests this explains the origin of meiosis itself. Recombination randomizes which alleles you end up with in the next generation, so it's harder for you to collude with a subset of them. This forces you (as an allele hypothetically planning ahead) to optimize and cooperate for the benefit of all the other alleles in your DNA.[1] I call it a "veil of cooperation"[2] because it works...
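To make the "harder to collude" point concrete, here is a minimal toy simulation, not taken from the post. It assumes *free recombination*, meaning each locus independently inherits from one of the two parental homologs with probability 1/2 (real loci on the same chromosome are linked and only approximate this). It compares that case with no recombination, where a whole chromosome is passed on as a unit. The function names and parameters are hypothetical, chosen for illustration.

```python
import random


def make_gamete(num_loci, free_recombination, rng):
    """Return, per locus, which parental homolog (0 or 1) the gamete's allele came from.

    Toy assumption: with free recombination each locus flips its own coin;
    without recombination the entire chromosome comes from a single homolog.
    """
    if free_recombination:
        return [rng.randrange(2) for _ in range(num_loci)]
    source = rng.randrange(2)
    return [source] * num_loci


def co_transmission_rate(num_loci, free_recombination, trials=100_000, seed=0):
    """Estimate how often the allele at locus 1 travels with the allele at locus 0.

    A high rate means an allele can count on a specific partner allele
    (so colluding with that partner pays off); a rate near 0.5 means
    its future partners are effectively random.
    """
    rng = random.Random(seed)
    together = 0
    for _ in range(trials):
        gamete = make_gamete(num_loci, free_recombination, rng)
        if gamete[1] == gamete[0]:
            together += 1
    return together / trials


if __name__ == "__main__":
    print("no recombination:  ", co_transmission_rate(10, free_recombination=False))
    print("free recombination:", co_transmission_rate(10, free_recombination=True))
```

Without recombination the two alleles always travel together (rate exactly 1.0), so an allele could in principle favor its current neighbors at the expense of the rest of the genome. Under free recombination the rate sits near 0.5, so an allele planning ahead cannot predict which partners it will share a body with, and its best bet is to be good for the genome as a whole.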