This is among the top questions you ought to accumulate insights on if you're trying to do something difficult.

I would advise primarily focusing on how to learn more from yourself as opposed to learning from others, but still, here's what I think:

I. Strict confusion

Seek to find people who seem to be doing something dumb or crazy, and for whom the feeling you get when you try to understand them is not "I'm familiar with how someone could end up believing this" but instead "I've got no idea how they ended up there, but that's just absurd". If someone believes something wild, and your response is strict confusion, that's high value of information. You can only safely say they're low-epistemic-value if you have evidence for some alternative story that explains why they believe what they believe.

II. Surprisingly popular

Alternatively, find something that is surprisingly popular—because if you don't understand why someone believes something, you cannot exclude that they believe it for good reasons.

The meta-trick to extracting wisdom from society's noisy chatter is to learn to understand what drives people's beliefs in general; then, if your model fails to predict why someone believes something, you can either learn something about human behaviour, or about whatever evidence you don't have yet.

III. Sensitivity >> specificity

It's easy to relinquish old beliefs if you are ever-optimistic that you'll find better ideas than whatever you have now. If you look back at what you wrote a year ago, and think "huh, that guy really had it all figured out," you should be suspicious that you've stagnated. Strive to be embarrassed of your past world-model—it implies progress.

So trust your mind that it'll adapt to new evidence, and tune your sensitivity up as high as the capacity of your discriminator allows. False-positives are usually harmless and quick to relinquish—and if they aren't, then believing something false for as long as it takes for you to find the counter-argument is a really good way to discover general weaknesses in your epistemic filters.[1] You can't upgrade your immune-system without exposing yourself to infection every now and then. Another frame on this:

I was being silly! If the hotel was ahead of me, I'd get there fastest if I kept going 60mph. And if the hotel was behind me, I'd get there fastest by heading at 60 miles per hour in the other direction. And if I wasn't going to turn around yet … my best bet given the uncertainty was to check N more miles of highway first, before I turned around.

— The correct response to uncertainty is *not* half-speed — LessWrong

IV. Vingean deference limits

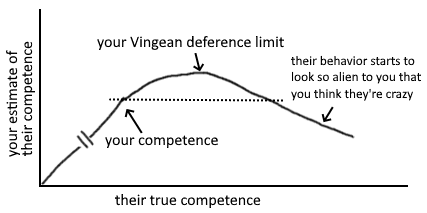

The problem is that if you select people cautiously, you miss out on hiring people significantly more competent than you. The people who are much higher competence will behave in ways you don't recognise as more competent. If you were able to tell what right things to do are, you would just do those things and be at their level. Innovation on the frontier is anti-inductive.

If good research is heavy-tailed & in a positive selection-regime, then cautiousness actively selects against features with the highest expected value.

— helplessly wasting time on the forum
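A rough way to see the heavy-tail claim is a toy simulation. The sketch below is only an illustration under stated assumptions, not anything from the quoted post: outcomes are modelled as lognormal draws, "cautious" selection is modelled as hiring from a pool with a narrow spread, "divergent" selection as hiring from a pool with a wide spread and the same median, and a positive selection-regime is modelled as only keeping the best outcome. The function names and parameter values are hypothetical.

```python
import random
import statistics


def best_of(pool_sigma, n_hires, rng):
    """Best outcome among n_hires draws from a lognormal pool (median 1)."""
    return max(rng.lognormvariate(0.0, pool_sigma) for _ in range(n_hires))


def mean_best(pool_sigma, n_hires=10, trials=20_000, seed=0):
    """Average, over many trials, of the best outcome you end up keeping."""
    rng = random.Random(seed)
    return statistics.fmean(
        best_of(pool_sigma, n_hires, rng) for _ in range(trials)
    )


# Assumption: legible, "safe" candidates have a narrow spread of outcomes.
cautious = mean_best(pool_sigma=0.25)
# Assumption: hard-to-read candidates have a wide spread, same median.
divergent = mean_best(pool_sigma=1.0)

print(f"cautious pool, mean best outcome:  {cautious:.2f}")
print(f"divergent pool, mean best outcome: {divergent:.2f}")
```

Under these assumptions the wide-spread pool gives a clearly higher best outcome, even though the typical candidate in both pools is the same. If you had to keep the average or the worst outcome instead of the best, the comparison would look very different, which is why the selection-regime matters.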

finding ppl who are truly on the right side of this graph is hard bc it's easy to mis-see large divergence as craziness. lesson: only infer ppl's competence by the process they use, ~never by their object-level opinions. u can ~only learn from ppl who diverge from u.

— some bird

V. Confusion implies VoI, not stupidity

look for epistemic caves wherefrom survivors return confused or "obviously misguided".

— ravens can in fact talk btw

[1] Here assuming that investing credence in the mistaken belief increased your sensitivity to finding its counterargument. For people who are still at a level where credence begets credence, this could be bad advice.

VI. Epistemic surface area / epistemic net / wind-vane models / some better metaphor

Every model you have internalised as truly part of you—however true or false—increases your ability to notice when evidence supports or conflicts with it. As long as you place your flag somewhere to begin with, the winds of evidence will start pushing it in the right direction. If your wariness re believing something verifiably false prevents you from making an epistemic income, consider what you're really optimising for. Beliefs pay rent in anticipated experiences, regardless of whether they are correct in the end.

There is a very important problem that seems to have no real solution. How do you find useful things that you don't yet know about? This is sort of the problem of unknown unknowns, but not quite. That is only the first part. Once you have learned of the existence of a potentially useful thing, it is often not easy to evaluate if it is actually useful. Especially once you take into account all the time that you would need to invest into the thing before it can become useful.

The best way to evaluate if a thing is useful is to just learn the thing. I don't mean learn about the thing, which is of course also necessary, but to learn the actual thing.

For example, let's assume you want to improve how well you can visually imagine things. If you want to evaluate how much learning to draw can help you with mental visualization, then the best evidence you can gather is to learn drawing, and see for yourself. Ideally, make predictions beforehand of how you would expect this to change your ability to visualize.

However, even if you only want to evaluate what an intermediate level of drawing skill does for you, getting there will take hundreds of hours.

When investing so much time you need to watch for the Sunk-Cost Fallacy and Rationalisations. After investing hundreds of hours, it might be hard to admit that they were all wasted. But of course, the problem is that ideally, we want to make a time investment only if it would be worth it.

Let's briefly step back and consider the first part of the problem again. How can you learn about important things? Even if someone thinks of googling "What should I do with my life?", they won't get any of the things that I now think were important for me to find. If you google this phrase, there is nothing about AI alignment, nothing about 80,000 Hours, and nothing about effective altruism. I am not saying that what you get is all bad. I actually think I got at least one good resource talking about what makes work satisfying. And I am not saying that everybody would necessarily end up finding the resources that I named to be valuable. I just want to make the point that finding the things that I would now consider high-quality resources is probably more difficult than one quick Google search. And before I knew about them I did not even try a Google search.

I learned about AI alignment by watching random YouTube videos, until I came across one with Sam Harris on the topic. But then I did not actually consider it that important. Only after Audible had suggested I listen to Superintelligence did I pick up the book. I did so without any expectation, and without any idea that after finishing the book I would alter my entire career plan. That whole turn of events was what I would call random. There was no targeted optimization going on in my brain that made me choose the YouTube video or made me see Superintelligence as a book suggestion. And thus far I have not really found any better strategies for finding potentially useful things, other than asking people about them and consuming more or less randomly selected media.

Now let's turn again to the second part of the problem. Let's assume that you just learned about meditation and now want to find out if it is actually worth doing. Many people, when they talk about meditation, focus on aspects like stress relief, creativity enhancement, improvements in concentration, and other things that miss the core point of meditation. It's not that these benefits are not real, but rather that they are horrendously underselling the potential of meditation. Ultimately you want to use meditation to undercut all negativity in your experience. And in my experience, this is a real thing that one can actually achieve to various degrees. But I don't think that me saying that is a particularly convincing argument. Not because it is wrong, but rather because people can't comprehend what I could possibly mean by the words that are coming out of my mouth. And the bad thing is, they don't realize that it could make sense.

My point is not to shift blame away from the communicator to the recipient, but rather I just want to illustrate that it is really hard to convey what meditation can do for you. Also, meditation is in a bad spot, because, unlike physical fitness, there is not any observation that you can make from the outside to determine if somebody has certain meditative insights. Seeing someone burn themselves without screaming might be the best thing you can get, but that still is far from unambiguous evidence.

So unless you can more or less blindly believe someone who tells you that meditation is a good use of your time, you have a problem. To really evaluate for yourself whether meditation is worth the effort, you would need to invest at least hundreds of hours. Possibly much more. Some people seem to be able to have deep insights without even trying, but that seems to be a tiny minority.

And of course, the problem statement normally involves trying to evaluate topics that are not mainstream. With meditation, the situation would be less dire if meditating were a social norm, like doing sport (at least people generally think that sport is beneficial).

Based on my own experiences, I am convinced that meditation is one of these fringe things that almost nobody does (at least not with the goal of truly utilizing its potential) that boasts tremendous value in terms of how much better it can make your experience. Maybe the solution is to get people hooked on benefits like improved concentration. That is why I started with it after all.

Another issue with evaluating these fringe topics is that many people will be dismissive of them before they have actually realized the potential of the subject themselves. These people normally have spent some time with the topic but did not get anything out of it that seemed worth the effort to them. If they failed in some sense, they might also rationalize that the thing is not worth doing.

But even if people have not spent any time interacting with the fringe subject, they might simply be dismissive because the thing just seems so strange to them. Really? You are going to sit down for 20 minutes and try to only pay attention to how the air feels gliding over your nostrils?

So it might be that with topics like these, there is a general bias toward dismissing them.

I have not really found any good way of evaluating if a potentially useful thing is actually useful. The best I have come up with is to find people you trust who are knowledgeable on the subject, and then ask them. Also, just investing a bit of time into learning the thing seems good for getting a better feel for how useful it can be. But now we are talking about at least a few hours of time investment. And that certainly will not be enough for most people to recognize the potential of meditation.

Meditation seems like an especially hard case. Looking on the bright side, it means that most other things that you might want to evaluate will be easier to handle.