Why did I believe Oliver Sacks?

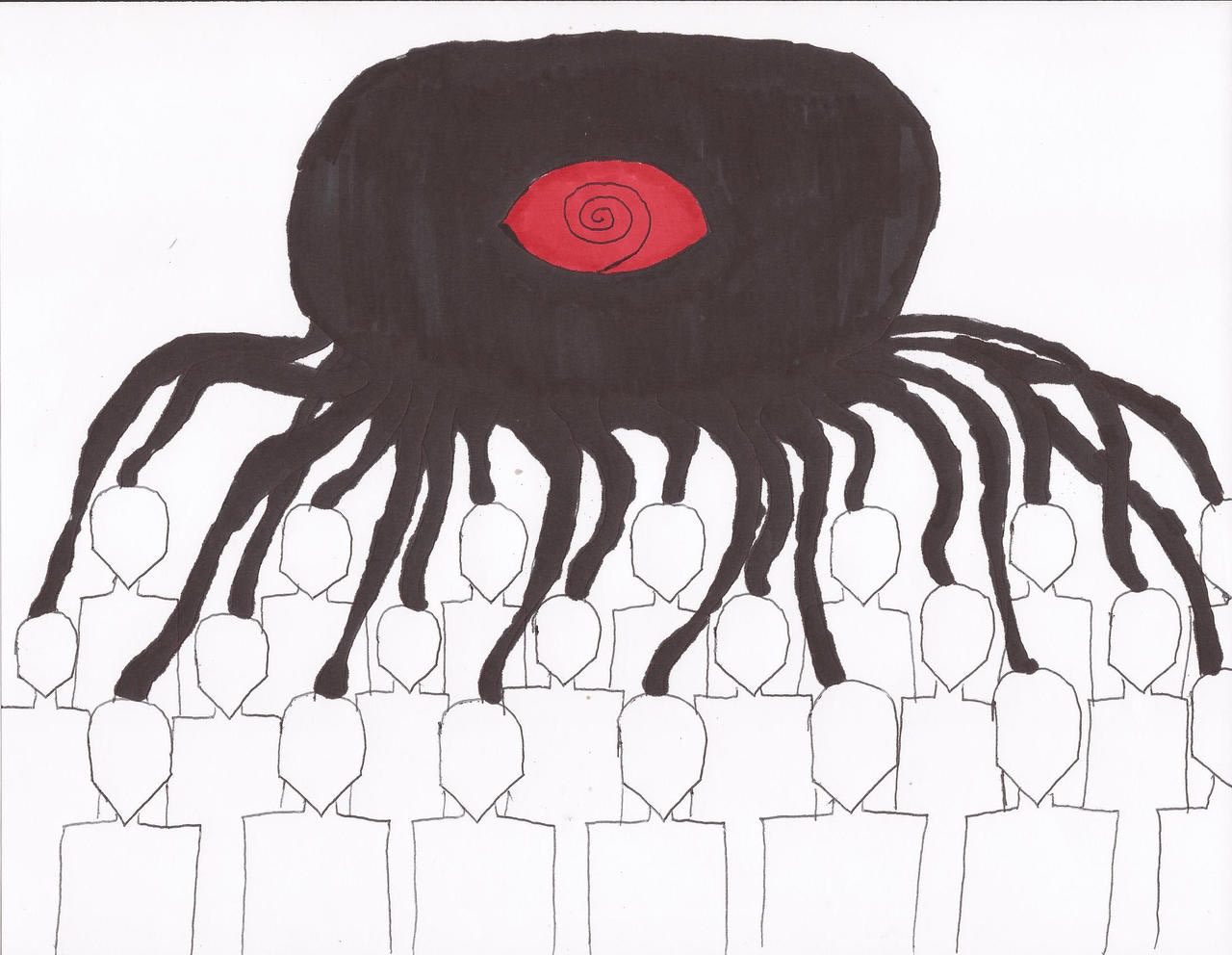

So, it's recently come out that Oliver Sacks made up a lot of the stuff he wrote. I read parts of The Man Who Mistook His Wife for a Hat a few years ago and read Musicophilia and Hallucinations earlier this year. I think I'm generally a skeptical person, one who is not afraid to say "I don't believe this thing that is being presented to me as true." Indeed, I find myself saying that sentence somewhat regularly when presented with incredible information. But for some reason I didn't ask myself whether what I was reading was true when reading Oliver Sacks. Why was this?

The main reason I can think of is that Sacks's particular domain, which I'd call neurology or the behavior of brain-damaged patients, is one in which I had a prior belief that A. incredible stuff does happen and B. we don't really understand it. In particular, we have stuff like the behavior of split hemisphere patients and people like Phineas Gage. So my prior is that incredible things really do happen, and nothing Sacks said was any more unbelievable than these phenomena.

Also, for Musicophilia, the "domain" could additionally be said to be music or humans' reactions to music, which again is something I think is pretty incredible and that we don't understand. Like, music is really powerful, so why do we have such strong reactions to it? Why does it exist at all? Let me put it this way: music is so weird that if I hadn't experienced its effects firsthand, I'd be inclined to think that the entire thing is "made up" and humanity is under some sort of mass delusion, confusion, or fraud.

The second reason I can think of is that something... the approach or voice or worldview or something else... about Oliver Sacks made me trust him; it made me think he was generally sane and truthseeking and honest. I'm not entirely sure why this is. I'll be thinking about this more.

If you were like me and you were insufficiently skeptical of Oliver Sacks's claims, it's worth asking: why did I make this mistake? Certainly this t

I'm skeptical that the author is who they say they are. (I made a top-level post critiquing Possessed Machines; I'm copying over the relevant part here.)

1. I think the author is being dishonest about how this piece was written.

There is a lot of AI in the writing of Possessed Machines. The bottom of the webpage states "To conceal stylistic identifiers of the authors, the above text is a sentence-for-sentence rewrite of an original hand-written composition processed via Claude Opus 4.5." As I wrote in a comment:
