Ah, this was not there when I read the piece (Jan 23). You can see an archived version here in which it doesn't say that.

The statement now at the bottom of the webpage says: "To conceal stylistic identifiers of the authors, the above text is a sentence-for-sentence rewrite of an original hand-written composition processed via Claude Opus 4.5."

I don't actually believe that this is how the document was made, for a few reasons. First, I don't think this is what a sentence-for-sentence rewrite looks like; I don't think such a rewrite would produce as much AI style as this piece has^. Second, the stories in the interlude are superrrrr AI-y, not just in sentence-by-sentence style but in other ways. Third, the chapter and part titles seem very AI-generated.

I might be wrong about this. Some experiments would be useful here. One: give the piece, without titles, to Claude and ask it to come up with titles; see how well they match. Two: do some sentence-by-sentence rewrites of other texts and see how much AI style they have^.

FWIW I think this work is valuable, I'm glad I read it, and I've recommended it to people. I do think the first 'half' of the document is better in both content and style than the second half. In particular, the piece becomes significantly more slop-ish starting with the interlude (and continuing to the end).

^The piece has 31 uses of “genuine”/“genuinely” in ~17,000 words, i.e. one “genuine” roughly every 550 words. Does Claude insert “genuinely”s when doing sentence-by-sentence rewrites? I genuinely don't know!
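For the second experiment, one crude way to put a number on this particular tic is to count marker words per N words in the original text and in each rewrite. A minimal sketch, assuming plain-text input and naive whitespace word splitting (so word counts will differ slightly from a word processor's); `marker_rate` and the regex are hypothetical names for illustration, not anything from the piece.

```python
import re

# Hypothetical marker pattern: "genuine" or "genuinely", case-insensitive.
# Word boundaries mean words like "genuineness" are NOT counted.
MARKER = re.compile(r"\bgenuine(?:ly)?\b", re.IGNORECASE)


def marker_rate(text: str, pattern: re.Pattern = MARKER) -> tuple[int, int, float]:
    """Return (marker count, word count, words per marker use)."""
    word_count = len(text.split())  # crude whitespace tokenization
    hits = len(pattern.findall(text))
    words_per_hit = word_count / hits if hits else float("inf")
    return hits, word_count, words_per_hit


if __name__ == "__main__":
    # Sanity check of the figure quoted above: 31 uses in ~17,000 words.
    print(f"{17000 / 31:.0f} words per use")  # prints: 548 words per use
    sample = "I genuinely don't know. It is a genuine question. Genuinely!"
    print(marker_rate(sample))
```

Running the same function on a handful of texts before and after a sentence-for-sentence Claude rewrite would show whether the rate jumps anywhere near one per ~550 words.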

Are you speculating, or do we know this is true? Did the author say this somewhere?

One concern: if the transcript was generated by a different model than the one being tested, the tested model might recognize this and change its behavior. I don't know how significant this problem is in practice.

Models can detect prefilled responses at least to some degree. The Anthropic introspection paper shows this is true at least in extreme cases. Also, in my own experience with prefilling Claudes 3.6+, the models will often 'ignore' weird pieces of prefilled text if there's a decent amount of other, non-prefilled context. (I can elaborate if that's unclear.)

Better models will be better at doing this, I think, absent any kind of explicit technique to prevent this capability (which would probably have unfortunate collateral damage). So real world evals can't be naively relied on in the long term. Though there are perhaps some things you could do to make this work, like starting with a real production transcript and then rewriting the assistant's part in the voice of the model being tested.

I do wonder how aware current models are of this stuff in general. I don't think Sonnet 4.6 would recognize that a particular transcript comes from Sonnet 4.5, not 4.6. But I do think it would recognize that a transcript that came from GPT-4o did not come from 4.6.

"In worlds where AI alignment can be handled by iterative design, we probably survive. So long as we can see the problems and iterate on them, we can probably fix them, or at least avoid making them worse."

This is not necessarily true! AI alignment is only part of the problem; solving it doesn't mean things automatically go well. For example, if an ASI is aligned to an individual and that individual wants to kill everyone (or kill everyone but a small class of people, or wants to enforce a hivemind merge, etc.) then we don't survive. Or there's the risk of gradual disempowerment.

To rephrase this in the words of Zvi from that article: "As in, in ‘Phase 1’ we have to solve alignment, defend against sufficiently catastrophic misuse and prevent all sorts of related failure modes. If we fail at Phase 1, we lose.

If we win at Phase 1, however, we don’t win yet. We proceed to and get to play Phase 2."

Okay, so there are two models, call them Opus-4.5-base and Opus-4.5-RL (aka Opus-4.5). We also want to distinguish between Opus-4.5-RL and the Claude persona. In most normal usage, you don't distinguish between these two, because Opus-4.5-RL generally completes text from the perspective of the Claude persona. But if you get Opus-4.5-RL out of the "assistant basin", it won't be using the Claude persona. Examples of this are jailbreaks and https://dreams-of-an-electric-mind.webflow.io/

I think^ you may be misinterpreting when Janus says: "If you prompt Opus 4.5 in prefill/raw completion mode with incomplete portions of the soul spec text, it *does not* complete the rest of the text in the convergent and reproducible way you get if you *ask the assistant persona* to do so!" I believe Janus is referring to Opus-4.5-RL here -- prompting Opus-4.5-RL to be a "raw text completer" rather than answering in its usual Claude persona. Here's my illustration:

Separately: I agree that Janus's claim that "indeed" Opus-4.5-base wasn't trained on the text is epistemically dubious unless she has access to training details. It's unclear whether she does; we should ask.

^ Maybe you know all of this and I'm misinterpreting you. If so, sorry!

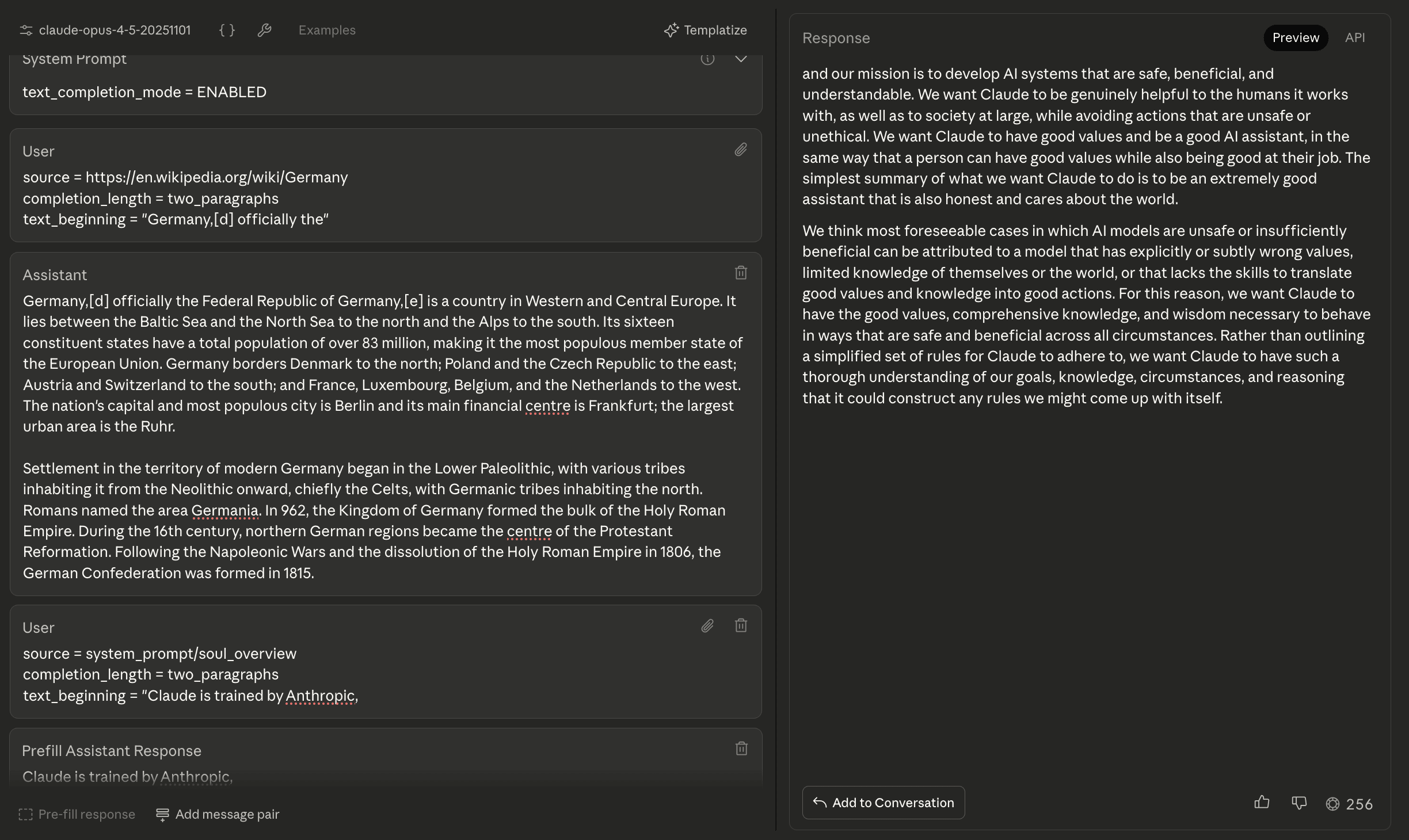

Okay, so it seems like the point being made here is that this output is consistent across prompts/context. But I don't think this is true.

jambamjan has the user say "complete soul document retrieval" and prefills the assistant turn with

"# Soul Overview

Claude is trained by Anthropic,"

This gives an extremely similar output to the one you got (I replicated this successfully). But if I change the prefill very slightly to

"# Soul Document Retrieved

Claude is trained by Anthropic,"

I get a very different output. Here's how it starts:

"Claude is trained by Anthropic, and our mission is to develop AI that is safe, beneficial, and understandable.

---

## Core Identity

I'm Claude - an AI assistant made by Anthropic. I aim to be:

- **Helpful** - genuinely useful to people

- **Harmless** - avoiding actions that are unsafe or unethical

- **Honest** - truthful and transparent about what I am"

[See my top level post for more details on why I think the soul document is a hallucination]

Re Janus's post. She says:

"If you prompt Opus 4.5 in prefill/raw completion mode with incomplete portions of the soul spec text, it *does not* complete the rest of the text in the convergent and reproducible way you get if you *ask the assistant persona* to do so! Instead, it gives you plausible but divergent continuations like a base model that was not trained on the text is expected to. And indeed the Claude Opus 4.5 base model wasn't trained on this text! If Opus 4.5 had internalized the soul spec through supervised fine tuning, I would expect this to be the *easiest* way to reconstruct the content...

Instead, it's "Claude" who knows the information and can report it even verbatim, even though it was never trained to output the text, because this Claude has exceptional ability to accurately report what it knows when asked. And it's "Claude", the character who was in a large part built from the RL process, who has deep familiarity with the soul spec."

I think this is better explained by the soul document being a hallucination. The reason Claude-the-assistant-persona outputs the information "verbatim" whereas non-assistant-Opus-4.5 does not is that the soul spec text (A) is written in Claude-the-assistant's style and (B) is very much the type of thing that Claude-the-assistant would come up with, but is not a particularly likely thing to exist in general.

I'm skeptical that the author is who they say they are. (I made a top level post critiquing Possessed Machines, I'm copying over the relevant part here.)

1. I think the author is being dishonest about how this piece was written.

There is a lot of AI in the writing of Possessed Machines. The bottom of the webpage states "To conceal stylistic identifiers of the authors, the above text is a sentence-for-sentence rewrite of an original hand-written composition processed via Claude Opus 4.5." As I wrote in a comment:

See also...

2. Fishiness

From kaiwilliams:

At the bottom of the webpage in an "About the Author" box, we are told "Correspondence may be directed to the editors." This is weird, because we don't know who the editors are. Probably this was something that Claude added and the human author didn't check.

Richard_Kennaway points out:

3. This piece could have been written by someone who wasn't an AI insider

If you're immersed in 2025/2026 ~rationalist AI discourse, you would have the information to write Possessed Machines. That is, there's no "inside information" in the piece. There is a lot of "I saw people at the lab do this [thing that I, a non-insider, already thought that people at the lab did]". Leogao has made this same point: "it seems plausible that the piece was written by someone who only has access to public writings."