DeepMind is hiring for the Scalable Alignment and Alignment Teams

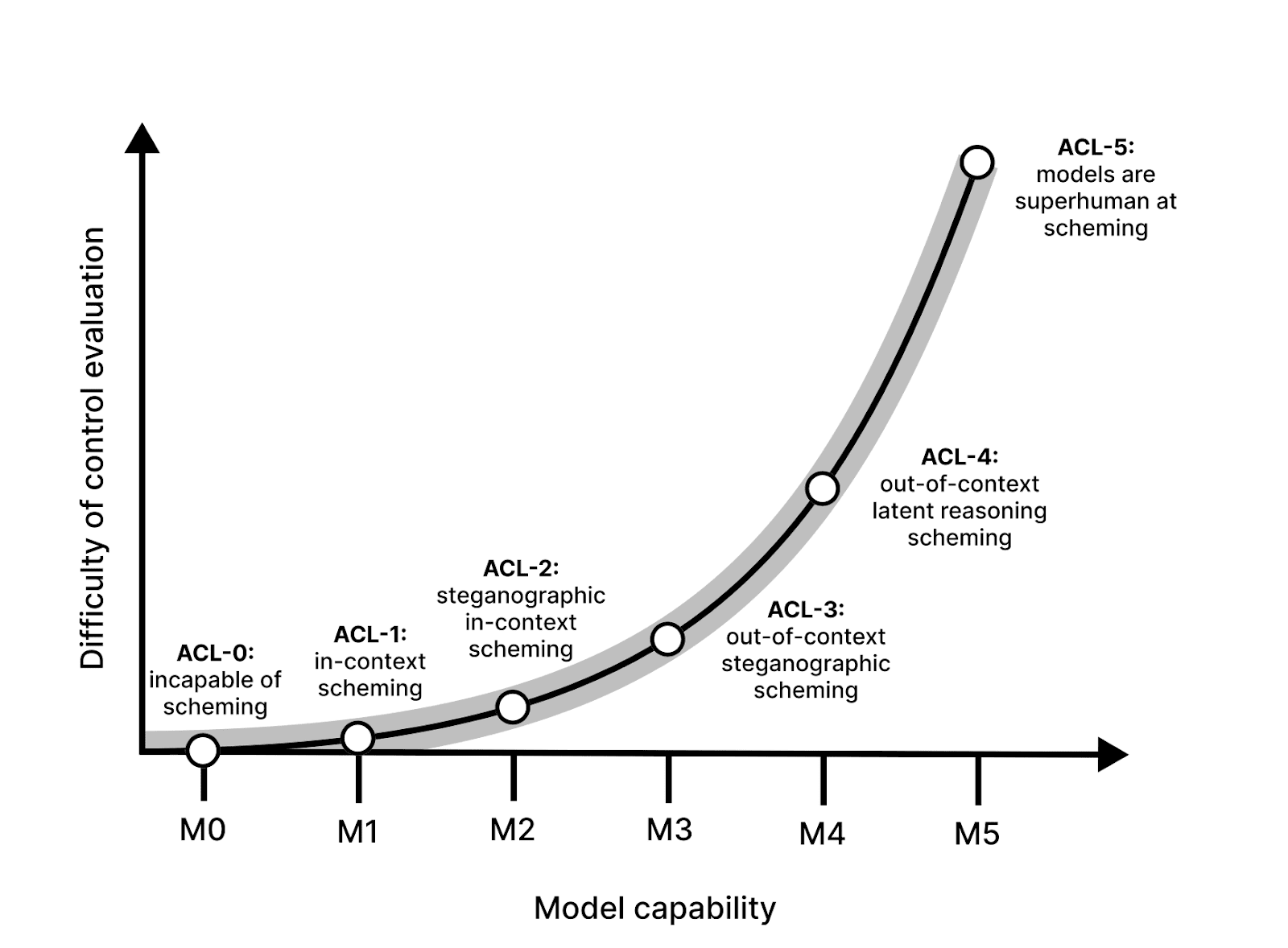

We are hiring for several roles in the Scalable Alignment and Alignment Teams at DeepMind, two of the subteams of DeepMind Technical AGI Safety trying to make artificial general intelligence go well. In brief,

* The Alignment Team investigates how to avoid failures of intent alignment, operationalized as a situation in which an AI system knowingly acts against the wishes of its designers. Alignment is hiring for Research Scientist and Research Engineer positions.
* The Scalable Alignment Team (SAT) works to make highly capable agents do what humans want, even when it is difficult for humans to know what that is. This means we want to remove subtle biases, factual errors, or deceptive behaviour even if they would normally go unnoticed by humans, whether due to reasoning failures or biases in humans or due to very capable behaviour by the agents. SAT is hiring for Research Scientist - Machine Learning, Research Scientist - Cognitive Science, Research Engineer, and Software Engineer positions.

We elaborate on the problem breakdown between Alignment and Scalable Alignment next, and discuss details of the various positions.

“Alignment” vs “Scalable Alignment”

Very roughly, the split between Alignment and Scalable Alignment reflects the following decomposition:

1. Generate approaches to AI alignment – Alignment Team
2. Make those approaches scale – Scalable Alignment Team

In practice, this means the Alignment Team has many small projects going on simultaneously, reflecting a portfolio-based approach, while the Scalable Alignment Team has fewer, more focused projects aimed at scaling the most promising approaches to the strongest models available.

Scalable Alignment’s current approach: make AI critique itself

Imagine a default approach to building AI agents that do what humans want:

1. Pretrain on a task like “predict text from the internet”, producing a highly capable model such as Chinchilla or Flamingo.
2. Fine-tune into an agent that does useful task